I've been running my TrueNas setup for about 2 years now. Been great so far - but I've recently hit a bit a snag and and could use your expertise!

It's a pretty basic setup:

VM with 32 GB RAM & 2 cores

4 - 4TB SATA in RAIDZ1 that are passed through from the host to the NAS system

1 Pool, 2 Datasets (one new one w/ deduped enabled, other not deduped)

I'm trying to transfer about 1 TB of backups from a dataset (which dedup isn't enabled) to a new dataset (which is enabled) to take advantage of deduplication for backups. The process goes like this:

1) copy one of the 25GB daily backup files OUT of the un-deduped dataset to a linked system which temporarily hold it in RAM

2) then copy the data back into the deduped dataset from the temp system

3) clear the temporary hold location

4) repeat

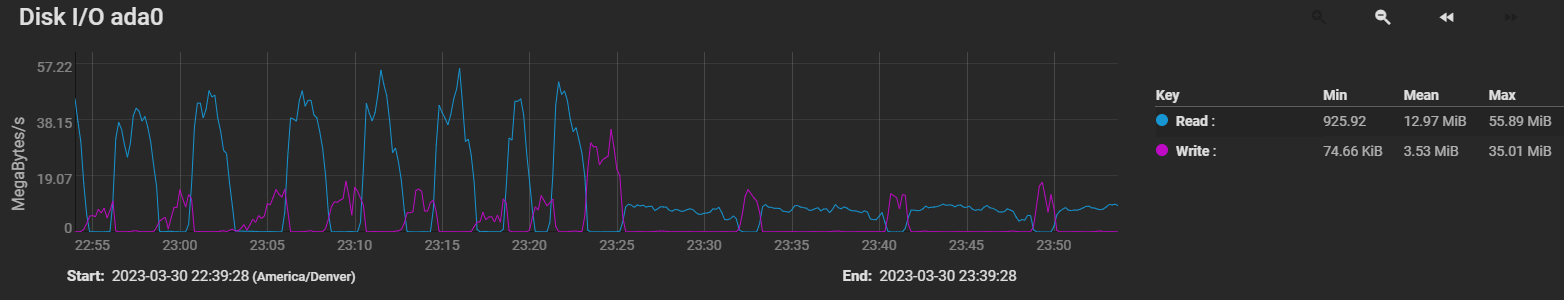

For awhile (30-60 minutes) it runs great! Getting fantastic dedup rates and everything. Then after some time it looks to hit a wall. The read rate TANKs hard and the only thing I have found that fixes it is a full reboot of the NAS system.

Thing's I've tried:

updated to the most recent version TrueNAS-13.0-U4 from 12.0-U8.1

set vfs.zfs.arc.meta_min=8589934592 (suspected ARC metadata thrashing)

disable smb service

The only thing I can get to fix it is doing a full reboot of the system in which case the speeds return to normal.

Here's a visual so you can get the idea. Let me know what other logs or data you want to see. Thanks in advance!!!

It's a pretty basic setup:

VM with 32 GB RAM & 2 cores

4 - 4TB SATA in RAIDZ1 that are passed through from the host to the NAS system

1 Pool, 2 Datasets (one new one w/ deduped enabled, other not deduped)

I'm trying to transfer about 1 TB of backups from a dataset (which dedup isn't enabled) to a new dataset (which is enabled) to take advantage of deduplication for backups. The process goes like this:

1) copy one of the 25GB daily backup files OUT of the un-deduped dataset to a linked system which temporarily hold it in RAM

2) then copy the data back into the deduped dataset from the temp system

3) clear the temporary hold location

4) repeat

For awhile (30-60 minutes) it runs great! Getting fantastic dedup rates and everything. Then after some time it looks to hit a wall. The read rate TANKs hard and the only thing I have found that fixes it is a full reboot of the NAS system.

Thing's I've tried:

updated to the most recent version TrueNAS-13.0-U4 from 12.0-U8.1

set vfs.zfs.arc.meta_min=8589934592 (suspected ARC metadata thrashing)

disable smb service

The only thing I can get to fix it is doing a full reboot of the system in which case the speeds return to normal.

Here's a visual so you can get the idea. Let me know what other logs or data you want to see. Thanks in advance!!!

Code:

root@NAS[~]# arc_summary

------------------------------------------------------------------------

ZFS Subsystem Report Fri Mar 31 00:07:07 2023

FreeBSD 13.1-RELEASE-p7 zpl version 5

Machine: NAS.landoscloud.com (amd64) spa version 5000

ARC status: HEALTHY

Memory throttle count: 0

ARC size (current): 74.9 % 23.2 GiB

Target size (adaptive): 75.8 % 23.5 GiB

Min size (hard limit): 3.2 % 1022.4 MiB

Max size (high water): 30:1 30.9 GiB

Most Frequently Used (MFU) cache size: 58.2 % 12.2 GiB

Most Recently Used (MRU) cache size: 41.8 % 8.8 GiB

Metadata cache size (hard limit): 75.0 % 23.2 GiB

Metadata cache size (current): 4.0 % 941.6 MiB

Dnode cache size (hard limit): 34.5 % 8.0 GiB

Dnode cache size (current): 1.7 % 136.6 MiB

ARC hash breakdown:

Elements max: 427.3k

Elements current: 100.0 % 427.3k

Collisions: 316.6k

Chain max: 4

Chains: 20.4k

ARC misc:

Deleted: 3.5M

Mutex misses: 3.1k

Eviction skips: 84.5k

Eviction skips due to L2 writes: 0

L2 cached evictions: 0 Bytes

L2 eligible evictions: 189.9 GiB

L2 eligible MFU evictions: 1.1 % 2.1 GiB

L2 eligible MRU evictions: 98.9 % 187.7 GiB

L2 ineligible evictions: 240.5 GiB

ARC total accesses (hits + misses): 35.8M

Cache hit ratio: 94.0 % 33.6M

Cache miss ratio: 6.0 % 2.2M

Actual hit ratio (MFU + MRU hits): 93.9 % 33.6M

Data demand efficiency: 64.0 % 1.7M

Data prefetch efficiency: < 0.1 % 1.4M

Cache hits by cache type:

Most frequently used (MFU): 83.8 % 28.2M

Most recently used (MRU): 16.2 % 5.4M

Most frequently used (MFU) ghost: 0.2 % 59.0k

Most recently used (MRU) ghost: < 0.1 % 16.3k

Cache hits by data type:

Demand data: 3.3 % 1.1M

Prefetch data: < 0.1 % 14

Demand metadata: 96.7 % 32.5M

Prefetch metadata: < 0.1 % 5.7k

Cache misses by data type:

Demand data: 29.1 % 628.7k

Prefetch data: 63.0 % 1.4M

Demand metadata: 7.6 % 164.0k

Prefetch metadata: 0.3 % 6.7k

DMU prefetch efficiency: 1.8M

Hit ratio: 51.6 % 949.9k

Miss ratio: 48.4 % 889.3k

L2ARC not detected, skipping section

Tunables:

abd_scatter_enabled 1

abd_scatter_min_size 4097

allow_redacted_dataset_mount 0

anon_data_esize 0

anon_metadata_esize 0

anon_size 2015012864

arc.average_blocksize 8192

arc.dnode_limit 0

arc.dnode_limit_percent 10

arc.dnode_reduce_percent 10

arc.evict_batch_limit 10

arc.eviction_pct 200

arc.grow_retry 0

arc.lotsfree_percent 10

arc.max 0

arc.meta_adjust_restarts 4096

arc.meta_limit 0

arc.meta_limit_percent 75

arc.meta_min 8589934592

arc.meta_prune 10000

arc.meta_strategy 1

arc.min 0

arc.min_prefetch_ms 0

arc.min_prescient_prefetch_ms 0

arc.p_dampener_disable 1

arc.p_min_shift 0

arc.pc_percent 0

arc.prune_task_threads 1

arc.shrink_shift 0

arc.sys_free 0

arc_free_target 173776

arc_max 0

arc_min 0

arc_no_grow_shift 5

async_block_max_blocks 18446744073709551615

autoimport_disable 1

btree_verify_intensity 0

ccw_retry_interval 300

checksum_events_per_second 20

commit_timeout_pct 5

compressed_arc_enabled 1

condense.indirect_commit_entry_delay_ms 0

condense.indirect_obsolete_pct 25

condense.indirect_vdevs_enable 1

condense.max_obsolete_bytes 1073741824

condense.min_mapping_bytes 131072

condense_pct 200

crypt_sessions 0

dbgmsg_enable 1

dbgmsg_maxsize 4194304

dbuf.cache_shift 5

dbuf.metadata_cache_max_bytes 18446744073709551615

dbuf.metadata_cache_shift 6

dbuf_cache.hiwater_pct 10

dbuf_cache.lowater_pct 10

dbuf_cache.max_bytes 18446744073709551615

dbuf_state_index 0

ddt_data_is_special 1

deadman.checktime_ms 60000

deadman.enabled 1

deadman.failmode wait

deadman.synctime_ms 600000

deadman.ziotime_ms 300000

debug 0

debugflags 0

dedup.prefetch 0

default_bs 9

default_ibs 15

delay_min_dirty_percent 60

delay_scale 500000

dirty_data_max 3430569164

dirty_data_max_max 4294967296

dirty_data_max_max_percent 25

dirty_data_max_percent 10

dirty_data_sync_percent 20

disable_ivset_guid_check 0

dmu_object_alloc_chunk_shift 7

dmu_offset_next_sync 1

dmu_prefetch_max 134217728

dtl_sm_blksz 4096

embedded_slog_min_ms 64

flags 0

fletcher_4_impl [fastest] scalar superscalar superscalar4 sse2 ssse3 avx2 avx512f

free_bpobj_enabled 1

free_leak_on_eio 0

free_min_time_ms 1000

history_output_max 1048576

immediate_write_sz 32768

initialize_chunk_size 1048576

initialize_value 16045690984833335022

keep_log_spacemaps_at_export 0

l2arc.exclude_special 0

l2arc.feed_again 1

l2arc.feed_min_ms 200

l2arc.feed_secs 1

l2arc.headroom 2

l2arc.headroom_boost 200

l2arc.meta_percent 33

l2arc.mfuonly 0

l2arc.noprefetch 1

l2arc.norw 0

l2arc.rebuild_blocks_min_l2size 1073741824

l2arc.rebuild_enabled 0

l2arc.trim_ahead 0

l2arc.write_boost 8388608

l2arc.write_max 8388608

l2arc_feed_again 1

l2arc_feed_min_ms 200

l2arc_feed_secs 1

l2arc_headroom 2

l2arc_noprefetch 1

l2arc_norw 0

l2arc_write_boost 8388608

l2arc_write_max 8388608

l2c_only_size 0

livelist.condense.new_alloc 0

livelist.condense.sync_cancel 0

livelist.condense.sync_pause 0

livelist.condense.zthr_cancel 0

livelist.condense.zthr_pause 0

livelist.max_entries 500000

livelist.min_percent_shared 75

lua.max_instrlimit 100000000

lua.max_memlimit 104857600

max_async_dedup_frees 100000

max_auto_ashift 14

max_dataset_nesting 50

max_log_walking 5

max_logsm_summary_length 10

max_missing_tvds 0

max_missing_tvds_cachefile 2

max_missing_tvds_scan 0

max_nvlist_src_size 0

max_recordsize 1048576

metaslab.aliquot 1048576

metaslab.bias_enabled 1

metaslab.debug_load 0

metaslab.debug_unload 0

metaslab.df_alloc_threshold 131072

metaslab.df_free_pct 4

metaslab.df_max_search 16777216

metaslab.df_use_largest_segment 0

metaslab.find_max_tries 100

metaslab.force_ganging 16777217

metaslab.fragmentation_factor_enabled 1

metaslab.fragmentation_threshold 70

metaslab.lba_weighting_enabled 1

metaslab.load_pct 50

metaslab.max_size_cache_sec 3600

metaslab.mem_limit 25

metaslab.preload_enabled 1

metaslab.preload_limit 10

metaslab.segment_weight_enabled 1

metaslab.sm_blksz_no_log 16384

metaslab.sm_blksz_with_log 131072

metaslab.switch_threshold 2

metaslab.try_hard_before_gang 0

metaslab.unload_delay 32

metaslab.unload_delay_ms 600000

mfu_data_esize 12987881984

mfu_ghost_data_esize 1798595072

mfu_ghost_metadata_esize 25378304

mfu_ghost_size 1823973376

mfu_metadata_esize 40105472

mfu_size 13099472896

mg.fragmentation_threshold 95

mg.noalloc_threshold 0

min_auto_ashift 9

min_metaslabs_to_flush 1

mru_data_esize 7640310784

mru_ghost_data_esize 16270403584

mru_ghost_metadata_esize 90891776

mru_ghost_size 16361295360

mru_metadata_esize 362282496

mru_size 9407368704

multihost.fail_intervals 10

multihost.history 0

multihost.import_intervals 20

multihost.interval 1000

multilist_num_sublists 0

no_scrub_io 0

no_scrub_prefetch 0

nocacheflush 0

nopwrite_enabled 1

obsolete_min_time_ms 500

pd_bytes_max 52428800

per_txg_dirty_frees_percent 30

prefetch.array_rd_sz 1048576

prefetch.disable 0

prefetch.max_distance 67108864

prefetch.max_idistance 67108864

prefetch.max_sec_reap 2

prefetch.max_streams 8

prefetch.min_distance 4194304

prefetch.min_sec_reap 1

read_history 0

read_history_hits 0

rebuild_max_segment 1048576

rebuild_scrub_enabled 1

rebuild_vdev_limit 33554432

reconstruct.indirect_combinations_max 4096

recover 0

recv.queue_ff 20

recv.queue_length 16777216

recv.write_batch_size 1048576

removal_suspend_progress 0

remove_max_segment 16777216

resilver_disable_defer 0

resilver_min_time_ms 3000

scan_blkstats 0

scan_checkpoint_intval 7200

scan_fill_weight 3

scan_ignore_errors 0

scan_issue_strategy 0

scan_legacy 0

scan_max_ext_gap 2097152

scan_mem_lim_fact 20

scan_mem_lim_soft_fact 20

scan_strict_mem_lim 0

scan_suspend_progress 0

scan_vdev_limit 4194304

scrub_min_time_ms 1000

send.corrupt_data 0

send.no_prefetch_queue_ff 20

send.no_prefetch_queue_length 1048576

send.override_estimate_recordsize 0

send.queue_ff 20

send.queue_length 16777216

send.unmodified_spill_blocks 1

send_holes_without_birth_time 1

slow_io_events_per_second 20

spa.asize_inflation 24

spa.discard_memory_limit 16777216

spa.load_print_vdev_tree 0

spa.load_verify_data 1

spa.load_verify_metadata 1

spa.load_verify_shift 4

spa.slop_shift 5

space_map_ibs 14

special_class_metadata_reserve_pct 25

standard_sm_blksz 131072

super_owner 0

sync_pass_deferred_free 2

sync_pass_dont_compress 8

sync_pass_rewrite 2

sync_taskq_batch_pct 75

top_maxinflight 1000

traverse_indirect_prefetch_limit 32

trim.extent_bytes_max 134217728

trim.extent_bytes_min 32768

trim.metaslab_skip 0

trim.queue_limit 10

trim.txg_batch 32

txg.history 100

txg.timeout 5

unflushed_log_block_max 131072

unflushed_log_block_min 1000

unflushed_log_block_pct 400

unflushed_log_txg_max 1000

unflushed_max_mem_amt 1073741824

unflushed_max_mem_ppm 1000

user_indirect_is_special 1

validate_skip 0

vdev.aggregate_trim 0

vdev.aggregation_limit 1048576

vdev.aggregation_limit_non_rotating 131072

vdev.async_read_max_active 3

vdev.async_read_min_active 1

vdev.async_write_active_max_dirty_percent 60

vdev.async_write_active_min_dirty_percent 30

vdev.async_write_max_active 5

vdev.async_write_min_active 1

vdev.bio_delete_disable 0

vdev.bio_flush_disable 0

vdev.cache_bshift 16

vdev.cache_max 16384

vdev.cache_size 0

vdev.def_queue_depth 32

vdev.default_ms_count 200

vdev.default_ms_shift 29

vdev.file.logical_ashift 9

vdev.file.physical_ashift 9

vdev.initializing_max_active 1

vdev.initializing_min_active 1

vdev.max_active 1000

vdev.max_auto_ashift 14

vdev.min_auto_ashift 9

vdev.min_ms_count 16

vdev.mirror.non_rotating_inc 0

vdev.mirror.non_rotating_seek_inc 1

vdev.mirror.rotating_inc 0

vdev.mirror.rotating_seek_inc 5

vdev.mirror.rotating_seek_offset 1048576

vdev.ms_count_limit 131072

vdev.nia_credit 5

vdev.nia_delay 5

vdev.queue_depth_pct 1000

vdev.read_gap_limit 32768

vdev.rebuild_max_active 3

vdev.rebuild_min_active 1

vdev.removal_ignore_errors 0

vdev.removal_max_active 2

vdev.removal_max_span 32768

vdev.removal_min_active 1

vdev.removal_suspend_progress 0

vdev.remove_max_segment 16777216

vdev.scrub_max_active 3

vdev.scrub_min_active 1

vdev.sync_read_max_active 10

vdev.sync_read_min_active 10

vdev.sync_write_max_active 10

vdev.sync_write_min_active 10

vdev.trim_max_active 2

vdev.trim_min_active 1

vdev.validate_skip 0

vdev.write_gap_limit 4096

version.acl 1

version.ioctl 15

version.module v2023012500-zfs_9ef0b67f8

version.spa 5000

version.zpl 5

vnops.read_chunk_size 1048576

vol.mode 2

vol.recursive 0

vol.unmap_enabled 1

wrlog_data_max 6861138328

xattr_compat 1

zap_iterate_prefetch 1

zevent.len_max 512

zevent.retain_expire_secs 900

zevent.retain_max 2000

zfetch.max_distance 67108864

zfetch.max_idistance 67108864

zil.clean_taskq_maxalloc 1048576

zil.clean_taskq_minalloc 1024

zil.clean_taskq_nthr_pct 100

zil.maxblocksize 131072

zil.nocacheflush 0

zil.replay_disable 0

zil.slog_bulk 786432

zio.deadman_log_all 0

zio.dva_throttle_enabled 1

zio.exclude_metadata 0

zio.requeue_io_start_cut_in_line 1

zio.slow_io_ms 30000

zio.taskq_batch_pct 80

zio.taskq_batch_tpq 0

zio.use_uma 1

VDEV cache disabled, skipping section

ZIL committed transactions: 1.3k

Commit requests: 79

Flushes to stable storage: 79

Transactions to SLOG storage pool: 0 Bytes 0

Transactions to non-SLOG storage pool: 2.4 MiB 78