TrueNAS-12.0-U8 Core

ESXi 6.7u3

Two pools in the system:

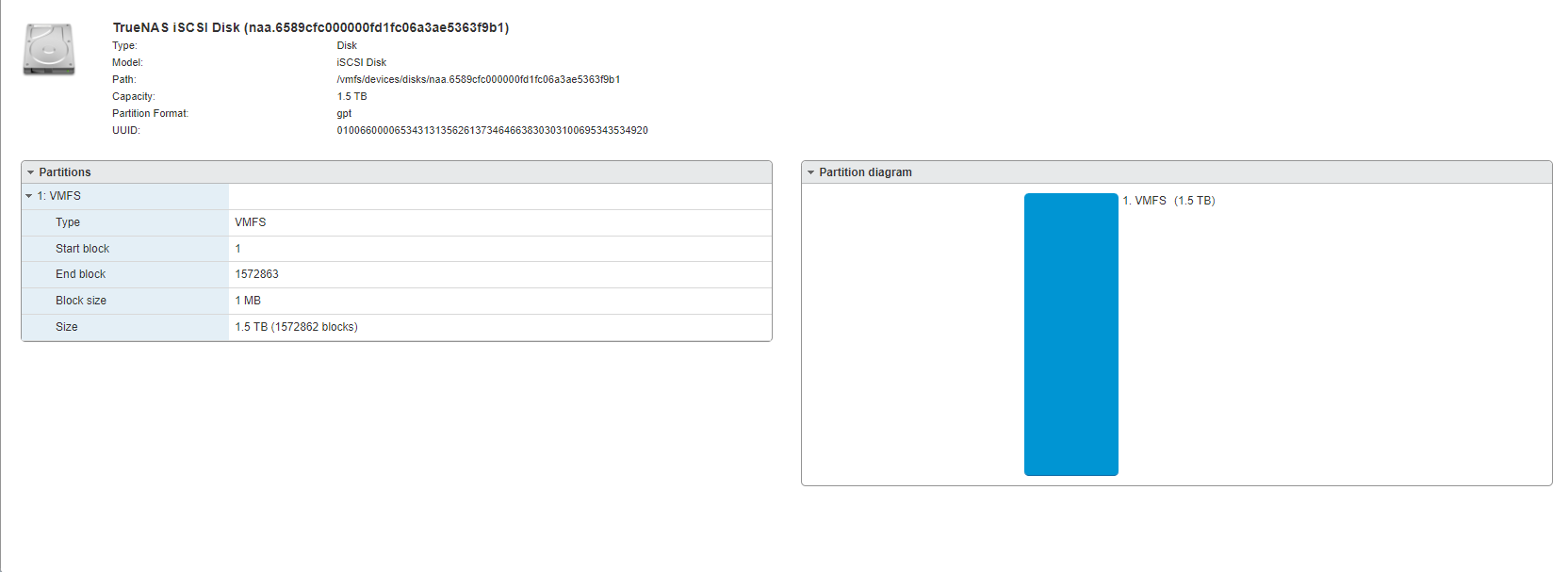

Pool-01 has a zvol that is presented to the ESXi cluster via iSCSI and formatted as a VMFS6 datastore.

Pool-02 has a zvol that is presented to the ESXi cluster via iSCSI, but it cannot be added as a VMFS6 datastore. ESXi does appear to create a GPT partition on it; it just fails to add the datastore. The same zvol accepts VMFS5 with no issues.

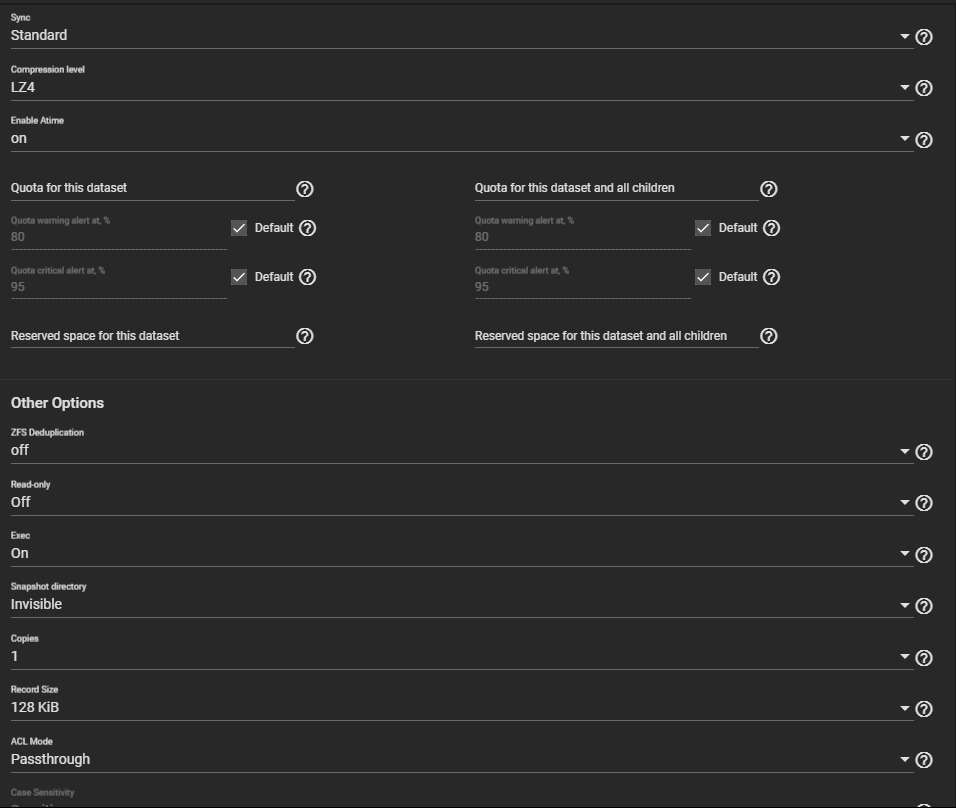

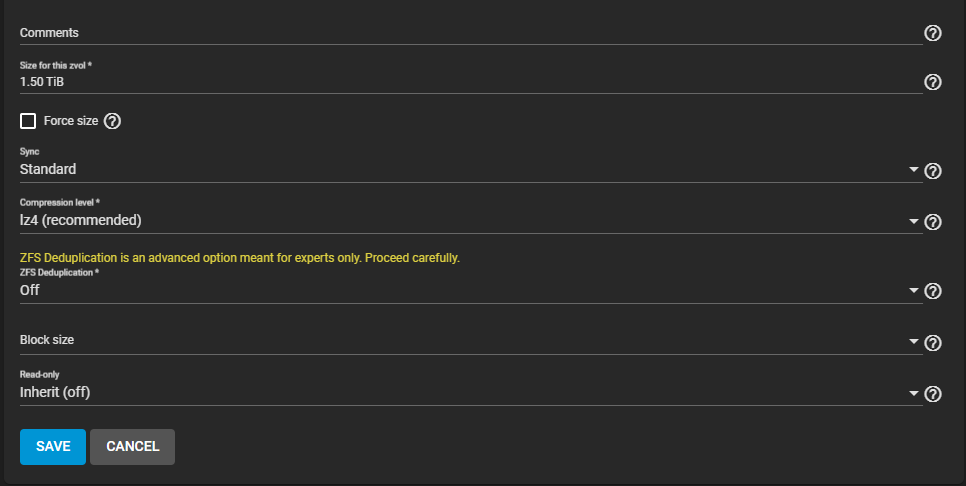

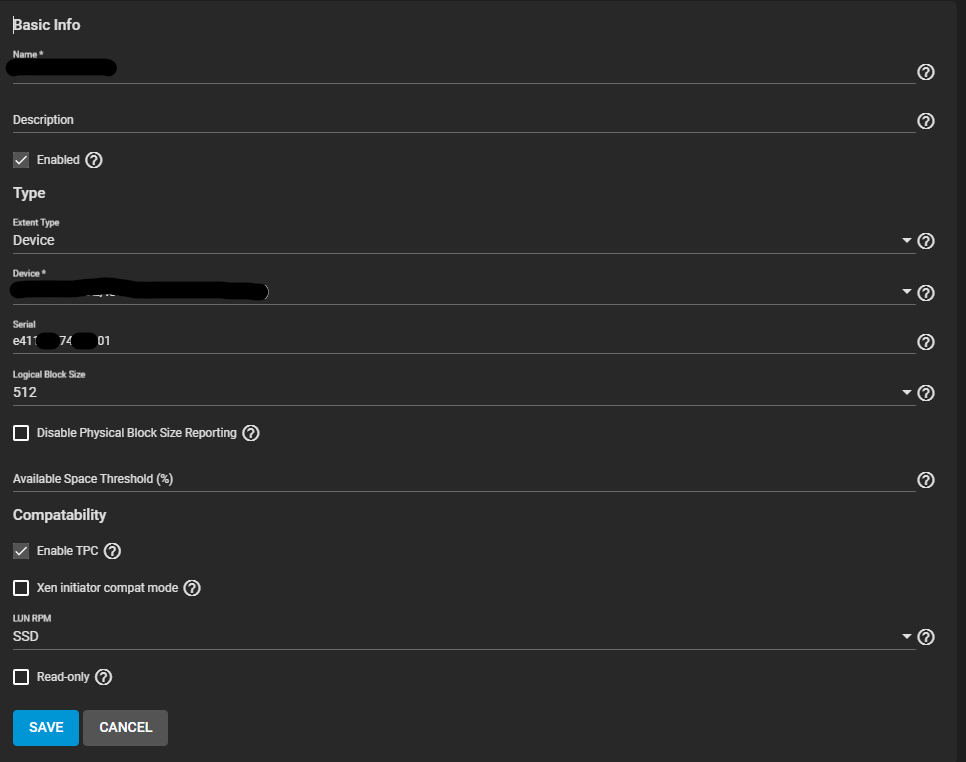

Both pools, zvols, and iSCSI shares are set up identically. I can't figure out what the issue is here.
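One way to confirm that the two zvols really are configured the same is to diff their ZFS properties instead of comparing them by eye in the UI. The following is a minimal Python sketch under stated assumptions: it expects the tab-separated output of `zfs get -H -o property,value all <zvol>`, and the dataset names `Pool-01/zvol-01` and `Pool-02/zvol-02` in the usage comment are hypothetical placeholders, not names taken from this system.

```python
import subprocess


def parse_zfs_get(output):
    """Parse tab-separated `zfs get -H -o property,value` output into a dict."""
    props = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, _, value = line.partition("\t")
        props[name.strip()] = value.strip()
    return props


def diff_props(a, b, ignore=()):
    """Return {property: (value_a, value_b)} for every property that differs."""
    keys = sorted(set(a) | set(b))
    return {
        k: (a.get(k), b.get(k))
        for k in keys
        if k not in ignore and a.get(k) != b.get(k)
    }


def zvol_props(dataset):
    """Read all properties of one dataset by calling the zfs CLI."""
    result = subprocess.run(
        ["zfs", "get", "-H", "-o", "property,value", "all", dataset],
        check=True, capture_output=True, text=True,
    )
    return parse_zfs_get(result.stdout)


# Usage on the TrueNAS host (dataset names are hypothetical):
#   a = zvol_props("Pool-01/zvol-01")
#   b = zvol_props("Pool-02/zvol-02")
#   for prop, (va, vb) in diff_props(a, b, ignore=("guid", "creation")).items():
#       print(prop, va, vb)
```

Properties that are expected to differ between any two datasets (for example `guid` and `creation`) can be passed in `ignore`; anything left in the result is a real configuration difference worth looking at.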

I have read the following posts:

Can't configure VMFS6 over iSCSI

Hi all! I have a 2x TrueNAS scale server implementation with iSCSI VMFS6 datastores configured for ESXi. Both servers are running the latest Scale release (22.02 RC2). Due to something completely unrelated to VMWare, I wound up rebuilding one of the servers and re-configured everything from...

Broadcom Community - VMTN, Mainframe, Symantec, Carbon Black (communities.vmware.com)

But I don't think either one really pertains to my situation.

vCenter tells me to pull the vmkernel logs, which are posted below.

Not sure if this carries any weight, but the iSCSI shares are advertised over SFP+ NICs (HP 586444-001 NC550SFP dual-port 10GbE): https://www.amazon.com/gp/product/B0073YUJM0/ref=ppx_yo_dt_b_search_asin_title?ie=UTF8&psc=1

Code:

2022-03-19T13:42:58.515Z cpu5:2098116)SunRPC: 1099: Destroying world 0x20162e
2022-03-19T13:43:29.515Z cpu0:2098116)SunRPC: 1099: Destroying world 0x201631
2022-03-19T13:44:00.521Z cpu5:2098116)SunRPC: 1099: Destroying world 0x2016b1
2022-03-19T13:45:01.516Z cpu5:2098116)SunRPC: 1099: Destroying world 0x20172a
2022-03-19T13:45:32.515Z cpu4:2098116)SunRPC: 1099: Destroying world 0x201737
2022-03-19T13:46:03.515Z cpu2:2098116)SunRPC: 1099: Destroying world 0x201740
2022-03-19T13:46:11.429Z cpu0:2100822 opID=8567e66e)World: 11950: VC opID l0xvb35o-15109-auto-bnt-h5:70001557-98-29-7987 maps to vmkernel opID 8567e66e
2022-03-19T13:46:11.429Z cpu0:2100822 opID=8567e66e)LVM: 10438: Initialized naa.6589cfc000000fd1fc06a3ae5363f9b1:1, devID 6235dea3-9cc073dc-9255-6cb3115e3476
2022-03-19T13:46:11.513Z cpu0:2100822 opID=8567e66e)LVM: 13563: Deleting device <naa.6589cfc000000fd1fc06a3ae5363f9b1:1>dev OpenCount: 0, postRescan: False
2022-03-19T13:46:11.518Z cpu4:2100822 opID=8567e66e)LVM: 10532: Zero volumeSize specified: using available space (1649248567296).
2022-03-19T13:46:11.537Z cpu4:2100822 opID=8567e66e)WARNING: Vol3: 3208: Datastore/6235dea3-aff6f824-0e54-6cb3115e3476: Invalid CG offset 65536
2022-03-19T13:46:11.537Z cpu4:2100822 opID=8567e66e)FSS: 2350: Failed to create FS on dev [6235dea3-8dd48bc3-1643-6cb3115e3476] fs [Datastore] type [vmfs6] fbSize 1048576 => Bad parameter
2022-03-19T13:46:14.518Z cpu4:2097268)LVM: 16795: One or more LVM devices have been discovered.

(after failing, the partition shows as formatted).
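Most of the vmkernel output above is unrelated SunRPC noise; the two entries that matter are the `Invalid CG offset 65536` warning and the `Failed to create FS` line that follows it. A minimal Python sketch for pulling such entries out of a longer log is shown here. It assumes the entry layout seen in this post (timestamp, `cpuN:worldID`, optional `opID=`, then the message) and treats anything starting with `WARNING:` or containing `Failed` as interesting, which is a heuristic of this sketch and not an ESXi rule. It splits on timestamps, so it works whether entries are on separate lines or run together.

```python
import re

# Start of an entry: ISO timestamp followed by "cpuN:worldID".
ENTRY_START = re.compile(
    r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3}Z cpu\d+:\d+"
)
# Timestamp, optional opID, then the message after the closing parenthesis.
ENTRY = re.compile(
    r"^(?P<ts>\S+) cpu\d+:\d+(?: opID=(?P<opid>\w+))?\)(?P<msg>.*)$",
    re.DOTALL,
)


def split_entries(text):
    """Cut the log text into entries at every timestamp."""
    starts = [m.start() for m in ENTRY_START.finditer(text)]
    ends = starts[1:] + [len(text)]
    return [text[s:e].strip() for s, e in zip(starts, ends)]


def failures(text):
    """Return (timestamp, opID, message) for warning and failure entries."""
    hits = []
    for entry in split_entries(text):
        m = ENTRY.match(entry)
        if m is None:
            continue
        msg = m.group("msg")
        if msg.startswith("WARNING:") or "Failed" in msg:
            hits.append((m.group("ts"), m.group("opid"), msg))
    return hits


# Usage, assuming the log was copied off the host to a local file:
#   with open("vmkernel.log") as fh:
#       for ts, opid, msg in failures(fh.read()):
#           print(ts, opid, msg)
```

Because both matching entries share the same `opID`, the returned value can also be used to pull every entry that belongs to the failed datastore-creation task.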
