I've worked through the article on b3n.org about running FreeNAS as a VM. I've given the VM 2 dedicated cores and 16 GB of dedicated RAM.

I followed all the recommendations as best I could. I have the following setup:

- Supermicro X10SRH-CLN4F
- Intel(R) Xeon(R) CPU E5-2650 (12 cores with Hyper-Threading)
- LSI 3008 (onboard), flashed to IT mode
- 64 GB DDR4-2400 RAM
- 6 × 2 TB WD Red (set up as RAID 10)
- 2 × 100 GB Intel S3700 (mirrored) as ZIL

I installed embedded vSphere 6.5, the most recent release.

I also installed FreeNAS build FreeNAS-9.10.2-U3 (e1497f269).

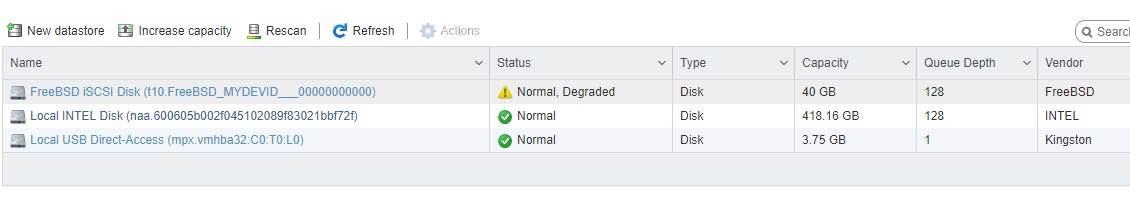

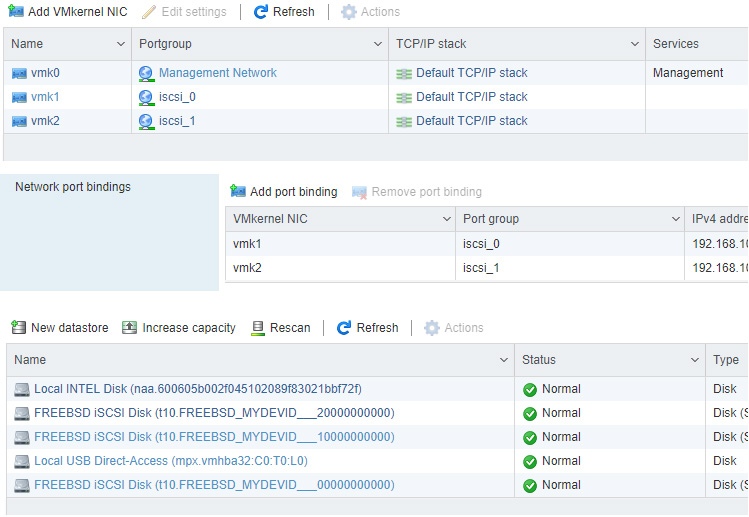

I followed this guide as best I could, and everything worked well up to this point. But as you can see, my device is shown as "normal, degraded."

As an aside, I have to run a rescan/refresh on the iSCSI software adapter, followed by another refresh, before the device shows up. More importantly, the device is listed as "normal, degraded." I'm not sure whether this is because I don't have multipathing. I have no external storage (it's all attached to the motherboard), so I don't see the point of a second path, unless it's needed to make this degraded message go away.

Any idea whether I should troubleshoot this further, or is it just a quirk of iSCSI/multipathing? Attached are screenshots of my FreeNAS iSCSI share configuration.
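If the "degraded" status does come from ESXi seeing only one path to the LUN (the suspicion above, treated here as an assumption rather than a confirmed cause), the thing to check is how many paths each device has. Below is a minimal Python sketch that groups paths by device and flags devices with fewer than two. It assumes the text output of `esxcli storage core path list` consists of per-path blocks containing `Runtime Name:` and `Device:` lines. The sample text is hypothetical and abridged: all identifiers are placeholders, and the second device (`naa.example0002`, reached over two paths) is made up purely for contrast.

```python
from collections import defaultdict

# Hypothetical, abridged stand-in for `esxcli storage core path list` output.
# All identifiers are placeholders, not values from the setup described above.
SAMPLE_OUTPUT = """\
iqn.example-initiator,iqn.example-target,t,1-naa.example0001
   Runtime Name: vmhba64:C0:T0:L0
   Device: naa.example0001

iqn.example-initiator,iqn.example-target-b,t,1-naa.example0002
   Runtime Name: vmhba64:C0:T1:L0
   Device: naa.example0002

iqn.example-initiator-b,iqn.example-target-b,t,1-naa.example0002
   Runtime Name: vmhba65:C0:T1:L0
   Device: naa.example0002
"""


def paths_per_device(output: str) -> dict[str, list[str]]:
    """Group each path's runtime name under the device it leads to."""
    devices: dict[str, list[str]] = defaultdict(list)
    runtime_name = None
    for line in output.splitlines():
        key, sep, value = line.strip().partition(": ")
        if not sep:
            continue  # header and blank lines have no "Key: value" pair
        if key == "Runtime Name":
            runtime_name = value
        elif key == "Device" and runtime_name is not None:
            devices[value].append(runtime_name)
            runtime_name = None
    return dict(devices)


def single_path_devices(output: str) -> list[str]:
    """Return devices with fewer than two paths, i.e. no path redundancy."""
    return sorted(
        dev for dev, paths in paths_per_device(output).items() if len(paths) < 2
    )


if __name__ == "__main__":
    for device in single_path_devices(SAMPLE_OUTPUT):
        print(f"{device}: only one path, no redundancy")
```

With all storage local to the host, a single-path iSCSI device is expected, so a flag here would indicate missing redundancy rather than a fault in the path itself.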

