dirtycamacho · Cadet · Joined Jun 8, 2023 · Messages: 8

Hi all,

First, I apologize if there is an existing thread documenting this issue, but I could not find anything quite related. Full system specs are included at the end of this post, but some high-level information to set the stage:

My TrueNAS system consists of 3 pools:

- freenas-boot - System Dataset Pool, made up of 2x PNY CS900 120GB SATA SSDs

- user-ssd-pool - Used for hosting network shares, made up of 8x HGST Ultrastar HSCAC2DA2SUN1.6T 1.6TB SAS SSDs (These drives have DIF enabled, which I know I need to correct, but that only became apparent after the upgrade from Core to Scale and is not the focus of this post; they're all functioning fine)

- vm-ssd-pool - Used for iSCSI shared storage for a virtualization cluster, made up of 6x Intel SSDSC2BB600G4 600GB SATA SSDs

After using FreeNAS and TrueNAS Core for the past 4 years, I finally made the switch to Scale about 2 weeks ago in hopes of taking advantage of Linux containers. The upgrade seemed to go well: I tested everything and was back up and running in under 30 minutes.

The following morning I woke up to an alert that one of the Intel SSDs from the vm-ssd-pool was in a faulted state due to too many r/w errors. Sure enough, there were a combined 15 r/w errors on one drive. I have a spare, but performed all the testing and troubleshooting I could first so as to not replace a perfectly fine drive. I ran a SMART short and long test on the faulted drive and, although I am not the best at reading the results, nothing stood out to me as being indicative of a failure or even pre-failure. I ran a scrub on the pool and there were no errors returned whatsoever. So I made a note to keep an eye on that drive, performed a zpool clear, and went on with my day.
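For anyone wanting to repeat the triage sequence described above (SMART short and long tests, a scrub, then clearing the counters), here is a minimal dry-run sketch. It only prints the commands rather than running them. The device path `/dev/sdX` is a placeholder and the helper name `triage_commands` is made up for illustration; the `smartctl` and `zpool` subcommands themselves are the standard ones.

```python
import shlex


def triage_commands(pool, device):
    """Return the triage commands, in order, as argument lists.

    Nothing is executed here. Each SMART self-test has to finish before
    the next one is started, and the scrub has to finish before its
    result can be read from 'zpool status'.
    """
    return [
        ["smartctl", "-t", "short", device],  # start the short self-test
        ["smartctl", "-t", "long", device],   # start the long self-test
        ["smartctl", "-a", device],           # read attributes and self-test log
        ["zpool", "scrub", pool],             # verify every block in the pool
        ["zpool", "status", "-v", pool],      # check scrub result and error counters
        ["zpool", "clear", pool],             # reset the READ/WRITE/CKSUM counters
    ]


if __name__ == "__main__":
    # "/dev/sdX" is a placeholder, not a real device from this system.
    for cmd in triage_commands("vm-ssd-pool", "/dev/sdX"):
        print(shlex.join(cmd))
```

Keep in mind that `zpool clear` only resets the counters; it does not fix whatever caused the errors.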

Later that same day I received an alert that another of the Intel SSDs from the vm-ssd-pool had been marked as degraded due to r/w errors. I followed the same process with the same result.

Over the next few days I continued to receive these errors from each drive in the vm-ssd-pool, but after the first day I stopped clearing the pool. On Wednesday (yesterday) morning I shut down the server, removed the drives, dusted out the system, cleaned contact points, and reseated the drives. At first all seemed well, but this morning I woke up to the same thing. Now all drives have r/w errors.

I'm trying to figure out what could be going on here. The SSDs from the user-ssd-pool, attached to the same backplane and HBA, are working just fine and producing no errors, and the same can be said of the SSDs from the freenas-boot pool (though they are directly connected to the motherboard, so not a direct comparison). AND all of the errored drives have been in use in this system for over 2 years without error until the upgrade from Core to Scale. I can see a drive or two starting to fail, but I find it very hard to believe that all 6 drives are failing at the same time when they were humming along happily before the upgrade.

Any and all assistance provided is greatly appreciated. I just need to get to the bottom of this before it becomes a larger issue.

Posting the smartctl output for each drive would exceed the character limit, so the outputs will be in the first reply to this post.

Case - Supermicro C216

Motherboard - Supermicro X11SS

CPU - Intel Xeon E3-1260L v5 @ 2.90GHz

RAM - 2x Crucial 16GB DDR4 2666MHz ECC Memory CT16G4XFD8266

Hard drives - 2x PNY CS900 120GB SATA SSDs (ZFS Mirror), 8x HGST Ultrastar HSCAC2DA2SUN1.6T 1.6TB SAS SSDs (4x Mirrored vdevs creating 'RAID10'), 6x Intel SSDSC2BB600G4 600GB SATA SSDs (3x Mirrored vdevs creating 'RAID10')

Controller - Onboard SATA for boot pool, AVAGO/LSI SAS9300-8i HBA (IT Mode) connected to 24-port backplane with SAS expander via 2x SFF-8643 cables for all other drives in the system

Network Card - Chelsio T520-SO 2-Port 10G SFP+ (connected via DAC cable)

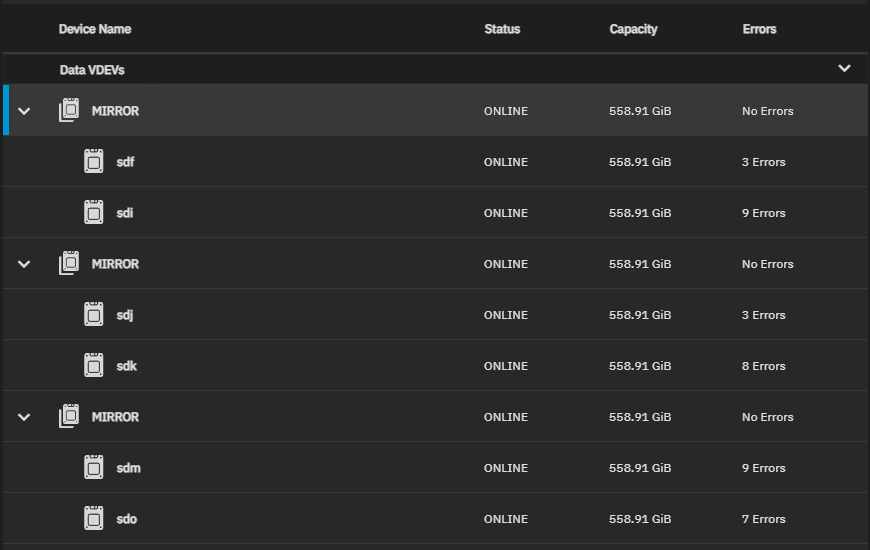

zpool status vm-ssd-pool

      pool: vm-ssd-pool
     state: ONLINE
    status: One or more devices has experienced an unrecoverable error. An
            attempt was made to correct the error. Applications are unaffected.
    action: Determine if the device needs to be replaced, and clear the errors
            using 'zpool clear' or replace the device with 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
      scan: scrub repaired 0B in 00:19:34 with 0 errors on Thu Jun 8 21:27:43 2023
    config:

            NAME                                      STATE     READ WRITE CKSUM
            vm-ssd-pool                               ONLINE       0     0     0
              mirror-0                                ONLINE       0     0     0
                507ef7fa-7c25-11ea-87c8-a0369f202eac  ONLINE       0     3     0
                50a0fced-7c25-11ea-87c8-a0369f202eac  ONLINE       4     5     0
              mirror-1                                ONLINE       0     0     0
                5085030a-7c25-11ea-87c8-a0369f202eac  ONLINE       6     2     0
                509c0543-7c25-11ea-87c8-a0369f202eac  ONLINE       2     1     0
              mirror-2                                ONLINE       0     0     0
                41490268-70ad-11eb-9419-00074339f020  ONLINE       0     7     0
                414e033c-70ad-11eb-9419-00074339f020  ONLINE       1     8     0

    errors: No known data errors
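To track these counters over time instead of reading them by eye, the config table can be parsed with a few lines of Python. This is only a sketch: the function names are made up for illustration, the sample text is the config section from the output above, and the regular expression assumes plain integer counters (large counts may be abbreviated unless `zpool status -p` is used).

```python
import re

# One row of the config table: NAME STATE READ WRITE CKSUM.
# The header row does not match because "STATE" is not a listed state.
ROW = re.compile(
    r"^\s*(\S+)\s+(ONLINE|DEGRADED|FAULTED|OFFLINE|UNAVAIL|REMOVED)"
    r"\s+(\d+)\s+(\d+)\s+(\d+)\s*$"
)


def device_errors(status_text):
    """Map each pool/vdev/device name to its (read, write, cksum) counters."""
    rows = {}
    for line in status_text.splitlines():
        match = ROW.match(line)
        if match:
            name, _state, read, write, cksum = match.groups()
            rows[name] = (int(read), int(write), int(cksum))
    return rows


def nonzero_errors(status_text):
    """Keep only the entries that have at least one non-zero counter."""
    return {
        name: counts
        for name, counts in device_errors(status_text).items()
        if any(counts)
    }


# Config section copied from the zpool status output in this post.
SAMPLE = """\
NAME STATE READ WRITE CKSUM
vm-ssd-pool ONLINE 0 0 0
mirror-0 ONLINE 0 0 0
507ef7fa-7c25-11ea-87c8-a0369f202eac ONLINE 0 3 0
50a0fced-7c25-11ea-87c8-a0369f202eac ONLINE 4 5 0
mirror-1 ONLINE 0 0 0
5085030a-7c25-11ea-87c8-a0369f202eac ONLINE 6 2 0
509c0543-7c25-11ea-87c8-a0369f202eac ONLINE 2 1 0
mirror-2 ONLINE 0 0 0
41490268-70ad-11eb-9419-00074339f020 ONLINE 0 7 0
414e033c-70ad-11eb-9419-00074339f020 ONLINE 1 8 0
"""

if __name__ == "__main__":
    for name, (read, write, cksum) in nonzero_errors(SAMPLE).items():
        print(f"{name}: read={read} write={write} cksum={cksum}")
```

Run against the output above, this lists all six leaf devices, each with read and/or write errors and no checksum errors.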
