TL;DR: I'm looking for documentation on how ZFS distributes writes across unbalanced virtual devices (vdevs).

I've seen several posts explaining how ZFS handles a new virtual device being added to a pool, but they contradict each other or my own experiments. The read/write speed on my NAS is lower than expected, and I'm looking to speed it up.

I'm running TrueNAS SCALE 22.12.3.2 with ZFS version zfs-2.1.11-1.

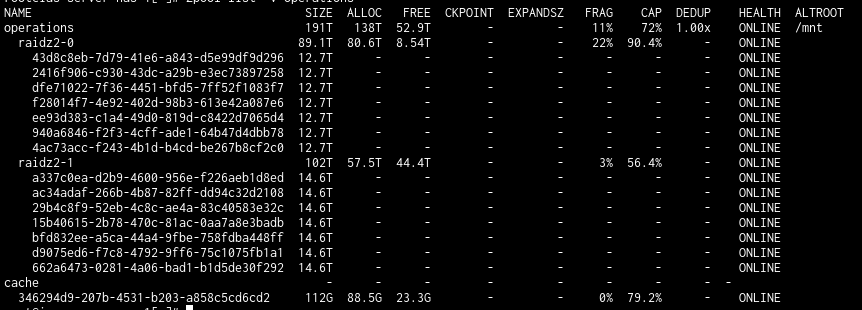

My NAS contains two RAIDZ2 virtual devices (vdevs). One is made of seven 14TB SAS drives (WUH721414AL5204) with a sustained transfer rate of 267 MB/s. The other has seven 16TB SAS drives (ST16000NM000J-2TW103) with a sustained transfer rate of 270 MB/s.

The NAS uses GZIP compression.

A 128 GB SSD is attached as a cache (L2ARC) device.

By my estimate, the theoretical maximum sequential read/write speed should be 267 MB/s per drive × (7 − 2) data drives per vdev × 2 vdevs = 2670 MB/s.
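The estimate above can be written out as a small calculation. This is a sketch under idealised assumptions (sequential throughput scales linearly with the number of data drives, with no compression, parity computation or metadata overhead), and the helper name `raidz_streaming_mb_s` is hypothetical. It also shows the same estimate using each vdev's own drive rate instead of the slower one for both.

```python
def raidz_streaming_mb_s(drive_mb_s: float, drives: int, parity: int) -> float:
    """Idealised sequential throughput of one RAIDZ vdev: only data drives count."""
    return drive_mb_s * (drives - parity)

# Estimate as in the post: both vdevs costed at the slower drive's 267 MB/s.
same_rate = 2 * raidz_streaming_mb_s(267, drives=7, parity=2)

# Same estimate using each vdev's own sustained rate (267 MB/s and 270 MB/s).
per_vdev = raidz_streaming_mb_s(267, drives=7, parity=2) + raidz_streaming_mb_s(270, drives=7, parity=2)

print(same_rate)  # 2670
print(per_vdev)   # 2685
```

Either way the ceiling is roughly 2.7 GB/s, so the small difference between the two drive models does not change the picture.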

I've created a 10GB file from /dev/urandom to test the read and write speeds.

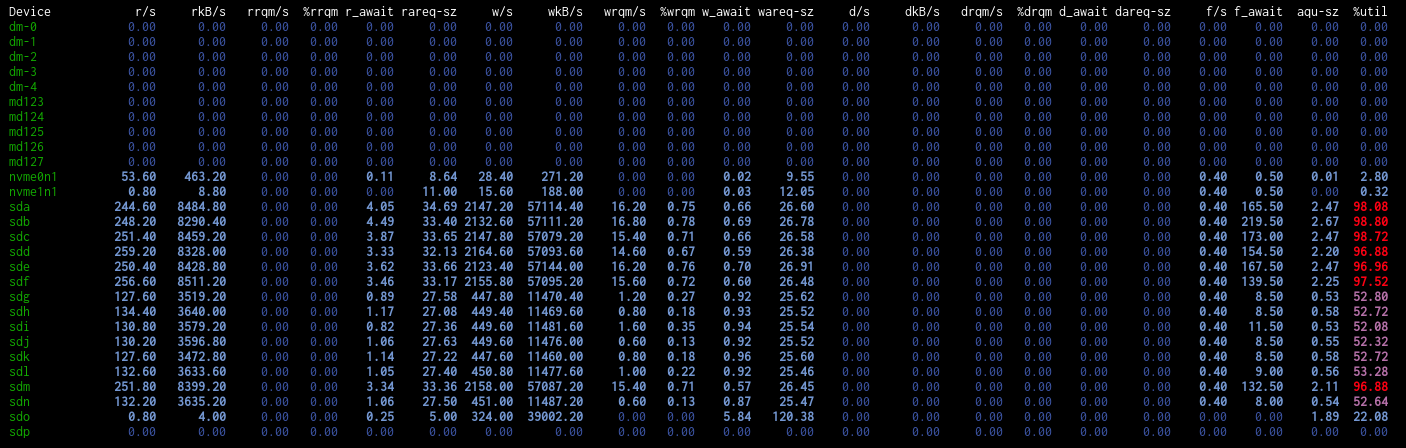

`dd if=random_file of=/dev/null bs=1M count=10000` on this file gives a read speed of 810 MB/s. Testing the write speed with `dd if=random_file of=file2 bs=1M count=10000` gives a write speed of 539 MB/s.

The 14TB drives seem to have maxed out their utilisation (running at 95%+), while the 16TB drives are running at 50%+.
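For repeating the read test outside of `dd`, here is a rough Python analogue of `dd if=random_file of=/dev/null bs=1M count=10000`. The function name `dd_like_read` is hypothetical. Like `dd`, it times whatever actually serves the reads, so blocks still cached in RAM (the ZFS ARC) can make the result look faster than the disks alone would allow.

```python
import os
import time

def dd_like_read(path: str, block_size: int = 1024 * 1024, count: int = 10000) -> tuple[int, float]:
    """Read up to `count` blocks of `block_size` bytes; return (bytes_read, MB/s).

    MB/s is decimal (10**6 bytes per second), the unit GNU dd reports.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 opens a raw binary file, so each read() is a single read syscall
    with open(path, "rb", buffering=0) as f:
        for _ in range(count):
            chunk = f.read(block_size)
            if not chunk:  # end of file reached before `count` blocks
                break
            total += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against dividing by zero
    return total, total / 1e6 / elapsed
```

For example, `dd_like_read("random_file")` returns the number of bytes read and the read speed in MB/s for the file used in the `dd` test.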

I've looked at the OpenZFS documentation (https://openzfs.github.io/openzfs-docs/Getting%20Started/index.html), but I'm unable to find anything on how writes are distributed across unbalanced vdevs. If data were written to the least full vdev until all vdevs are balanced, the 14TB vdev wouldn't be running at 98% utilisation. If data were written to each vdev in proportion to its free space, I would expect the 16TB vdev to show much higher utilisation than 50%, since it would be receiving far more of the data (the 14TB vdev is 90% full). If data were written to each vdev in proportion to the current speed of its disks, I would expect both vdevs to run at around 98% utilisation.
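The three hypotheses above can be compared side by side with a toy model. This is not how OpenZFS actually allocates space; it only encodes the three policies described in the post. The vdev capacities (five data drives per RAIDZ2 vdev, ignoring overhead) and the 50% fill level of the 16TB vdev are assumptions, and the computed "relative load" (share of a vdev's idealised bandwidth) is only a rough proxy for the utilisation percentages reported by disk-monitoring tools, since it ignores parity writes.

```python
from dataclasses import dataclass

@dataclass
class Vdev:
    name: str
    capacity_tb: float     # approximate usable capacity (assumption)
    used_fraction: float   # how full the vdev is, 0..1
    bandwidth_mb_s: float  # idealised streaming bandwidth of the vdev

    @property
    def free_tb(self) -> float:
        return self.capacity_tb * (1 - self.used_fraction)

def write_shares(vdevs: list[Vdev], policy: str) -> dict[str, float]:
    """Fraction of new data sent to each vdev under one hypothesised policy."""
    if policy == "least_full_first":
        # Everything goes to the least full vdev until the vdevs are balanced.
        target = min(vdevs, key=lambda v: v.used_fraction)
        return {v.name: 1.0 if v is target else 0.0 for v in vdevs}
    if policy == "proportional_to_free_space":
        total = sum(v.free_tb for v in vdevs)
        return {v.name: v.free_tb / total for v in vdevs}
    if policy == "proportional_to_speed":
        total = sum(v.bandwidth_mb_s for v in vdevs)
        return {v.name: v.bandwidth_mb_s / total for v in vdevs}
    raise ValueError(f"unknown policy: {policy!r}")

def relative_load(vdevs: list[Vdev], policy: str, write_mb_s: float) -> dict[str, float]:
    """Fraction of each vdev's bandwidth needed to sustain `write_mb_s` overall."""
    shares = write_shares(vdevs, policy)
    return {v.name: shares[v.name] * write_mb_s / v.bandwidth_mb_s for v in vdevs}

vdevs = [
    Vdev("14TB-raidz2", capacity_tb=70, used_fraction=0.90, bandwidth_mb_s=1335),
    # Hypothetical fill level: the post does not say how full the 16TB vdev is.
    Vdev("16TB-raidz2", capacity_tb=80, used_fraction=0.50, bandwidth_mb_s=1350),
]
for policy in ("least_full_first", "proportional_to_free_space", "proportional_to_speed"):
    load = relative_load(vdevs, policy, write_mb_s=539)  # 539 MB/s: measured dd write speed
    print(policy, {name: round(x, 2) for name, x in load.items()})
```

Only the speed-proportional policy gives both vdevs the same relative load, which matches the expectation in the last hypothesis; the other two load the 16TB vdev more heavily than the 14TB one.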

Does anyone have a link to the relevant documentation, please?