jenesuispasbavard (Dabbler; joined Jan 1, 2023; 16 messages)

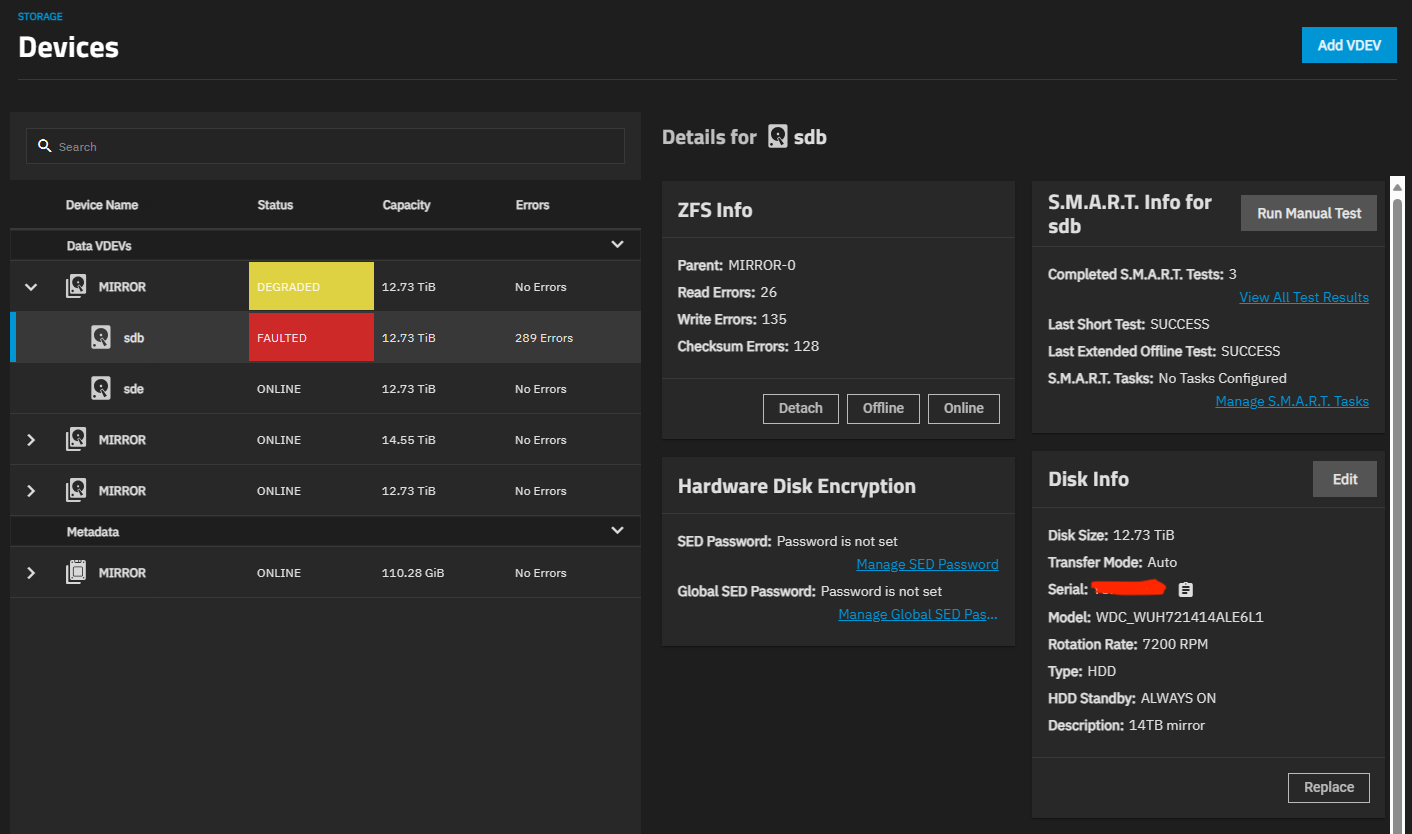

Ok, so at work this morning I got a series of alarming emails from my TrueNAS box (see pool configuration in screenshot):

1. 10:00 - ZFS device fault for pool storagepool on moi-truenas

   - The number of I/O errors associated with a ZFS device exceeded acceptable levels. ZFS has marked the device as faulted.

2. 10:01 - Pool storagepool state is DEGRADED: One or more devices are faulted in response to persistent errors. Sufficient replicas exist for the pool to continue functioning in a degraded state. The following devices are not healthy:

   - Disk WDC_WUH721414ALE6L1 <serial-number> is FAULTED

3. 10:23 - ZFS device fault for pool storagepool on moi-truenas

   - ZFS has detected that a device was removed.

4. 10:24 - Pool storagepool state is ONLINE: One or more devices is currently being resilvered. The pool will continue to function, possibly in a degraded state. (This alert cleared alert 2.)

5. 10:24 - ZFS resilver_finish event for storagepool on moi-truenas

   - scan: resilvered 1.36G in 00:00:13 with 0 errors on Wed Nov 8 10:24:20 2023

6. 10:25 - Cleared alert 4.
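To put numbers on the resilver line in the emails above, here is a rough Python sketch that pulls out the amount resilvered, the duration, and the error count. The function name `parse_resilver` and the regular expression are illustrative only, not part of TrueNAS or ZFS. The sketch assumes the `scan:` line has exactly the format quoted above and that the size suffix is a binary unit (G = 2^30 bytes).

```python
import re

# Hypothetical helper, written against the exact "scan:" line quoted in the
# alert email. Other ZFS versions may format the duration differently
# (for example with a leading "N days"), in which case this returns None.
SCAN_RE = re.compile(
    r"resilvered\s+(?P<size>[\d.]+)(?P<unit>[KMGT])\s+in\s+"
    r"(?P<h>\d+):(?P<m>\d+):(?P<s>\d+)\s+with\s+(?P<errors>\d+)\s+errors"
)

# Assumption: size suffixes are powers of two.
UNIT_BYTES = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def parse_resilver(line):
    """Return bytes resilvered, duration in seconds, and error count, or None."""
    m = SCAN_RE.search(line)
    if m is None:
        return None
    seconds = int(m["h"]) * 3600 + int(m["m"]) * 60 + int(m["s"])
    size_bytes = float(m["size"]) * UNIT_BYTES[m["unit"]]
    return {"bytes": size_bytes, "seconds": seconds, "errors": int(m["errors"])}


line = "scan: resilvered 1.36G in 00:00:13 with 0 errors on Wed Nov 8 10:24:20 2023"
info = parse_resilver(line)
if info is not None and info["seconds"] > 0:
    rate_mib_s = info["bytes"] / info["seconds"] / 2**20
    print(f"{info['bytes'] / 2**30:.2f} GiB in {info['seconds']} s, "
          f"about {rate_mib_s:.0f} MiB/s, {info['errors']} errors")
```

For the line quoted above this reports a 13-second resilver with zero errors. It only summarizes the email text and does not query the pool or the drive itself.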

Should I be worried that a drive is failing? Short SMART test came back normal, LONG one running now. Or was this a case of some corrupted data being recovered from the working drive in a mirror?

Here's a screenshot of the state of the pool when the I/O errors started showing up (now it's all back to normal - ONLINE instead of DEGRADED/FAULTED):