Hi Everyone,

Custom Hardware build of TrueNAS

TrueNAS-12.0-U3

24 GB of RAM

2x Intel(R) Xeon(R) CPU E5603 @ 1.60GHz

36x Disks

34x disks are members of a pool called "DATA"

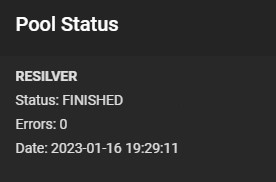

RESILVER Status below:

Status: FINISHED

Errors: 0

Date: 2023-01-16 19:29:11

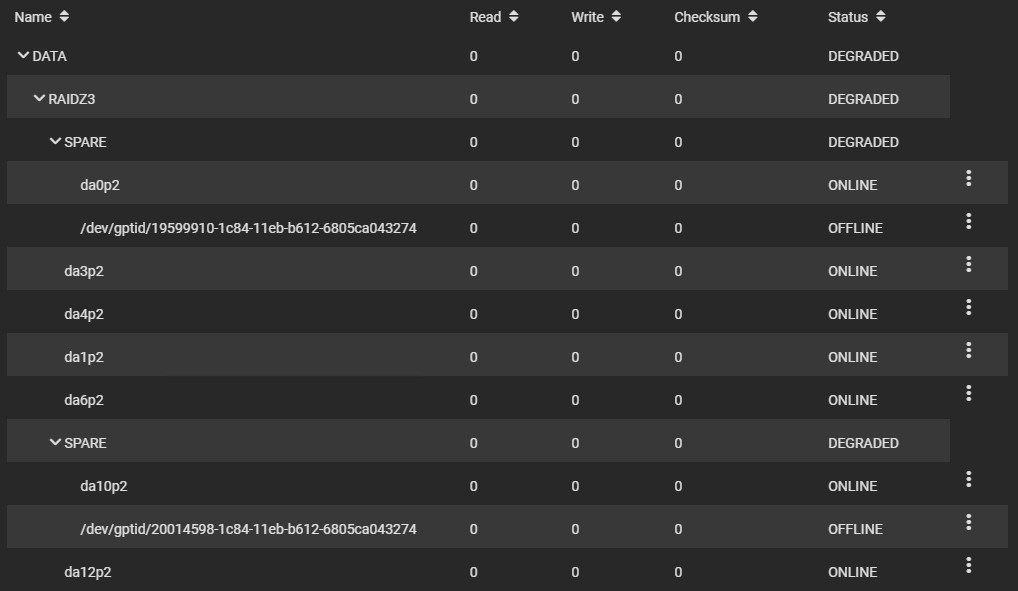

The pool is in a degraded state.

2x disks have been replaced, but the pool still shows a Degraded state.

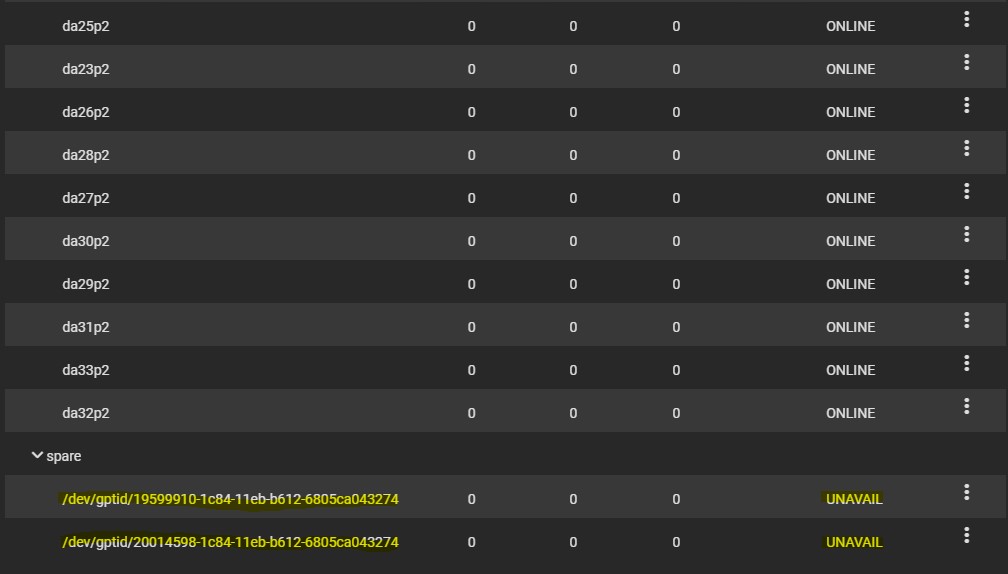

2x spares show as Unavailable; I believe they are in use.

The GPTIDs of the offline disks match the GPTIDs assigned to the spare disks.

What steps are required to get the pool back to a healthy state (see pics)?

Please let me know what other information is required.

Thanks in advance,

DC