Hello truenas community,

The problem is that I can't import my ZFS pool with the command `zpool import -f zpoolname`.

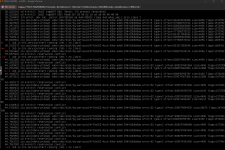

I get these errors in the log:

`zio pool=zpoolname vdev=/dev/disk/by-partuuid/xxxxxx-xxxxxxx-xxxxxx-xxxxxx-xxxxx error=5 type=1 offset=846329233408 size=4096 flags=572992`

`sd 4:0:0:2 reservation conflict`
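
For anyone reading along, here is a small Python sketch that splits the `zio` line into its `key=value` fields and decodes the numeric error code with the standard `errno` module. It assumes Linux errno numbering (where 5 is `EIO`, an input/output error). The helper name `parse_zio_line` is hypothetical, and the vdev UUID is left elided exactly as posted.

```python
import errno
import os

# The zio line from the log above, with the vdev UUID left elided as posted.
LOG_LINE = (
    "zio pool=zpoolname vdev=/dev/disk/by-partuuid/xxxxxx-xxxxxxx-xxxxxx-xxxxxx-xxxxx "
    "error=5 type=1 offset=846329233408 size=4096 flags=572992"
)


def parse_zio_line(line: str) -> dict[str, str]:
    """Hypothetical helper: split a 'zio key=value ...' line into a dict of fields."""
    fields = {}
    for token in line.split():
        if "=" in token:  # skips the leading "zio" token
            key, value = token.split("=", 1)
            fields[key] = value
    return fields


if __name__ == "__main__":
    fields = parse_zio_line(LOG_LINE)
    code = int(fields["error"])
    # errno.errorcode maps error numbers to symbolic names (5 -> EIO on Linux).
    print("error:", errno.errorcode.get(code, "unknown"), "-", os.strerror(code))
    # Convert the byte offset into GiB to see roughly where on the device it failed.
    print(f"offset: {int(fields['offset']) / 2**30:.1f} GiB, size: {fields['size']} bytes")
```

Read this way, the line reports a 4096-byte request on the pool's vdev failing with `EIO` about 788 GiB into the device; it does not by itself say why the I/O failed.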

Video: can't import zpool

The messages repeat continuously, in a loop.

I'm running TrueNAS SCALE as a VM on a Proxmox server.

Is there a way to get the pool imported so I can access my data?

Is there another way to access the data on `vm-100-disk-0.raw`?

I really need that data, so please help me.

Thank you