I am running TrueNAS CORE and tried to migrate to SCALE. After the migration, one device of my pool 'safe' was reported as missing:

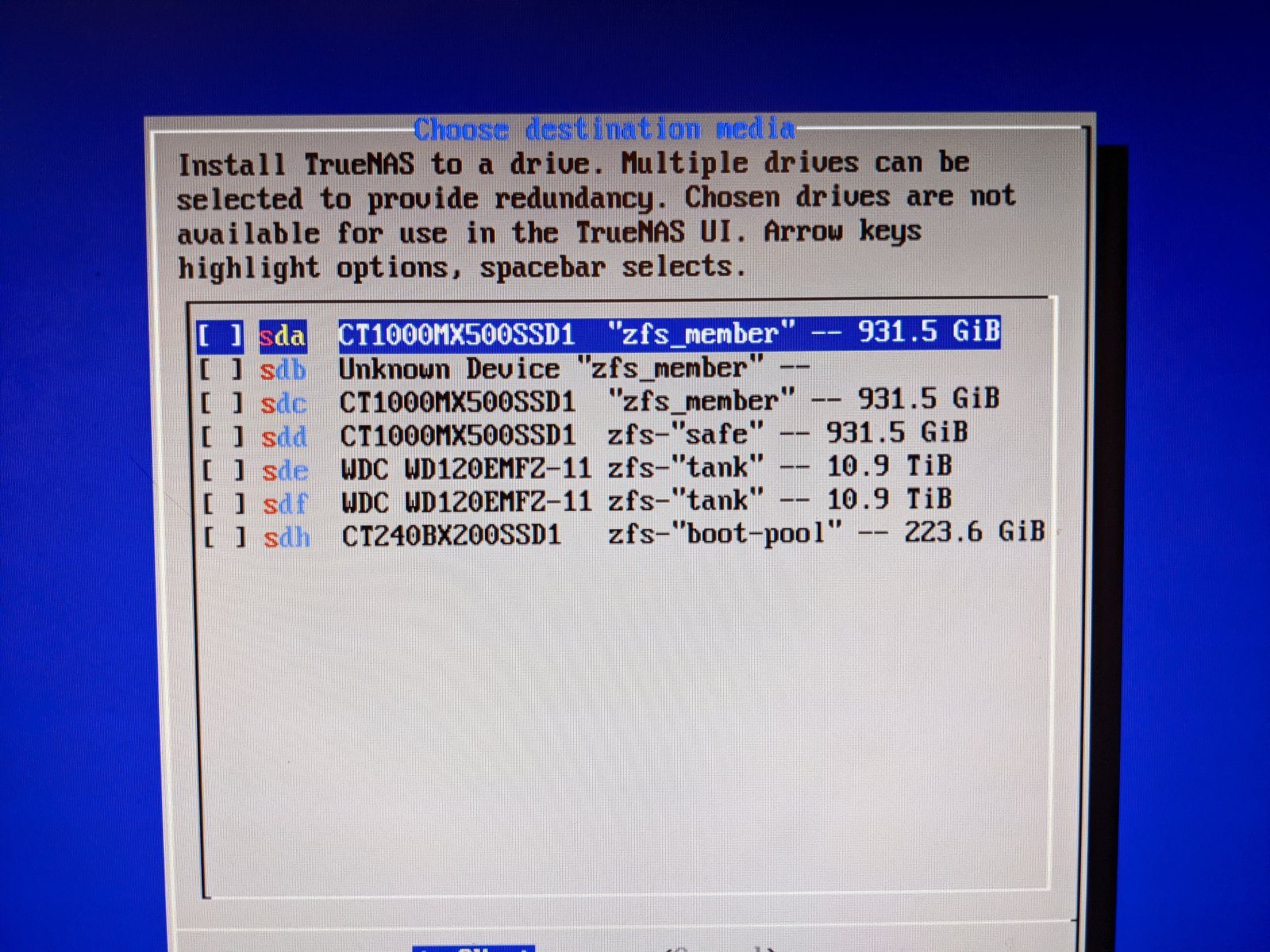

Code:

    root@truenas[~]# zpool status
      pool: boot-pool
     state: ONLINE
    status: Some supported and requested features are not enabled on the pool.
            The pool can still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
    config:

            NAME        STATE     READ WRITE CKSUM
            boot-pool   ONLINE       0     0     0
              sdg3      ONLINE       0     0     0

    errors: No known data errors

      pool: safe
     state: DEGRADED
    status: One or more devices could not be used because the label is missing or
            invalid. Sufficient replicas exist for the pool to continue
            functioning in a degraded state.
    action: Replace the device using 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
      scan: resilvered 1.75G in 00:00:07 with 0 errors on Tue Feb 15 03:44:26 2022
    remove: Removal of vdev 1 copied 111G in 0h6m, completed on Tue Dec 1 01:14:30 2020
            3.95M memory used for removed device mappings
    config:

            NAME                                      STATE     READ WRITE CKSUM
            safe                                      DEGRADED     0     0     0
              mirror-0                                DEGRADED     0     0     0
                60862b74-0e3f-11eb-8cb6-40167eaccd0a  ONLINE       0     0     0
                13780534977677316005                  UNAVAIL      0     0     0  was /dev/gptid/e57ba527-112e-11eb-b8bb-b42e99602caa

    errors: No known data errors

      pool: tank
     state: ONLINE
    status: Some supported and requested features are not enabled on the pool.
            The pool can still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
      scan: scrub repaired 0B in 09:39:48 with 0 errors on Sun Jan 16 09:39:49 2022
    config:

            NAME                                      STATE     READ WRITE CKSUM
            tank                                      ONLINE       0     0     0
              mirror-0                                ONLINE       0     0     0
                f90856ed-1089-11eb-b8bb-b42e99602caa  ONLINE       0     0     0
                f9308133-1089-11eb-b8bb-b42e99602caa  ONLINE       0     0     0
            special
              mirror-1                                ONLINE       0     0     0
                a418c1d7-343e-11eb-9044-b42e99602caa  ONLINE       0     0     0
                a41fb108-343e-11eb-9044-b42e99602caa  ONLINE       0     0     0

    errors: No known data errors
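
To make the affected member easier to spot in that output, here is a minimal sketch that filters `zpool status` text for `UNAVAIL` rows and prints the member's GUID together with the path after `was`. The function name `unavail_members` is made up for illustration, and the sketch assumes the row layout shown above (name, state, three counters, then an optional `was <path>`).

```shell
#!/bin/sh
# Hypothetical helper (not part of ZFS): read `zpool status` text on stdin
# and print "<name> <previous path>" for every member in state UNAVAIL.
# Assumes the column layout shown above.
unavail_members() {
    awk '
        $2 == "UNAVAIL" {
            was = ""
            # Look for the "was <path>" note that follows the counters.
            for (i = 3; i < NF; i++)
                if ($i == "was")
                    was = $(i + 1)
            if (was != "")
                print $1, was
            else
                print $1
        }
    '
}

# Usage on a live system:
#   zpool status safe | unavail_members
```

For the 'safe' pool above this would print the GUID `13780534977677316005` followed by the old `/dev/gptid/...` path, which is the FreeBSD-style name the member had under CORE.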

Then I tried listing the devices:

Code:

    root@truenas[~]# lsblk -o name,mountpoint,label,size,uuid
    NAME         MOUNTPOINT LABEL       SIZE UUID
    sda                               931.5G
    └─sda1                  tank      931.5G 10732666599134777332
    sdb                               931.5G
    sdc                               931.5G
    └─sdc1                  tank      931.5G 10732666599134777332
    sdd                               931.5G
    ├─sdd1                                2G
    │ └─md127                             2G
    │   └─md127  [SWAP]                   2G 1b4bd60f-eff6-48d3-84df-ab0cf79be068
    └─sdd2                  safe      929.5G 17027203547547092571
    sde                                10.9T
    ├─sde1                                2G
    │ └─md127                             2G
    │   └─md127  [SWAP]                   2G 1b4bd60f-eff6-48d3-84df-ab0cf79be068
    └─sde2                  tank       10.9T 10732666599134777332
    sdf                                10.9T
    ├─sdf1                                2G
    └─sdf2                  tank       10.9T 10732666599134777332
    sdg                                28.6G
    ├─sdg1                                1M
    ├─sdg2                  EFI         512M EE0E-4095
    └─sdg3                  boot-pool  28.1G 17966487355229984910
    zd0                                  50G
    zd16                                100G

So the device (sdb) is there, but it seems to have no partitions on it.
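
The same check can be done without reading the tree by eye. Below is a rough sketch that lists whole disks with no child devices. The function name `disks_without_partitions` is hypothetical, and it assumes input rows of the form `NAME TYPE [PKNAME]`, which is what I would expect `lsblk -rno NAME,TYPE,PKNAME` to print (with the parent column empty for whole disks).

```shell
#!/bin/sh
# Hypothetical helper: print whole disks that have no child devices.
# Reads "NAME TYPE [PKNAME]" rows on stdin. Assumption: rows for whole
# disks have no third field because their parent name is empty.
disks_without_partitions() {
    awk '
        $2 == "disk" { order[++n] = $1 }     # remember disks in input order
        NF >= 3      { has_child[$3] = 1 }   # third field names the parent
        END {
            for (i = 1; i <= n; i++)
                if (!(order[i] in has_child))
                    print order[i]
        }
    '
}

# Usage on a live system:
#   lsblk -rno NAME,TYPE,PKNAME | disks_without_partitions
```

Note that zvols such as zd0 and zd16 are also reported with type `disk`, so they would show up next to sdb in this list.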

So I moved back to TrueNAS CORE, and there the disk is found and the pool is fine.

I also tried booting the SCALE installer again, and at the point of choosing the system device it is already apparent that sdb is not correctly identified as the second device of the pool 'safe'.

Does anybody have an idea what is going on and how to fix this?

Thanks in advance.