Hi all,

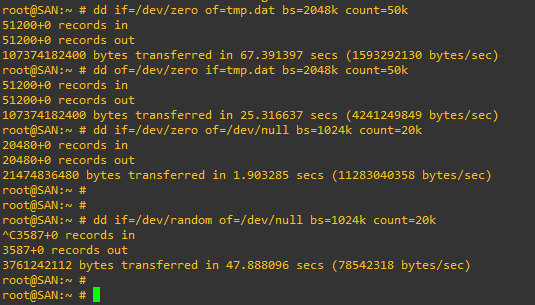

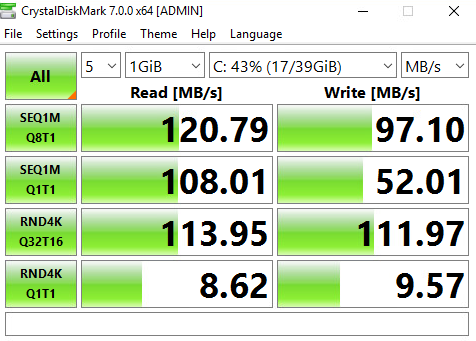

I am moving my current virtualized setup from a Dell R710:

- X5670s (VM has 4 vCPUs)

- Gigabit NIC

- VM given 48 GB RAM

- Passthrough of the HBA

- 6 x 6 WD Reds, 5400 rpm (EFRX)

- Pool is mirrored, with no SLOG or L2ARC

To a new host:

- 2 x E5-2640 v3

- 256 GB DDR4 RAM

- 10 Gbit NIC

- Thinking like-for-like specs for the VM

- Disks will be 2 x 200 GB enterprise SSDs

- 8 x 1.2 TB 10k SAS HDDs

I am also wondering whether I should stick with mirrors or go with RAIDZ1/2. The new drives are faster, so will I be able to saturate 10 Gbit? What would be a good approach for the pool config? I am looking for speed, but would rather avoid mirrors if possible because of the space loss. I will likely expand the pool with more drives over time, but will have the 8 drives initially.
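To put rough numbers on the space trade-off, here is a back-of-the-envelope sketch in Python. It assumes all 8 x 1.2 TB drives go into either four 2-way mirror vdevs or a single RAIDZ vdev, and it ignores ZFS metadata, padding, and free-space headroom, so real usable space will be lower. The helper names `usable_tb` and `link_mb_per_s` are made up for illustration.

```python
# Rough capacity and line-rate arithmetic for the 8 x 1.2 TB drives.
# Assumption: one RAIDZ vdev or a stripe of 2-way mirrors, no ZFS overhead.

DRIVES = 8
DRIVE_TB = 1.2   # per-drive size from the list above
LINK_GBIT = 10   # target network link


def usable_tb(layout: str, drives: int = DRIVES, size_tb: float = DRIVE_TB) -> float:
    """Raw data capacity in TB for the given layout."""
    if layout == "mirror":      # 2-way mirrors: half the drives hold copies
        data_drives = drives // 2
    elif layout == "raidz1":    # one drive's worth of parity
        data_drives = drives - 1
    elif layout == "raidz2":    # two drives' worth of parity
        data_drives = drives - 2
    else:
        raise ValueError(f"unknown layout: {layout}")
    return round(data_drives * size_tb, 2)


def link_mb_per_s(gbit: float = LINK_GBIT) -> float:
    """Line rate in decimal MB/s, before any protocol overhead."""
    return gbit * 1000 / 8


if __name__ == "__main__":
    for layout in ("mirror", "raidz1", "raidz2"):
        print(f"{layout:7s} {usable_tb(layout):5.2f} TB raw")
    print(f"{LINK_GBIT} Gbit is about {link_mb_per_s():.0f} MB/s")
```

Under those assumptions, mirrors give 4.8 TB raw, RAIDZ1 8.4 TB, and RAIDZ2 7.2 TB, and the pool would need to sustain roughly 1250 MB/s to fill a 10 Gbit link (versus about 125 MB/s on the current 1 Gbit link).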

Open to any input. It will be used for Plex (the Plex VM will sit on the same host) and also to serve iSCSI targets for its own host and 2 other ESXi hosts, which run my home lab with a bunch of VMs.

Cheers

I have added some dd results and also CrystalDiskMark results from a remote host; it and FreeNAS are connected by 1 Gbit.
