Stux

Installing macOS Sierra in ESXi 6.5U1

Firstly, to install Mac OS X, you need to build an installer ISO, and to do that you will need an instance of Mac OS X.

This is done on a Mac.

Building a Sierra ISO

You will need a copy of the "Install macOS Sierra.app", if you don't already have that, you can download it by going to the app store and searching for "macos sierra"

Apple's instructions are here:

https://support.apple.com/en-au/HT201475

Once you have download it, you need to create an ISO image from the Installer.

A script to do this can be found here:

https://gist.github.com/julianxhokaxhiu/6ed6853f3223d0dd5fdffc4799b3a877

I've reproduced the script, with an addition of an

Assuming you have "Install macOS Sierra.app" in your /Applications directory, the above bash script can be used to create the ISO. Just paste this into a .sh script, then execute it in a shell.

"Sierra.iso" will appear in a few moments on your desktop.

The output in your shell will look something like this:

and if you run

you should see something like this:

Next, upload the Sierra ISO to a data store on your ESXi host.

Unlocking ESXi's macOS support

Secondly, ESXi has support for Mac OS X guests, but it needs to be "unlocked".

You can unlock this support for macOS guests using VMware Unlocker, which is a set of scripts to enable/disable the support for macOS guests in the various vmWare hypervisors, including ESXi.

The official forum thread is:

http://www.insanelymac.com/forum/files/file/339-unlocker/

The latest released version is 2.08, but the version you need for ESXi 6.5U1 is 2.09RC, which can be downloaded from the github repository:

https://github.com/DrDonk/unlocker

Just click the "Clone or download" button, then Download ZIP. Rename the zip to "unlocker-209RC.zip". On a mac it'll end up in the Trash after decompressing.

Upload the zip to your datastore...

The following instructions and cautions are from readme.txt included with vmware-unlocker.

Note: the instructions for disabling the unlocker if your ESXi should fail to boot. In my testing this did not happen.

In the ESXi shell, I

See the transcript below:

NOTE: If you need to update ESXi, run the

Installing VMware tools for macOS into ESXi

Once unlocker is installed, and vmware is safely rebooted, you need to install the macOS vmware tools.

VMware's macOS vmware tools iso is no longer included in the base installation, you need to download it separately, and load it into ESXi.

At the time of writing, the latest version is 10.1.10,

https://my.vmware.com/group/vmware/details?downloadGroup=VMTOOLS10110&productId=614

You want to download the "VMware Tools packages for FreeBSD, Solaris and OS X". I chose the VMware-Tools-10.1.10-other-6082533.zip zipped version, over the tgz.

Again, upload to your datastore.

Then ssh into the ESXi shell. cd to the datastore (ie in the /vmfs/volumes directory), and unzip.

Inside the unziped directory, is a vmtools directory, and inside that is "darwin.iso", this needs to be copied to

/usr/lib/vmware/isoimages/darwin.iso

My transcript:

Creating a macOS Sierra VM

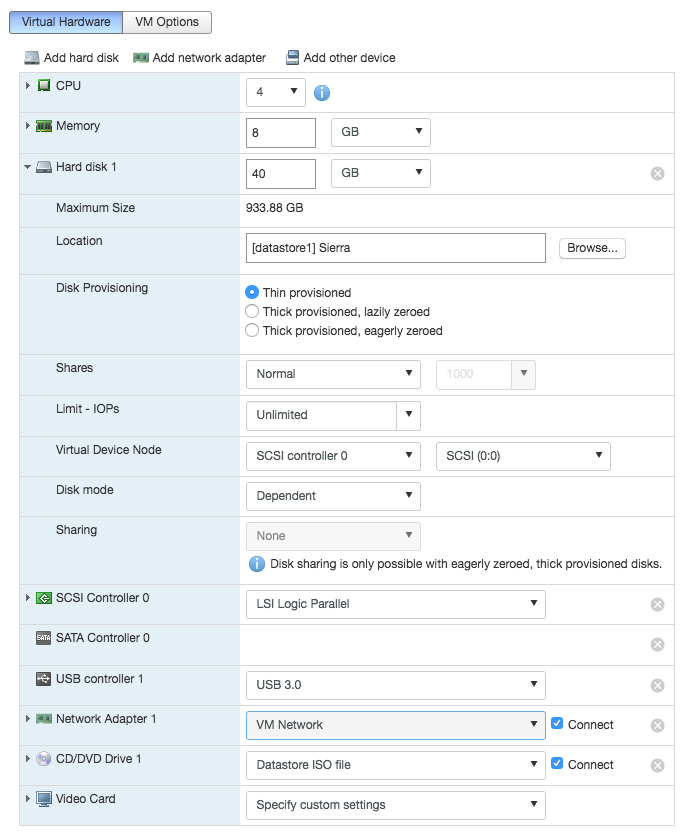

Next create a VM. Give it enough cores/ram for Sierra, thin provision the disk if you want, SCSI controller set to Parallel is optimal, select the Sierra ISO for the CDRom, Select the right VM Network for the network controller, select USB3.0 for USB controller.

ESXi only supports the E1000 network adapter for macOS.

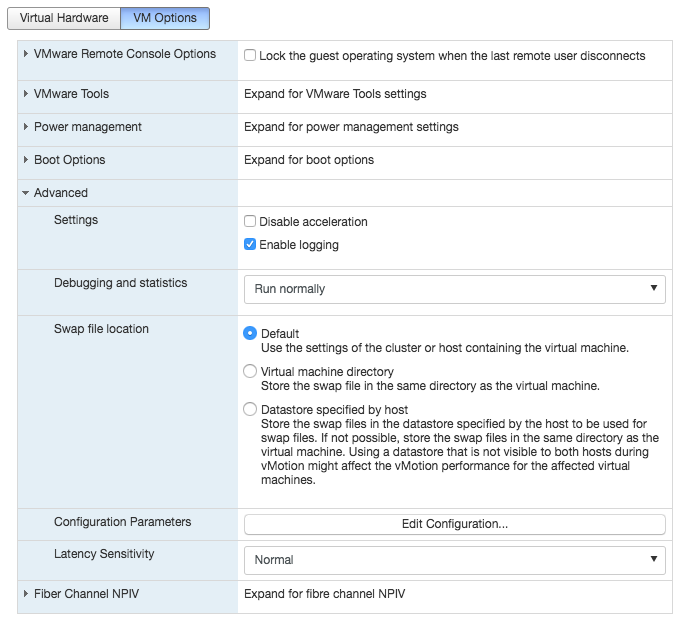

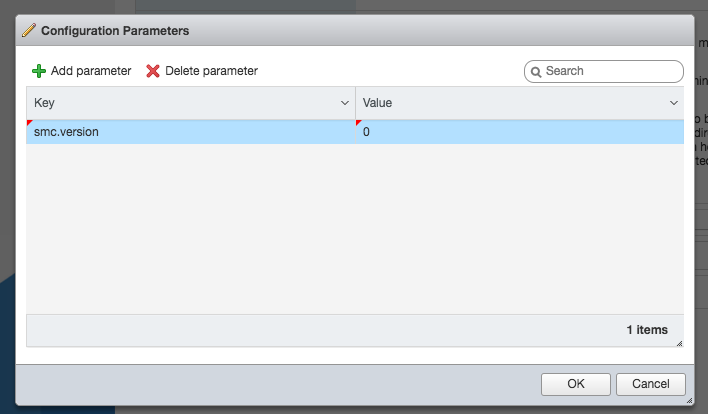

And then there is one very important thing to do in the VM options pane.

Go to Advanced section, then click the "Edit Configuration..." button

Then "Add parameter" for "smc.version" with value of "0"

Note: smc.version is set to 0

Without this parameter, the VM will fail to boot.

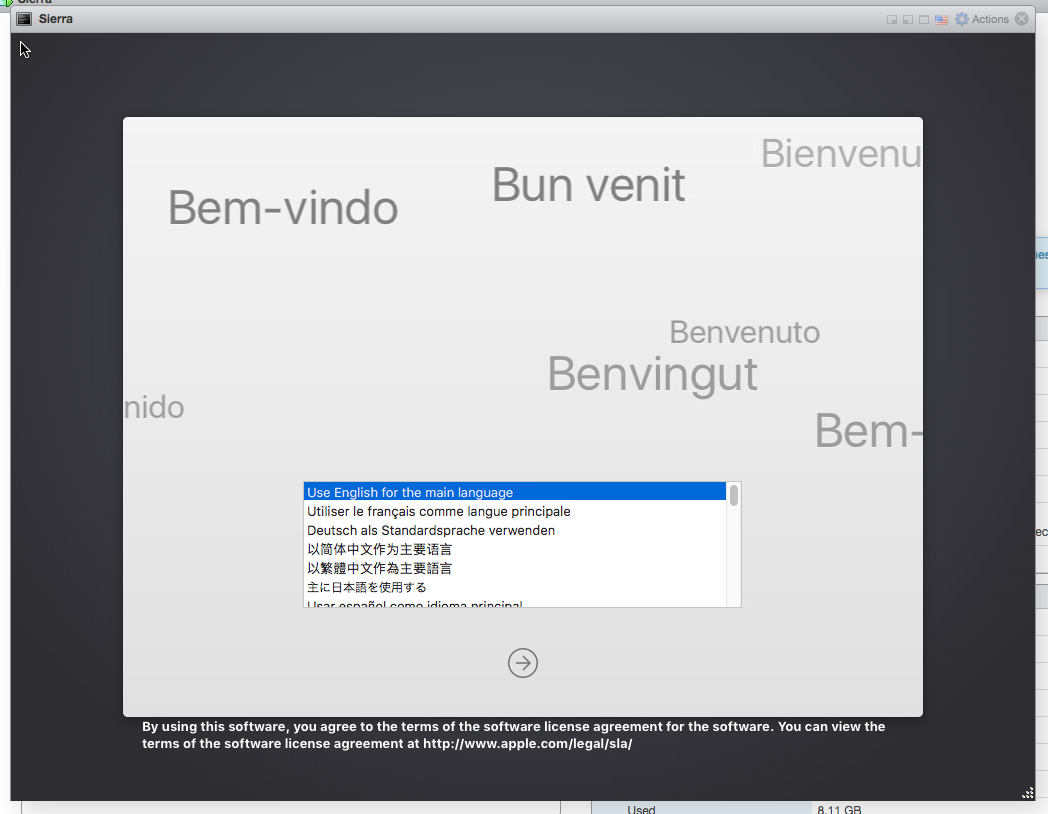

Now you can save the VM and start it up. It should boot into the Sierra installer.

Select your language

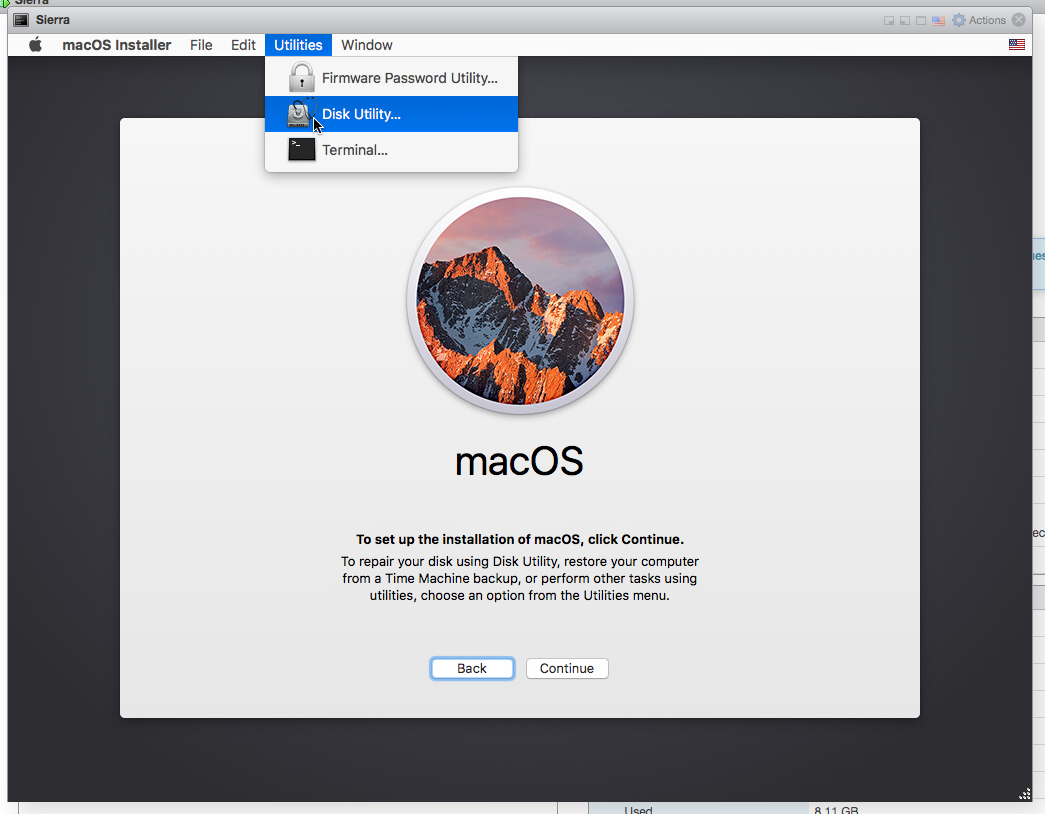

Then on the next screen, select Disk Utility from the Utilities menu

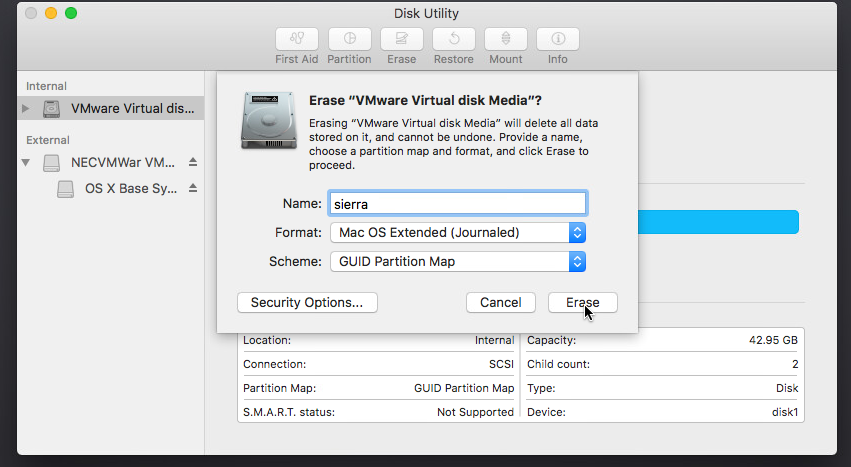

Once Disk Utility loads, select the VMware Virtual disk and click the Erase button to erase it. The defaults are correct.

Then quit Disk Utility.

Next continue and install Sierra onto the disk you initialised previously.

After an eternity it should finish installing and begin a countdown to restart. Choose Shutdown from the Apple menu. If you miss it, just shutdown anyway.

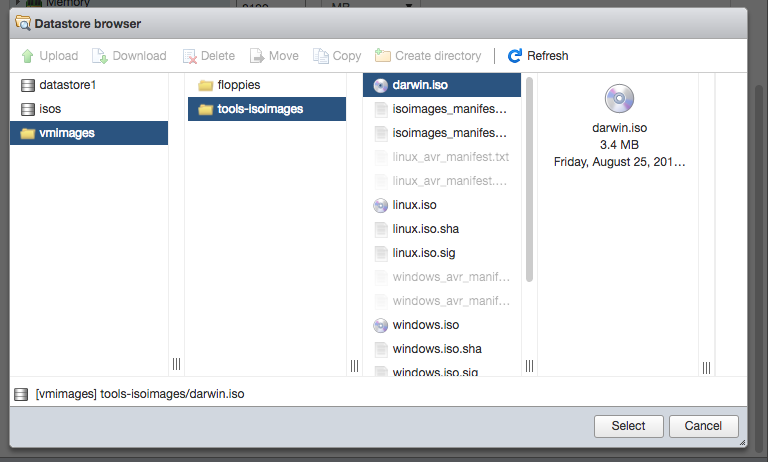

Next edit the VM, change the CD ISO file. Navigate to vmimages/tools-isoimages/darwin.iso

Save, and then power-on the VM.

Go through the post-install configuration setup as per normal.

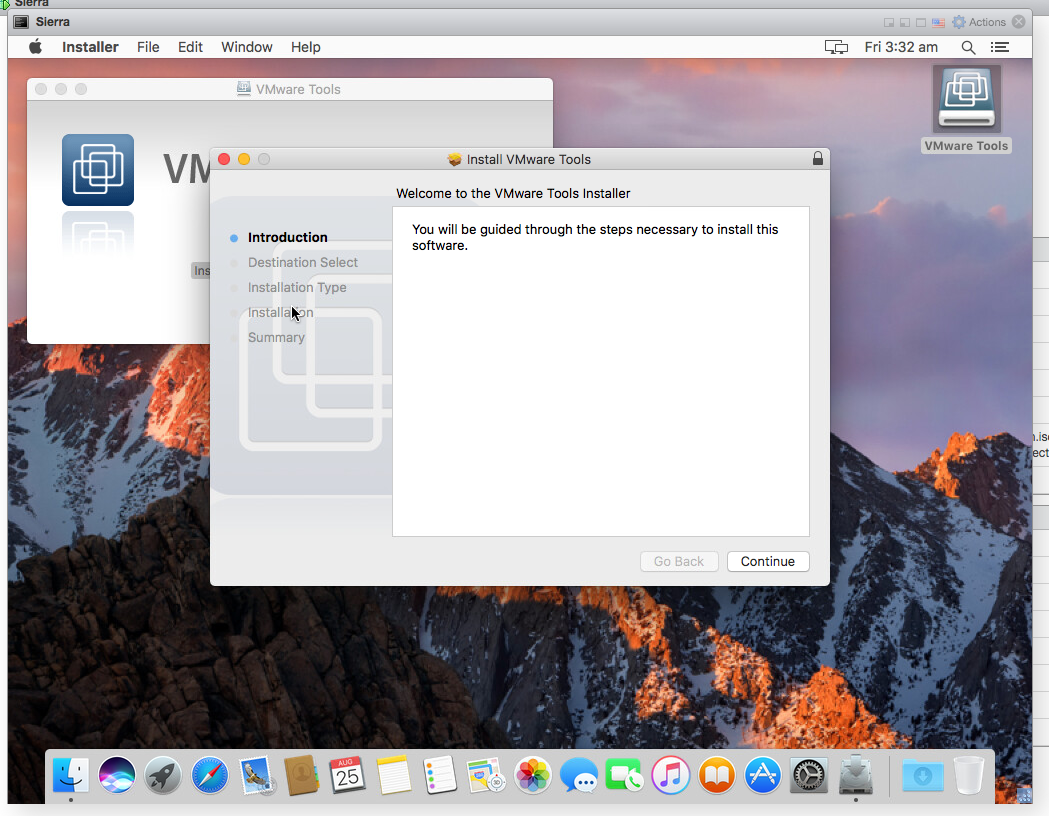

Once at the Desktop, Install VMware tools.

It'll force you to restart.

After it restarts... shutdown.

Edit the VM, and remove the CD drive.

Save.

And you're done :)

Now you can install all the macOS software and servers/services you want to.

Why?

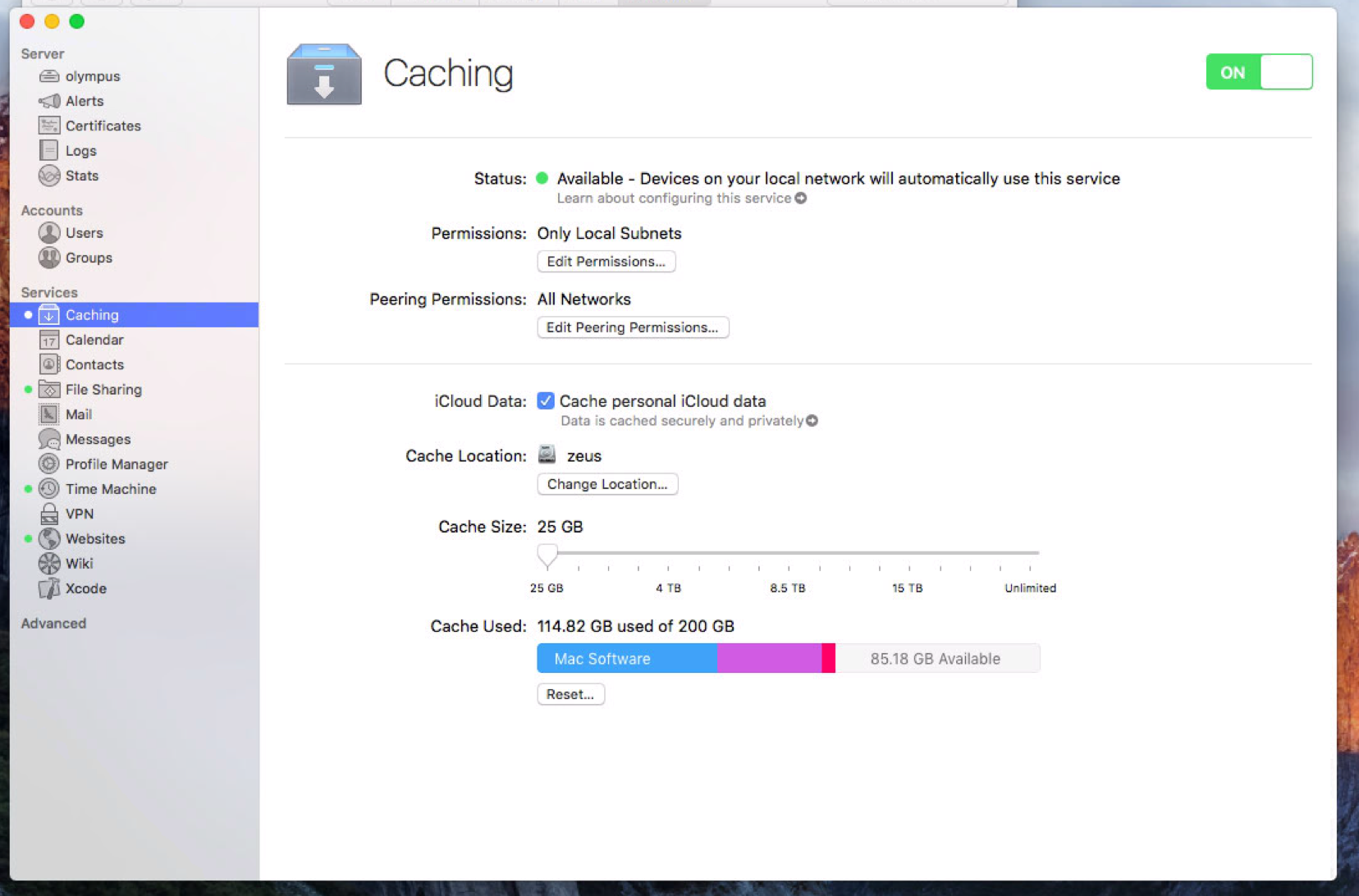

I wanted to virtualize away a pre-existing macOS server, since a lot of its functionality has been taken over by FreeNAS. I no longer need its Time Machine serving capabilities, or its SMB/AFP serving capabilities, but macOS Server does provide a useful caching service for Macs, iPhones, iPads, AppleTVs, etc on your network.

All software updates, store downloads, media, icloud data etc downloaded by any Apple device on the network will be through-cached on the server. Even music/video.

Here's one I prepared earlier:

Another reason to install macOS Server would be to use the Xcode Server...

Aside from macOS Server, you might want to...

Host an AirPrint gateway...

Host other mac only servers...

And perhaps just to test software on legacy versions of macOS.

With everything backed by FreeNAS/ZFS hosted iSCSi or NFS shares.

If you want to, you can also install GlobalSAN iSCSI directly in the macOS instance, add the Storage Network as a second vNIC and have the mac directly connect to an HFS+ zvol (ZFS backed) via iSCSI.

You can back up at 20gbps to a Time Machine share on your FreeNAS instance.

If you have a need to virtualize macOS, this is how to do it.

Firstly, to install Mac OS X, you need to build an installer ISO, and to do that you will need an instance of Mac OS X.

This is done on a Mac.

Building a Sierra ISO

You will need a copy of "Install macOS Sierra.app". If you don't already have it, you can download it by going to the App Store and searching for "macos sierra".

Apple's instructions are here:

https://support.apple.com/en-au/HT201475

Once you have downloaded it, you need to create an ISO image from the installer.

A script to do this can be found here:

https://gist.github.com/julianxhokaxhiu/6ed6853f3223d0dd5fdffc4799b3a877

I've reproduced the script below, with the addition of an rm /tmp/Sierra.cdr.dmg step to remove the intermediate image. Note that the three lines ending in "$" are cut off in this listing; copy the full lines from the gist above.

Code:

    #!/bin/bash
    #
    # Credits to fuckbecauseican5 from https://www.reddit.com/r/hackintosh/comments/4s561a/macos_sierra_16a$
    # Adapted to work with the official image available into Mac App Store
    #
    # Enjoy!

    hdiutil attach /Applications/Install\ macOS\ Sierra.app/Contents/SharedSupport/InstallESD.dmg -noverify$
    hdiutil create -o /tmp/Sierra.cdr -size 7316m -layout SPUD -fs HFS+J
    hdiutil attach /tmp/Sierra.cdr.dmg -noverify -nobrowse -mountpoint /Volumes/install_build
    asr restore -source /Volumes/install_app/BaseSystem.dmg -target /Volumes/install_build -noprompt -nover$
    rm /Volumes/OS\ X\ Base\ System/System/Installation/Packages
    cp -rp /Volumes/install_app/Packages /Volumes/OS\ X\ Base\ System/System/Installation/
    cp -rp /Volumes/install_app/BaseSystem.chunklist /Volumes/OS\ X\ Base\ System/BaseSystem.chunklist
    cp -rp /Volumes/install_app/BaseSystem.dmg /Volumes/OS\ X\ Base\ System/BaseSystem.dmg
    hdiutil detach /Volumes/install_app
    hdiutil detach /Volumes/OS\ X\ Base\ System/
    hdiutil convert /tmp/Sierra.cdr.dmg -format UDTO -o /tmp/Sierra.iso
    rm /tmp/Sierra.cdr.dmg
    mv /tmp/Sierra.iso.cdr ~/Desktop/Sierra.iso

Assuming you have "Install macOS Sierra.app" in your /Applications directory, the above bash script can be used to create the ISO. Just paste this into a .sh script, then execute it in a shell.
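Since the script assumes the installer lives in /Applications, it may be worth checking that before running it. This is only a sketch: installer_ready is a hypothetical helper, not part of the gist, and it simply tests for the InstallESD.dmg file that the script attaches.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original script): succeed only if the
# installer app contains the InstallESD.dmg that the ISO script attaches.
installer_ready() {
    # $1: path to the installer app; defaults to the location the script assumes.
    local app="${1:-/Applications/Install macOS Sierra.app}"
    [ -f "$app/Contents/SharedSupport/InstallESD.dmg" ]
}

# Example use:
#   installer_ready && sh ./create-installer-iso.sh
```

If the check fails, download the installer again from the App Store before building the ISO.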

"Sierra.iso" will appear in a few moments on your desktop.

The output in your shell will look something like this:

Code:

    stux$ sh ./create-installer-iso.sh
    /dev/disk2          GUID_partition_scheme
    /dev/disk2s1        EFI
    /dev/disk2s2        Apple_HFS                       /Volumes/install_app
    .......................................................................................................
    created: /tmp/Sierra.cdr.dmg
    /dev/disk3          Apple_partition_scheme
    /dev/disk3s1        Apple_partition_map
    /dev/disk3s2        Apple_HFS                       /Volumes/install_build
        Validating target...done
        Validating source...done
        Retrieving scan information...done
        Validating sizes...done
        Restoring  ....10....20....30....40....50....60....70....80....90....100
        Remounting target volume...done
    "disk2" unmounted.
    "disk2" ejected.
    "disk3" unmounted.
    "disk3" ejected.
    Reading Driver Descriptor Map (DDM : 0)…
    Reading Apple (Apple_partition_map : 1)…
    Reading disk image (Apple_HFS : 2)…
    .......................................................................................................
    Elapsed Time: 34.573s
    Speed: 211.6Mbytes/sec
    Savings: 0.0%
    created: /tmp/Sierra.iso.cdr

and if you run ls -l ~/Desktop/Sierra.iso you should see something like this:

Code:

    stux$ ls -l ~/Desktop/Sierra.iso
    -rw-r--r--  1 stux  wheel  7671382016 25 Aug 19:07 /Users/stux/Desktop/Sierra.iso
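The size in that listing is exactly the 7316m the script passes to hdiutil create (7316 × 1024 × 1024 = 7671382016 bytes), so a quick size check can catch a truncated image before you upload it. The following is a minimal sketch; iso_at_least is a hypothetical helper, and the threshold you pass is your own choice.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the file exists and is at least
# min_bytes long. Any threshold you pass in is your own assumption.
iso_at_least() {
    local iso="$1" min_bytes="$2" size
    [ -f "$iso" ] || return 1
    # Some wc implementations pad the count with spaces, so strip whitespace.
    size=$(wc -c < "$iso" | tr -d '[:space:]')
    [ "$size" -ge "$min_bytes" ]
}

# Example use, with the size the script requests from hdiutil:
#   iso_at_least ~/Desktop/Sierra.iso $((7316 * 1024 * 1024)) && echo "size looks right"
```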

Next, upload the Sierra ISO to a data store on your ESXi host.
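If you prefer the command line to the datastore browser, scp works too, provided SSH is enabled on the host. The sketch below only builds the destination string; datastore_target is a hypothetical helper, and the host name esxi.local and the downloads folder in the example are assumptions you should replace with your own.

```shell
#!/bin/bash
# Hypothetical helper: build an scp destination for a folder on a datastore.
# Datastores are reachable under /vmfs/volumes/<datastore name> on the host.
datastore_target() {
    local host="$1" datastore="$2" folder="$3"
    printf 'root@%s:/vmfs/volumes/%s/%s/' "$host" "$datastore" "$folder"
}

# Example use (host name and folder are assumptions):
#   scp ~/Desktop/Sierra.iso "$(datastore_target esxi.local datastore1 downloads)"
```

Datastore or folder names containing spaces would need extra quoting for the remote side, which this sketch does not attempt.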

Unlocking ESXi's macOS support

Secondly, ESXi has support for Mac OS X guests, but it needs to be "unlocked".

You can unlock this support for macOS guests using VMware Unlocker, which is a set of scripts to enable/disable the support for macOS guests in the various VMware hypervisors, including ESXi.

The official forum thread is:

http://www.insanelymac.com/forum/files/file/339-unlocker/

The latest released version is 2.08, but the version you need for ESXi 6.5U1 is 2.09RC, which can be downloaded from the github repository:

https://github.com/DrDonk/unlocker

Just click the "Clone or download" button, then Download ZIP, and rename the zip to "unlocker-209RC.zip". On a Mac, the browser may decompress the download automatically, in which case the zip itself ends up in the Trash.

Upload the zip to your datastore...

The following instructions and cautions are from readme.txt included with vmware-unlocker.

7. ESXi

-------

You will need to transfer the zip file to the ESXi host either using vSphere client or SCP.

Once uploaded you will need to either use the ESXi support console or use SSH to run the commands. Use the unzip command to extract the files.

<<< WARNING: use a datastore volume to store and run the scripts >>>

Please note that you will need to reboot the host for the patches to become active.

The patcher is embedded in a shell script local.sh which is run at boot from /etc/rc.local.d.

You may need to ensure the ESXi scripts have execute permissions by running chmod +x against the 2 files.

esxi-install.sh - patches VMware

esxi-uninstall.sh - restores VMware

There is a boot option for ESXi that disables the unlocker if there is a problem.

At the ESXi boot screen press shift + o to get the boot options and add nounlocker.

Note:

1. Any changes you have made to local.sh will be lost. If you have made changes to that file, you will need to merge them into the supplied local.sh file.

2. The unlocker needs to be re-run after an upgrade or patch is installed on the ESXi host.

3. The macOS VMware tools are no longer shipped in the image from ESXi 6.5. They have to be downloaded and installed manually onto the ESXi host. For additional details see this web page:

https://blogs.vmware.com/vsphere/2016/10/introducing-vmware-tools-10-1-10-0-12.html

Note the instructions above for disabling the unlocker should your ESXi host fail to boot. In my testing this did not happen.
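The readme's chmod advice can be wrapped in a small check so that permissions are only changed when needed. This is a sketch only; ensure_executable is a hypothetical helper, written in plain sh on the assumption that it will be run in the ESXi shell from inside the unzipped unlocker directory.

```shell
#!/bin/sh
# Hypothetical helper: add the execute bit to each named script that lacks it.
# Returns non-zero if any of the named files does not exist.
ensure_executable() {
    for script in "$@"; do
        [ -f "$script" ] || { echo "missing: $script" >&2; return 1; }
        [ -x "$script" ] || chmod +x "$script"
    done
}

# Example use, from inside the unzipped unlocker directory:
#   ensure_executable esxi-install.sh esxi-uninstall.sh
```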

In the ESXi shell, I cd'd to the datastore where I uploaded unlocker, unzipped it, renamed the directory, then executed esxi-install.sh, and rebooted. It already had the right permissions.

See the transcript below:

Code:

    [root@localhost:~] cd /vmfs/volumes/datastore1/downloads
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads] unzip unlocker-209RC.zip
    Archive:  unlocker-209RC.zip
       creating: unlocker-master/
      inflating: unlocker-master/.gitattributes
      inflating: unlocker-master/.gitignore
      inflating: unlocker-master/dumpsmc.exe
      inflating: unlocker-master/dumpsmc.py
      inflating: unlocker-master/esxi-build.sh
      inflating: unlocker-master/esxi-config.py
      inflating: unlocker-master/esxi-install.sh
      inflating: unlocker-master/esxi-uninstall.sh
      inflating: unlocker-master/gettools.exe
      inflating: unlocker-master/gettools.py
      inflating: unlocker-master/license.txt
      inflating: unlocker-master/lnx-install.sh
      inflating: unlocker-master/lnx-uninstall.sh
      inflating: unlocker-master/lnx-update-tools.sh
      inflating: unlocker-master/local-prefix.sh
      inflating: unlocker-master/local-suffix.sh
      inflating: unlocker-master/local.sh
      inflating: unlocker-master/osx-install.sh
      inflating: unlocker-master/osx-uninstall.sh
      inflating: unlocker-master/readme.txt
      inflating: unlocker-master/smctest.sh
      inflating: unlocker-master/unlocker.exe
      inflating: unlocker-master/unlocker.py
      inflating: unlocker-master/win-install.cmd
      inflating: unlocker-master/win-test-install.cmd
      inflating: unlocker-master/win-uninstall.cmd
      inflating: unlocker-master/win-update-tools.cmd
       creating: unlocker-master/wip/
      inflating: unlocker-master/wip/__init__.py
      inflating: unlocker-master/wip/argtest.py
      inflating: unlocker-master/wip/extract-smbiosdb.py
      inflating: unlocker-master/wip/generate.py
      inflating: unlocker-master/wip/smbiosdb.json
      inflating: unlocker-master/wip/smbiosdb.plist
      inflating: unlocker-master/wip/smbiosdb.txt
      inflating: unlocker-master/wip/unlocker-asmpatch.diff
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads] mv unlocker-master unlocker-209RC
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/unlocker-209RC] ls
    dumpsmc.exe        esxi-install.sh    license.txt        local-prefix.sh    osx-uninstall.sh   unlocker.py            win-update-tools.cmd
    dumpsmc.py         esxi-uninstall.sh  lnx-install.sh     local-suffix.sh    readme.txt         win-install.cmd        wip
    esxi-build.sh      gettools.exe       lnx-uninstall.sh   local.sh           smctest.sh         win-test-install.cmd
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/unlocker-209RC] ./esxi-install.sh
    VMware Unlocker 2.0.9
    ===============================
    Copyright: Dave Parsons 2011-16
    Installing local.sh
    Adding useVmxSandbox
    Saving current state in /bootbank
    Clock updated.
    Time: 09:28:21   Date: 08/25/2017   UTC
    Success - please now restart the server!
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/unlocker-209RC] reboot

NOTE: If you need to update ESXi, run the esxi-uninstall.sh script, update, then run the esxi-install.sh script again.

Installing VMware tools for macOS into ESXi

Once the unlocker is installed and ESXi has safely rebooted, you need to install the macOS VMware Tools.

VMware's macOS VMware Tools ISO is no longer included in the base installation, so you need to download it separately and load it into ESXi.

At the time of writing, the latest version is 10.1.10:

https://my.vmware.com/group/vmware/details?downloadGroup=VMTOOLS10110&productId=614

You want to download the "VMware Tools packages for FreeBSD, Solaris and OS X". I chose the VMware-Tools-10.1.10-other-6082533.zip zipped version, over the tgz.

Again, upload to your datastore.

Then ssh into the ESXi shell, cd to the datastore (i.e. in the /vmfs/volumes directory), and unzip:

unzip VMware-Tools-10.1.10-other-6082533.zip

Inside the unzipped directory is a vmtools directory, and inside that is "darwin.iso". This needs to be copied to

/usr/lib/vmware/isoimages/darwin.iso

My transcript:

Code:

    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads] cd VMware-Tools-10.1.10-other-6082533/vmtools/
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/VMware-Tools-10.1.10-other-6082533/vmtools] ls
    darwin.iso                    freebsd.iso.sha               solaris.iso.sig
    darwin.iso.sha                freebsd.iso.sig               solaris_avr_manifest.txt
    darwin.iso.sig                solaris.iso                   solaris_avr_manifest.txt.sig
    freebsd.iso                   solaris.iso.sha
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/VMware-Tools-10.1.10-other-6082533/vmtools] cp darwin.iso /usr/lib/vmware/isoimages/darwin.iso
    [root@localhost:/vmfs/volumes/599a6db2-d2f3f71f-617a-ac1f6b10ec96/downloads/VMware-Tools-10.1.10-other-6082533/vmtools] ls -l /usr/lib/vmware/isoimages/darwin.iso
    -rwx------    1 root     root       3561472 Aug 25 10:17 /usr/lib/vmware/isoimages/darwin.iso
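The copy and the follow-up listing can be folded into one step that also confirms the copy is complete. This is a sketch under assumptions: copy_and_check is a hypothetical helper, it assumes wc and tr are available in your ESXi shell, and matching byte counts are only a cheap sanity check, not a checksum.

```shell
#!/bin/sh
# Hypothetical helper: copy a file, then confirm the copy has the same byte
# count as the source. Returns non-zero if the copy fails or the sizes differ.
copy_and_check() {
    src="$1"
    dest="$2"
    cp "$src" "$dest" || return 1
    src_size=$(wc -c < "$src" | tr -d '[:space:]')
    dest_size=$(wc -c < "$dest" | tr -d '[:space:]')
    [ "$src_size" = "$dest_size" ]
}

# Example use, from inside the vmtools directory:
#   copy_and_check darwin.iso /usr/lib/vmware/isoimages/darwin.iso
```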

Creating a macOS Sierra VM

Next create a VM. Give it enough cores/ram for Sierra, thin provision the disk if you want, SCSI controller set to Parallel is optimal, select the Sierra ISO for the CDRom, Select the right VM Network for the network controller, select USB3.0 for USB controller.

ESXi only supports the E1000 network adapter for macOS.

And then there is one very important thing to do in the VM options pane.

Go to Advanced section, then click the "Edit Configuration..." button

Then "Add parameter" for "smc.version" with value of "0"

Note: smc.version is set to 0

Without this parameter, the VM will fail to boot.
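Advanced parameters like this are stored in the VM's .vmx file as lines of the form key = "value", so you can confirm from the ESXi shell that the setting was saved. The sketch below assumes exactly that spacing; vmx_has is a hypothetical helper, and the .vmx path in the example is an assumption that depends on your datastore and VM name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the .vmx file contains the line
#   key = "value"
# -F matches the text literally, -x requires the whole line to match,
# and -q suppresses output so only the exit status is used.
vmx_has() {
    vmx="$1"
    key="$2"
    value="$3"
    grep -Fxq "$key = \"$value\"" "$vmx"
}

# Example use (the path is an assumption):
#   vmx_has /vmfs/volumes/datastore1/Sierra/Sierra.vmx smc.version 0 || echo "smc.version missing"
```

The same check can be pointed at other settings, for example ethernet0.virtualDev with the value e1000 for the network adapter.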

Now you can save the VM and start it up. It should boot into the Sierra installer.

Select your language

Then on the next screen, select Disk Utility from the Utilities menu

Once Disk Utility loads, select the VMware Virtual disk and click the Erase button to erase it. The defaults are correct.

Then quit Disk Utility.

Next continue and install Sierra onto the disk you initialised previously.

After an eternity it should finish installing and begin a countdown to restart. Choose Shutdown from the Apple menu. If you miss it, just shutdown anyway.

Next edit the VM, change the CD ISO file. Navigate to vmimages/tools-isoimages/darwin.iso

Save, and then power-on the VM.

Go through the post-install configuration setup as per normal.

Once at the Desktop, Install VMware tools.

It'll force you to restart.

After it restarts... shutdown.

Edit the VM, and remove the CD drive.

Save.

And you're done :)

Now you can install all the macOS software and servers/services you want to.

Why?

I wanted to virtualize away a pre-existing macOS server, since a lot of its functionality has been taken over by FreeNAS. I no longer need its Time Machine serving capabilities, or its SMB/AFP serving capabilities, but macOS Server does provide a useful caching service for Macs, iPhones, iPads, AppleTVs, etc on your network.

All software updates, store downloads, media, iCloud data, etc. downloaded by any Apple device on the network will be through-cached on the server. Even music/video.

Here's one I prepared earlier:

Another reason to install macOS Server would be to use the Xcode Server...

Aside from macOS Server, you might want to...

Host an AirPrint gateway...

Host other Mac-only servers...

And perhaps just to test software on legacy versions of macOS.

With everything backed by FreeNAS/ZFS hosted iSCSI or NFS shares.

If you want to, you can also install GlobalSAN iSCSI directly in the macOS instance, add the Storage Network as a second vNIC and have the Mac connect directly to an HFS+ zvol (ZFS backed) via iSCSI.

You can back up at 20 Gbps to a Time Machine share on your FreeNAS instance.

If you have a need to virtualize macOS, this is how to do it.
