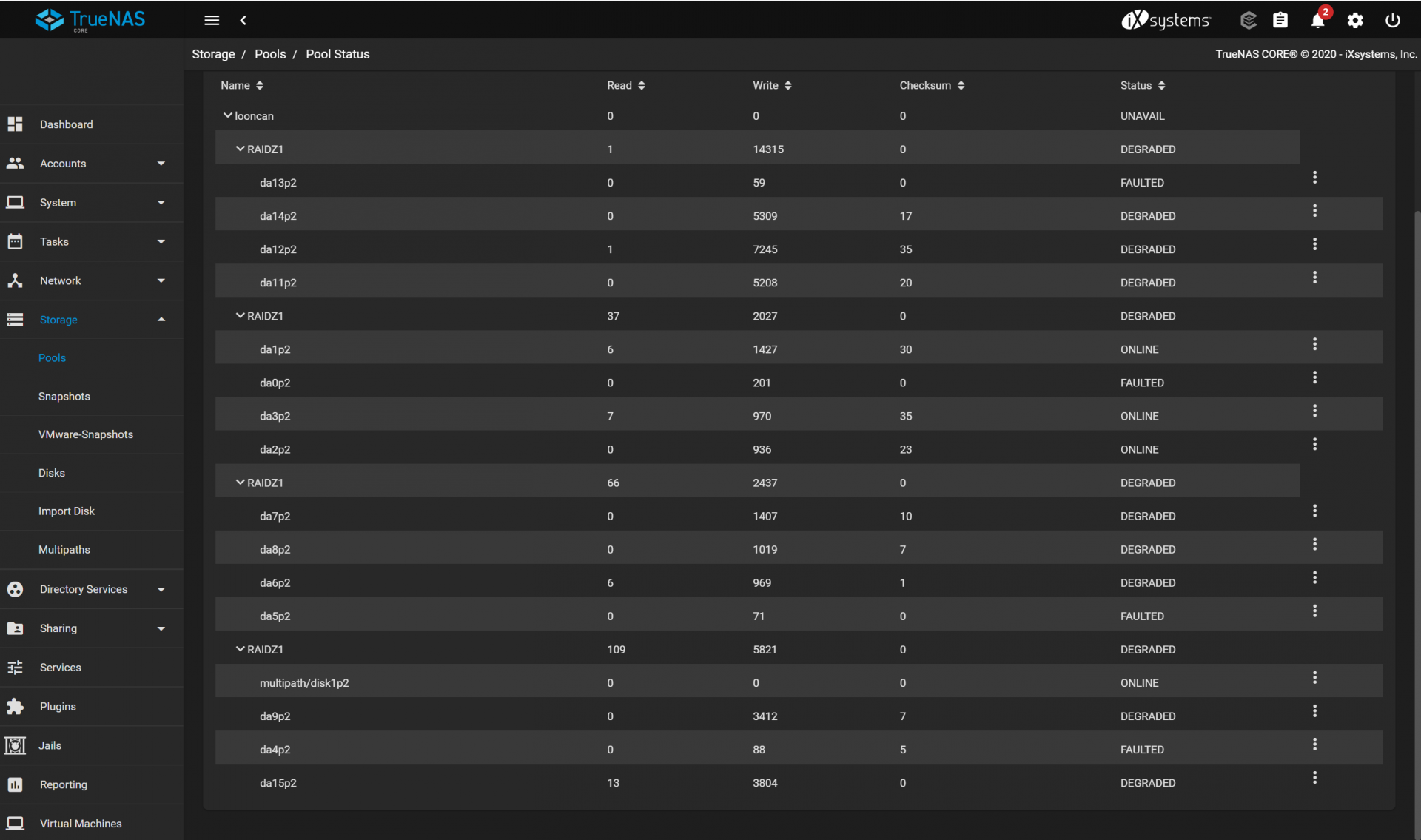

Initially, 4 drives reported as degraded or faulted at the same time in my pool, which consists of 4 RAIDZ1 vdevs of 4 SAS HDDs each (picture attached). I figured I just had the worst luck. Now almost all of the drives are degraded or faulted, so I suspect something else is going on: 12 drives simply don't fail within a day or two of each other. I am also getting read errors while trying to back up the data in the pool's datasets and replicate it to other pools, so there is a real problem somewhere.

The only changes to the pool or server before these issues began were an update to TrueNAS 12.0-U2.1 and an odd episode in which I renamed an iSCSI-connected zvol on this pool and had to modify the VMFS volume to get ESXi to remount it.

Again, 12 of 16 drives failing at once seems very unlikely. Any ideas what is really going on and how to resolve it?

P.S. - The drives are 900 GB 10k RPM SAS HDDs in a Dell PowerVault MD1220 disk shelf connected to an LSI SAS-9207-8e PCIe HBA.