I'm in the final stages of buying components for my upcoming build. Everything has been purchased except the hard drives. My current setup is a TrueNAS Core 13 server with 7x8TB WD Red 5400rpm HDDs in a single pool, used for file backups, UHD 4K movies, MP3s, and media streaming to my 4K TV. I recently had a scare when the pool went offline, but with the help of HoneyBadger and Joe I recovered the pool without any data loss.

Although all the hard drives and hardware are currently error-free, the drives have accumulated 55,000 hours of use. The goal is to use the newly built server to back up my existing server. If a drive or another component fails, I can then resilver the affected pool with less stress, knowing I have a backup server ready.

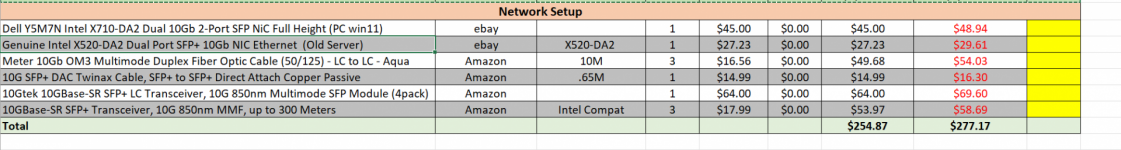

Now I'm working through the decisions, weighing price, performance, and practicality, and recognizing the trade-offs involved in configuring the pools. I also plan to upgrade the NIC in both my existing server and my main PC to 10GbE, since I transfer files between them daily.

See the new build below:

The main difference between the new server and my current one is that I plan to use 6x20TB WD Red Pro drives in a RAIDZ2 configuration, instead of the 7x8TB drives in my existing server. Memory will also increase, from 32GB in the existing server to 128GB in the new one.
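To put rough numbers on the RAIDZ2 versus mirror choice for the six 20TB drives, here is a minimal sketch of the capacity arithmetic. The function name `usable_tb` is made up for illustration, and the figures are simplified: they ignore ZFS metadata and padding overhead, the TB versus TiB difference, and the free space a pool should keep in reserve.

```python
def usable_tb(drives: int, drive_tb: float, layout: str) -> float:
    """Approximate usable capacity in TB, before any ZFS overhead.

    layout "raidz2": one vdev, two drives' worth of parity.
    layout "mirror": striped 2-way mirrors, half the drives hold copies.
    """
    if layout == "raidz2":
        if drives < 3:
            raise ValueError("RAIDZ2 needs at least 3 drives")
        return (drives - 2) * drive_tb
    if layout == "mirror":
        if drives < 2 or drives % 2 != 0:
            raise ValueError("2-way mirrors need an even number of drives")
        return (drives // 2) * drive_tb
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    # The planned build: 6 drives of 20 TB each.
    for layout in ("raidz2", "mirror"):
        print(layout, usable_tb(6, 20.0, layout), "TB")
```

On these simplified numbers, RAIDZ2 keeps four drives' worth of space and survives any two drive failures, while three 2-way mirrors keep three drives' worth and survive one failure per mirror pair.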

When I first discussed this in the forums, some members raised concerns about resilvering time after a drive failure and recommended mirrors over RAIDZ2 for better performance. However, I didn't look into this until after I had stabilized my existing server, secured the components for the new one, and upgraded my network infrastructure for 10GbE.

Both write and read speeds are crucial, as this server will eventually become my primary one. Resilvering times also matter in the event of a drive failure. Some forum users reported that their 18-20TB drives took anywhere from 12 to 18 hours to resilver, while others reported durations of up to 5 days. As a novice, I'm looking for guidance on optimizing performance while minimizing resilvering times. I understand that pool size and drive size are contributing factors, but I'm unsure of their exact impact. I also need to balance this against my need for more storage space, especially as my movie collection grows. Creating multiple pools with smaller drives is less feasible because 8-12TB drives cost more per TB than larger 18-22TB ones, and I am hesitant to buy used drives.
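As a sanity check on the reported resilver times, here is a minimal best-case estimate, a sketch rather than a prediction. It assumes the replacement drive is the bottleneck and is written sequentially at a steady rate; the 200 MB/s default is an assumed figure, not a measured one, and `resilver_hours` is a made-up name. ZFS only resilvers allocated data, so the estimate scales with how full the pool is. Fragmentation, many small files, and normal pool activity during the resilver can make real times much longer.

```python
def resilver_hours(drive_tb: float, fill_fraction: float,
                   mb_per_s: float = 200.0) -> float:
    """Best-case hours to rewrite one drive's share of the allocated data.

    drive_tb      -- drive size in decimal TB (1 TB = 1e12 bytes)
    fill_fraction -- how full the pool is, from 0.0 to 1.0
    mb_per_s      -- ASSUMED sustained write rate of the new drive
    """
    if not 0.0 <= fill_fraction <= 1.0:
        raise ValueError("fill_fraction must be between 0 and 1")
    if mb_per_s <= 0:
        raise ValueError("mb_per_s must be positive")
    bytes_to_write = drive_tb * 1e12 * fill_fraction
    seconds = bytes_to_write / (mb_per_s * 1e6)
    return seconds / 3600.0


if __name__ == "__main__":
    # A 20 TB drive at several fill levels, using the assumed 200 MB/s.
    for fill in (0.25, 0.5, 0.75, 1.0):
        print(f"{fill:.0%} full: {resilver_hours(20.0, fill):.1f} h minimum")
```

Under these assumptions a completely full 20TB drive cannot finish in much under 28 hours, so the 12 to 18 hour reports most likely came from pools that were only partly full, and the multi-day reports from pools that were fragmented or busy during the resilver.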

As I approach the final and most costly purchase for my build, I seek valuable insights from the knowledgeable individuals in this forum. I deeply appreciate the input of the fantastic members, with special thanks to HoneyBadger, Constantin, joeschmuck, and Davvo.
