RchGrav (Dabbler) · Joined: Feb 21, 2014 · Messages: 36

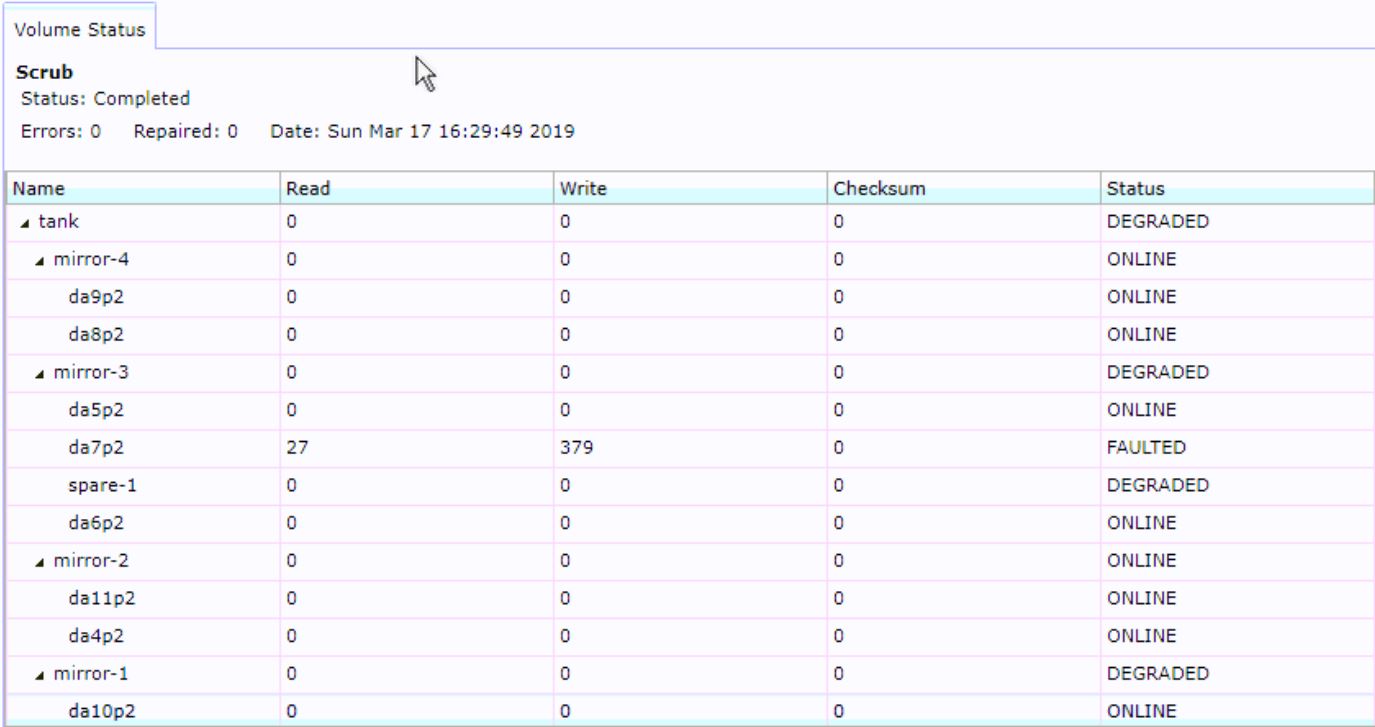

Can someone help clear up the steps to resolve this so the pool no longer shows the devices as faulted?

I'm also a bit confused as to why it's showing the spares as a stripe in the GUI.

Thanks in advance...

Code:

    root@silo:~ # zpool status
      pool: freenas-boot
     state: ONLINE
      scan: scrub repaired 0 in 0 days 00:00:12 with 0 errors on Sun Mar 31 03:45:12 2019
    config:

            NAME          STATE     READ WRITE CKSUM
            freenas-boot  ONLINE       0     0     0
              mirror-0    ONLINE       0     0     0
                ada0p2    ONLINE       0     0     0
                ada1p2    ONLINE       0     0     0

    errors: No known data errors

      pool: tank
     state: DEGRADED
    status: One or more devices are faulted in response to persistent errors.
            Sufficient replicas exist for the pool to continue functioning in a
            degraded state.
    action: Replace the faulted device, or use 'zpool clear' to mark the device
            repaired.
      scan: scrub repaired 0 in 0 days 16:29:24 with 0 errors on Sun Mar 17 16:29:49 2019
    config:

            NAME                                              STATE     READ WRITE CKSUM
            tank                                              DEGRADED     0     0     0
              mirror-0                                        ONLINE       0     0     0
                gptid/284957e0-54f7-11e5-952d-0cc47a34f672    ONLINE       0     0     0
                gptid/28aa3d5b-54f7-11e5-952d-0cc47a34f672    ONLINE       0     0     0
              mirror-1                                        DEGRADED     0     0     0
                gptid/5560a54f-54f7-11e5-952d-0cc47a34f672    ONLINE       0     0     0
                spare-1                                       DEGRADED     0     0     0
                  gptid/55bfeb35-54f7-11e5-952d-0cc47a34f672  FAULTED     21     5     0  too many errors
                  gptid/a25d0618-5b03-11e5-ba30-0cc47a34f672  ONLINE       0     0     0
              mirror-2                                        ONLINE       0     0     0
                gptid/8cb54a53-54f7-11e5-952d-0cc47a34f672    ONLINE       0     0     0
                gptid/b3e42571-5695-11e5-aa4b-0cc47a34f672    ONLINE       0     0     0
              mirror-3                                        DEGRADED     0     0     0
                gptid/f21b968f-54f7-11e5-952d-0cc47a34f672    ONLINE       0     0     0
                spare-1                                       DEGRADED     0     0     0
                  gptid/f2802035-54f7-11e5-952d-0cc47a34f672  FAULTED     27   379     0  too many errors
                  gptid/5cd20210-5b03-11e5-ba30-0cc47a34f672  ONLINE       0     0     0
              mirror-4                                        ONLINE       0     0     0
                gptid/524b645d-54fc-11e5-952d-0cc47a34f672    ONLINE       0     0     0
                gptid/e009e2d6-54fd-11e5-952d-0cc47a34f672    ONLINE       0     0     0

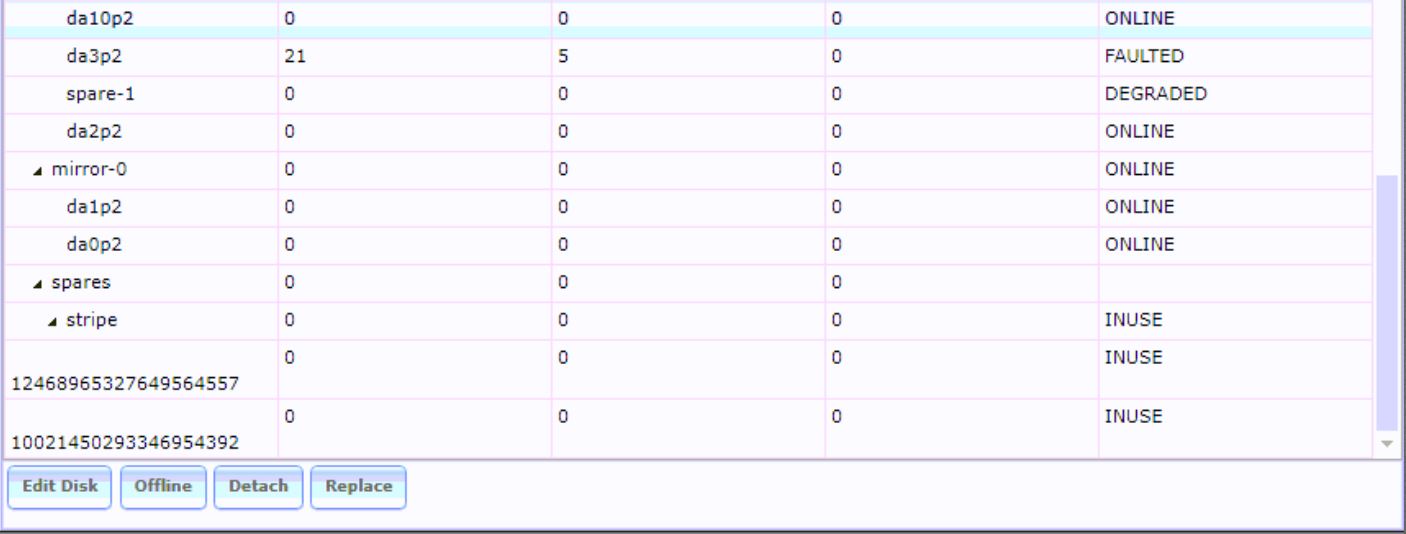

            spares
              10021450293346954392                            INUSE     was /dev/gptid/5cd20210-5b03-11e5-ba30-0cc47a34f672
              12468965327649564557                            INUSE     was /dev/gptid/a25d0618-5b03-11e5-ba30-0cc47a34f672

    errors: No known data errors
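To make the state above easier to reason about, here is a minimal Python sketch that picks out each `spare-N` group from `zpool status` text and pairs the FAULTED original with the spare that is covering for it. It then prints a candidate `zpool detach` line per pair, on the assumption (from the general ZFS hot-spare behavior described in the `zpool` man page) that detaching the faulted original lets the in-use spare take its place in the mirror. The function names `faulted_under_spares` and `detach_commands` and the trimmed `SAMPLE` text are made up for illustration; the script only prints strings and runs nothing against the pool, so treat the output as something to verify, not as the confirmed fix.

```python
import re

# Trimmed copy of the mirror-1 part of the status output above (illustrative only).
SAMPLE = """
  pool: tank
 state: DEGRADED
config:

    NAME STATE READ WRITE CKSUM
    tank DEGRADED 0 0 0
      mirror-1 DEGRADED 0 0 0
        gptid/5560a54f-54f7-11e5-952d-0cc47a34f672 ONLINE 0 0 0
        spare-1 DEGRADED 0 0 0
          gptid/55bfeb35-54f7-11e5-952d-0cc47a34f672 FAULTED 21 5 0 too many errors
          gptid/a25d0618-5b03-11e5-ba30-0cc47a34f672 ONLINE 0 0 0

errors: No known data errors
"""


def faulted_under_spares(status_text):
    """Return (pool, faulted_device, covering_spare) for each spare-N group.

    Assumes indented `zpool status` text in which the faulted original is
    listed before the spare inside the spare-N group.
    """
    results = []
    pool = None
    spare_indent = None  # indentation of the spare-N line we are inside, if any
    faulted = None
    for raw in status_text.expandtabs(8).splitlines():
        if not raw.strip():
            continue
        m = re.match(r"\s*pool:\s*(\S+)", raw)
        if m:
            pool, spare_indent, faulted = m.group(1), None, None
            continue
        indent = len(raw) - len(raw.lstrip())
        fields = raw.split()
        name = fields[0]
        state = fields[1] if len(fields) > 1 else ""
        if re.fullmatch(r"spare-\d+", name):
            spare_indent, faulted = indent, None
            continue
        if spare_indent is None:
            continue
        if indent <= spare_indent:
            spare_indent = None  # left the spare-N group
        elif state == "FAULTED":
            faulted = name
        elif state == "ONLINE" and faulted is not None:
            results.append((pool, faulted, name))
            faulted = None
    return results


def detach_commands(status_text):
    """Build (but do not run) one candidate detach command per faulted original."""
    return [
        f"zpool detach {pool} {bad}"
        for pool, bad, _spare in faulted_under_spares(status_text)
    ]


for cmd in detach_commands(SAMPLE):
    print(cmd)
```

The parser depends on indentation to know which devices sit under a `spare-N` group, so it needs the output as printed in a terminal rather than a flattened copy.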
