MeatTreats (Dabbler, joined Oct 23, 2021, 26 messages)

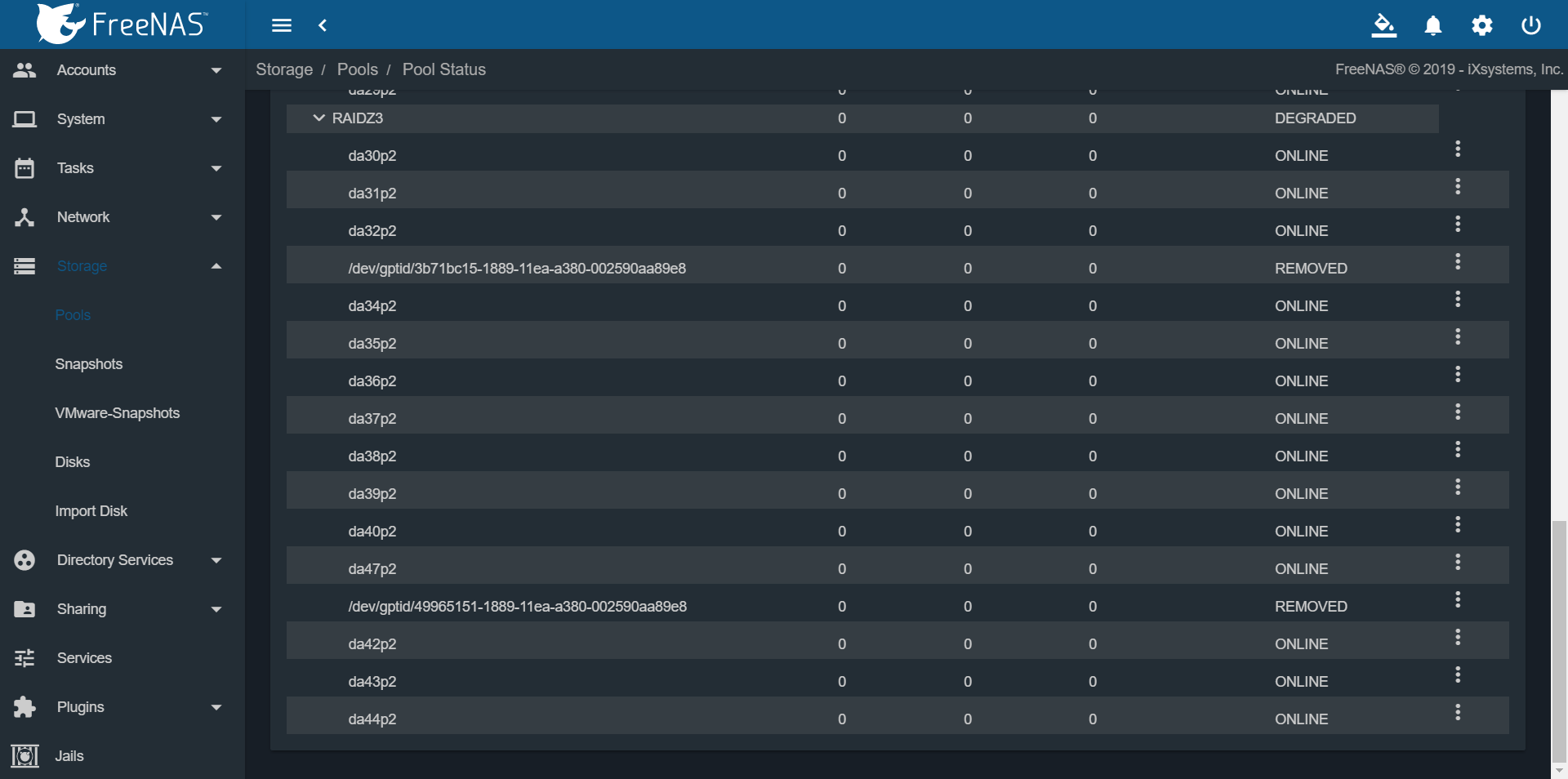

So I have a 48-drive server with three vdevs: 16x6TB, 16x6TB, and 16x10TB. I got errors indicating that one of the 10TB drives had failed, so I replaced it and rebuilt. Last night I booted up my server and started a scrub, because the last time the server was running, the power momentarily went out while I was transferring files. This morning, with the scrub still in progress, I woke up to find that another 10TB drive had failed.

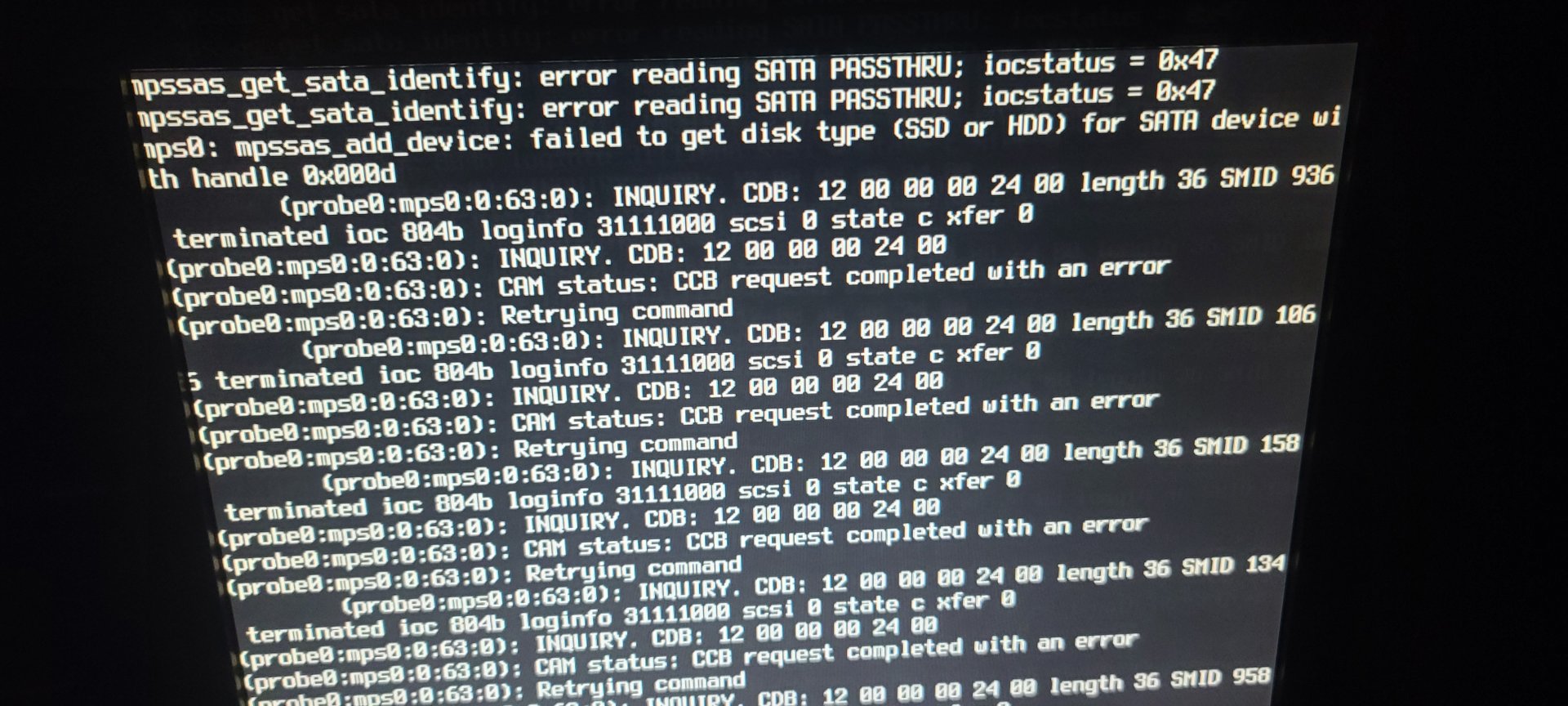

And just now I saw a bunch of text on the monitor that scrolled by faster than I could take a picture of it. BTW, I don't know how to scroll up, or whether all this info is available in the web GUI. Because I have never had two drives fail within a single day in the same array, I canceled the scrub so as not to put any more wear on these drives until I can figure out WTF is going on.

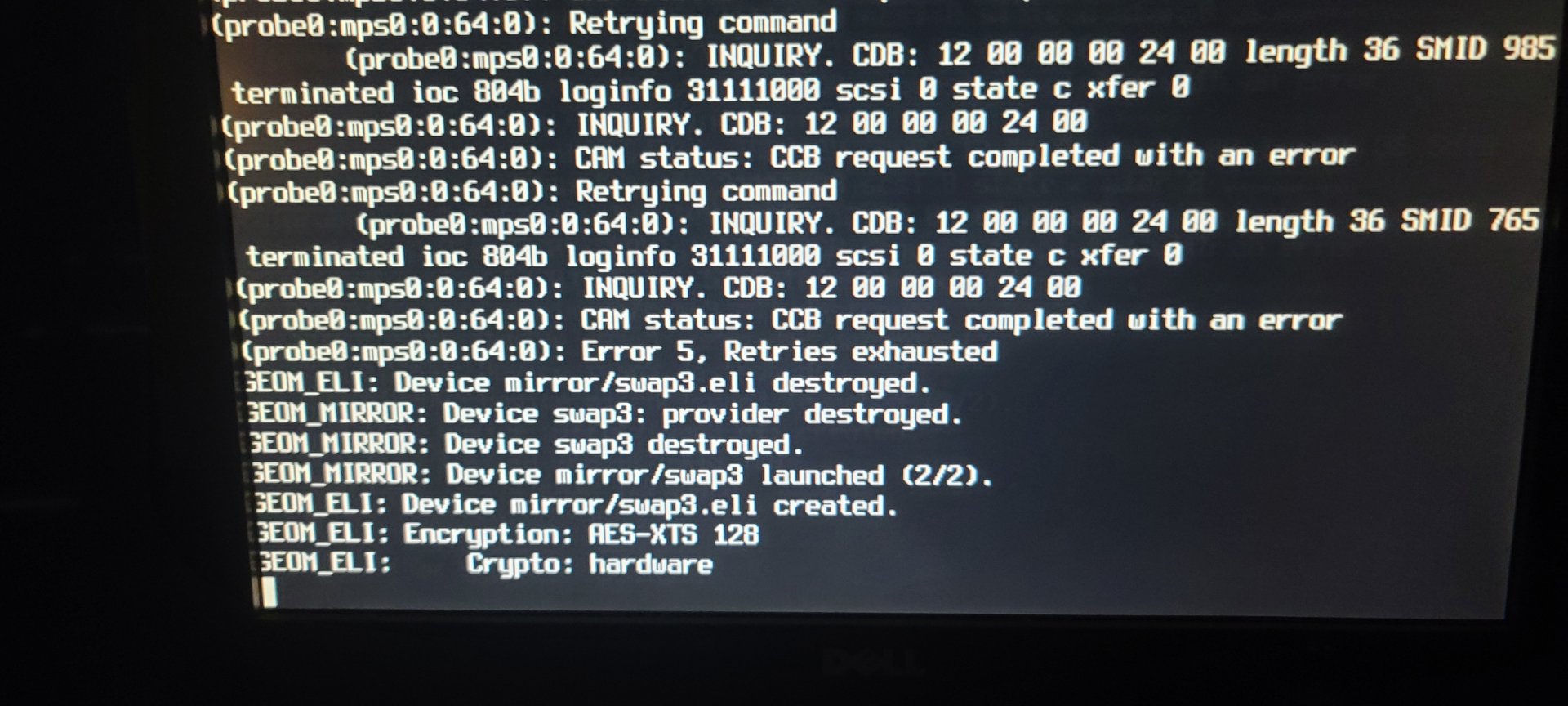

This is what appeared after the second drive failed. What's with the encryption stuff? Is my array infected with a virus?

All 3 drives are 10TB WD drives shucked from Easystores and all purchased at the same time. All 3 drives were in the same vdev. All of my 6TB drives seem to have no issues.

So my question is: are these legitimately failed drives, or could something else be going on? Is there any way to re-insert them into the array and run a SMART test on them?