Hi,

Unfortunately, I did not measure performance right before switching to Scale. Since my workloads are mostly sequential read/write, though, I remember getting about 2200 MB/s read and 3000 MB/s write, and iperf showed about 33 Gbits/sec.

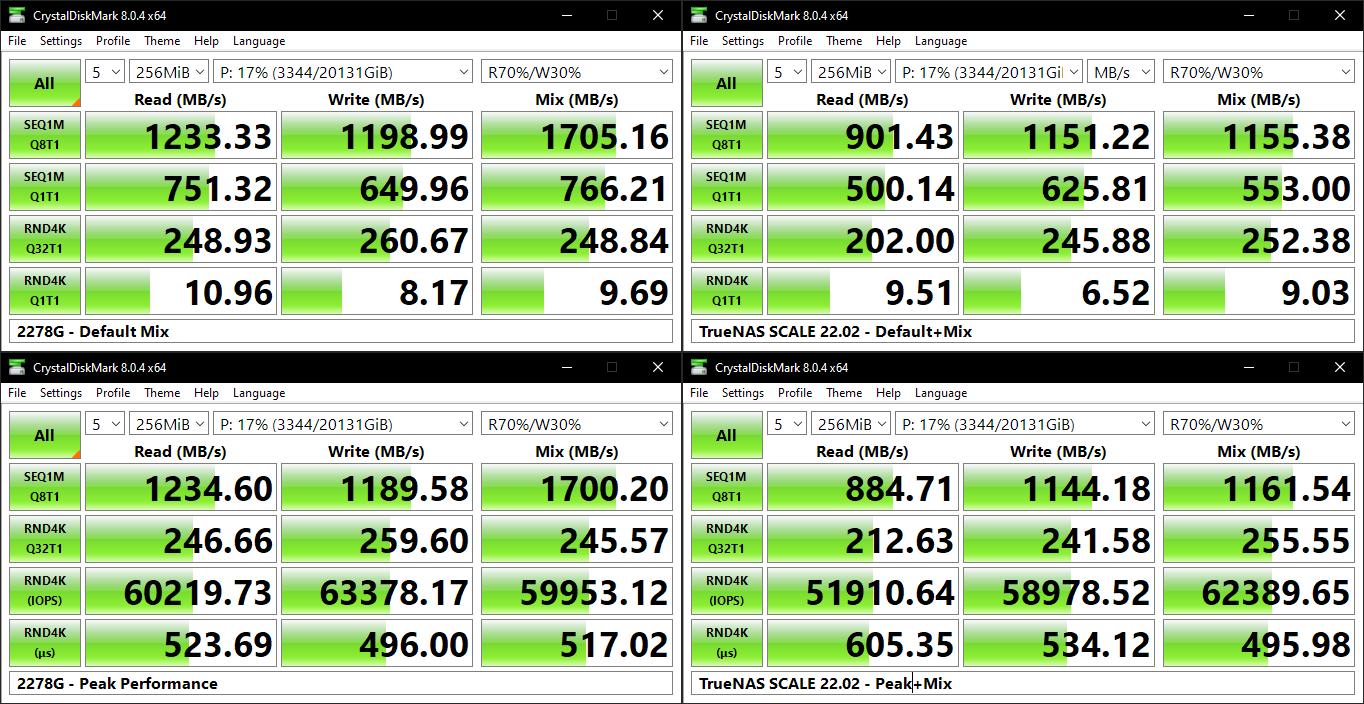

The CrystalDiskMark run was done in a Windows 10 VM on an AMD Threadripper 2950X host running Manjaro, while iperf was run directly from Manjaro to TrueNAS Scale, so the results are not directly comparable to your native tests.

TrueNAS Scale is running on a Xeon E5-2660 v3 with a Mellanox ConnectX-3 Pro NIC.

------------------------------------------------------------------------------

CrystalDiskMark 8.0.4 x64 (C) 2007-2021 hiyohiyo

Crystal Dew World:

https://crystalmark.info/

------------------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

[Read]

SEQ 1MiB (Q= 8, T= 1): 1291.865 MB/s [ 1232.0 IOPS] < 6485.29 us>

RND 4KiB (Q= 32, T= 1): 138.323 MB/s [ 33770.3 IOPS] < 944.66 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 2682.377 MB/s [ 2558.1 IOPS] < 3119.76 us>

RND 4KiB (Q= 32, T= 1): 129.912 MB/s [ 31716.8 IOPS] < 1003.45 us>

[Mix] Read 70%/Write 30%

SEQ 1MiB (Q= 8, T= 1): 2056.361 MB/s [ 1961.1 IOPS] < 4070.18 us>

RND 4KiB (Q= 32, T= 1): 136.835 MB/s [ 33407.0 IOPS] < 956.01 us>

Profile: Peak

Test: 256 MiB (x5) [E: 0% (0/1500GiB)]

Mode: [Admin]

Time: Measure 5 sec / Interval 5 sec

Date: 2022/02/24 8:42:35

OS: Windows 10 Professional [10.0 Build 18363] (x64)
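As a quick sanity check on the report above: given CrystalDiskMark's own unit definitions (MB = 1,000,000 bytes, KiB/MiB = binary), the MB/s column should equal IOPS × block size / 1,000,000. A minimal Python sketch using the numbers copied from the report; the helper name `mb_per_s` is made up for illustration:

```python
# Cross-check CrystalDiskMark's MB/s column against its IOPS column.
# Per the report header: MB/s = 1,000,000 bytes/s, KiB = 1024 bytes.
KIB = 1024
MIB = 1024 * KIB


def mb_per_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal MB/s for a given IOPS rate and block size."""
    return iops * block_bytes / 1_000_000


# (test name, reported IOPS, block size, reported MB/s) from the report above
results = [
    ("Read SEQ 1MiB Q8T1", 1232.0, MIB, 1291.865),
    ("Read RND 4KiB Q32T1", 33770.3, 4 * KIB, 138.323),
    ("Write SEQ 1MiB Q8T1", 2558.1, MIB, 2682.377),
    ("Write RND 4KiB Q32T1", 31716.8, 4 * KIB, 129.912),
    ("Mix SEQ 1MiB Q8T1", 1961.1, MIB, 2056.361),
    ("Mix RND 4KiB Q32T1", 33407.0, 4 * KIB, 136.835),
]

for name, iops, block, reported in results:
    computed = mb_per_s(iops, block)
    # IOPS is printed with one decimal, so small rounding differences are expected
    print(f"{name}: computed {computed:.3f} MB/s, reported {reported:.3f} MB/s")
```

The small differences on the 1 MiB rows come only from the IOPS values being rounded to one decimal in the report.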

-----------------------------------------------------------------

root@truenas[~]# iperf3 -s

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

Accepted connection from 10.0.90.55, port 48400

[ 5] local 10.0.90.6 port 5201 connected to 10.0.90.55 port 48402

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 3.09 GBytes 26.6 Gbits/sec

[ 5] 1.00-2.00 sec 3.25 GBytes 27.9 Gbits/sec

[ 5] 2.00-3.00 sec 3.24 GBytes 27.8 Gbits/sec

[ 5] 3.00-4.00 sec 3.25 GBytes 27.9 Gbits/sec

[ 5] 4.00-5.00 sec 2.51 GBytes 21.6 Gbits/sec

[ 5] 5.00-6.00 sec 2.41 GBytes 20.7 Gbits/sec

[ 5] 6.00-7.00 sec 2.42 GBytes 20.8 Gbits/sec

[ 5] 7.00-8.00 sec 2.40 GBytes 20.6 Gbits/sec

[ 5] 8.00-9.00 sec 2.42 GBytes 20.8 Gbits/sec

[ 5] 9.00-10.00 sec 3.03 GBytes 26.0 Gbits/sec

[ 5] 10.00-10.03 sec 86.9 MBytes 26.6 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.03 sec 28.1 GBytes 24.1 Gbits/sec receiver

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------
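To put the iperf3 numbers next to the disk results, here is a small Python sketch that converts Gbit/s to MB/s. It assumes iperf3 counts bytes in binary units (1 GByte = 2^30 bytes) and bits in decimal units; that assumption is consistent with the summary line, since 28.1 GBytes in 10.03 s works out to about 24.1 Gbit/s. The function names are hypothetical:

```python
# Convert iperf3 results into MB/s so they can be compared with CrystalDiskMark.
# Assumption: iperf3 prints byte totals in binary units (1 GByte = 2**30 bytes)
# and bitrates in decimal units (1 Gbit/s = 10**9 bit/s).
GIB = 2 ** 30


def gbit_to_mb_per_s(gbits_per_s: float) -> float:
    """Decimal Gbit/s -> decimal MB/s (CrystalDiskMark's unit)."""
    return gbits_per_s * 1e9 / 8 / 1e6


def average_gbit(transfer_gbytes: float, seconds: float) -> float:
    """Average bitrate in Gbit/s from iperf3's transfer total and duration."""
    return transfer_gbytes * GIB * 8 / seconds / 1e9


avg = average_gbit(28.1, 10.03)  # receiver summary: 28.1 GBytes in 10.03 s
print(f"average bitrate: {avg:.1f} Gbit/s")
print(f"24.1 Gbit/s is about {gbit_to_mb_per_s(24.1):.1f} MB/s")
print(f"33 Gbit/s (before the switch) is about {gbit_to_mb_per_s(33):.1f} MB/s")

# Sequential write from CrystalDiskMark as a fraction of the iperf3 average
seq_write_mb = 2682.377
print(f"SEQ write is {seq_write_mb / gbit_to_mb_per_s(24.1):.0%} of the iperf3 rate")
```

With these numbers, the 2682 MB/s sequential write is roughly 89% of the iperf3 average, though the two tests ran from different clients (Windows VM vs. the Manjaro host), so this is only a rough comparison.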