erdas (Dabbler, joined Dec 13, 2022, 10 messages)

The 455A works on both the Windows client and the TrueNAS server.

They are connected by a single twinax DAC cable. Fiber gives the same speed.

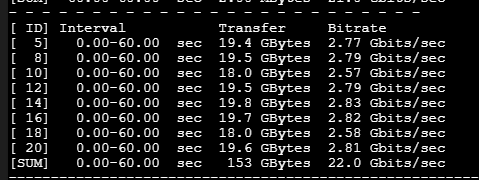

22 Gb/s is far from what I expected from a 100 Gb/s link, even with Ethernet and SMB overhead.

Both machines have beefy CPUs where one core barely breaks 30% load, and both cards run firmware 3.0.25668 in ETH mode.

I don't know which card or OS is at fault.

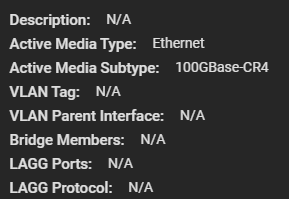

On Windows, Device Manager > Mellanox > Info shows the link speed as 100 GbE, full duplex. TrueNAS shows this.

Can someone tell me how to get closer to the full 100 Gb/s, or at least help identify where the problem is?

Thanks.

Hardware:

Client: Windows 10 Pro, 13600K on a Z790 Taichi, with the Mellanox 455A-ECAT in the top CPU PCIe slot running at x8 (max speed is about 70 Gb/s). The client 455A is set to ETH, runs firmware v3, and is directly connected to the server via a DAC cable rated at 100 Gb/s.

Server: 1920X on an X399 Taichi, with the server's 455A-ECAT in a full x16 PCIe gen4 slot and 16 GB of DDR4. The main volume is a stripe of four 1 TB M.2 drives, each rated at 3 GB/s. When the machine was running Windows, the stripe benchmarked at 12 GB/s sequential read/write with 8 threads in CrystalDiskMark. The drives sit in a 4x M.2 PCIe card in a PCIe gen4 slot set to x4x4x4x4 bifurcation.
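
For reference, the slot and disk figures above can be put in the same unit as the iperf3 result with a bit of arithmetic. This is only a back-of-the-envelope sketch: it assumes the card negotiates a PCIe 3.0 link (8 GT/s per lane, 128b/130b encoding) regardless of the slot generation, which the post does not confirm, and the function names are made up for illustration.

```python
# Rough PCIe line-rate estimate. ASSUMPTION: the NIC links at PCIe 3.0
# (8 GT/s per lane, 128b/130b encoding). Packet/protocol overhead is
# ignored, so achievable throughput will be somewhat lower.

GEN3_GT_PER_LANE = 8.0       # gigatransfers per second, per lane
GEN3_ENCODING = 128 / 130    # 128b/130b line-encoding efficiency


def pcie_gen3_gbps(lanes: int) -> float:
    """Approximate raw bandwidth of a PCIe 3.0 link in Gb/s."""
    return lanes * GEN3_GT_PER_LANE * GEN3_ENCODING


def gbytes_to_gbits(gbytes_per_s: float) -> float:
    """Convert GB/s (bytes) to Gb/s (bits)."""
    return gbytes_per_s * 8


client_slot = pcie_gen3_gbps(8)     # client NIC at x8
server_slot = pcie_gen3_gbps(16)    # server NIC at x16
disk_stripe = gbytes_to_gbits(12)   # 12 GB/s CrystalDiskMark figure from the post
measured = 22.0                     # Gb/s reported in the post

print(f"client x8 slot : {client_slot:6.1f} Gb/s")
print(f"server x16 slot: {server_slot:6.1f} Gb/s")
print(f"disk stripe    : {disk_stripe:6.1f} Gb/s")
print(f"measured / x8  : {measured / client_slot:.0%}")
```

Under that assumption the x8 slot on the client tops out a little below the "about 70 Gb/s" quoted above, so 100 Gb/s is not reachable on this client, but 22 Gb/s is still well under the slot limit.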

Test command: `iperf3.exe -c 111.111.111.222 -t 60 -P 8`
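
One way to narrow things down is to check whether the eight parallel streams share the load evenly or a few of them stall. iperf3 can write its report as JSON with `-J`, and the per-stream totals can then be summarised. A minimal sketch, assuming the usual iperf3 JSON layout for a TCP test (`end.streams[].sender.bits_per_second` and `end.sum_sent.bits_per_second`); the helper name and the `result.json` file name are hypothetical.

```python
import json
import sys


def summarize_iperf3(report: dict) -> dict:
    """Summarise per-stream sender throughput (Gb/s) from an iperf3 -J report."""
    streams = report["end"]["streams"]
    rates = [s["sender"]["bits_per_second"] / 1e9 for s in streams]
    total = report["end"]["sum_sent"]["bits_per_second"] / 1e9
    return {
        "streams": len(rates),
        "total_gbps": total,
        "min_gbps": min(rates),
        "max_gbps": max(rates),
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example (hypothetical file name):
    #   iperf3.exe -c 111.111.111.222 -t 60 -P 8 -J > result.json
    #   python summarize.py result.json
    with open(sys.argv[1]) as fh:
        print(summarize_iperf3(json.load(fh)))
```

If the minimum and maximum per-stream rates are far apart, the bottleneck may be in how streams are spread across cores or queues rather than in the link itself. That is a hint to investigate, not a diagnosis.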
