benjamin604 (Cadet, joined Apr 28, 2021, 7 messages)

Hello,

I've got a test server up and running. The hardware and configuration are as follows:

Hardware

HP Z840

Dual Xeon E5-2687W v3 @ 3.10GHz

128GB RAM

Mellanox ConnectX-5 Dual 25Gb

8 x 2TB Samsung 860 SSDs

1 x 1TB Samsung 860 SSD for TrueNAS CORE

TrueNAS-12.0-U6

Storage Pool

Single RAIDZ vdev with 11.85TiB usable

Single SMB Dataset with 3.51GiB used

no compression

no sync

no atime

record size 128KiB

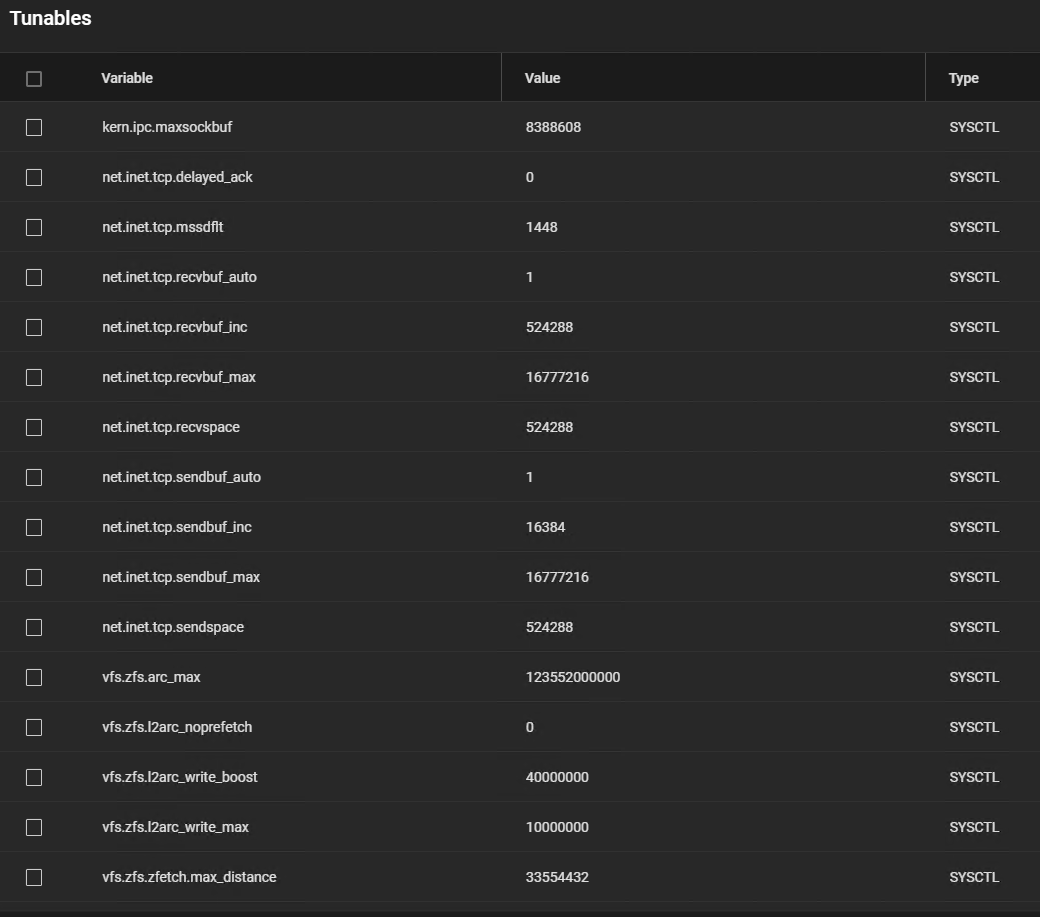

Tunables

SMB Config

```
root@truenas[/mnt/RaidZSSD/SMBDataset]# testparm -a
Load smb config files from /usr/local/etc/smb4.conf
Loaded services file OK.
Server role: ROLE_STANDALONE

Press enter to see a dump of your service definitions

# Global parameters
[global]
    aio max threads = 2
    bind interfaces only = Yes
    disable spoolss = Yes
    dns proxy = No
    enable web service discovery = Yes
    kernel change notify = No
    load printers = No
    logging = file
    map to guest = Bad User
    max log size = 5120
    nsupdate command = /usr/local/bin/samba-nsupdate -g
    registry shares = Yes
    server role = standalone server
    server string = TrueNAS Server
    unix extensions = No
    idmap config *: range = 90000001-100000000
    fruit:nfs_aces = No
    idmap config * : backend = tdb
    directory name cache size = 0
    dos filemode = Yes

[smb]
    ea support = No
    guest ok = Yes
    level2 oplocks = No
    oplocks = No
    path = /mnt/RaidZSSD/SMBDataset
    read only = No
    strict sync = No
    vfs objects = fruit streams_xattr shadow_copy_zfs noacl aio_fbsd
    fruit:metadata = stream
    fruit:resource = stream
    nfs4:chown = true
```

Client Machine

Mac Mini 2018

6 Core i7 3.2GHz

32GB RAM

500GB NVMe

ATTO ThunderLink NS 3252 connected via Thunderbolt 3

.DS_Store creation on network volumes disabled

Network

Directly connected at 25Gb with Mellanox Optics, MTU 9000
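As a rough sanity check on what this link can carry, the sketch below estimates the best-case TCP payload rate for a 25Gb link with MTU 9000. The framing figures are assumptions that are not stated in the post: IPv4, TCP with no options, standard Ethernet overhead, and no VLAN tag.

```python
# Rough ceiling for TCP payload throughput on the direct 25Gb link.
# Assumptions (not from the post): IPv4, TCP without options,
# standard Ethernet framing overhead, no VLAN tag.

LINK_BITS_PER_SEC = 25e9          # 25GbE line rate
MTU = 9000                        # IP packet size, from the post

ETH_OVERHEAD = 14 + 4 + 8 + 12    # header + FCS + preamble + inter-frame gap
IP_TCP_HEADERS = 20 + 20          # IPv4 header + TCP header, no options


def payload_ceiling_bytes_per_sec(link_bps: float, mtu: int) -> float:
    """Return the best-case TCP payload rate in bytes per second."""
    payload = mtu - IP_TCP_HEADERS    # application bytes per packet
    on_wire = mtu + ETH_OVERHEAD      # bytes the packet occupies on the wire
    return link_bps / 8 * payload / on_wire


if __name__ == "__main__":
    ceiling = payload_ceiling_bytes_per_sec(LINK_BITS_PER_SEC, MTU)
    print(f"raw line rate:   {LINK_BITS_PER_SEC / 8 / 1e9:.3f} GB/s")
    print(f"payload ceiling: {ceiling / 1e9:.3f} GB/s")
```

Under these assumptions the ceiling comes out at roughly 3.1 GB/s, so the link itself leaves headroom above any single-stream file copy figure below that.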

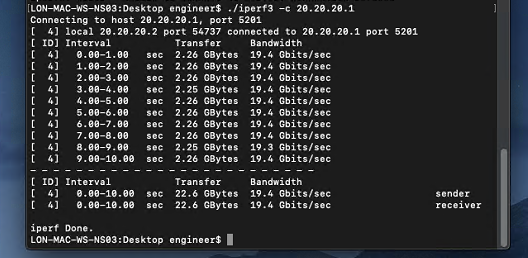

iperf looks pretty much as expected:

The Issue!

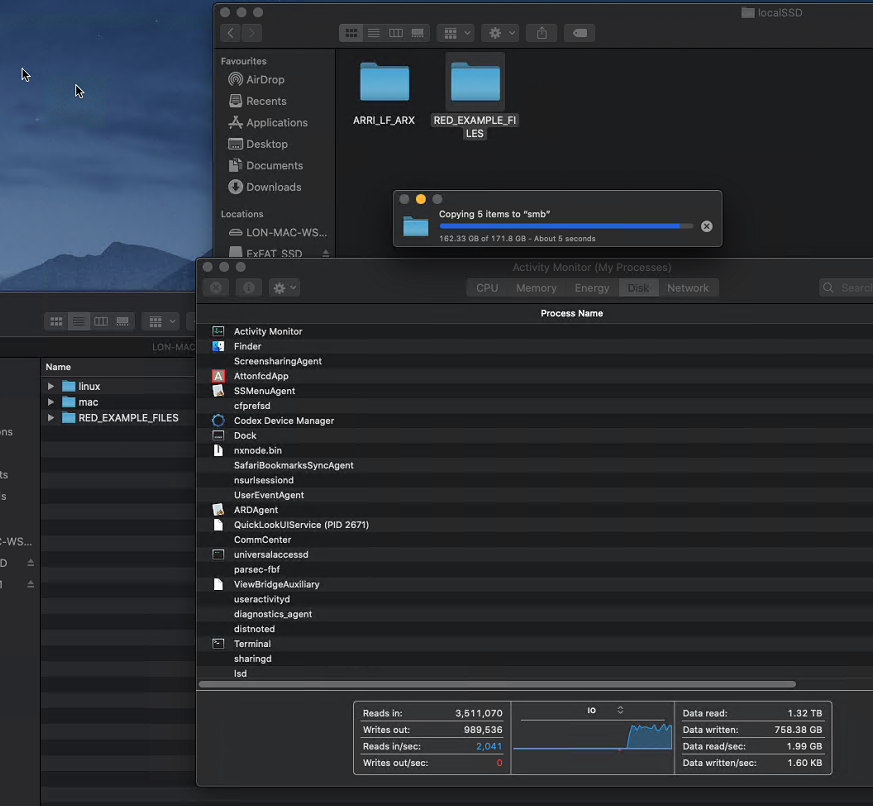

We're trying to move Arri camera media around for either ingest or backup to LTO. I've been testing with test media for the time being, just simple copies to and from the storage.
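For anyone wanting to reproduce this kind of copy test in a repeatable way, here is a minimal sketch that times copying a folder of files with one or several concurrent workers. It is a diagnostic aid under assumptions, not the method used in this post: the function names and the `frame_*.bin` sample files are hypothetical, and the demo runs against local temp storage so that it works anywhere.

```python
import shutil
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_files(src_dir: Path, dst_dir: Path, workers: int = 1):
    """Copy every regular file in src_dir into dst_dir.

    Returns (file_count, total_bytes, elapsed_seconds).
    """
    files = sorted(p for p in src_dir.iterdir() if p.is_file())
    dst_dir.mkdir(parents=True, exist_ok=True)

    def copy_one(path: Path) -> int:
        shutil.copyfile(path, dst_dir / path.name)
        return path.stat().st_size

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        total_bytes = sum(pool.map(copy_one, files))
    elapsed = time.perf_counter() - start
    return len(files), total_bytes, elapsed


def make_sample_files(directory: Path, count: int, size_bytes: int) -> None:
    """Create `count` files of `size_bytes` each, as stand-ins for real media."""
    directory.mkdir(parents=True, exist_ok=True)
    payload = b"\0" * size_bytes
    for i in range(count):
        (directory / f"frame_{i:04d}.bin").write_bytes(payload)


if __name__ == "__main__":
    # Self-contained demo on local temp storage. For a real test, point
    # src and dst at the local NVMe and the mounted SMB share instead.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "src"
        make_sample_files(src, count=20, size_bytes=1024 * 1024)
        for workers in (1, 4):
            dst = Path(tmp) / f"dst_{workers}"
            n, nbytes, secs = copy_files(src, dst, workers)
            print(f"{workers} worker(s): {n} files, "
                  f"{nbytes / secs / 1e6:.0f} MB/s, "
                  f"{secs / n * 1000:.2f} ms wall time per file")
```

If throughput scales with the worker count when run against the share, that would point towards per-file latency rather than raw bandwidth as the limit. That is only an inference to test, not a conclusion.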

The performance of the disk looks fine. Here's IOZone for 10MB filesize (the size of Arri HDE files)

Moving larger RED files (approximately 5GB in size) I get 1.8/1.9GB/s

which for such a small array of disks is pretty good, read is similar.

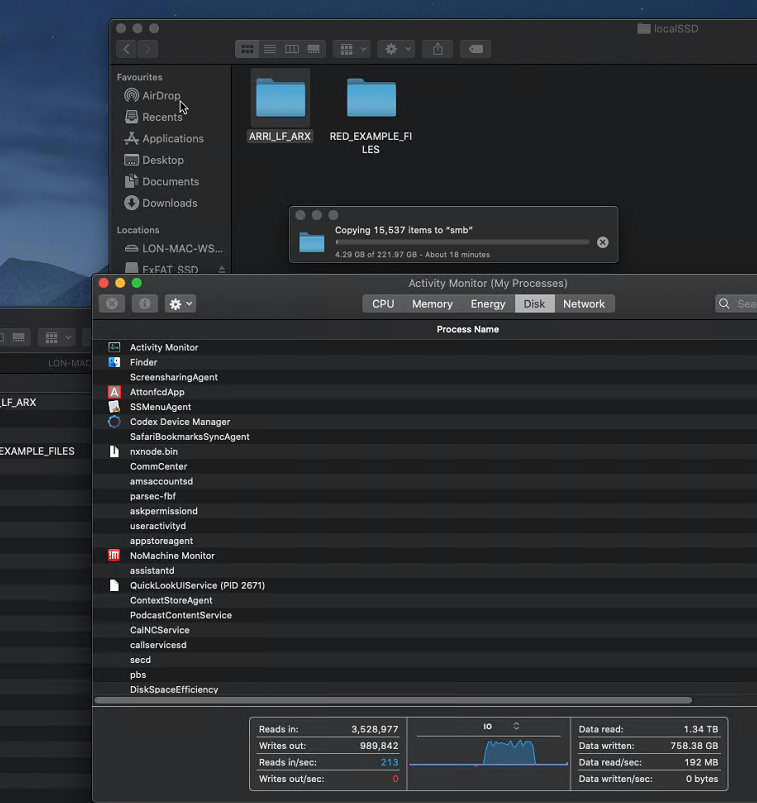

For the 10MB Arri files, however:

200MB/s. Rubbish.

Can anyone point me in a direction? More

I've got a test server up and running, Hardware is:

Hardware

HP Z840

Dual Xeon E5-2687W v3 @ 3.10GHz

128GB Ram

Mellanox Connect-x 5 Dual 25Gb

8 x 2TB Samsung 860 SSDs

1 x 1TB Samsung 860 SSD for TrueNAS core

TrueNAS-12.0-U6

Storage Pool

Single Raidz vdev with 11.85TiB usable

Single SMB Dataset with 3.51GiB used

no compression

no sync

no atime

record size 128KiB

Tuneables

SMB Config

root@truenas[/mnt/RaidZSSD/SMBDataset]# testparm -a

Load smb config files from /usr/local/etc/smb4.conf

Loaded services file OK.

Server role: ROLE_STANDALONE

Press enter to see a dump of your service definitions

# Global parameters

[global]

aio max threads = 2

bind interfaces only = Yes

disable spoolss = Yes

dns proxy = No

enable web service discovery = Yes

kernel change notify = No

load printers = No

logging = file

map to guest = Bad User

max log size = 5120

nsupdate command = /usr/local/bin/samba-nsupdate -g

registry shares = Yes

server role = standalone server

server string = TrueNAS Server

unix extensions = No

idmap config *: range = 90000001-100000000

fruit:nfs_aces = No

idmap config * : backend = tdb

directory name cache size = 0

dos filemode = Yes

[smb]

ea support = No

guest ok = Yes

level2 oplocks = No

oplocks = No

path = /mnt/RaidZSSD/SMBDataset

read only = No

strict sync = No

vfs objects = fruit streams_xattr shadow_copy_zfs noacl aio_fbsd

fruit:metadata = stream

fruit:resource = stream

nfs4:chown = true

Client Machine

Mac Mini 2018

6 Core i7 3.2GHz

32Gb RAM

500Gb NVMe

Atto Thunderlink NS 3252 connected with TB3

Disabled Network DSStore

Network

Directly connected at 25Gb with Mellanox Optics, MTU 9000

iperf looks pretty much as expected:

The Issue!

We're trying to move Arri camera media around for either ingest or backup to LTO. I've been testing with test media for the time being, just simple copies to and from the storage.

The performance of the disk looks fine. Here's IOZone for 10MB filesize (the size of Arri HDE files)

Code:

root@truenas[/mnt/RaidZSSD/SMBDataset/linux]# iozone -a -s 10240

Iozone: Performance Test of File I/O

Version $Revision: 3.487 $

Compiled for 64 bit mode.

Build: freebsd

Run began: Wed Oct 27 14:23:45 2021

Auto Mode

File size set to 10240 kB

Command line used: iozone -a -s 10240

Output is in kBytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 kBytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

random random bkwd record stride

kB reclen write rewrite read reread read write read rewrite read fwrite frewrite fread freread

10240 4 799557 850289 2535279 2535279 2038543 963325 2104777 973191 2042226 1017697 1020526 2378191 2374772

10240 8 1536836 2071980 4523015 4533039 3707414 1837748 3647900 1904404 3660960 1980725 1985670 3929591 3969175

10240 16 2345976 3366013 6890905 6928701 5885285 3114370 6023116 3300062 5933254 3392869 3424793 5259054 5308454

10240 32 3291463 5592546 10209568 10280438 9126954 5278443 9226952 5556371 9173741 5537744 5583821 6867765 6961268

10240 64 4092469 8569700 12980415 12960830 11917865 8108598 11934423 8598867 11961012 8441688 8498481 7907070 8094844

10240 128 9094101 11021764 13159384 13314401 12737884 10634250 12533442 11179553 12768178 10926429 10915322 7545878 7792305

10240 256 8597146 10352298 12160825 12160825 11951028 10231458 11937741 10322441 12017909 10387349 10397408 6210364 6547400

10240 512 8448330 10302632 12817715 12879213 12688962 10248547 12640414 10282899 12610723 10270604 10260789 5922617 6732128

10240 1024 8715780 10568830 13248688 13232361 13147299 10514495 13059352 10545475 13297912 10558437 10566230 6059657 6854612

10240 2048 8706946 10732573 13027662 13011875 12945204 10634250 12879213 10743311 13043488 10743311 10767552 5403286 6719489

10240 4096 7809376 10654824 13085407 13045661 13045661 10638329 12918138 10734715 13001235 10668056 10711290 4162392 6687628

10240 8192 5769116 10266427 12409588 12450057 12778810 10202411 12486251 10504976 12679779 10450659 10381195 2439662 5013234

iozone test complete.Moving larger RED files (approximately 5GB in size) I get 1.8/1.9GB/s

which for such a small array of disks is pretty good, read is similar.

For the 10MB Arri files, however:

200MB/s. Rubbish.

Can anyone point me in a direction? More
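To put the two figures side by side, here is a back-of-envelope sketch of the time spent per 10MB file that is not explained by moving the data itself. It assumes files are copied one at a time and that the large-file rate (the lower 1.8GB/s figure) represents what the path can stream. Both are assumptions for illustration, not measurements.

```python
# Back-of-envelope model: how much time per small file is not data transfer?
# Assumptions (for illustration only): files are copied strictly one at a
# time, and the large-file rate is the achievable streaming rate.

FILE_SIZE_MB = 10              # Arri HDE file size, from the post
SMALL_FILE_RATE_MB_S = 200     # observed with 10MB files
LARGE_FILE_RATE_MB_S = 1800    # lower end of the rate observed with ~5GB files


def per_file_overhead_ms(file_mb: float, observed_mb_s: float,
                         streaming_mb_s: float) -> float:
    """Return the time per file beyond pure streaming, in milliseconds."""
    total_s = file_mb / observed_mb_s        # wall time per file as observed
    transfer_s = file_mb / streaming_mb_s    # time if only bandwidth mattered
    return (total_s - transfer_s) * 1000


if __name__ == "__main__":
    files_per_sec = SMALL_FILE_RATE_MB_S / FILE_SIZE_MB
    overhead = per_file_overhead_ms(FILE_SIZE_MB, SMALL_FILE_RATE_MB_S,
                                    LARGE_FILE_RATE_MB_S)
    print(f"files per second:  {files_per_sec:.0f}")
    print(f"per-file overhead: {overhead:.1f} ms")
```

Under this model, 200MB/s corresponds to about 20 files per second, with most of each 50ms spent on something other than streaming data. Where that time goes (open/close round trips, metadata, the copy tool itself) is not something these numbers can show.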