Poetart

Dabbler

- Joined: Jan 4, 2018
- Messages: 43

I'm moving my data to a temporary array while I upgrade and rebuild my current one.

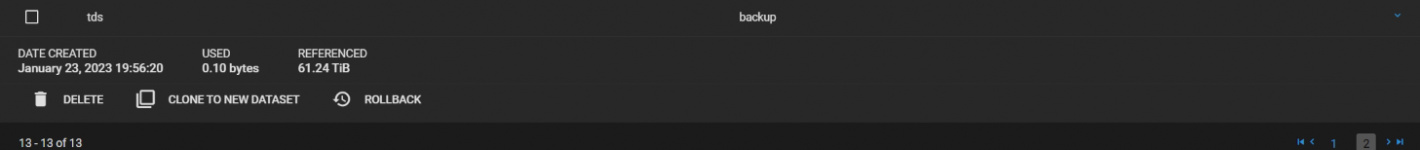

Total size is 60.7TB of used space.

I have been replicating this data for a few days, but Windows decided that now was the time to update, so my console connection closed. From the disk usage, I can see that the job is still writing to the disks.

Is there any way for me to see the progress of a ZFS send job that is already running?
