danb35

I'm using three nodes of a Dell PowerEdge C6220 unit as a Proxmox VE cluster (which uses my TrueNAS CORE box for some of its storage, hence a tenuous connection to this forum). ISOs and some low-demand VM disk images are stored on the TrueNAS server, but most of the live disk images are stored on a Ceph pool consisting of three 2 TB Samsung 860 EVO SSDs, one in each node. The nodes are linked together via 10 Gb fiber with Chelsio T420 cards. And while performance is a whole lot better than it was with spinners, I'd still like to speed it up further--and also expand the VM disk storage.

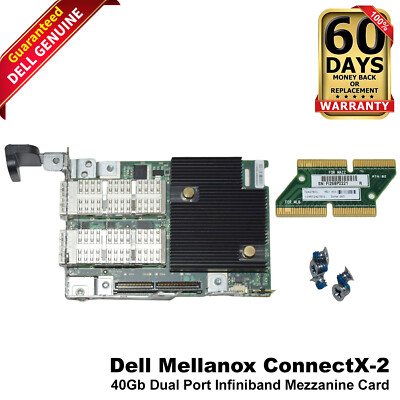

If I want faster performance than SATA SSDs, the obvious (AFAIK) next step is NVMe SSDs, but these servers don't have any NVMe support (which also means that going NVMe leaves the 4x 2.5" bays per node pretty much useless, and costs me hot-swap capability for my VM storage devices). No problem, there are PCIe-NVMe cards--but the servers only have a single PCIe (3.0, x16, half-height) slot, which is currently occupied by the NICs. Well, there's a solution to that too: the servers have "mezzanine" slots, and there are Intel X520 NICs available that fit them. They're available on eBay, and not too expensive, though units with the brackets I'd need do seem to cost more.
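For a rough sense of scale, here's a back-of-the-envelope sketch in Python of the theoretical link ceilings involved. The function names are just mine for illustration, and I'm assuming a typical x4 NVMe drive; these are raw line rates after encoding overhead only, not what Ceph or any real drive would actually deliver.

```python
# Theoretical link ceilings in MB/s (1 MB = 10**6 bytes).
# Assumption: only line-encoding overhead is counted; protocol overhead,
# drive limits, and Ceph replication traffic are ignored.

def pcie3_mb_per_s(lanes: int) -> float:
    """PCIe 3.0: 8 GT/s per lane with 128b/130b encoding."""
    return 8e9 * (128 / 130) / 8 / 1e6 * lanes

def sata3_mb_per_s() -> float:
    """SATA III: 6 Gb/s with 8b/10b encoding."""
    return 6e9 * (8 / 10) / 8 / 1e6

def ethernet_mb_per_s(gbit: float) -> float:
    """Raw Ethernet line rate for a link of the given speed in Gb/s."""
    return gbit * 1e9 / 8 / 1e6

if __name__ == "__main__":
    links = {
        "SATA III (current SSDs)": sata3_mb_per_s(),
        "10 GbE (node interconnect)": ethernet_mb_per_s(10),
        "PCIe 3.0 x4 (one NVMe drive, assumed)": pcie3_mb_per_s(4),
        "PCIe 3.0 x16 (the whole slot)": pcie3_mb_per_s(16),
    }
    for name, rate in links.items():
        print(f"{name:40s} {rate:8.0f} MB/s")
```

That works out to roughly 600 MB/s for SATA III, 1,250 MB/s for a 10 Gb link, about 3.9 GB/s for an x4 drive, and about 15.8 GB/s for the full x16 slot. So on paper the slot has lanes to spare for two x4 drives; whether a given card and board can actually split them that way is a separate question this arithmetic doesn't answer.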

So that's sorted, then. Buy mezzanine X520 cards x3, buy PCIe-NVMe cards x3, buy NVMe SSDs x3, profit! Well, opposite of profit, actually, but... It's getting expensive, but it seems straightforward enough. The last sticking point is that I'm not seeing cards that would let me use two NVMe drives on a single card. I'm seeing plenty of cards that have two slots, but have a SATA port for the second slot, and you need to use a SATA cable to the mainboard to use that slot--which seems to completely defeat my purpose in the whole exercise. So I think that leads to the real questions:

- Are there cards which would let me use two NVMe SSDs in a single half-height PCIe slot?

- Alternatively, am I going about this in completely the wrong way, and should I be thinking in a different direction entirely?