Stux


The goal of this build was firstly to provide a strong 6-bay NAS, Plex server and offsite backup for my Primary NAS. It also needs to run ESXi performantly and host Windows, OS X and Linux guests, as well as a pfSense gateway. The ESXi installation was also the pilot project for an ESXi upgrade I'm planning for the Primary NAS.

The Supermicro X10SDV-TLN4F is a Xeon D-1541 Mini-ITX motherboard. The Xeon D-1541 is an 8-core Broadwell CPU that supports up to 128GB of dual-channel ECC memory across 4 slots. The board features dual 10GbE plus dual gigabit Ethernet ports, 6 SATA ports, an M.2 2280 PCIe NVMe slot, a fully bifurcatable x16 PCIe slot (meaning that, with a suitable adapter card, it can host up to another 4 M.2 NVMe drives), and IPMI.

The Node 304 is a stylish, relatively small 6-bay chassis with a high WAF (wife acceptance factor), which makes it suitable for a living room... with suitable fan control modifications.

Parts List:

Case: Fractal Design Node 304

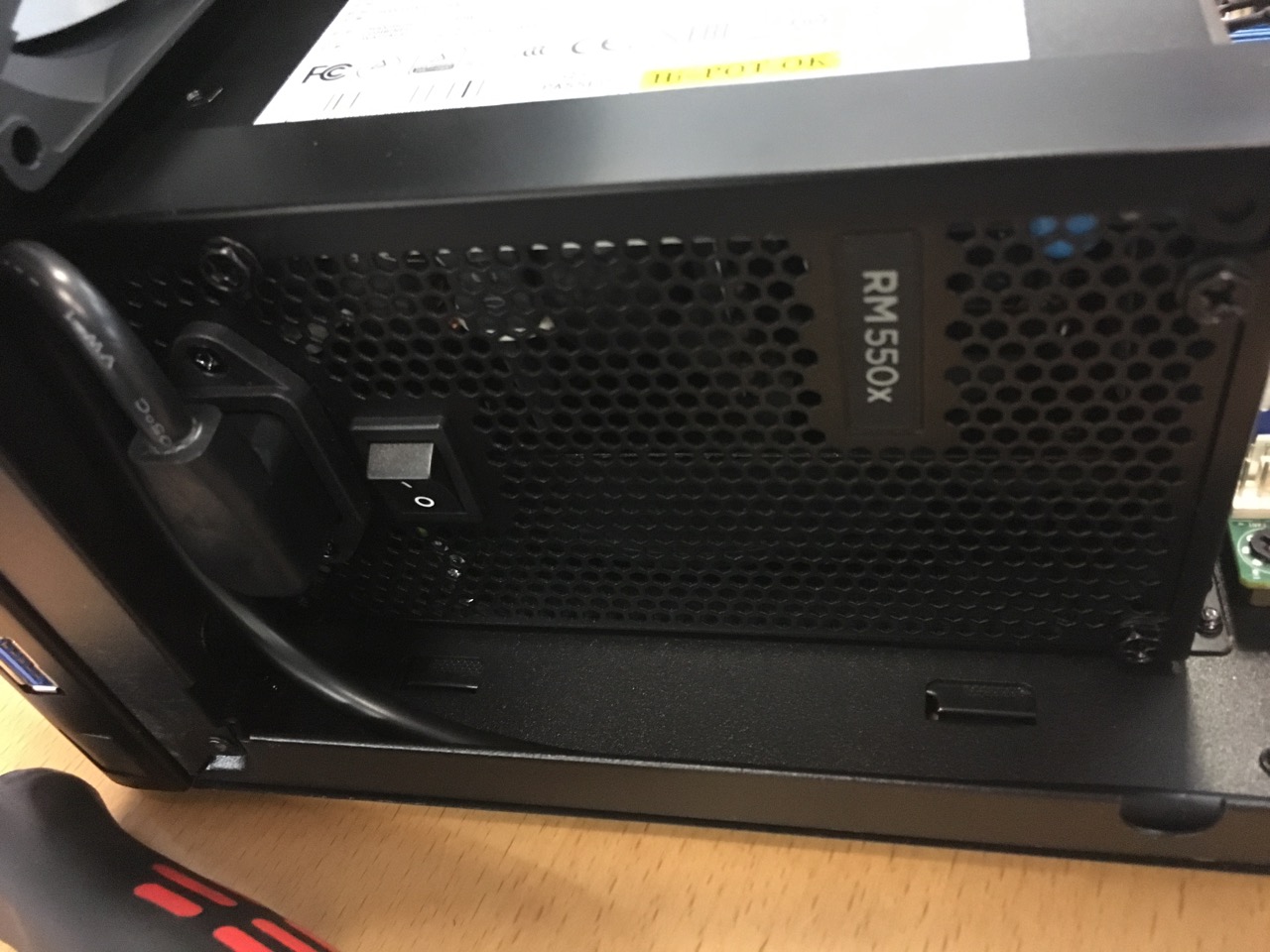

PSU: Corsair RM550x

Motherboard: Supermicro X10SDV-TLN4F

Memory: 2x 16GB Crucial DDR4 RDIMM

USBs: 2x SanDisk Cruzer Fit 16GB USB2

HDs: 6x Seagate IronWolf 8TB

HD Fans: 2x Noctua 92mm NF-A9 PWM

Exhaust Fan: Noctua 140mm NF-A14 PWM

ESXi Boot device: Samsung 960 Evo 250GB M.2 PCIe NVMe SSD

SLOG: Intel P3700 400GB HHHL AIC PCIe NVMe SSD

Misc: A PC piezo buzzer and a USB3-to-USB2 header adapter from eBay

Node 304 in all its glory

First things first: install the motherboard, RAM, PSU, boot USBs, FreeNAS and HDs, then test the memory and burn in the HDs. Pity I don't have a photo of the Medusa that was all of the SATA cables before I cable-managed them.
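For anyone new to drive burn-in, here is a minimal sketch of one common sequence: a SMART short test, a destructive `badblocks` write pass, then a SMART long test. It only builds and prints the commands rather than running them, and each step should be allowed to finish before the next starts. The device names (`/dev/ada0` to `/dev/ada5`, FreeBSD-style as used by FreeNAS) are assumptions; check yours with `camcontrol devlist` first, and be aware that `badblocks -w` erases the disk.

```python
from typing import List

# Assumed FreeBSD-style names for the six data drives. Verify them before
# running anything: the badblocks write test destroys all data on the disk.
DRIVES = [f"/dev/ada{i}" for i in range(6)]


def burn_in_commands(device: str) -> List[List[str]]:
    """Return the burn-in steps for one drive as argv lists (not executed)."""
    dev_name = device.split("/")[-1]
    return [
        # Quick SMART self-test to catch obvious failures early.
        ["smartctl", "-t", "short", device],
        # Destructive write/read pattern test. -b 4096 keeps the block count
        # of an 8TB drive within badblocks' 32-bit limit, -w enables write
        # mode, -s shows progress, -o logs any bad blocks to a file.
        ["badblocks", "-b", "4096", "-ws", "-o", f"/tmp/{dev_name}.bad", device],
        # Full-surface SMART self-test after the write pass.
        ["smartctl", "-t", "long", device],
    ]


if __name__ == "__main__":
    for drive in DRIVES:
        for argv in burn_in_commands(drive):
            print(" ".join(argv))
```

Running the `badblocks` pass on all six drives in parallel (for example in separate `tmux` sessions) saves a lot of time, since a full write pass over an 8TB drive takes days.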

PS: It's easier to upgrade the HD fans *before* you install the PSU, so you may want to do that first.

The Corsair RM550x PSU has more than enough power for this use case, and it runs silently in 0 RPM fan mode at under 50% load. It fits in the case and has a 10-year warranty. Routing the PSU power cable along the edge of the case and back around works well.
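To sanity-check the "under 50% load" point for your own parts list, a back-of-the-envelope budget is enough. The sketch below sums per-component wattages and compares the total against half of the RM550x's 550W rating. Only the Xeon D-1541's 45W TDP is a published figure; the drive and miscellaneous wattages are placeholder assumptions, not measurements, so substitute datasheet or wall-meter numbers. Drives also draw noticeably more while spinning up than at steady state.

```python
from typing import Dict

PSU_RATED_W = 550.0   # Corsair RM550x continuous rating
FANLESS_LOAD = 0.50   # 0 RPM threshold as stated in the build notes


def load_fraction(component_watts: Dict[str, float]) -> float:
    """Fraction of the PSU rating drawn by the listed components."""
    return sum(component_watts.values()) / PSU_RATED_W


def fan_stays_off(component_watts: Dict[str, float]) -> bool:
    """True if the estimated load is below the 0 RPM threshold."""
    return load_fraction(component_watts) < FANLESS_LOAD


# Illustrative steady-state budget; only the CPU TDP is a published figure,
# the other entries are assumed placeholders.
EXAMPLE_BUDGET: Dict[str, float] = {
    "Xeon D-1541 (45W TDP)": 45.0,
    "6x HDD (assumed 10W each)": 60.0,
    "NVMe SSDs, fans, board, misc (assumed)": 40.0,
}

if __name__ == "__main__":
    frac = load_fraction(EXAMPLE_BUDGET)
    print(f"Estimated load: {frac:.0%}, fan off: {fan_stays_off(EXAMPLE_BUDGET)}")
```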

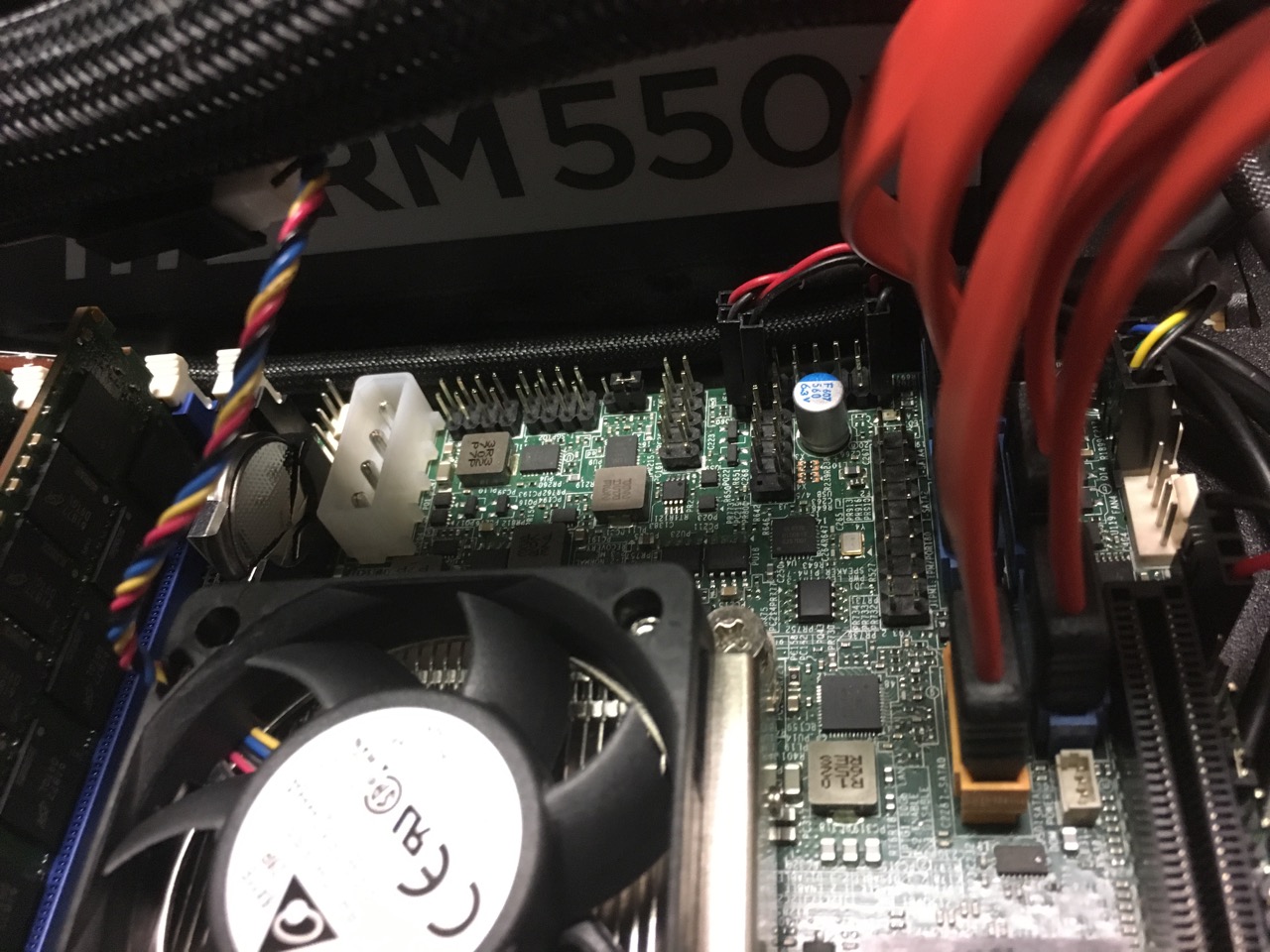

One of the trickier parts is wiring up the front panel connectors. Hopefully this picture helps anyone following in my footsteps.

The HD Audio cable is tucked under the motherboard. The USB3 front panel cable is temporarily looped around while I await an adapter; eventually I'll run it between the PSU and the motherboard edge to the other side. A PC buzzer speaker has been added. The M.2 SSD and SATA cables are already in place because I've already tested the system.

Also, the Node 304 has USB3 ports on its front panel, but the X10SDV only has USB2 headers on the motherboard (the rear ports are USB3). To connect the front ports to the motherboard you will need a USB3-to-USB2 motherboard header adapter, available for a few bucks on eBay; the front ports will then run at USB2 speeds. I routed the front panel USB3 cable between the PSU and the edge of the motherboard, then to the adapter, and on to the motherboard header. The main ATX cable is also looped around.

Fans!

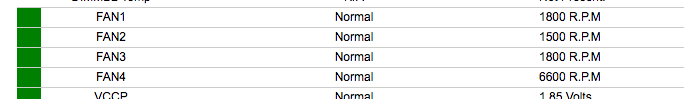

Once all the hardware was tested, I began thermal testing. Long story short, the CPU fan is obnoxious at full speed, and the stock Fractal fans can't be controlled by the motherboard, so keeping temperatures satisfactory meant running them at full speed, which I found too noisy. So I replaced them with Noctua PWM fans.

Because I'm now using 4 PWM fans (2x HD intake, 1x exhaust and 1x CPU), I need a fan control script. Luckily I've already written a dual-zone fan control script. Later on I will provide my tuned settings for this case/motherboard.
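The script itself isn't reproduced here, but as a rough illustration of what "dual-zone" means, here is a minimal sketch: one zone's duty follows the CPU temperature and the other follows the hottest HD, each via a simple threshold table (a stand-in, not the control law the real script uses). The zone assignment, temperature thresholds and duties are hypothetical placeholders. The `ipmitool raw 0x30 0x70 0x66 0x01 <zone> <duty>` command is the one commonly used to set a zone's duty on Supermicro X10 boards; confirm it on your own board before relying on it. The sketch only builds commands; it does not read sensors or execute anything.

```python
from typing import List, Sequence, Tuple

# Hypothetical zone assignment: CPU fan alone on one zone, HD and exhaust
# fans on the other. Swap these to match your own wiring.
CPU_ZONE = 1
HD_ZONE = 0

# Hypothetical (threshold_C, duty_percent) steps, lowest threshold first.
CPU_CURVE: Sequence[Tuple[int, int]] = [(0, 30), (50, 40), (60, 60), (70, 100)]
HD_CURVE: Sequence[Tuple[int, int]] = [(0, 30), (36, 40), (38, 60), (40, 100)]


def duty_for(temp_c: float, curve: Sequence[Tuple[int, int]]) -> int:
    """Return the duty of the highest step whose threshold temp_c has reached."""
    duty = curve[0][1]
    for threshold, step_duty in curve:
        if temp_c >= threshold:
            duty = step_duty
    return duty


def set_zone_cmd(zone: int, duty: int) -> List[str]:
    """Build (but do not run) the Supermicro raw command for one zone's duty."""
    if not 0 <= duty <= 100:
        raise ValueError("duty must be between 0 and 100")
    return ["ipmitool", "raw", "0x30", "0x70", "0x66", "0x01",
            f"0x{zone:02x}", f"0x{duty:02x}"]


def control_step(cpu_temp: float, hd_temps: Sequence[float]) -> List[List[str]]:
    """One iteration: each zone follows its own temperature source."""
    return [
        set_zone_cmd(CPU_ZONE, duty_for(cpu_temp, CPU_CURVE)),
        # The HD zone tracks the hottest drive, not the average.
        set_zone_cmd(HD_ZONE, duty_for(max(hd_temps), HD_CURVE)),
    ]
```

In a real loop you would read the CPU and drive temperatures (for example via `ipmitool sdr` and `smartctl -A`), call `control_step` periodically, and execute each argv with `subprocess.run`. On these boards the BMC fan mode generally needs to be set to Full first, otherwise the BMC may override manually set duties.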

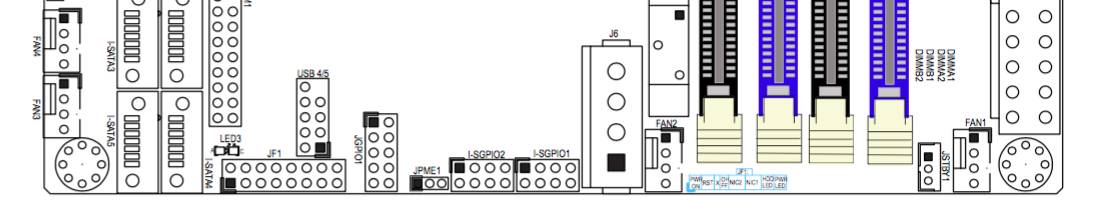

The X10SDV v2.0 board supports 4 fans and has 2 fan zones: FAN1-3 are Fan Zone 0 and FAN4 is Fan Zone 1. From the factory, the CPU fan is connected to FAN1. This means that, to control the HD and exhaust fans independently of the CPU fan, they all need to be ganged off FAN4. Alternatively, you can extend the CPU fan to FAN4 with one of the Noctua fan extension leads, then connect the HD fans to FAN1 and FAN3 and the exhaust fan to FAN2.

This also has the benefit of letting you see the RPM of *ALL* of your fans.
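The two wiring options come down to which headers share a zone. This small sketch encodes the alternative wiring and groups each fan by its header's zone (FAN1-3 in zone 0, FAN4 in zone 1, as described above), showing the CPU fan alone in zone 1 while the HD and exhaust fans share zone 0. The fan labels are illustrative. Because every fan gets its own header, each one reports its own tachometer reading, which you can list with `ipmitool sdr type Fan`; with a splitter, typically only one fan's RPM is reported.

```python
from collections import defaultdict
from typing import Dict, List

# Header-to-zone mapping for the X10SDV v2.0 board.
HEADER_ZONE: Dict[str, int] = {"FAN1": 0, "FAN2": 0, "FAN3": 0, "FAN4": 1}

# Alternative wiring: CPU fan extended to FAN4, HD fans on FAN1 and FAN3,
# exhaust on FAN2. The fan names are illustrative labels.
WIRING: Dict[str, str] = {
    "FAN1": "HD fan A",
    "FAN2": "Exhaust fan",
    "FAN3": "HD fan B",
    "FAN4": "CPU fan",
}


def fans_by_zone(wiring: Dict[str, str]) -> Dict[int, List[str]]:
    """Group the connected fans by the zone their header belongs to."""
    zones: Dict[int, List[str]] = defaultdict(list)
    for header in sorted(wiring):
        zones[HEADER_ZONE[header]].append(wiring[header])
    return dict(zones)


if __name__ == "__main__":
    for zone, fans in sorted(fans_by_zone(WIRING).items()):
        print(f"Zone {zone}: {', '.join(fans)}")
```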

It's easier to install the Noctua fans with their rubber bits if you start in the centre and then do the outsides. Position the cable tails at the bottom outside edges so they can reach the motherboard headers without extensions.

This fan's cable routes below the corner of the HD to the FAN1 connector. It just reaches without being too tight.
