David E

I've been playing with a lab server trying to understand the performance I am seeing, and figure out if there is some way to improve it.

Lab Setup:

ESXi 6 on a Dell R630, many cores, lots of RAM

FreeNAS 9.3 20161181840 on an Intel E3 1230, 32 GB of RAM, a single 840 Pro 128GB SSD for testing

Networking is Intel 10G SFP+ NICs connected via DACs

Storage connection is via NFSv3 (an intentional choice over iSCSI), and I've set sync=disabled for these tests. As you'd expect, writes are much slower with sync enabled.

Performance:

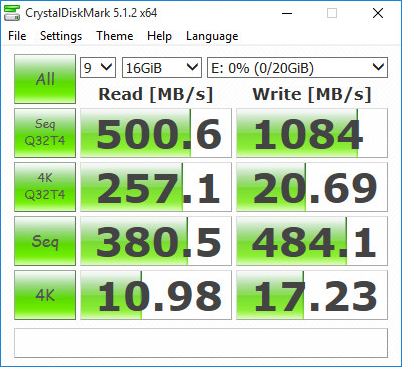

CrystalDiskMark, run in a Win10 VM with the settings shown in the screenshot above

Questions:

1. 4K random writes at both QD32 and QD1 seem very low, and I believe they are contributing to the slowness we feel in our production system. Benchmarked locally, this drive achieves 370 MB/s (17.8x what I see here) and 138 MB/s (8x), respectively. I recognize there is a lot of additional overhead here, but is anyone able to achieve higher values? And if so, how?

2. I'm also surprised that the random write performance doesn't grow to extremely high levels like sequential does when sync is turned off. Are random writes not batched up and laid out on disk sequentially?
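For anyone who wants the numbers in IOPS rather than MB/s, here is a rough back-of-the-envelope sketch. It assumes the multipliers in question 1 are the local result divided by the over-NFS result, that CrystalDiskMark's MB means 10^6 bytes, and that every I/O is exactly 4 KiB; the function names are just illustrative.

```python
# Rough arithmetic only: derives the implied over-NFS throughput from the
# local figures and ratios quoted above, then converts to 4 KiB IOPS.
# Assumptions: ratio = local / remote, 1 MB = 1_000_000 bytes, 4096-byte I/Os.

BLOCK_SIZE_BYTES = 4 * 1024


def implied_remote_mbps(local_mbps, ratio):
    """Throughput over NFS implied by a local result and a slowdown ratio."""
    return local_mbps / ratio


def mbps_to_iops(mbps, block_size=BLOCK_SIZE_BYTES):
    """Convert MB/s (decimal megabytes) into I/O operations per second."""
    return mbps * 1_000_000 / block_size


if __name__ == "__main__":
    cases = [("4K QD32", 370.0, 17.8), ("4K QD1", 138.0, 8.0)]
    for label, local_mbps, ratio in cases:
        remote = implied_remote_mbps(local_mbps, ratio)
        print(f"{label}: ~{remote:.1f} MB/s over NFS, "
              f"~{mbps_to_iops(remote):,.0f} IOPS "
              f"(local ~{mbps_to_iops(local_mbps):,.0f} IOPS)")
```

Looking at it as IOPS makes it easier to compare against per-request latency: at QD1, the IOPS figure is roughly the inverse of the average round-trip time per write.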
