Hi all, I'm stuck on FreeNAS performance tuning and would like to draw on your expertise. I'm focusing on write throughput (although read throughput isn't great either), which currently sits at about 20 MB/s when tested from ESXi. From reading posts on the forum, I have a rough idea of what helps:

(1) Force NFS async mode (to work around ESXi's NFS client, which requests synchronous writes):

- `zfs set sync=disabled`

- `vfs.nfsd.async=1`

(2) Enable autotune

(3) Have enough RAM and a proper NIC

(4) Stay away from RAID5/RAIDZ

(5) Set up a ZIL (SLOG) on an SSD (not an option for me)

(6) Set up an L2ARC on an SSD (not an option for me)

(7) Disable atime, dedup and compression
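For reference, the ZFS side of items (1) and (7) can be checked mechanically. The sketch below is a small awk filter; `check_props` is a hypothetical helper name (not a FreeNAS tool), and it assumes the tab-separated output of `zfs get -H -o name,property,value`. It prints only the properties that differ from the checklist, so empty output means the dataset already matches.

```shell
# check_props (hypothetical helper): report properties that differ from items (1) and (7).
# Expects the tab-separated, header-less output of:
#   zfs get -H -o name,property,value sync,atime,dedup,compression <dataset>
check_props() {
  awk -F'\t' '
    BEGIN {
      # values the checklist asks for
      want["sync"] = "disabled"
      want["atime"] = "off"
      want["dedup"] = "off"
      want["compression"] = "off"
    }
    # print only the properties whose current value differs from the wanted one
    ($2 in want) && $3 != want[$2] {
      printf "%s: %s=%s (checklist: %s)\n", $1, $2, $3, want[$2]
    }
  '
}
```

On the FreeNAS box it would be used as `zfs get -H -o name,property,value sync,atime,dedup,compression RAID6ZFS1/ORCHID | check_props`; the NFS server side of item (1) can be checked separately with `sysctl vfs.nfsd.async`.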

Even so, my write throughput is still far below what I expected. Could anyone point me in the right direction? If more information is needed, please don't hesitate to ask.

My hardware:

FreeNAS server:

- HP DL380 G8

- Intel(R) Xeon(R) CPU E5-2690 0 @ 2.90GHz

- Broadcom BCM5719 4-port Gigabit Ethernet

- 64 GB DDR3 ECC RAM

- FreeNAS 9.2.0-RELEASE booted from a flash drive (diskless)

DAS:

- EonStor R2240 with an EonStor J2000R expansion enclosure (JBOD)

- RAID controller: EonStor R2240 with 1 GB cache, equipped with 3x 300 GB 15k rpm SAS disks (I cannot disable the RAID controller function, so I let it manage the disks and expose LUNs to FreeNAS. In any case, disk I/O is not the issue.)

- JBOD: EonStor J2000R equipped with 10x 3 TB 7200 rpm SATA disks

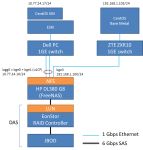

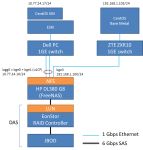

My configuration is shown in the following diagram.

FreeNAS is release 9.2.0, running default settings with autotune enabled.

Sysctl:

kern.ipc.maxsockbuf = 2097152 (Generated by autotune)

net.inet.tcp.recvbuf_max = 2097152 (Generated by autotune)

net.inet.tcp.sendbuf_max = 2097152 (Generated by autotune)

vfs.nfsd.async=1 (added by me)

Tunables:

vfs.zfs.arc_max = 47696026428 (Generated by autotune)

vm.kmem_size = 52995584921 (Generated by autotune)

vm.kmem_size_max = 66244481152 (Generated by autotune)
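The autotune values are easier to compare with the 64 GB of RAM once converted from bytes. A minimal sketch (the helper name `to_human` is hypothetical) that reads the `name = value` lines above and skips anything without a plain integer value, such as `vfs.nfsd.async=1`:

```shell
# to_human (hypothetical helper): show "name = bytes" tunables in MiB or GiB.
# Lines without " = <integer>" (e.g. "vfs.nfsd.async=1") are skipped.
to_human() {
  awk '$2 == "=" && $3 ~ /^[0-9]+$/ {
    v = $3 + 0
    if (v >= 1073741824)
      printf "%s = %.2f GiB\n", $1, v / 1073741824
    else
      printf "%s = %.2f MiB\n", $1, v / 1048576
  }'
}
```

By this arithmetic, `vfs.zfs.arc_max` is about 44.4 GiB, `vm.kmem_size` about 49.4 GiB, `vm.kmem_size_max` about 61.7 GiB, and the three socket-buffer limits are 2 MiB each.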

No L2ARC and no dedicated ZIL (SLOG) device.

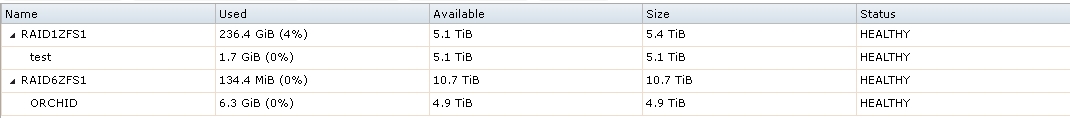

I have two zpools:

- RAID1ZFS1: not used in these tests; built from 4x 3 TB 7.2k rpm SATA disks

- RAID6ZFS1: the RAID controller builds a RAID6 array from 6x 3 TB 7.2k rpm SATA disks and exposes it to FreeNAS as a single LUN, on which I created a ZFS volume (stripe)
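As a sanity check on the RAID6 LUN: RAID6 over 6 disks leaves 4 disks' worth of data, i.e. 4 x 3 TB = 12 TB, which is about 10.91 TiB. That is consistent with the 10.9T that `zpool iostat` reports for RAID6ZFS1 below. A sketch of the calculation (`usable_tib` is a hypothetical helper; it ignores ZFS metadata and label overhead):

```shell
# usable_tib (hypothetical helper): usable space of an N-disk parity RAID in TiB,
# the binary unit zpool displays as "T". Arguments: disks, parity disks, disk size in decimal TB.
usable_tib() {
  awk -v n="$1" -v p="$2" -v tb="$3" 'BEGIN {
    data_disks = n - p                  # e.g. RAID6 spends p = 2 disks on parity
    printf "%.2f\n", data_disks * tb * 1e12 / 1099511627776
  }'
}
```

`usable_tib 6 2 3` prints `10.91` for RAID6ZFS1.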

I set `sync=disabled` on RAID6ZFS1:

```
[root@freenas ~]# zfs get sync
NAME              PROPERTY  VALUE     SOURCE
RAID1ZFS1         sync      standard  local
RAID1ZFS1/test    sync      standard  inherited from RAID1ZFS1
RAID6ZFS1         sync      disabled  local
RAID6ZFS1/ORCHID  sync      disabled  local
```

LACP link aggregation works fine.

Network Test

An `iperf -d` (bidirectional) test with default settings gives the following result:

```
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 64.0 KByte (default)
------------------------------------------------------------
[ 7] local 10.77.24.16 port 5001 connected with 10.77.24.17 port 54549
------------------------------------------------------------
Client connecting to 10.77.24.17, TCP port 5001
TCP window size: 96.5 KByte (default)
------------------------------------------------------------
[ 9] local 10.77.24.16 port 23951 connected with 10.77.24.17 port 5001
Waiting for server threads to complete. Interrupt again to force quit.
[ ID] Interval Transfer Bandwidth
[ 7] 0.0-10.0 sec 792 MBytes 663 Mbits/sec
[ 9] 0.0-10.1 sec 679 MBytes 566 Mbits/sec
```
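To compare these figures with the MB/s that dd reports, divide by 8: 663 Mbit/s is about 82.9 MB/s and 566 Mbit/s about 70.8 MB/s. `-d` runs both directions at the same time, so these are two concurrent one-way figures. Note also that LACP typically hashes each TCP connection onto a single member link, so one NFS connection is normally capped at one 1 Gbit/s port, i.e. 125 MB/s before protocol overhead. A minimal conversion sketch over the iperf result lines (`iperf_mbs` is a hypothetical helper name):

```shell
# iperf_mbs (hypothetical helper): convert the "Mbits/sec" column of iperf result
# lines to MB/s (1 MB = 10^6 bytes, 8 bits per byte).
iperf_mbs() {
  awk '$NF == "Mbits/sec" {
    id = substr($0, 1, index($0, "]"))  # stream id, e.g. "[ 7]"
    gsub(/ /, "", id)                   # -> "[7]"
    printf "%s %.1f MB/s\n", id, $(NF - 1) / 8
  }'
}
```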

Local Disk IO Test

A dd test run locally on FreeNAS gives the following result:

```
[root@freenas /mnt/RAID6ZFS1/ORCHID]# dd if=/dev/zero of=testfile bs=128k count=50k
51200+0 records in
51200+0 records out
6710886400 bytes transferred in 13.790086 secs (486645724 bytes/sec)
```
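The dd summary can be re-derived as a quick check: 6710886400 bytes in 13.790086 s is about 486.6 MB/s (464.1 MiB/s). Since the 6.7 GB written is well below the 64 GB of RAM, part of it may still have been buffered in memory when dd returned, so this figure may overstate sustained disk throughput. A sketch that parses FreeBSD's dd summary line (`dd_rate` is a hypothetical helper):

```shell
# dd_rate (hypothetical helper): recompute throughput from a FreeBSD dd summary line:
#   6710886400 bytes transferred in 13.790086 secs (486645724 bytes/sec)
dd_rate() {
  awk '/bytes transferred in/ {
    rate = $1 / $5                       # bytes divided by seconds
    printf "%.1f MB/s (%.1f MiB/s)\n", rate / 1e6, rate / 1048576
  }'
}
```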

An iozone test gives the following result:

```
[root@freenas ~]# iozone -R -l 2 -u 2 -r 128k -s 1000m -F /mnt/RAID6ZFS1/ORCHID/f1 /mnt/RAID6ZFS1/ORCHID/f2
"Throughput report Y-axis is type of test X-axis is number of processes"
"Record size = 128 Kbytes "
"Output is in Kbytes/sec"
" Initial write " 6145867.75
" Rewrite " 2953791.75
" Read " 6836807.50
" Re-read " 8055269.75
" Reverse Read " 5743218.00
" Stride read " 6824206.75
" Random read " 8265411.25
" Mixed workload " 5935495.25
" Random write " 4638376.50
" Pwrite " 4055769.38
" Pread " 7961281.00
" Fwrite " 2854679.12
" Fread " 6021551.75
```

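The `-R` report is in Kbytes/sec; treating iozone's Kbyte as 1024 bytes, the initial write above is roughly 6001.8 MiB/s. The test set is 2 x 1000 MB (`-l 2 -u 2 -s 1000m`), far smaller than the 64 GB of RAM, so these local numbers most likely reflect the ARC rather than the disks. A sketch that converts the report lines (`iozone_mibs` is a hypothetical helper):

```shell
# iozone_mibs (hypothetical helper): convert iozone -R report lines such as
#   " Initial write " 6145867.75
# from Kbytes/sec (taken here as 1024-byte units) to MiB/s; header lines are skipped.
iozone_mibs() {
  awk -F'"' 'NF == 3 && $3 ~ /[0-9]/ {
    label = $2
    gsub(/^ +| +$/, "", label)           # trim the padding around the test name
    printf "%s: %.1f MiB/s\n", label, $3 / 1024
  }'
}
```

The same filter applied to the NFS run below turns the 31658.41 Kbytes/sec initial write into about 30.9 MiB/s.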
From the above, I assume the network and disk I/O each work fine on their own. Next I ran the same dd test over an NFS mount from CentOS-VM and CentOS-BareMetal, and that is where my problem appears:

NFS test from CentOS-VM:

```
[root@localhost etc]# mount -t nfs -o tcp,async 10.77.24.16:/mnt/RAID6ZFS1/ORCHID /mnt/ORCHID/
```

- dd test (interrupted with Ctrl-C):

```
^C18625+0 records in
18625+0 records out
2441216000 bytes (2.4 GB) copied, 90.2074 s, 27.1 MB/s
```

- iozone test:

```
"Throughput report Y-axis is type of test X-axis is number of processes"
"Record size = 128 Kbytes "
"Output is in Kbytes/sec"
" Initial write " 31658.41
" Rewrite " 28010.82
" Read " 95170.81
" Re-read " 114052.75
" Reverse Read " 216960.58
" Stride read " 414234.79
" Random read " 3097005.52
" Mixed workload " 37324.00
" Random write " 28707.09
" Pwrite " 29535.80
" Pread " 108033.00
" Fwrite " 29034.48
" Fread " 109954.27
iozone test complete.
```
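The interrupted client-side dd run can be decoded the same way: 2441216000 bytes / 18625 records = 131072 bytes, so it used 128 KiB blocks like the local `bs=128k` test, and 2441216000 bytes in 90.2074 s is about 27.06 MB/s. A sketch that derives both from GNU dd's summary (`dd_summary` is a hypothetical helper):

```shell
# dd_summary (hypothetical helper): derive block size and throughput from a GNU dd
# summary (the "records out" line plus the "bytes ... copied" line).
dd_summary() {
  awk '
    / records out$/ { split($1, r, "+"); recs = r[1] }  # "18625+0 records out"
    / copied, /     { bytes = $1; secs = $6 }          # "... copied, 90.2074 s, 27.1 MB/s"
    END {
      printf "block size: %d bytes\n", bytes / recs
      printf "throughput: %.2f MB/s\n", bytes / secs / 1e6
    }'
}
```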

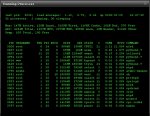

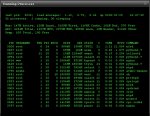

While the dd test was running:

- top

- zpool iostat:

```
[root@freenas ~]# zpool iostat 1
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.74G 10.9T 0 523 0 60.5M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.74G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.74G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.80G 10.9T 0 542 0 62.8M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.80G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.80G 10.9T 0 265 0 33.2M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.87G 10.9T 0 267 0 28.4M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.87G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.87G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.93G 10.9T 0 523 0 60.6M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.93G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.93G 10.9T 0 390 0 48.8M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.99G 10.9T 0 134 0 11.8M
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.99G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
RAID1ZFS1 238G 5.20T 0 0 0 0
RAID6ZFS1 7.99G 10.9T 0 0 0 0
---------- ----- ----- ----- ----- ----- -----
```

- ifstat:
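The `zpool iostat 1` samples above can be summarized to quantify the idle time. Assuming the default column order (pool, alloc, free, read ops, write ops, read bandwidth, write bandwidth) and zpool's binary K/M/G suffixes, the sketch below averages the last column for one pool (`avg_write_bw` is a hypothetical helper). Over the 15 RAID6ZFS1 samples above, 8 seconds show no writes at all and the average is about 20.4M/s, in line with what the NFS client sees. The pattern of bursts separated by idle seconds looks like ZFS periodically flushing transaction groups rather than disks that are busy all the time.

```shell
# avg_write_bw (hypothetical helper): average the write-bandwidth column of one pool
# in `zpool iostat 1` output. Assumes the default column order
#   pool alloc free read-ops write-ops read-bw write-bw
# with zpool's binary K/M/G suffixes; the result is printed in M (MiB) per second.
avg_write_bw() {
  awk -v pool="$1" '
    # convert a zpool bandwidth value ("60.5M", "512K", "1.2G", "0") to MiB;
    # awk uses the leading numeric part of a string, so "60.5M" + 0 == 60.5
    function mib(v) {
      if (v ~ /K$/) return v / 1024
      if (v ~ /M$/) return v + 0
      if (v ~ /G$/) return v * 1024
      return v / 1048576                 # no suffix: plain bytes
    }
    $1 == pool { w = mib($NF); n++; sum += w; if (w == 0) idle++ }
    END {
      if (n > 0)
        printf "%d samples, %d idle, average write %.1fM/s\n", n, idle, sum / n
    }
  '
}
```

Usage on the server: `zpool iostat 1 | avg_write_bw RAID6ZFS1` (interrupt it once enough samples are collected; awk prints the summary when its input ends).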

From CentOS bare metal the results are slightly better, but still far from what I expected.

It seems to me that the network is not saturated (far below the iperf result) and disk I/O is not saturated either (lots of idle time in iostat). So I'm wondering where FreeNAS is choking to give only around 20 MB/s of write throughput. I understand I don't have the best rationale for my current settings and that the parameters may not be optimal, but it still shouldn't perform this badly, should it? Thanks greatly for your help.