NightNetworks

Explorer

- Joined: Sep 6, 2015
- Messages: 61

Inside of FreeNAS I have a volume with a total size of 109GB. I have created a 90GB ZVOL that is shared via iSCSI to an ESXi host. Obviously that far exceeds the recommendation of not using more than 50% of the volume when using iSCSI, and I suspect that it will stop working soon... I am posting here because I am a little confused by the used values currently shown. While I am fairly certain that the numbers below are due to the whole 50% thing above, I just wanted to confirm.

So my questions are as follows...

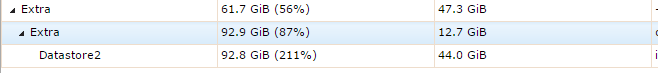

- If the total volume is 109GB in size and I created a 90GB ZVOL, why is FreeNAS reporting volume usage of 61.7GB (56%) with 47.3GB of free space?

- The ZVOL "datastore2" is showing 92.87GB of used space, which makes sense, but it's reporting the percent used as 211%. I am assuming that this has something to do with the fact that I am using more than 50% of the total volume, or is it something else?

Just to repeat: I know that I should not be using more than 50% of the volume and that this is a really bad idea; I am just trying to understand the numbers that I am seeing.

Thanks in advance.
