pixel_punk

Cadet · Joined Apr 29, 2020 · Messages: 3

Hello everyone!

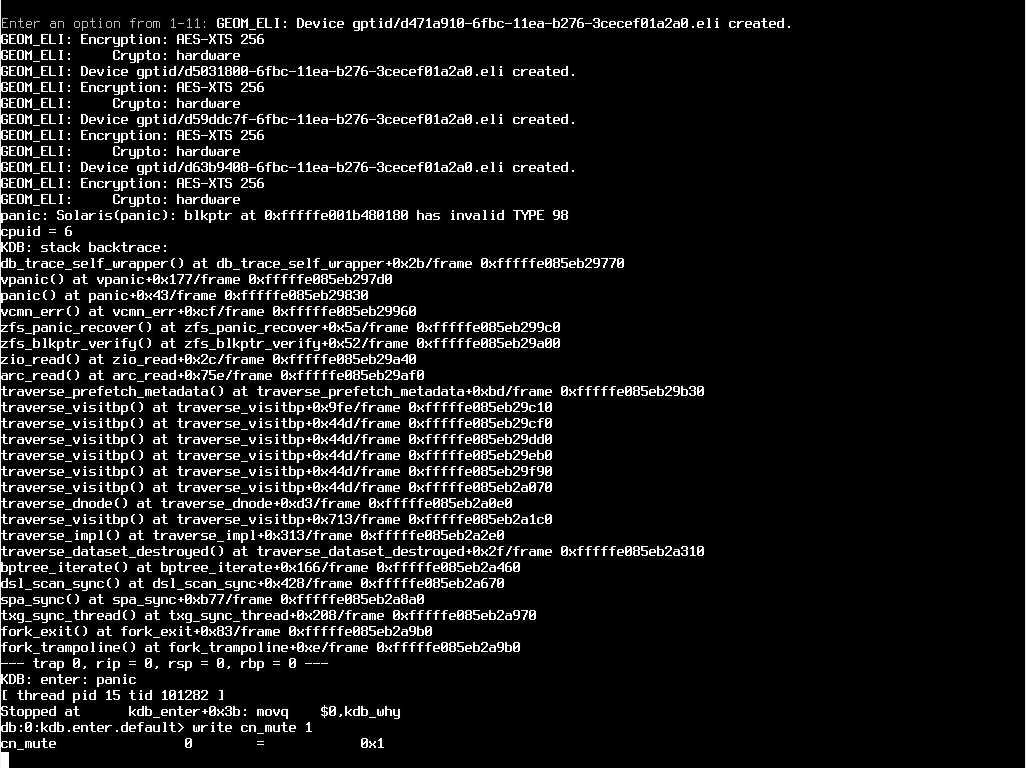

When deleting a jail in the GUI, the server suddenly crashed and rebooted.

When it came up again, I tried to unlock my encrypted pool as usual, which led to another unexpected reboot.

So I logged in via SSH and tried to manually unlock the devices and import the pool.

Following this guide I successfully attached all devices:

Code:

root@strider:~ # geli status

Name Status Components

mirror/swap0.eli ACTIVE mirror/swap0

mirror/swap1.eli ACTIVE mirror/swap1

gptid/d63b9408-6fbc-11ea-b276-3cecef01a2a0.eli ACTIVE gptid/d63b9408-6fbc-11ea-b276-3cecef01a2a0

gptid/d59ddc7f-6fbc-11ea-b276-3cecef01a2a0.eli ACTIVE gptid/d59ddc7f-6fbc-11ea-b276-3cecef01a2a0

gptid/d5031800-6fbc-11ea-b276-3cecef01a2a0.eli ACTIVE gptid/d5031800-6fbc-11ea-b276-3cecef01a2a0

gptid/d471a910-6fbc-11ea-b276-3cecef01a2a0.eli ACTIVE gptid/d471a910-6fbc-11ea-b276-3cecef01a2a0

But zpool import tank crashed the system again with this output:

So I followed these threads/posts:

ZFS import crashes freenas

Hi, after upgrading to 9.2.1 every thing worked like a charm. Yesterday my nas suddenly crashed. The console displayed: KDB: enter: panic Rebooting did not help, so I made a new USB stick. Now, when I try to import the ZFS volume (GUI of CLI), my console gets tons of errors and reboots. Errors...

Since I am using ECC RAM, this should not be a faulty memory module problem...

The partitions look good:

Code:

root@strider:~ # gpart status

Name Status Components

ada0p1 OK ada0

ada0p2 OK ada0

ada1p1 OK ada1

ada1p2 OK ada1

ada2p1 OK ada2

ada2p2 OK ada2

ada3p1 OK ada3

ada3p2 OK ada3

ada4p1 OK ada4

ada4p2 OK ada4

But this doesn't:

Code:

root@strider:~ # zdb -e -bcsvL tank

Traversing all blocks to verify checksums ...

Assertion failed: (blkptr at 0x80b01eb80 has invalid TYPE 98), file (null), line 0.

Abort (core dumped)

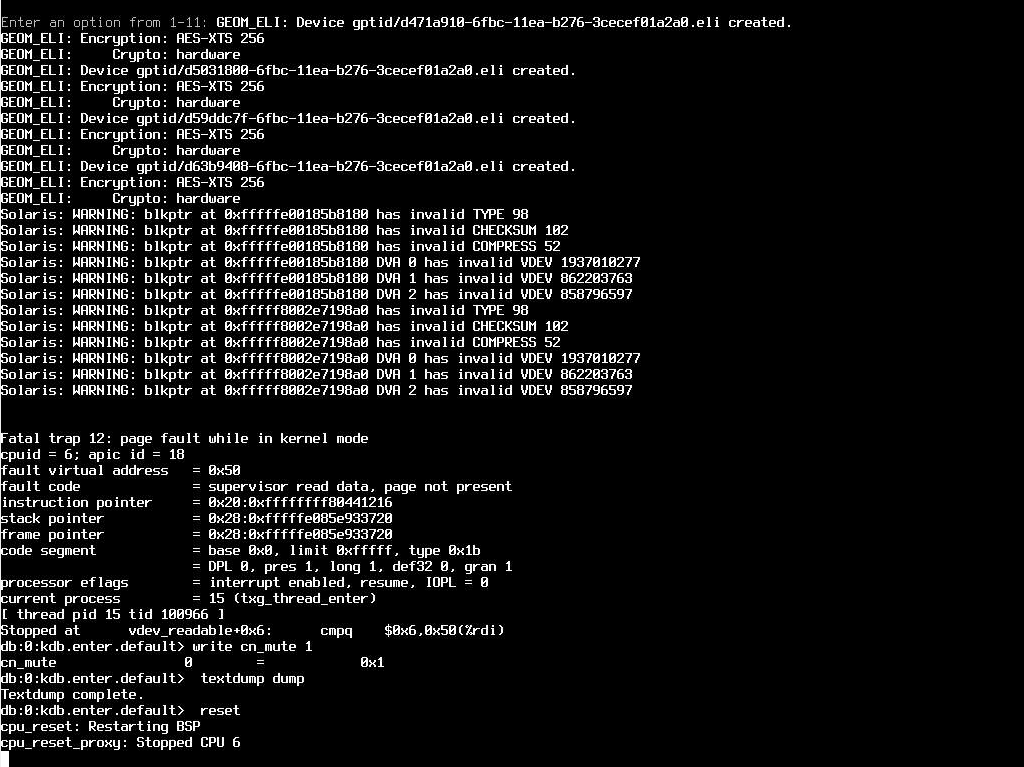

Also, zpool import -F tank (as suggested by Oracle) produces another system crash:

But I managed to import the pool read-only:

zpool import -F -f -o readonly=on -R /mnt tank

...woohoo!

And it looks good:

Code:

root@strider:~ # zpool status -v tank

pool: tank

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

gptid/d471a910-6fbc-11ea-b276-3cecef01a2a0.eli ONLINE 0 0 0

gptid/d5031800-6fbc-11ea-b276-3cecef01a2a0.eli ONLINE 0 0 0

gptid/d59ddc7f-6fbc-11ea-b276-3cecef01a2a0.eli ONLINE 0 0 0

gptid/d63b9408-6fbc-11ea-b276-3cecef01a2a0.eli ONLINE 0 0 0

errors: No known data errors

So it seems that there is something wrong when importing that pool as RW.
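For anyone retracing the manual steps described above (attach the GELI providers, then import read-only), here is a minimal dry-run sketch. It only prints the commands rather than executing them. The key file path is a hypothetical placeholder and not taken from this post; whether `-p` is also needed depends on whether the pool has a passphrase, which is an assumption you have to check for your own setup. The gptids and the import command are copied from the output above.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of running them.
# KEY is a hypothetical key file path, NOT taken from the post.
KEY="${KEY:-/path/to/pool.key}"
POOL="tank"

# gptids of the four pool members, as shown by geli status above
GPTIDS="d471a910-6fbc-11ea-b276-3cecef01a2a0
d5031800-6fbc-11ea-b276-3cecef01a2a0
d59ddc7f-6fbc-11ea-b276-3cecef01a2a0
d63b9408-6fbc-11ea-b276-3cecef01a2a0"

print_recovery_plan() {
    for id in $GPTIDS; do
        # -k names the key file; add -p if no passphrase is set (assumption)
        echo "geli attach -k $KEY /dev/gptid/$id"
    done
    # read-only import, exactly as used in the post
    echo "zpool import -F -f -o readonly=on -R /mnt $POOL"
}

print_recovery_plan
```

Printing first and running the lines by hand keeps a typo from touching the disks, which seems prudent while the pool panics on a read-write import.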

Does anyone have an idea what went wrong and how to fix it? I really don't want to pull 20TB from backup :o

System info:

FreeNAS-11.2-U8

Supermicro A2SDi-8C+-HLN4F

64GB DDR4-2400 regECC RAM

4x 8TB WD80EFAX-68KNBN0 (pool)

1x 250GB Samsung 860 EVO (boot)

Code:

root@strider:~ # zpool history -i tank

History for 'tank':

2020-03-27.00:52:23 [txg:5] create pool version 5000; software version 5000/5; uts strider.local 11.2-STABLE 1102500 amd64

2020-03-27.00:52:23 [txg:5] set tank (21) compression=15

2020-03-27.00:52:23 [txg:5] set tank (21) aclmode=3

2020-03-27.00:52:23 [txg:5] set tank (21) aclinherit=3

2020-03-27.00:52:23 [txg:5] set tank (21) mountpoint=/tank

2020-03-27.00:52:23 zpool create -o cachefile=/data/zfs/zpool.cache -o failmode=continue -o autoexpand=on -O compression=lz4 -O aclmode=passthrough -O aclinherit=passthrough -f -m /tank -o altroot=/mnt tank raidz /dev/gptid/d471a910-6fbc-11ea-b276-3cecef01a2a0.eli /dev/gptid/d5031800-6fbc-11ea-b276-3cecef01a2a0.eli /dev/gptid/d59ddc7f-6fbc-11ea-b276-3cecef01a2a0.eli /dev/gptid/d63b9408-6fbc-11ea-b276-3cecef01a2a0.eli

2020-03-27.00:52:23 [txg:7] inherit tank (21) mountpoint=/

2020-03-27.00:52:28 zfs inherit mountpoint tank

2020-03-27.00:52:28 zpool set cachefile=/data/zfs/zpool.cache tank

[...]

2020-04-29.13:53:57 zfs destroy -d tank/iocage/jails/plex@auto-20200405.0428-2w

2020-04-29.16:19:33 [txg:869679] destroy tank/iocage/jails/plex/root@auto-20200421.0428-2w (395)

2020-04-29.16:19:33 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200421.0428-2w

2020-04-29.16:19:33 [txg:869680] destroy tank/iocage/jails/plex/root@auto-20200425.0428-2w (465)

2020-04-29.16:19:33 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200425.0428-2w

2020-04-29.16:19:33 [txg:869681] destroy tank/iocage/jails/plex/root@auto-20200429.0428-2w (579)

2020-04-29.16:19:33 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200429.0428-2w

2020-04-29.16:19:33 [txg:869682] destroy tank/iocage/jails/plex/root@auto-20200417.0428-2w (282)

2020-04-29.16:19:33 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200417.0428-2w

2020-04-29.16:19:33 [txg:869683] destroy tank/iocage/jails/plex/root@auto-20200416.0428-2w (264)

2020-04-29.16:19:34 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200416.0428-2w

2020-04-29.16:19:34 [txg:869684] destroy tank/iocage/jails/plex/root@auto-20200428.0428-2w (550)

2020-04-29.16:19:34 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200428.0428-2w

2020-04-29.16:19:34 [txg:869685] destroy tank/iocage/jails/plex/root@auto-20200420.0428-2w (350)

2020-04-29.16:19:35 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200420.0428-2w

2020-04-29.16:19:35 [txg:869686] destroy tank/iocage/jails/plex/root@auto-20200424.0428-2w (446)

2020-04-29.16:19:35 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200424.0428-2w

2020-04-29.16:19:35 [txg:869687] destroy tank/iocage/jails/plex/root@ioc_plugin_update_2019-10-07 (384)

2020-04-29.16:19:35 <iocage> zfs destroy tank/iocage/jails/plex/root@ioc_plugin_update_2019-10-07

2020-04-29.16:19:35 [txg:869688] destroy tank/iocage/jails/plex/root@auto-20200418.0428-2w (300)

2020-04-29.16:19:35 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200418.0428-2w

2020-04-29.16:19:35 [txg:869689] destroy tank/iocage/jails/plex/root@auto-20200422.0428-2w (410)

2020-04-29.16:19:35 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200422.0428-2w

2020-04-29.16:19:35 [txg:869690] destroy tank/iocage/jails/plex/root@auto-20200426.0428-2w (486)

2020-04-29.16:19:36 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200426.0428-2w

2020-04-29.16:19:36 [txg:869691] destroy tank/iocage/jails/plex/root@ioc_update_11.2-RELEASE-p6 (373)

2020-04-29.16:19:38 <iocage> zfs destroy tank/iocage/jails/plex/root@ioc_update_11.2-RELEASE-p6

2020-04-29.16:19:38 [txg:869693] destroy tank/iocage/jails/plex/root@auto-20200423.0428-2w (430)

2020-04-29.16:19:39 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200423.0428-2w

2020-04-29.16:19:39 [txg:869695] destroy tank/iocage/jails/plex/root@auto-20200427.0428-2w (515)

2020-04-29.16:19:40 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200427.0428-2w

2020-04-29.16:19:40 [txg:869696] destroy tank/iocage/jails/plex/root@auto-20200419.0428-2w (320)

2020-04-29.16:19:42 <iocage> zfs destroy tank/iocage/jails/plex/root@auto-20200419.0428-2w

2020-04-29.16:19:44 [txg:869700] destroy tank/iocage/jails/plex/root (369)

2020-04-29.16:19:46 <iocage> zfs destroy tank/iocage/jails/plex/root

2020-04-29.16:19:46 [txg:869702] destroy tank/iocage/jails/plex@auto-20200428.0428-2w (767)

2020-04-29.16:19:48 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200428.0428-2w

2020-04-29.16:19:48 [txg:869704] destroy tank/iocage/jails/plex@auto-20200420.0428-2w (669)

2020-04-29.16:19:50 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200420.0428-2w

2020-04-29.16:19:50 [txg:869706] destroy tank/iocage/jails/plex@auto-20200424.0428-2w (720)

2020-04-29.16:19:53 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200424.0428-2w

2020-04-29.16:19:53 [txg:869708] destroy tank/iocage/jails/plex@auto-20200416.0428-2w (597)

2020-04-29.16:19:55 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200416.0428-2w

2020-04-29.16:19:55 [txg:869710] destroy tank/iocage/jails/plex@manual-20200413_movingto_beta-branche (1059)

2020-04-29.16:19:57 <iocage> zfs destroy tank/iocage/jails/plex@manual-20200413_movingto_beta-branche

2020-04-29.16:19:57 [txg:869712] destroy tank/iocage/jails/plex@auto-20200417.0428-2w (614)

2020-04-29.16:19:59 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200417.0428-2w

2020-04-29.16:19:59 [txg:869714] destroy tank/iocage/jails/plex@ioc_update_11.2-RELEASE-p6 (632)

2020-04-29.16:20:02 <iocage> zfs destroy tank/iocage/jails/plex@ioc_update_11.2-RELEASE-p6

2020-04-29.16:20:02 [txg:869716] destroy tank/iocage/jails/plex@ioc_plugin_update_2019-10-07 (645)

2020-04-29.16:20:04 <iocage> zfs destroy tank/iocage/jails/plex@ioc_plugin_update_2019-10-07

2020-04-29.16:20:04 [txg:869718] destroy tank/iocage/jails/plex@auto-20200421.0428-2w (682)

2020-04-29.16:20:06 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200421.0428-2w

2020-04-29.16:20:06 [txg:869720] destroy tank/iocage/jails/plex@auto-20200425.0428-2w (731)

2020-04-29.16:20:09 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200425.0428-2w

2020-04-29.16:20:09 [txg:869722] destroy tank/iocage/jails/plex@auto-20200429.0428-2w (777)

2020-04-29.16:20:11 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200429.0428-2w

2020-04-29.16:20:11 [txg:869724] destroy tank/iocage/jails/plex@auto-20200419.0428-2w (659)

2020-04-29.16:20:13 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200419.0428-2w

2020-04-29.16:20:13 [txg:869726] destroy tank/iocage/jails/plex@auto-20200423.0428-2w (710)

2020-04-29.16:20:15 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200423.0428-2w

2020-04-29.16:20:15 [txg:869728] destroy tank/iocage/jails/plex@auto-20200427.0428-2w (757)

2020-04-29.16:20:17 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200427.0428-2w

2020-04-29.16:20:17 [txg:869730] destroy tank/iocage/jails/plex@auto-20200422.0428-2w (700)

2020-04-29.16:20:20 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200422.0428-2w

2020-04-29.16:20:20 [txg:869732] destroy tank/iocage/jails/plex@auto-20200426.0428-2w (746)

2020-04-29.16:20:22 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200426.0428-2w

2020-04-29.16:20:22 [txg:869734] destroy tank/iocage/jails/plex@auto-20200418.0428-2w (646)

2020-04-29.16:20:24 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200418.0428-2w

2020-04-29.16:20:26 [txg:869738] destroy tank/iocage/jails/plex (363)

2020-04-29.16:20:28 <iocage> zfs destroy tank/iocage/jails/plex

2020-04-29.16:20:31 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200428.0428-2w

2020-04-29.16:20:33 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200420.0428-2w
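To see at a glance which transaction group was the last one logged before the crash, the history output can be filtered for its `[txg:N]` tags. This is only a small sketch: `last_txg` is a made-up helper name, and the sample lines are copied from the history above.

```shell
#!/bin/sh
# last_txg: read `zpool history -i` output on stdin and print the
# highest transaction group number found in a [txg:N] tag.
# (last_txg is a hypothetical helper name, not a ZFS tool.)
last_txg() {
    sed -n 's/.*\[txg:\([0-9][0-9]*\)].*/\1/p' | sort -n | tail -n 1
}

# Example with three lines copied from the history above
printf '%s\n' \
    '2020-04-29.16:20:24 <iocage> zfs destroy tank/iocage/jails/plex@auto-20200418.0428-2w' \
    '2020-04-29.16:20:26 [txg:869738] destroy tank/iocage/jails/plex (363)' \
    '2020-04-29.16:19:44 [txg:869700] destroy tank/iocage/jails/plex/root (369)' \
    | last_txg
```

On the sample lines this prints 869738. Against the live pool it would be used as `zpool history -i tank | last_txg`.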