Ahoy ahoy friends.

I was running a pool of 8x 4TB HDDs plus 1 hot spare. One day a power issue caused 3 HDDs to be thrown out of the pool, and this seems to have damaged the pool.

I could no longer mount the pool in FreeNAS 11.3, because the whole ZFS subsystem started to hang whenever I ran zpool status or any other ZFS command.

So I moved to TrueNAS, where I was at least able to complete the resilvering process, but immediately after the resilver finished it said: "Solaris: warning pool has encountered an uncorrectable io error suspended".

After a reboot I tried to access the pool again, but the ZFS subsystem is now stuck there as well, and even "zpool import -a" doesn't complete.

What's the best way to proceed from here?

I'd like to at least run a scrub now. I don't really expect to recover any data, but I'd like to get a picture of my dataset hierarchy. Although I was able to access the pool while it was resilvering, it now seems impossible to mount it.
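
A read-only import might be one way to at least see the dataset hierarchy without writing to the pool. The following is only a sketch under assumptions: the pool name `data` is taken from the status output in this post, the alternate root `/mnt/recover` and the function name are made up for illustration, and the function prints the commands instead of running them so they can be reviewed first. Note that a scrub cannot run on a pool imported read-only.

```sh
#!/bin/sh
# Print (do not run) a cautious read-only import sequence.
# The function name and the default alternate root are hypothetical.
print_ro_import_plan() {
    pool="$1"
    altroot="${2:-/mnt/recover}"
    # -o readonly=on : import without writing to the pool
    # -N             : do not mount any datasets
    # -m             : allow import although a log device is missing
    # -R             : use an alternate root for mountpoints
    echo "zpool import -o readonly=on -N -m -R $altroot $pool"
    # List the dataset hierarchy without mounting anything.
    echo "zfs list -r -t filesystem,volume -o name,used,mountpoint $pool"
}

# Usage: print_ro_import_plan data
```

If the pool was last used on another system, the import may additionally need `-f`; whether it gets past the hang at all is an open question.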

I also can't access the pool from a Linux live system, because I once had the bad idea of enabling GELI encryption for the pool on FreeNAS.

Any ideas? Maybe try FreeBSD or something similar to get access?

I see that people have reported success using a Linux live system with ZoL.

Unfortunately, I am not able to decrypt the GELI encryption there.

What is the best way to get rid of the encryption? Maybe decrypt the disks on FreeBSD and dd them to another disk?
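
To make the "decrypt on FreeBSD and dd" idea concrete, here is a rough sketch of what it could look like per disk. Everything specific is an assumption: the key file path and target device are placeholders, the function name is made up, and `-p` assumes the provider uses a key file without a passphrase (drop it if a passphrase was set). The function only prints the commands, it does not run them.

```sh
#!/bin/sh
# Print (do not run) the commands to attach one GELI provider read-only
# and copy its decrypted contents to another disk.
# Function name, key path and target device are hypothetical placeholders.
print_decrypt_copy_plan() {
    src="$1"   # encrypted partition, e.g. da0p2
    key="$2"   # GELI key file saved from FreeNAS (path is an assumption)
    dst="$3"   # target disk, at least as large as the source partition
    # -r: attach read-only, -p: no passphrase component, -k: key file.
    # A successful attach creates /dev/<src>.eli with the decrypted data.
    echo "geli attach -r -p -k $key /dev/$src"
    # conv=noerror,sync continues past read errors and pads short reads.
    echo "dd if=/dev/$src.eli of=/dev/$dst bs=1m conv=noerror,sync"
    echo "geli detach $src.eli"
}

# Usage: print_decrypt_copy_plan da0p2 /root/pool.key da8
```

The copy would overwrite the target disk completely, and it would have to be repeated for every pool member, so this needs as many spare disks as there are members to copy.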

EDIT: Unfortunately, I get the same issue on FreeBSD 14.0.

EDIT2:

I manually degraded the pool by removing one disk, and now I am able to get it working somehow.

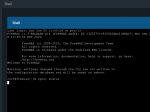

Code:

    root@recover:/tmp # zpool status
      pool: data
     state: DEGRADED
    status: One or more devices is currently being resilvered.  The pool will
            continue to function, possibly in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Tue Jun 22 10:12:03 2021
            1.16T scanned at 22.9G/s, 118M issued at 2.27M/s, 16.6T total
            0B resilvered, 0.00% done, no estimated completion time
    config:

            NAME                        STATE     READ WRITE CKSUM
            data                        DEGRADED     0     0     0
              raidz2-0                  DEGRADED     0     0     0
                da2p2.eli               ONLINE       0     0    47
                da7p2.eli               ONLINE       0     0     0
                da4p2.eli               ONLINE       0     0     0
                da0p2.eli               ONLINE       0     0     0
                da6p2.eli               ONLINE       0     0    47
                spare-5                 DEGRADED     0     0     0
                  12219767935346166654  UNAVAIL      0     0     0  was /dev/gptid/9b4a1344-6596-11eb-a75c-8f89b0c061c4.eli
                  da5p2.eli             ONLINE       0     0     0
                da1p2.eli               ONLINE       0     0     0
                da3p2.eli               ONLINE       0     0     0
            logs
              12893365534936794026      UNAVAIL      0     0     0  was /dev/gptid/080a5406-7e88-11eb-bffa-b95a0a9e4218.eli

    errors: 6 data errors, use '-v' for a list
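
To pick the suspicious entries out of a listing like this, a small filter can help. This is just a sketch: the function name is made up, and it assumes the usual `NAME STATE READ WRITE CKSUM` column layout of `zpool status`. It prints every config row that is not ONLINE or that has a non-zero error counter.

```sh
#!/bin/sh
# Read `zpool status` output on stdin and print the config rows that are
# either not ONLINE or show non-zero READ/WRITE/CKSUM counters.
# The function name is hypothetical.
flag_suspect_vdevs() {
    awk '
        # Only rows whose second field is a known vdev state count as config rows.
        NF >= 5 && $2 ~ /^(ONLINE|DEGRADED|UNAVAIL|FAULTED|OFFLINE|REMOVED)$/ &&
        ($2 != "ONLINE" || $3 + $4 + $5 > 0) {
            print $1, $2, "cksum=" $5
        }
    '
}

# Usage: zpool status data | flag_suspect_vdevs
```

For the output above this would flag the pool and raidz2 rows, both disks with 47 checksum errors, the spare-5 group and the two UNAVAIL devices.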
