DigitalMinimalist (Contributor, joined Jul 24, 2022, 162 messages)

Hello,

I have been running TrueNAS Scale for many months with this pool design (see also my old thread):

- ASRock Rack X470D4U with 2700X and 4x 16GB ECC RAM

- 4x 16TB Toshiba HDDs as striped mirrors

- 2x Micron 7300 Pro 960GB mirrored as special vDev (metadata)

- no L2ARC

- no SLOG

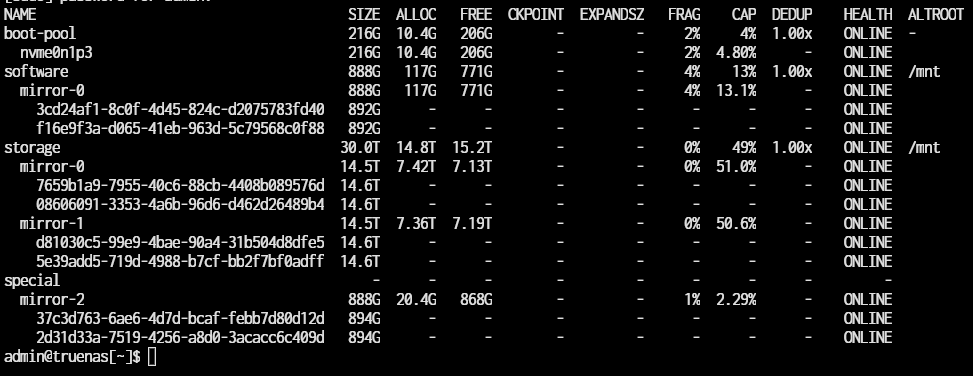

With "sudo zpool list -v" I get the following picture.

At first glance it looks OK, but I notice that "only" 20GB of the 868GB on the special metadata device are used, AND it's only showing 6 files at 1K?!

Any suggestions?

sudo zpool list -v

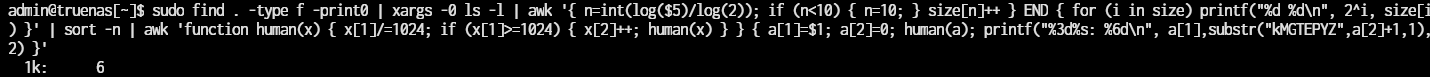

find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTPEZY",a[2]+1,1),$2) }'

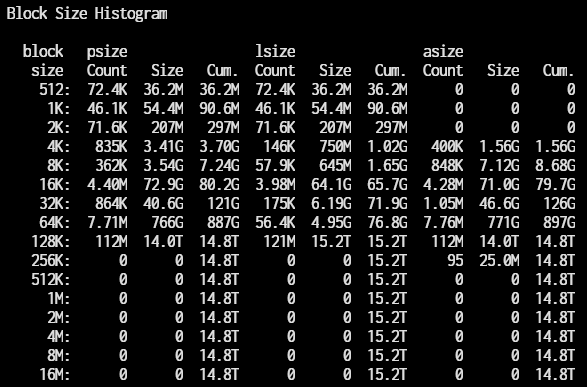
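The `find`/`awk` pipeline above sorts files into power-of-two size buckets, counting anything under 1K as 1K. A minimal Python sketch of the same idea, which may be easier to read and adapt; the function names are hypothetical, and like the original it counts apparent file sizes, not the ZFS block sizes that actually get allocated.

```python
import os
import stat
from collections import Counter


def size_histogram(root):
    """Count regular files under root by power-of-two size bucket.

    Buckets are keyed by exponent: 10 means 1K, 11 means 2K, 20 means 1M.
    Files smaller than 1K (including empty ones) land in the 1K bucket,
    mirroring the `if (n<10) { n=10; }` clamp in the awk version.
    """
    buckets = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):  # does not follow dir symlinks
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))
            except OSError:
                continue  # vanished or unreadable file: skip it
            if not stat.S_ISREG(st.st_mode):
                continue  # only regular files, like `find -type f`
            # bit_length() - 1 is an exact floor(log2(size)) without float rounding
            buckets[max(st.st_size.bit_length() - 1, 10)] += 1
    return buckets


def format_histogram(buckets):
    """Render buckets in the same '%3d%s: %6d' layout as the awk one-liner."""
    units = "kMGTPEZY"
    lines = []
    for exp in sorted(buckets):
        idx = (exp - 10) // 10  # 0 -> k, 1 -> M, 2 -> G, ...
        value = 2 ** (exp - 10 - 10 * idx)
        lines.append(f"{value:3d}{units[idx]}: {buckets[exp]:6d}")
    return lines
```

For example, `print("\n".join(format_histogram(size_histogram("/mnt/storage"))))`, where `/mnt/storage` is an assumed mount point for the `storage` pool.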

sudo zdb -Lbbbs -U /data/zfs/zpool.cache storage

On top of that, I'm not happy with the power consumption, which is around 70-80W: not bad, but not great either.

I bought a Gigabyte MC12-LE0 cheaply, along with a Ryzen 4650G and 4x 32GB ECC RAM. In my test setup, this system idles at 30W (no VMs, no load) with similar hardware (3x NVMe + 4x HDD).

I suspect the Micron 7300 Pro drives are the culprits here (I have four of them, as I initially planned to run two mirrors and use the system more heavily for virtualization).
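The 70-80W vs. 30W difference can be turned into a yearly figure. A minimal sketch under stated assumptions: the electricity price of 0.30 EUR/kWh is assumed (plug in your own tariff), 75W is simply the midpoint of the measured range, and the 30W was measured idle without VMs, so the real-world gap will likely be smaller.

```python
HOURS_PER_YEAR = 24 * 365  # a 24/7 server runs 8760 h per year
ASSUMED_EUR_PER_KWH = 0.30  # assumption: replace with your actual tariff


def annual_kwh(watts):
    """Energy drawn per year by a constant load, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


def annual_savings(old_watts, new_watts, eur_per_kwh=ASSUMED_EUR_PER_KWH):
    """Yearly cost difference between two constant loads, in EUR."""
    return (annual_kwh(old_watts) - annual_kwh(new_watts)) * eur_per_kwh
```

With these inputs, `annual_savings(75, 30)` works out to 394.2 kWh per year, or roughly 118 EUR at the assumed tariff.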

So here is the idea:

Rebuild my 24/7 TNS server (after the release of Dragonfish):

- Gigabyte MC12-LE0

- 4650G

- 4x 32GB ECC RAM

- X710-DA2 NIC

- 4x16TB HDDs

- 256GB Intel 760p for OS

I will only run a few virtualization tasks:

- Proxmox Backup Server (PBS) as a VM

- optional: Jellyfin server (alternatively, I could run it on my Proxmox hypervisor and only access the data on this server via NFS)

Open questions:

- Continue to use a special vDev for metadata? If yes, I can get an Intel P1600X Optane 58GB for 45 Euro :) (more speed + lower power consumption)

- L2ARC? Gut feeling: with 128GB of RAM I should be fine without one

- SLOG: use another Intel P1600X Optane 58GB as SLOG?

- Will it be a problem if I have to set up the PBS VM (weekly automatic backups of my hypervisor VMs/LXCs) on my HDD storage pool? I think I can't install VMs on the special vDev or on the OS SSD. Correct?
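A quick sanity check for the two Optane options. This is a sketch under stated assumptions: the 20GB reported by `zpool list` is taken as GiB, the P1600X's 58GB as decimal bytes, a mirrored pair offers the capacity of one drive, and the SLOG figure uses the common rule of thumb of holding line-rate sync writes for about two transaction groups at the default `zfs_txg_timeout` of 5 seconds over the 10GbE X710-DA2. The function names are hypothetical.

```python
GIB = 2**30  # zpool list reports sizes in binary units (G = GiB)


def fill_fraction(used_gib, device_bytes):
    """Share of a device that `used_gib` GiB of data would occupy.

    For a mirrored pair, pass the size of a single drive.
    """
    return used_gib * GIB / device_bytes


def slog_estimate_bytes(link_gbit_per_s, txg_timeout_s=5, txgs=2):
    """Rule-of-thumb SLOG size: sync writes arriving at line rate,
    held for `txgs` transaction groups of `txg_timeout_s` seconds each.
    The txgs=2 default is an assumed safety factor, not an OpenZFS constant.
    """
    bytes_per_s = link_gbit_per_s * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_per_s * txg_timeout_s * txgs
```

`fill_fraction(20, 58e9)` is about 0.37, so today's metadata would fill roughly 37% of a mirrored P1600X pair (metadata grows with the pool, so leave headroom), and `slog_estimate_bytes(10)` gives 12.5e9 bytes, well below 58GB.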

My X470D4U will become a backup server with TNS:

- X470D4U

- 2700X

- 2x 16GB ECC RAM (or 4x 16GB)

- X520-DA2

- HP H220 HBA (SAS)

- 256GB SSD for OS

- 9x 4TB HDDs in RAIDZ1 (I bought some cheap 4TB SAS HDDs)

- leftover Chenbro rackmount chassis
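Whether the backup box can hold everything from the main pool comes down to simple arithmetic. A minimal sketch (hypothetical helper names) that compares nominal capacities only; it ignores RAIDZ padding, metadata and ZFS slop space, all of which reduce real usable space.

```python
def striped_mirror_tb(disks, disk_tb, width=2):
    """Nominal capacity of striped `width`-way mirrors: one disk per mirror holds data."""
    return disks // width * disk_tb


def raidz_tb(disks, disk_tb, parity=1):
    """Nominal data capacity of a single RAIDZ vdev (RAIDZ1 -> parity=1).

    Ignores RAIDZ padding, metadata and slop space, so real usable space is lower.
    """
    return (disks - parity) * disk_tb


def tb_to_tib(tb):
    """Convert vendor (decimal) TB to the binary TiB that ZFS tools display."""
    return tb * 1e12 / 2**40
```

`striped_mirror_tb(4, 16)` and `raidz_tb(9, 4)` both come out at 32TB (about 29.1TiB), so the backup pool's nominal capacity matches the main pool. Once RAIDZ overhead is counted, it will likely be slightly smaller, which matters only if the main pool ever gets close to full.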
