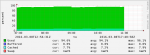

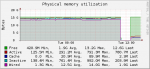

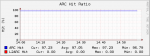

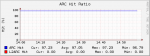

I'm having stability issues with FreeNAS-9.3-STABLE-201509022158; see the attached screenshots.

I suspect tank was the zpool that caused the kernel crash, as the other zpool should have been inactive.

This is a 2U Supermicro with 16 GB of RAM.

```
# zfs list tank
NAME   USED  AVAIL  REFER  MOUNTPOINT
tank   251G   290G  44.5K  /mnt/tank
```

```
# zpool status tank
  pool: tank
 state: ONLINE
status: The pool is formatted using a legacy on-disk format. The pool can
        still be used, but some features are unavailable.
action: Upgrade the pool using 'zpool upgrade'. Once this is done, the
        pool will no longer be accessible on software that does not support feature
        flags.
  scan: scrub repaired 0 in 1h3m with 0 errors on Sun Mar 6 01:03:08 2016
config:

        NAME                                            STATE     READ WRITE CKSUM
        tank                                            ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/5a0a0323-7921-6964-8e75-d40c5d01776a  ONLINE       0     0     0
            gptid/34ba81da-c021-5acf-da0a-b99b02b81b6d  ONLINE       0     0     0

errors: No known data errors
```

```
# zfs list unitrends
NAME        USED  AVAIL  REFER  MOUNTPOINT
unitrends  6.22T  1.68T  52.4K  /mnt/unitrends
# zfs get compression unitrends
NAME       PROPERTY     VALUE  SOURCE
unitrends  compression  off    default
# zfs get dedup unitrends
NAME       PROPERTY  VALUE  SOURCE
unitrends  dedup     off    default
```

```
# zpool status unitrends
  pool: unitrends
 state: ONLINE
status: One or more devices are configured to use a non-native block size.
        Expect reduced performance.
action: Replace affected devices with devices that support the
        configured block size, or migrate data to a properly configured
        pool.
  scan: scrub repaired 598K in 3h7m with 0 errors on Sun Mar 6 03:07:59 2016
config:

        NAME                                            STATE     READ WRITE CKSUM
        unitrends                                       ONLINE       0     0     0
          raidz1-0                                      ONLINE       0     0     0
            gptid/dcf88df4-59eb-b1c5-f893-cafbd1c7cad4  ONLINE       0     0     0
            gptid/b874ee52-e07c-8545-9ab4-9417e2e89593  ONLINE       0     0     0
            gptid/7964f343-cf69-0acb-d655-8c71bd326151  ONLINE       0     0     0
            gptid/c3e7c2fa-3d20-26c8-afd1-af0cc1486df7  ONLINE       0     0     0
          raidz1-1                                      ONLINE       0     0     0
            gptid/b03363ed-dc79-ab6f-d88b-f1414fd9fd3a  ONLINE       0     0     0
            gptid/f5c57bd1-3221-b9ee-ee9b-db74aef5b8c4  ONLINE       0     0     0
            gptid/0097375e-29e6-9de9-a6bf-d0cc9dc82701  ONLINE       0     0     0
            gptid/8354f83f-3444-7f49-e08c-a9f2db39b402  ONLINE       0     0     0
          raidz1-2                                      ONLINE       0     0     0
            gptid/c21e456e-e7d2-5d47-e4f5-8cf202781a30  ONLINE       0     0     0
            gptid/dda4e736-52e1-c267-f0e4-d97df6dff207  ONLINE       0     0     0
            gptid/69233fe6-4f27-e1ec-bf3a-b9bcdc829df5  ONLINE       0     0     0
            gptid/01dccfde-d2d5-03e1-b6cd-973255168e2b  ONLINE       0     0     0
          raidz1-3                                      ONLINE       0     0     0
            gptid/d58a3b0c-a848-ace0-af98-a082c98fe49b  ONLINE       0     0     0
            gptid/fed64fbe-6dfd-3bc6-b20d-cfc3f618e91f  ONLINE       0     0     0
            gptid/37e8f78b-f155-806b-f6cb-b8b785af9920  ONLINE       0     0     0
            gptid/2832fc4f-ddad-7649-b814-f80c8e1a7452  ONLINE       0     0     0
        logs
          gptid/8a62d106-7d45-03cd-dc93-ad039fc7dc09    ONLINE       0     0     0  block size: 512B configured, 4096B native
        cache
          gptid/c7857548-c70a-d141-c2de-9b91ff687fdf    ONLINE       0     0     0

errors: No known data errors
```
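For context on the non-native block size warning above: ZFS records a vdev's minimum allocation size as its ashift, the base-2 logarithm of the sector size. The log device line therefore means that device was added with ashift=9 (512 B) while it reports 4096 B native sectors (ashift=12). A minimal sketch of that conversion, assuming power-of-two sizes (the `ashift_for` helper is hypothetical, not a FreeNAS or ZFS command):

```shell
#!/bin/sh
# Hypothetical helper (not a FreeNAS or ZFS command): convert a sector size in
# bytes to the matching ZFS ashift, i.e. its base-2 logarithm.
# Assumes the input is a positive power of two.
ashift_for() {
  size=$1
  n=0
  while [ "$size" -gt 1 ]; do
    size=$((size / 2))
    n=$((n + 1))
  done
  echo "$n"
}

ashift_for 512   # size configured on the log device: prints 9
ashift_for 4096  # native sector size reported by the log device: prints 12
```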

```
# lspci
00:00.0 Host bridge: Intel Corporation Xeon E5/Core i7 DMI2 (rev 07)
00:01.0 PCI bridge: Intel Corporation Xeon E5/Core i7 IIO PCI Express Root Port 1a (rev 07)
00:02.0 PCI bridge: Intel Corporation Xeon E5/Core i7 IIO PCI Express Root Port 2a (rev 07)
00:02.2 PCI bridge: Intel Corporation Xeon E5/Core i7 IIO PCI Express Root Port 2c (rev 07)
00:03.0 PCI bridge: Intel Corporation Xeon E5/Core i7 IIO PCI Express Root Port 3a in PCI Express Mode (rev 07)
00:03.2 PCI bridge: Intel Corporation Xeon E5/Core i7 IIO PCI Express Root Port 3c (rev 07)
00:04.0 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 0 (rev 07)
00:04.1 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 1 (rev 07)
00:04.2 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 2 (rev 07)
00:04.3 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 3 (rev 07)
00:04.4 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 4 (rev 07)
00:04.5 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 5 (rev 07)
00:04.6 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 6 (rev 07)
00:04.7 System peripheral: Intel Corporation Xeon E5/Core i7 DMA Channel 7 (rev 07)
00:05.0 System peripheral: Intel Corporation Xeon E5/Core i7 Address Map, VTd_Misc, System Management (rev 07)
00:05.2 System peripheral: Intel Corporation Xeon E5/Core i7 Control Status and Global Errors (rev 07)
00:05.4 PIC: Intel Corporation Xeon E5/Core i7 I/O APIC (rev 07)
00:11.0 PCI bridge: Intel Corporation C600/X79 series chipset PCI Express Virtual Root Port (rev 06)
00:16.0 Communication controller: Intel Corporation C600/X79 series chipset MEI Controller #1 (rev 05)
00:16.1 Communication controller: Intel Corporation C600/X79 series chipset MEI Controller #2 (rev 05)
00:1a.0 USB controller: Intel Corporation C600/X79 series chipset USB2 Enhanced Host Controller #2 (rev 06)
00:1d.0 USB controller: Intel Corporation C600/X79 series chipset USB2 Enhanced Host Controller #1 (rev 06)
00:1e.0 PCI bridge: Intel Corporation 82801 PCI Bridge (rev a6)
00:1f.0 ISA bridge: Intel Corporation C600/X79 series chipset LPC Controller (rev 06)
00:1f.2 SATA controller: Intel Corporation C600/X79 series chipset 6-Port SATA AHCI Controller (rev 06)
00:1f.3 SMBus: Intel Corporation C600/X79 series chipset SMBus Host Controller (rev 06)
00:1f.6 Signal processing controller: Intel Corporation C600/X79 series chipset Thermal Management Controller (rev 06)
03:00.0 Serial Attached SCSI controller: LSI Logic / Symbios Logic SAS2308 PCI-Express Fusion-MPT SAS-2 (rev 05)
05:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
05:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
05:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
05:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
08:01.0 VGA compatible controller: Matrox Electronics Systems Ltd. MGA G200eW WPCM450 (rev 0a)
```
