cypher1024

I'm not really sure where this thread belongs. Mods - feel free to move it if there's a more appropriate section.

I've seen a few posts about the merits of different ZFS disk configurations. I thought I'd do some testing.

Test machine:

FreeNAS 8.0.1 Beta 4 AMD64

Gigabyte GA-MA78LM-S2 (4 onboard SATA ports)

AMD Phenom II x2 550

4GB of 1333MHz DDR3

Dell 0HN359 SAS card with 32-pin SAS -> 4 x SATA cable

4 x 500GB disks (3 x 7200rpm Seagates & 1 x 7200rpm WD)

4 x 1.5TB disks (3 x 7200rpm Seagates & 1 x 5400rpm Samsung)

I wish I had eight identical disks for testing, but I did not. Hopefully this didn't influence the results too much.

During testing, the 1.5TB drives were connected to the SAS card and the 500GB drives were connected to the motherboard. I did a few tests and determined that it didn't matter which drives were connected to which interface.

Commands used (except for the 4k tests):

Write: dd if=/dev/zero of=/mnt/tank/test.dat bs=2048k count=15k

Read: dd if=/mnt/tank/test.dat of=/dev/null bs=2048k count=15k
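For anyone checking the numbers, the size of the test file follows directly from the dd arguments. Here is a minimal sketch of the arithmetic, assuming dd's `k` suffix means 1024 and that "MB/sec" means 10^6 bytes per second (the post doesn't say which unit was used). The function names are made up for illustration and aren't part of dd or FreeNAS.

```python
def dd_total_bytes(bs_kib: int, count_k: int) -> int:
    """Total bytes dd transfers for bs=<bs_kib>k count=<count_k>k (k = 1024)."""
    return bs_kib * 1024 * count_k * 1024


def throughput_mb_per_sec(total_bytes: int, seconds: float) -> float:
    """Throughput in MB/sec, assuming 1 MB = 10**6 bytes (an assumption)."""
    return total_bytes / seconds / 1e6


# bs=2048k count=15k, as in the commands above
total = dd_total_bytes(2048, 15)
print(total)          # 32212254720 bytes
print(total / 2**30)  # 30.0 GiB
```

So each run writes or reads 30 GiB, several times the 4GB of RAM in the test machine, which should limit how much caching can flatter the read numbers.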

Prefetch was disabled by FreeNAS because I only had 4GB of RAM.

Obviously these tests aren't indicative of all possible FreeNAS applications. They only simulate a single user reading and writing large files.

RESULTS:

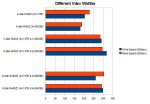

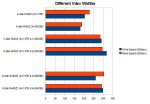

Different vdev widths:

What's interesting to note here are the 2^n+p configurations, i.e. a power-of-two number of data disks plus parity (5-disk RAIDZ & 6-disk RAIDZ2). I've read several times that these configurations are supposed to be superior, but that doesn't seem to be the case in my testing. I tried to give them the best chance by removing the slower 500GB drives rather than the 1.5TB ones. I realise that the scope of these tests is very limited, and there may be situations where these setups are better.
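To make the rule of thumb concrete, here is a small sketch that checks whether a vdev width leaves a power-of-two number of data disks once parity is subtracted. The function name is hypothetical, and the widths in the loop are only illustrations of the rule, not a list of what was benchmarked.

```python
def is_pow2_plus_parity(width: int, parity: int) -> bool:
    """True if (width - parity) data disks is a power of two."""
    data = width - parity
    # A positive integer is a power of two if it has exactly one bit set.
    return data > 0 and (data & (data - 1)) == 0


# RAIDZ uses 1 parity disk per vdev, RAIDZ2 uses 2.
examples = [
    ("4-disk RAIDZ", 4, 1),
    ("5-disk RAIDZ", 5, 1),
    ("6-disk RAIDZ2", 6, 2),
]
for name, width, parity in examples:
    print(name, is_pow2_plus_parity(width, parity))
```

The 5-disk RAIDZ and 6-disk RAIDZ2 both come out as 4 data disks plus parity, which is why they count as the "ideal" widths under that rule.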

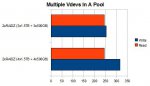

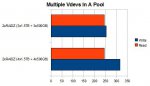

Multiple vdevs in a pool:

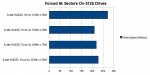

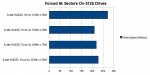

'Force 4k sectors' checkbox checked using 512-byte sector drives:

I probably should've made it clearer, but the top row is the 'control' (i.e. the 'Force 4k sectors' box wasn't checked for that test).

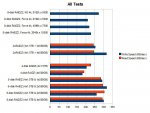

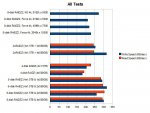

All results in one table:

I also tried an 8-disk stripe, but I didn't include it in the tables. I could write to it at about 450MB/sec, but strangely I could only read from it at about 290MB/sec.
