winnielinnie


This is a continuation and update of this thread: ZFS "ARC" doesn't seem that smart... (www.truenas.com)

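Looks like OpenZFS 2.2+ introduces cleaned-up and rewritten code¹ that handles ARC data/metadata eviction from RAM more intelligently and gracefully. It's such a major change to the code that it will not be backported to version 2.1.10.

We'll also be able to use a new tunable parameter² named `zfs_arc_meta_balance`, which defaults to a value of 500. Any value over 100 prioritizes metadata, and the higher you set this value, the lower the pressure for metadata eviction. (Rest assured, it can never be set to a value that will completely prevent metadata eviction.)

In other words, we should see less abrupt metadata eviction as the ARC "adapts" to your workflow, and there's always the option to tweak this value higher or lower to guide the ARC's logic toward your general needs.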
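A minimal sketch of checking and changing the value at runtime, assuming a Linux-based system (such as TrueNAS SCALE) running OpenZFS 2.2+, where ZFS module parameters are exposed under `/sys/module/zfs/parameters/`. The helper names (`read_meta_balance`, `write_meta_balance`, `describe`) are hypothetical. Writing requires root, and a value set this way does not survive a reboot. FreeBSD-based systems expose tunables through `sysctl` instead, which this sketch does not cover.

```python
from pathlib import Path

# Assumption: Linux host with OpenZFS 2.2+, which exposes module
# parameters as files under /sys/module/zfs/parameters/.
DEFAULT_PARAM_PATH = Path("/sys/module/zfs/parameters/zfs_arc_meta_balance")


def read_meta_balance(path: Path = DEFAULT_PARAM_PATH) -> int:
    """Return the current zfs_arc_meta_balance value as an integer."""
    return int(path.read_text().strip())


def write_meta_balance(value: int, path: Path = DEFAULT_PARAM_PATH) -> None:
    """Set zfs_arc_meta_balance at runtime (needs root; lost on reboot).

    Rejecting non-integers, zero and negative values is this sketch's
    own safety check, not a documented ZFS rule.
    """
    if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
        raise ValueError(f"expected a positive integer, got {value!r}")
    path.write_text(f"{value}\n")


def describe(value: int) -> str:
    """Say which side of the 100 midpoint a value falls on."""
    if value > 100:
        return "favors metadata"
    if value < 100:
        return "favors data"
    return "neutral"


# Only touch the real parameter if this machine actually exposes it.
if __name__ == "__main__" and DEFAULT_PARAM_PATH.exists():
    current = read_meta_balance()
    print(f"zfs_arc_meta_balance = {current} ({describe(current)})")
```

Taking the path as an argument keeps the helpers testable against an ordinary file; on a real system you would call `write_meta_balance(1000)` as root to lean further toward metadata.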

This is a feature I'm happily looking forward to!

If it works as intended, not only will it address a longstanding issue with aggressive metadata eviction, but it will also keep things simpler and cleaner, with less tweaking involved. The ARC will live up to its namesake of being truly "adaptive".

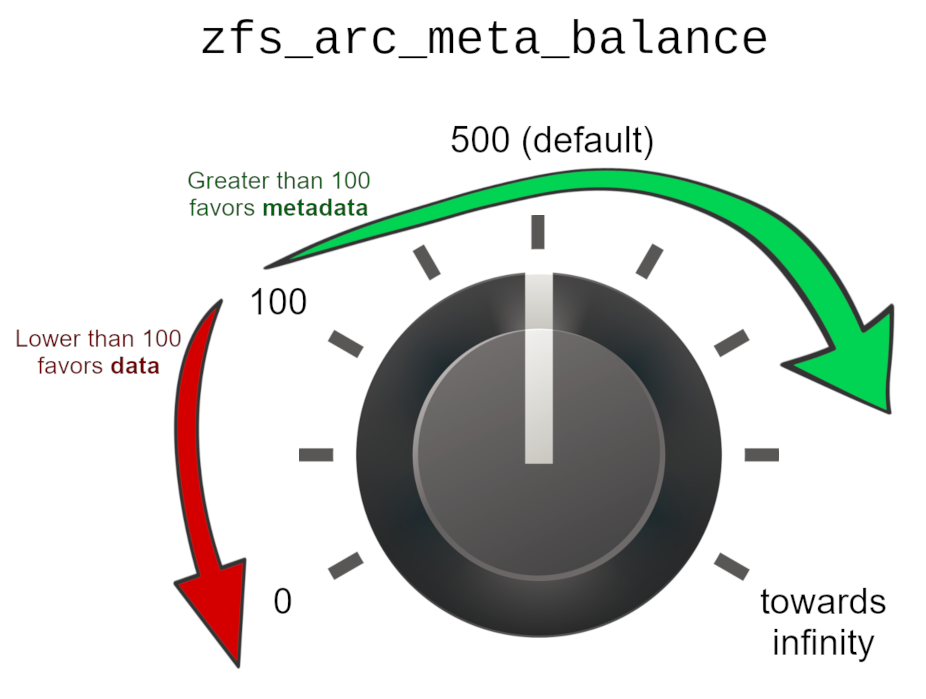

Here is a graphical representation of how to use this new parameter, as described by its developers:

Because it defaults to 500, ZFS 2.2.x prioritizes metadata in the ARC without any adjustment or tweaking. The idea is that you can "dial it lower or higher" depending on your needs. (Any value below 100 favors data over metadata.)
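To make the "over/under 100" rule concrete, here is a deliberately simplified toy model, not the actual `arc.c` logic from the PR. It assumes the ARC nudges its metadata share up on metadata "ghost hits" (reads of recently evicted metadata) and down on data ghost hits, and that `zfs_arc_meta_balance` scales the weight of data ghost hits by 100/balance. The function name `adjust_meta_share` and the 1%/99% clamp are illustrative assumptions.

```python
def adjust_meta_share(share: float, meta_ghost_hits: int, data_ghost_hits: int,
                      ghost_size: int, balance: int = 500) -> float:
    """Toy model (hypothetical): return the ARC's new target share for metadata.

    share           -- current fraction of the ARC target reserved for metadata
    meta_ghost_hits -- hits on recently evicted metadata (argues for more metadata)
    data_ghost_hits -- hits on recently evicted data (argues for more data)
    ghost_size      -- size of the ghost lists, used to normalize the hit counts
    balance         -- stand-in for zfs_arc_meta_balance (default 500)
    """
    if balance <= 0:
        raise ValueError("balance must be a positive integer")
    if ghost_size <= 0:
        return share  # no ghost history yet, so nothing to adapt to
    up = meta_ghost_hits / ghost_size
    # Assumed effect of the tunable: data ghost hits count 100/balance as much,
    # so balance=500 makes them count one fifth as much as metadata ghost hits.
    down = data_ghost_hits * 100 / (balance * ghost_size)
    # Illustrative clamp: in this model neither side is ever squeezed out entirely.
    return min(max(share + up - down, 0.01), 0.99)


# Same workload (equal ghost hits on both sides) under three settings.
for balance in (50, 100, 500):
    print(balance, round(adjust_meta_share(0.5, 10, 10, 100, balance), 2))
```

With equal ghost hits, a setting of 50 shrinks the metadata share, 100 leaves it unchanged, and the default 500 grows it, which mirrors the "above 100 favors metadata, below 100 favors data" rule of thumb.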

[1] https://github.com/openzfs/zfs/pull/14359

[2] https://openzfs.github.io/openzfs-docs/man/4/zfs.4.html#zfs_arc_meta_balance
