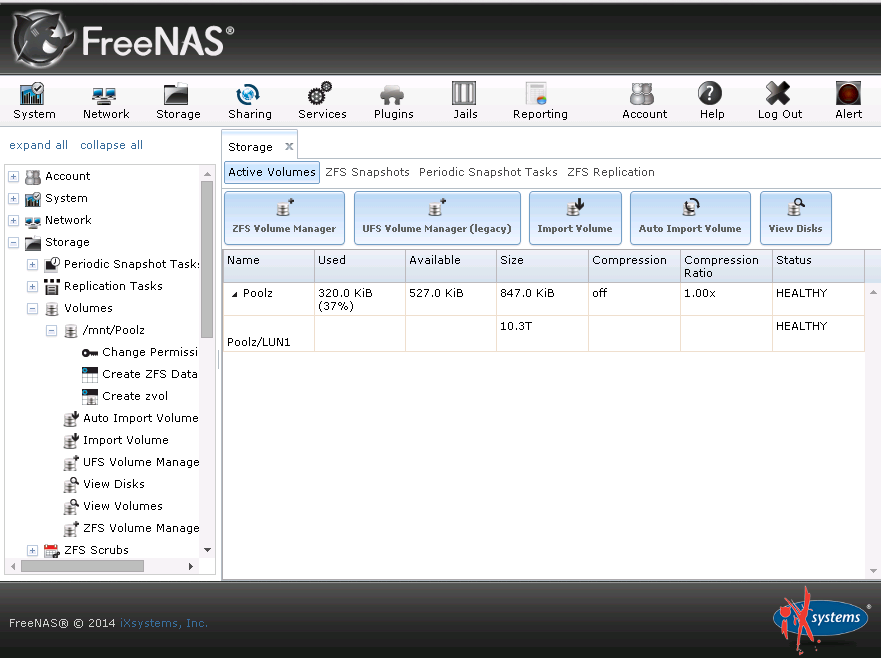

I am running FreeNAS 9.2.1.8 Release x64 with 6 x 3TB drives in a raidz2 pool. I set up an iSCSI target that allocates all but 800k of the ZFS pool, and this configuration has been running fine for about a year. Now I am getting alerts that my pool is at 98% capacity. The pool is 10.3TB, and only ~5TB of it is in use by a Windows machine over iSCSI.

At this point I cannot write anything to the drive from Windows because of an I/O error, and the FreeNAS box is incredibly slow. It is also running a scrub at the moment. When I try to stop the scrub, it says: `cannot cancel scrubbing Poolz: out of space`
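For reference, a rough sketch of where the pool size comes from. It assumes the drives are sized in decimal terabytes (as on drive labels) and ignores ZFS metadata, raidz padding and reserved space, so it only approximates the 10.3TB figure FreeNAS reports: a six-disk raidz2 vdev spends two disks' worth of space on parity, leaving four disks for data.

```python
# Rough raidz2 capacity arithmetic for the pool described above.
# Assumptions (not reported by FreeNAS): drives use decimal terabytes,
# and ZFS metadata, raidz padding and reserved space are ignored.

TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, closer to what ZFS tools display


def raidz_usable_bytes(disks: int, disk_bytes: int, parity: int) -> int:
    """Raw data capacity of a single raidz vdev; parity disks hold no data."""
    if disks <= parity:
        raise ValueError("a raidz vdev needs more disks than parity devices")
    return (disks - parity) * disk_bytes


usable = raidz_usable_bytes(disks=6, disk_bytes=3 * TB, parity=2)
print(f"raidz2, 6 x 3TB: {usable / TB:.1f} TB = {usable / TIB:.2f} TiB")
```

This prints `raidz2, 6 x 3TB: 12.0 TB = 10.91 TiB`; the remaining gap to 10.3TB would be the overhead the sketch leaves out.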

My questions are:

1. I have 10.3TB allocated to that iSCSI target. Can I use all of it, or am I out of space at the halfway point?

2. Was I supposed to leave some space in the pool for FreeNAS?

3. (This is a broad one.) What steps do I need to take to get this running again?