draand28

Cadet · Joined Feb 10, 2022 · Messages: 3

Hello!

I'm a TrueNAS newbie and just got my first machine up and running a few days ago.

I'm having write issues over SMB on my new NAS. Whenever I write files larger than 1GB, the write speed drops to 0MB/s for minutes, recovers to 5-20MB/s for a few seconds, and then drops back to 0 for several more minutes. This loop repeats constantly, and it bothers me because copying 900GB to the NAS takes about 30 hours.
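For reference, the average rate implied by those numbers (900GB in about 30 hours) can be worked out directly. This is only a small arithmetic sketch; the function name is made up for illustration, and it assumes decimal units (1GB = 1000MB).

```python
def average_throughput_mb_s(total_gb: float, hours: float) -> float:
    """Average throughput in MB/s, assuming decimal units (1 GB = 1000 MB)."""
    return total_gb * 1000 / (hours * 3600)


# Figures taken from the post: 900 GB copied in roughly 30 hours.
avg = average_throughput_mb_s(900, 30)
print(f"{avg:.1f} MB/s")
```

That average sits inside the 5-20MB/s bursts described above, which fits a transfer that spends most of its time stalled at 0.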

At first I had only 4GB of RAM, so I thought this would be normal, especially with DM-SMR drives, LZ4, and dedup, all on Z1 (basically the worst-case scenario). So I upgraded to 20GB, but the RAM isn't even being used, and neither is the CPU. I am aware that dedup needs about 5GB of RAM per TB, but to be honest, I don't think that is the problem here.
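To put the "about 5GB of RAM per TB" rule of thumb next to this pool, here is a rough estimate. It is a sketch under stated assumptions: the 5GB/TB figure is the one quoted in the post (the real dedup table size depends on block size and how many unique blocks exist), and the 12TB usable figure simply assumes three data drives' worth of space in a 4x4TB RAIDZ1, ignoring ZFS overhead.

```python
def dedup_ram_gb(data_tb: float, gb_per_tb: float = 5.0) -> float:
    """RAM estimate for dedup using the rule of thumb from the post (5 GB per TB)."""
    return data_tb * gb_per_tb


# Assumption: 4x4TB in RAIDZ1 leaves about (4 - 1) * 4 = 12 TB for data,
# before any ZFS overhead is subtracted.
usable_tb = (4 - 1) * 4

print(f"900 GB of data:    ~{dedup_ram_gb(0.9):.1f} GB RAM")
print(f"Full {usable_tb} TB pool: ~{dedup_ram_gb(usable_tb):.0f} GB RAM")
```

By this estimate the 900GB copy alone would want around 4.5GB for dedup, which is already more than the original 4GB of RAM, and a full pool would want far more than 20GB.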

I read some topics here for a while, but other than the RAM, I can't put my finger on what the issue could be.

On the other hand, reads work great, fully saturating the 1Gbps link (soon to be 10Gbps, as the NAS is my only machine without 10Gbps).

In the end I don't really mind if it's that slow, as I'm using it for archiving purposes, but of course I would be more than happy to get some decent write speeds. I really wanted to use dedup because my use case includes a lot of randomly distributed duplicates, and I save quite a lot of space by using it.

I tried without dedup, with only LZ4 compression, and it works great: always 113MB/s (basically 1Gbps).

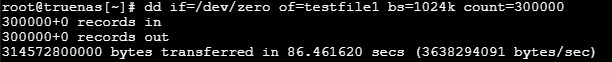

I also tried to measure the actual speed of the array with dedup+LZ4, with LZ4 only, and without compression, but it is always the same, 3.6GB/s (I'm probably using dd wrong).
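The dd command isn't shown, so the cause of the identical 3.6GB/s result is only a guess: a test fed from a highly compressible source, or one that finishes in RAM cache before the data reaches the disks, would look the same in all three configurations. A minimal sketch of an alternative test follows, assuming Python is available on the NAS. It writes fresh random data (so neither compression nor dedup can shrink it) and calls fsync before stopping the timer. The function name and the dataset path in the comment are hypothetical.

```python
import os
import time


def timed_random_write(path: str, total_bytes: int, block_size: int = 1 << 20) -> float:
    """Write total_bytes of random data to path and return the rate in MB/s.

    Each block comes from os.urandom, so blocks are incompressible and not
    duplicates of each other. The time spent generating random data is
    included in the measurement, which slightly understates disk speed.
    """
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            chunk = os.urandom(min(block_size, total_bytes - written))
            f.write(chunk)
            written += len(chunk)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data to stable storage
    elapsed = time.perf_counter() - start
    return written / 1e6 / elapsed


# Example call (hypothetical dataset path, 4 GB test file):
# print(f"{timed_random_write('/mnt/tank/test/random.bin', 4 * 10**9):.1f} MB/s")
```

The test file should be larger than what the write cache can absorb, and it should be deleted afterwards so it doesn't take up pool space.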

Specs:

RAIDZ1 with 4x4TB DM-SMR drives (probably part of the issue) with LZ4 compression and deduplication (this kind of is the issue)

120GB 2.5-inch Hitachi HDD for boot (I really didn't have any spare drives left; I will upgrade this to a SATA SSD when I have the money). On that note, I noticed that while writing to the NAS, when the speed drops to 0, the built-in WebGUI Shell and Reporting graphs don't load at all. Might that have something to do with the boot drive?

4+16GB DDR4 2400MHz (I know it's not really dual channel, but at the moment I am very budget limited).

Intel Pentium G4400

No HBA, just using the built-in motherboard SATA ports (some ASRock H110M board).
