robbiecarman

Cadet

- Joined

- Aug 8, 2021

- Messages

- 2

I'm trying to figure out whether I have a write bottleneck in a new build or whether what I'm seeing is expected. The server is for video editing/color work in my home suite (3-4 seats).

Server Build

Motherboard - ASUS x99 Sage WS (had this left over from an older workstation)

RAM - 128GB

CPU - Xeon E5 2697 v3 2.6GHz 14 Core

HBA - LSI 9305 16i (running in IT Mode, flashed to newest firmware/bios)

NIC - Intel X710 4 port RJ45 10GbE (MTU 9000 set for each port)

L2ARC - XPG 2TB NVMe located in Sabrent PCIe adapter (in a x16 slot)

System - XPG 256GB NVMe located on motherboard (x4)

Drives - 12x 10TB Western Digital Gold (SATA) Datacenter drives

Rosewill 4u Case

Running TrueNAS 12.0-U5

Pool Setup

1 Pool with 2x 6-drive RAIDZ2 vdevs

NVMe L2ARC

Compression disabled

Record Size 1MB

SMB Dataset also with 1MB Record size (changed to this size anticipating larger video files)

Sharing Via SMB
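For reference, here is a rough sketch of the arithmetic behind this layout. It assumes nominal RAIDZ behavior only (no allocation padding, metadata, or free-space reserve), and the function name `raidz_layout` is just something made up for illustration, not a TrueNAS or ZFS tool.

```python
def raidz_layout(vdevs, disks_per_vdev, parity, disk_tb, recordsize_kib):
    """Nominal figures for a pool of identical RAIDZ vdevs.

    Assumption: ignores padding, metadata, and reserved space, so real
    usable capacity will be lower than the number reported here.
    """
    data_per_vdev = disks_per_vdev - parity
    return {
        "data_disks": vdevs * data_per_vdev,
        "parity_disks": vdevs * parity,
        "raw_tb": vdevs * disks_per_vdev * disk_tb,
        "nominal_usable_tb": vdevs * data_per_vdev * disk_tb,
        # A full record is striped across the data disks of ONE vdev.
        "kib_per_data_disk_per_record": recordsize_kib / data_per_vdev,
    }


# The pool described above: 2 vdevs, 6 drives each, RAIDZ2, 10TB drives, 1MB records.
layout = raidz_layout(vdevs=2, disks_per_vdev=6, parity=2, disk_tb=10, recordsize_kib=1024)
for key, value in layout.items():
    print(f"{key}: {value}")
```

So under these assumptions, 8 of the 12 drives carry data, and each 1MB record lands as roughly 256 KiB per data disk within a single vdev.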

Test Client & Other

Right now the server is on my kitchen table, and I'm testing with a Dell Precision 5750 and a Promise San Link 3 (Thunderbolt 3 adapter) connected to a single port (not bonded) on the Intel NIC. The San Link also has MTU set to 9000, and I've tuned as much as I know how (buffers, RSS queues, etc.).

I've also enabled autotune on the server.

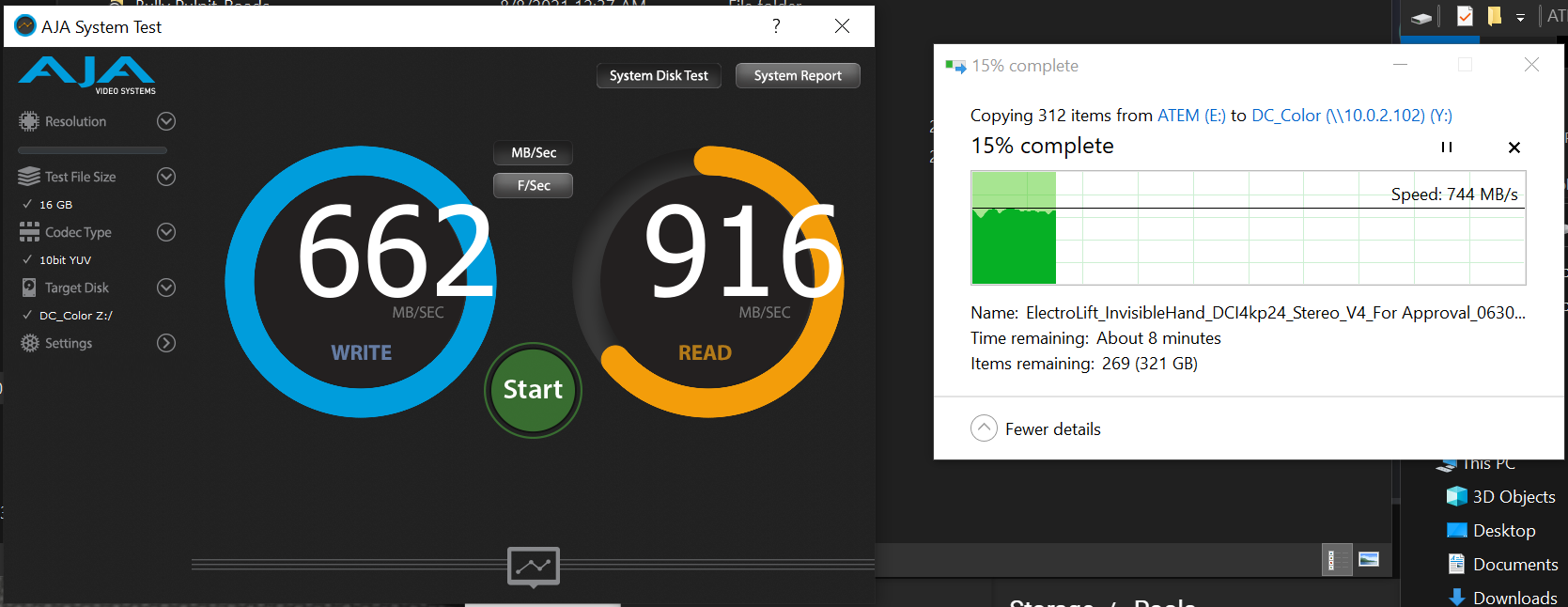

This setup is working pretty well. Using a range of benchmarks (BMD, AJA, CrystalDisk, etc.), I'm seeing reads in the low 900 MB/s range, but my writes are averaging 'only' in the mid 600s.

When moving an actual large data set to the server (about 480GB from an internal NVMe on the Dell), I'm getting writes in the mid 700s (Windows copy dialog).

Here's a screenshot of the AJA test, where you can see mid 600s vs. mid 700s in an actual file copy:

Copying back from the server, things correlate better with the benchmarks: I'm getting mid 800s to mid 900s.

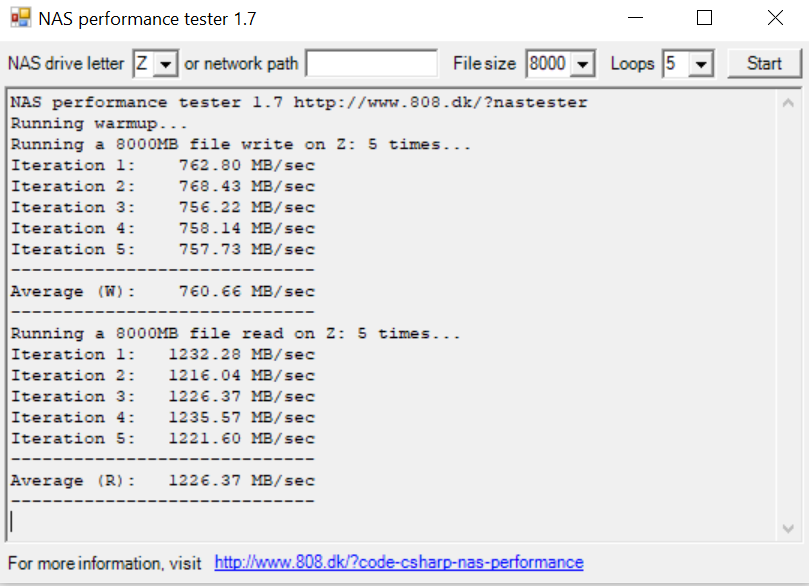

I did find another benchmark called Nas Test that seems to match my actual copy speeds, but I don't think I can really trust its reads (on an 800MB file), as 1200 MB/s seems unlikely?
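As a sanity check on that 1200 MB/s figure, here is a minimal sketch of the theoretical TCP payload ceiling for a single 10GbE link. It assumes IPv4 and TCP headers without options (40 bytes) and standard Ethernet framing overhead (38 bytes per frame), and it ignores SMB protocol overhead entirely, so real-world numbers should land below it. The function name is hypothetical.

```python
def tcp_payload_ceiling_mb_s(link_gbit=10.0, mtu=9000):
    """Upper bound on TCP payload throughput in MB/s (decimal megabytes).

    Assumptions: IPv4 (20 B) + TCP (20 B) headers with no options, and
    per-frame Ethernet overhead of preamble (8) + header (14) + FCS (4)
    + interframe gap (12) = 38 bytes. SMB overhead is not modeled.
    """
    ip_tcp_headers = 40
    eth_overhead = 38
    payload_bytes = mtu - ip_tcp_headers
    wire_bytes = mtu + eth_overhead
    raw_mb_s = link_gbit * 1e9 / 8 / 1e6  # 10 Gbit/s -> 1250 MB/s
    return raw_mb_s * payload_bytes / wire_bytes


jumbo = tcp_payload_ceiling_mb_s(mtu=9000)
standard = tcp_payload_ceiling_mb_s(mtu=1500)
print(f"MTU 9000: about {jumbo:.0f} MB/s, MTU 1500: about {standard:.0f} MB/s")
```

Under these assumptions the ceiling with jumbo frames sits a little under 1250 MB/s, so a 1200 MB/s read is not physically impossible on the wire. With a file as small as 800MB it may be coming from the server's RAM cache rather than the disks, but that is a guess, not something I've measured.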

So, here's my question: is the difference between read and write (150-200 MB/s) normal for the setup I've described? I was expecting them to be closer, or if anything the writes to be a little ahead.

I've tried building a pool with 3 vdevs and another using 2x Z1 instead of Z2, and that didn't really seem to make any difference; the numbers were about the same.

Before I put this setup in the rack, is there anything I should try with my pool, tuning, etc.?

To be clear, I'm very happy with read performance, which matters more for my work. I'm OK with the writes; they just seem 'slow' comparatively, and I'm curious whether I can get them better.

Thanks for any insight/advice!

P.S. Ultimately this server will go in my rack, and I plan to do a 4-port bond back to my Ubiquiti 10GbE switch (XG 24). All my clients to this server will also have 10G connections.
