Yes, I am eyeing a Supermicro all-NVMe chassis for my next build. They have one model like this now, but I will wait a bit to see used ones on the second-hand market. What I am not sure about is this: if I have an 8-wide RAIDZ2 and one disk fails, is the time to rebuild equivalent to the time it takes to read from the remaining 7 disks in parallel, calculate the missing bytes and write them to the new disk? That would mean resilvering time does not increase with a wider vdev, assuming the HBA has sufficient bandwidth (e.g. not more than 8 disks on an 8-lane HBA), but I keep reading that wider vdevs have longer rebuild times.

Resilver time will depend on the speed at which the new disk can write data. The kind of HBA is not relevant unless you actually have 12Gb SAS SSDs, because even SAS spinning disks that are rated for a 12Gb interface are not going to be fast enough to matter. What kind of drive are you going to use?
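The reasoning in this exchange can be put into a rough back-of-the-envelope model. The sketch below is only an illustration under simplifying assumptions that are not from the thread: the resilver is treated as one sequential pass, the pool is otherwise idle, and the HBA bandwidth is split evenly across all attached disks. The function name and every number in it are hypothetical. Real resilvers are also affected by fragmentation and concurrent load, so treat the result as a lower bound at best.

```python
def estimate_resilver_hours(used_bytes_per_disk, new_disk_write_mb_s,
                            survivor_read_mb_s, n_survivors, hba_mb_s):
    """Rough lower bound on the time to rebuild one failed disk."""
    # Survivors are read in parallel, so the per-disk read rate (not the
    # sum of all reads) has to keep pace with the writes to the new disk.
    # The HBA is shared by every survivor plus the replacement disk.
    hba_share_mb_s = hba_mb_s / (n_survivors + 1)
    bottleneck_mb_s = min(new_disk_write_mb_s, survivor_read_mb_s, hba_share_mb_s)
    seconds = used_bytes_per_disk / (bottleneck_mb_s * 1_000_000)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical SSDs: 1 TB used per disk, 500 MB/s write, 550 MB/s read,
    # behind an HBA assumed to move 8000 MB/s in total.
    for width in (8, 12):
        hours = estimate_resilver_hours(1e12, 500, 550, width - 1, 8000)
        print(f"{width}-wide vdev: about {hours:.2f} h")
```

In this simplified model the estimate only grows with vdev width once the per-disk HBA share drops below the new disk's write speed, which is the condition the question above hedges on.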

Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

What is needed to achieve 10Gbps read with RAIDZ-2 (raid6)

- Thread starter titusc

- Start date

Johnnie Black

Guru

- Joined

- May 10, 2017

- Messages

- 838

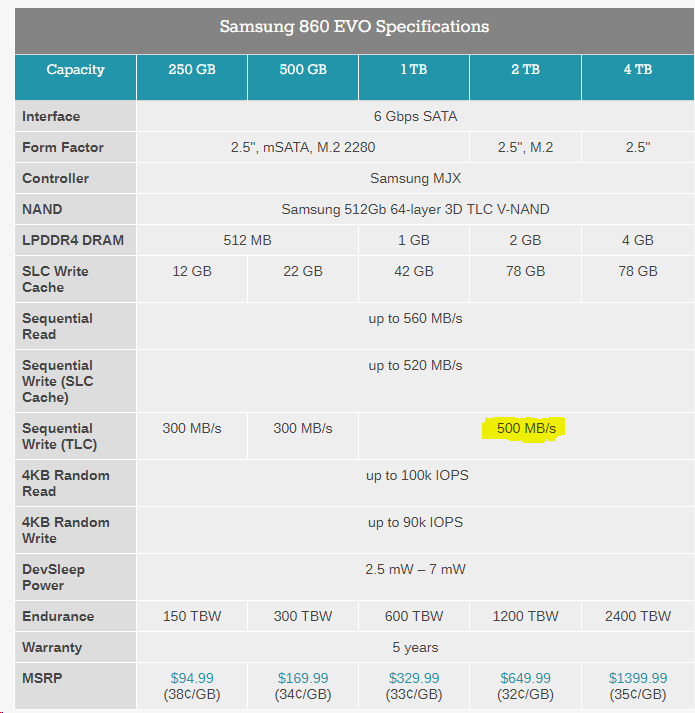

I thought QVO and EVO have similar read and write speeds and differ mostly on endurance.

No, completely different. The QVO uses QLC flash and can only sustain 500MB/s+ while writing into its small SLC cache, which varies from 6 GB when the drive is relatively full up to 42 GB when it is not. After that you get 80MB/s.
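To see what those figures mean for a long write, here is a minimal sketch that uses only the numbers quoted above (500MB/s inside the SLC cache, 80MB/s after it, cache sizes of 6 GB and 42 GB). It assumes the cache does not drain or refill during the write, which is a simplification, and the function name is made up for the illustration.

```python
def write_time_seconds(total_gb, cache_gb, cache_mb_s=500, post_cache_mb_s=80):
    """Time to write total_gb when only the first cache_gb go at cache speed."""
    cached_gb = min(total_gb, cache_gb)
    uncached_gb = total_gb - cached_gb
    return cached_gb * 1000 / cache_mb_s + uncached_gb * 1000 / post_cache_mb_s


if __name__ == "__main__":
    total_gb = 100  # hypothetical size of one sustained write
    for cache_gb in (42, 6):
        seconds = write_time_seconds(total_gb, cache_gb)
        average = total_gb * 1000 / seconds
        print(f"{cache_gb} GB cache: {seconds:.0f} s, average {average:.0f} MB/s")
```

Once the write is several times larger than the cache, the average rate ends up much closer to the post-cache figure than to the headline one.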

Johnnie Black

Guru

- Joined

- May 10, 2017

- Messages

- 838

And for comparison, the 860 EVO:

- Joined

- May 19, 2017

- Messages

- 1,829

Does this matter if I use a 500GB NVMe SLOG? I thought QVO and EVO have similar read and write speeds and differ mostly on endurance.

I'd refer to the SLOG thread. There is a lot of actual data in there. NVMe isn't the sole answer. For example, there are multiple flavours of Optane out there, ranging from $$$ PCIe data center solutions down to consumer-grade 16GB NVMe solutions. But they are not created equal, and the tests in the thread show it. Run a test or two with your SLOG candidates and compare the results to other candidates like the P4801X running in a PCIe 3.0 x4 slot. Remember, the SLOG doesn't have to be huge, but it should feature fast writes.

FWIW, the 100GB P4801X claims 1,000MB/s sequential writes, 2,200MB/s seq. reads, 250k IOPS for writes, 550k IOPS for reads.
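Since the thread is about reaching 10Gbps, it can help to put that claimed write figure next to the line rate of the link. The sketch below is simple unit arithmetic: it ignores protocol overhead, assumes decimal megabytes, and only matters for synchronous writes, since those are the ones that pass through a SLOG. The helper names are made up for the illustration.

```python
def link_rate_mb_s(gbit_s):
    """Raw line rate of a network link in decimal MB/s (8 bits per byte)."""
    return gbit_s * 1000 / 8


def slog_headroom(slog_write_mb_s, gbit_s=10):
    """Fraction of the link's raw rate that the SLOG's write speed covers."""
    return slog_write_mb_s / link_rate_mb_s(gbit_s)


if __name__ == "__main__":
    claimed_write_mb_s = 1000  # the claimed sequential write figure quoted above
    print(f"10 Gb/s link: {link_rate_mb_s(10):.0f} MB/s raw")
    print(f"SLOG covers {slog_headroom(claimed_write_mb_s):.0%} of that")
```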

Holy crap, thanks for pointing this out.

Okay, I read through 7 pages of that thread just now on my phone. Good read, but I don't think I can compare properly on my phone. It appears the Optane 900p is one of the best overall, if not the best? I can buy a 900p 280GB for $223. I wonder how a proper NVMe disk fares. None of the benchmarks I saw up to page 7 appear to include NVMe, though I might have missed it. Are you saying the P4801X is better than the 900p?

- Joined

- May 19, 2017

- Messages

- 1,829

If M.2 is what you’re after, the P4801X is the way to go, but only if you have an x4 slot for it. See the second-to-last page.

I just re-read the thread on a PC and, unless I'm missing something, the 900P is the one to go for. I have copied some of the benchmarks from that thread here. The following links provide more comparisons as well.

https://www.servethehome.com/exploring-best-zfs-zil-slog-ssd-intel-optane-nand/

https://www.servethehome.com/buyers...as-servers/top-picks-freenas-zil-slog-drives/

I have obtained a dual 10Gbps optional LOM card, so in the only 2 PCIe slots I can fit the following.

- LSI HBA

- 900P Optane SLOG

DC P4802x 200GB

Code:

Synchronous random writes:

0.5 kbytes: 24.8 usec/IO = 19.7 Mbytes/s

1 kbytes: 25.0 usec/IO = 39.1 Mbytes/s

2 kbytes: 25.3 usec/IO = 77.2 Mbytes/s

4 kbytes: 22.9 usec/IO = 170.5 Mbytes/s

8 kbytes: 25.1 usec/IO = 310.8 Mbytes/s

16 kbytes: 29.8 usec/IO = 523.5 Mbytes/s

32 kbytes: 41.0 usec/IO = 762.9 Mbytes/s

64 kbytes: 60.3 usec/IO = 1037.3 Mbytes/s

128 kbytes: 96.6 usec/IO = 1293.5 Mbytes/s

256 kbytes: 162.0 usec/IO = 1543.1 Mbytes/s

512 kbytes: 291.4 usec/IO = 1715.6 Mbytes/s

1024 kbytes: 551.4 usec/IO = 1813.6 Mbytes/s

2048 kbytes: 1073.1 usec/IO = 1863.8 Mbytes/s

4096 kbytes: 2109.1 usec/IO = 1896.5 Mbytes/s

8192 kbytes: 4183.8 usec/IO = 1912.1 Mbytes/s

DC P4801x 100GB

Code:

Synchronous random writes:

0.5 kbytes: 28.0 usec/IO = 17.4 Mbytes/s

1 kbytes: 27.0 usec/IO = 36.1 Mbytes/s

2 kbytes: 27.5 usec/IO = 71.1 Mbytes/s

4 kbytes: 23.4 usec/IO = 167.1 Mbytes/s

8 kbytes: 30.4 usec/IO = 257.0 Mbytes/s

16 kbytes: 44.1 usec/IO = 354.3 Mbytes/s

32 kbytes: 64.6 usec/IO = 483.5 Mbytes/s

64 kbytes: 103.7 usec/IO = 602.7 Mbytes/s

128 kbytes: 161.1 usec/IO = 776.1 Mbytes/s

256 kbytes: 285.8 usec/IO = 874.6 Mbytes/s

512 kbytes: 527.5 usec/IO = 947.9 Mbytes/s

1024 kbytes: 988.2 usec/IO = 1012.0 Mbytes/s

2048 kbytes: 1905.6 usec/IO = 1049.5 Mbytes/s

4096 kbytes: 3730.2 usec/IO = 1072.3 Mbytes/s

8192 kbytes: 7398.6 usec/IO = 1081.3 Mbytes/s

900p 280GB

Code:

Synchronous random writes:

0.5 kbytes: 14.7 usec/IO = 33.2 Mbytes/s

1 kbytes: 14.9 usec/IO = 65.6 Mbytes/s

2 kbytes: 15.1 usec/IO = 129.1 Mbytes/s

4 kbytes: 12.6 usec/IO = 309.4 Mbytes/s

8 kbytes: 14.2 usec/IO = 548.7 Mbytes/s

16 kbytes: 18.6 usec/IO = 840.5 Mbytes/s

32 kbytes: 26.2 usec/IO = 1191.1 Mbytes/s

64 kbytes: 41.2 usec/IO = 1515.3 Mbytes/s

128 kbytes: 72.1 usec/IO = 1732.7 Mbytes/s

256 kbytes: 132.9 usec/IO = 1880.7 Mbytes/s

512 kbytes: 259.3 usec/IO = 1928.2 Mbytes/s

1024 kbytes: 522.7 usec/IO = 1913.1 Mbytes/s

2048 kbytes: 1029.5 usec/IO = 1942.8 Mbytes/s

4096 kbytes: 2043.6 usec/IO = 1957.3 Mbytes/s

8192 kbytes: 4062.0 usec/IO = 1969.5 Mbytes/s
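The two columns in these listings are tied together: with one write outstanding at a time, throughput is just block size divided by latency. The sketch below recomputes a few rows copied from the listings above and suggests that "Mbytes" here means 2^20 bytes. That reading is an inference from the arithmetic, not something stated in the thread, and the small differences from the reported values are consistent with the latencies being rounded to one decimal place.

```python
# (block size in kbytes, usec per IO, reported Mbytes/s), copied from the listings
SAMPLES = {
    "DC P4802x 200GB": [(4, 22.9, 170.5), (128, 96.6, 1293.5)],
    "DC P4801x 100GB": [(4, 23.4, 167.1), (128, 161.1, 776.1)],
    "900p 280GB": [(4, 12.6, 309.4), (128, 72.1, 1732.7)],
}


def throughput_mib_s(kbytes, usec_per_io):
    """Throughput with one IO in flight: bytes per IO times IOs per second."""
    bytes_per_io = kbytes * 1024
    ios_per_second = 1_000_000 / usec_per_io
    return bytes_per_io * ios_per_second / (1024 * 1024)


if __name__ == "__main__":
    for drive, rows in SAMPLES.items():
        for kbytes, usec, reported in rows:
            computed = throughput_mib_s(kbytes, usec)
            print(f"{drive:16s} {kbytes:4d}k: computed {computed:7.1f}, reported {reported:7.1f}")
```

Read this way, the latency column is the one to compare for small synchronous writes: at 4 kbytes the 900p completes a write in roughly half the time of either of the other two drives listed.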

I'm still sourcing parts from my DL360 G8 server. Just to summarize this is what I'm trying to achieve.

Boot disk in RAID 1 via the onboard p480 controller:

- 2 x Hikvision C100 480GB SSD. Bought these for about $49 each.

- Power fed from the onboard optical-drive slimline SATA connector. For this I'd need the following.

  - Female slimline SATA power to female 4-pin Molex. These are harder to find, but I found them on Taobao.

  - Male 4-pin Molex to 2 x female slimline SATA power. These are very easy to find.

- 8 x Samsung 860 EVO 1TB SSD. I thought I recently saw some awesome deals, probably on Walmart, but I can't find them now. :(

- 1 x Optane 900P 280GB. They are available on Taobao for about ¥1529, so about $228.

Previously I mentioned removing the SAS expander so I could place the 2 boot disks in the drive bays at the front, but removing it does not seem feasible. The PSU only provides +12V DC. The cable that delivers power from the motherboard (10-pin Molex) has only yellow and black wires at the SAS expander end, further implying 12V DC only. I guess the SAS expander circuitry splits this into +12V and +5V DC for the drives. In other words, I can't remove the SAS expander and still get +5V DC for the disks I'm going to use.

There are only 2 sources of +5V DC on this server.

- USB but they are all 2.0 so insufficient power to power 2 x SSDs.

- Slim SATA port on the motherboard for the optical drive which should be sufficient to power 2 x SSD.

- Joined

- May 19, 2017

- Messages

- 1,829

Agreed that the 900p is a better SLOG host as long as you’re ok with losing a PCIe slot or two (mirrored) and your primary concern is speeding up small file writes.

I only have two PCIe slots on my board and a single-vdev Z3 array, so the SLOG will quickly be bound by the slow I/O in the back end. If I go mirrored, it will be via a riser card, allowing me to host the L2ARC and a 2nd SLOG on one bifurcated PCIe card. That leaves a second PCIe slot for something else.

However, as long as you have the PCIe slots and the spare watts from the PS, the 900p is likely overall a better performer and costs about the same. Thanks for pulling that info together.

terrenceTtibbs

Dabbler

- Joined

- May 30, 2021

- Messages

- 10

I get 1GB/s read and around 500-750MB/s write using 7 x 3TB 7200 RPM drives in RAIDZ2. This is with a mixture of small and large files to make it real world. The CPU in the client workstation is also an important consideration.

- Joined

- May 19, 2017

- Messages

- 1,829

Presumably you have sync turned off, bypassing the need for a SLOG?

terrenceTtibbs

Dabbler

- Joined

- May 30, 2021

- Messages

- 10

Hi, I have no idea what SLOG or sync is or does, but I will have a look now and read about it.

terrenceTtibbs

Dabbler

- Joined

- May 30, 2021

- Messages

- 10

I do have 40GB of RAM and 12 threads, and the RAM is always pegged at 38.5GB when transfers happen.

Yorick

Wizard

- Joined

- Nov 4, 2018

- Messages

- 1,912

The thing we have that provides "dual disk failure redundancy" is RAIDz2.

Yes, and also 3-way mirrors, though that’s a little brutal on storage efficiency.
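The storage-efficiency point is easy to quantify. The sketch below compares the usable fraction of raw capacity for RAIDZ2 and for a 3-way mirror, both of which survive two disk failures. It is plain arithmetic that ignores RAIDZ padding, metadata and free-space headroom, and the vdev widths are only examples.

```python
def raidz_efficiency(width, parity):
    """Usable fraction of raw capacity in a RAIDZ vdev, ignoring overheads."""
    return (width - parity) / width


def mirror_efficiency(ways):
    """Usable fraction of raw capacity in an n-way mirror vdev."""
    return 1 / ways


if __name__ == "__main__":
    print(f"8-wide RAIDZ2: {raidz_efficiency(8, 2):.0%} usable")
    print(f"7-wide RAIDZ2: {raidz_efficiency(7, 2):.0%} usable")
    print(f"3-way mirror:  {mirror_efficiency(3):.0%} usable")
```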

- Joined

- May 19, 2017

- Messages

- 1,829

Apologies, but AFAIK none of the factors you just listed are relevant to this discussion.

I presume sync is off and hence your drives don’t need a SLOG. Very high write speeds result, but should the NAS get interrupted during a write, the integrity of the data might come into question. So that’s a trade-off you can make, just as some folk use striped RAID for scratch disks.
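For anyone wanting to check this on their own system, the dataset's `sync` property can be read with `zfs get`. The sketch below wraps that call and maps the three possible values to what they mean for a SLOG. The dataset name in the usage comment is hypothetical, the wrapper needs a host with the `zfs` CLI and permission to query the dataset, and the one-line descriptions are a paraphrase rather than official wording.

```python
import subprocess

# The three values the ZFS "sync" dataset property can take.
SYNC_MEANING = {
    "standard": "sync requests from applications are honored; a SLOG can speed these up",
    "always": "every write is treated as synchronous",
    "disabled": "sync requests are ignored and treated as async; a crash can lose recent writes",
}


def read_sync_property(dataset):
    """Return the sync setting of a dataset by calling the zfs CLI."""
    result = subprocess.run(
        ["zfs", "get", "-H", "-o", "value", "sync", dataset],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def describe_sync(value):
    """Explain a sync property value in one line."""
    return SYNC_MEANING.get(value, "unrecognized value: " + value)


# Usage on a ZFS host (the dataset name is made up):
#   print(describe_sync(read_sync_property("tank/share")))
```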

terrenceTtibbs

Dabbler

- Joined

- May 30, 2021

- Messages

- 10

Hi, I have just checked and sync is set to standard, not off.

- Joined

- May 19, 2017

- Messages

- 1,829

Then your results surprise me. A single-vdev pool of HDDs is typically characterized as having the IOPS of a single HDD, and around 300MB/s writes for large files on a sustained basis.

That’s also my experience here though I’m running one more drive in a Z3, which increases the # of parity writes accordingly. Anyhow, enjoy those high speeds, I am happy for you!
