Rogla (Dabbler) · Joined: Jan 24, 2012 · Messages: 28

Hi!

I think I have a volume with the wrong configuration and would like some advice or confirmation.

It looks like I have two striped raidz2 vdevs. That was not the intention; the goal was a single raidz2 vdev.

The reason I suspect this is simple: space is missing.

There are 8 x 4 TB disks, so I expected nominally 6 x 4 = 24 TB, but Used + Available for the dataset on the volume comes to only about 14 TB.

Snapshots and replication are in use, but only a few snapshots are kept and there are no big changes between them, so they should not hold much data.

Unless I'm failing to understand something...
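A rough way to check the expectation above: the usable space of a RAID-Z vdev is approximately (disks - parity) x disk size, and striped vdevs add up. The sketch below is a simplification that ignores ZFS metadata, padding and reserved space, so real figures come out somewhat lower; the function name `raidz_usable_bytes` is purely illustrative. It also converts to TiB, because `zfs list` and `zpool list` print binary units with a bare "T" suffix.

```python
# Rough usable capacity of striped RAID-Z vdevs, before any ZFS overhead.
# Assumption: usable ~= vdevs * (disks_per_vdev - parity) * disk size; metadata,
# padding and reserved space are ignored, so real figures come out lower.

TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # zfs/zpool print binary units with a bare "T" suffix


def raidz_usable_bytes(vdevs, disks_per_vdev, parity, disk_bytes):
    """Data capacity of `vdevs` identical RAID-Z vdevs striped together."""
    data_disks = disks_per_vdev - parity
    return vdevs * data_disks * disk_bytes


disk = 4 * TB
layouts = {
    "2 x raidz2 (4 disks each)": raidz_usable_bytes(2, 4, 2, disk),
    "1 x raidz2 (8 disks)": raidz_usable_bytes(1, 8, 2, disk),
}

for name, usable in layouts.items():
    print(f"{name}: {usable // TB} TB = {usable / TIB:.1f} TiB")
```

With two 4-disk raidz2 vdevs, four of the eight disks hold parity, which caps the pool at about 16 TB (14.6 TiB), in line with the roughly 14 TB observed; a single 8-disk raidz2 spends only two disks on parity and would give about 24 TB (21.8 TiB) before overhead.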

The questions are:

1) Looking at the output below, is the volume really two striped raidz2 vdevs?

2) If so, how do I rebuild the volume as a single raidz2 vdev, as easily as possible, on 9.10-Stable?

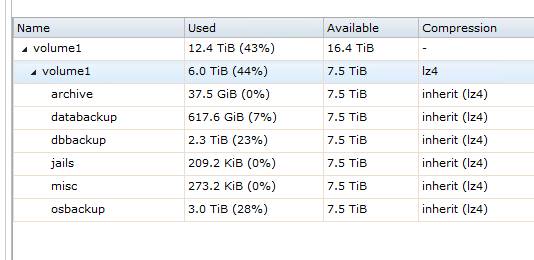

Storage pic:

```
[root@sellafield ~]# zpool status
  pool: volume1
config:

        NAME                                            STATE     READ WRITE CKSUM
        volume1                                         ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/52bd1d7f-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/52f79936-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/5332c03e-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/536beb1f-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
          raidz2-1                                      ONLINE       0     0     0
            gptid/53aa6575-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/53e3d26d-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/541f34b8-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
            gptid/545b8755-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0

errors: No known data errors
```
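For question 1, the vdev names in the config section are the tell: each top-level vdev appears as `<type>-<index>` (here `raidz2-0` and `raidz2-1`) with its member disks indented beneath it, and ZFS stripes data across all top-level vdevs. The sketch below parses that section of the output and reports how many disks sit under each top-level vdev. It assumes, as in this pool, that member disks are listed by `gptid/` label; the helper name `top_level_vdevs` is hypothetical.

```python
import re

# Config section of the `zpool status` output above, indentation restored.
STATUS = """\
NAME                                            STATE     READ WRITE CKSUM
volume1                                         ONLINE       0     0     0
  raidz2-0                                      ONLINE       0     0     0
    gptid/52bd1d7f-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/52f79936-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/5332c03e-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/536beb1f-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
  raidz2-1                                      ONLINE       0     0     0
    gptid/53aa6575-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/53e3d26d-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/541f34b8-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
    gptid/545b8755-4fc5-11e4-b903-b82a72d6f2c2  ONLINE       0     0     0
"""

# Top-level redundancy vdevs are named <type>-<index>, e.g. raidz2-0 or mirror-1.
VDEV_RE = re.compile(r"^\s*((?:raidz[123]?|mirror)-\d+)\s")
# Assumption: member disks are listed by gptid label, as in this pool.
DISK_RE = re.compile(r"^\s*(gptid/\S+)\s")


def top_level_vdevs(status_text):
    """Map each top-level vdev name to the disks listed beneath it."""
    vdevs = {}
    current = None
    for line in status_text.splitlines():
        vdev = VDEV_RE.match(line)
        if vdev:
            current = vdev.group(1)
            vdevs[current] = []
            continue
        disk = DISK_RE.match(line)
        if disk and current is not None:
            vdevs[current].append(disk.group(1))
    return vdevs


vdevs = top_level_vdevs(STATUS)
for name, disks in vdevs.items():
    print(f"{name}: {len(disks)} disks")
print(f"top-level vdevs striped together: {len(vdevs)}")
```

Here it reports two top-level raidz2 vdevs of four disks each, i.e. a stripe of two raidz2 vdevs. A pool built as one 8-disk raidz2 would instead show a single `raidz2-0` with all eight gptid entries beneath it.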

```
[root@sellafield ~]# zpool list -v
NAME                                            SIZE  ALLOC   FREE  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
volume1                                        28.8T  12.4T  16.4T         -     4%    43%  1.00x  ONLINE  /mnt
  raidz2                                       14.4T  6.19T  8.19T         -     4%    43%
    gptid/52bd1d7f-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/52f79936-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/5332c03e-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/536beb1f-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
  raidz2                                       14.4T  6.19T  8.19T         -     4%    43%
    gptid/53aa6575-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/53e3d26d-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/541f34b8-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
    gptid/545b8755-4fc5-11e4-b903-b82a72d6f2c2      -      -      -         -      -      -
```

```
[root@sellafield ~]# zfs list -t all
NAME                                                       USED  AVAIL  REFER  MOUNTPOINT
volume1                                                   5.99T  7.50T   546K  /mnt/volume1
volume1/.system                                            341M  7.50T   199M  legacy
volume1/.system/configs-c7c03331e27944b6afc5cf519d212cdd  92.2M  7.50T  92.2M  legacy
volume1/.system/cores                                     1.08M  7.50T  1.08M  legacy
volume1/.system/rrd-c7c03331e27944b6afc5cf519d212cdd      34.2M  7.50T  34.2M  legacy
volume1/.system/samba4                                    3.60M  7.50T  3.60M  legacy
volume1/.system/syslog-c7c03331e27944b6afc5cf519d212cdd   10.8M  7.50T  10.8M  legacy
volume1/archive                                           37.5G  7.50T  37.5G  /mnt/volume1/archive
volume1/archive@auto-20181008.0700-1w                         0      -  37.5G  -
volume1/databackup                                         618G  7.50T   618G  /mnt/volume1/databackup
volume1/databackup@auto-20181007.0800-1w                   174K      -   618G  -
volume1/databackup@auto-20181008.0800-1w                      0      -   618G  -
volume1/dbbackup                                          2.33T  7.50T  2.33T  /mnt/volume1/dbbackup
volume1/dbbackup@auto-20181007.1100-1w                    1.18M      -  2.30T  -
volume1/jails                                              209K  7.50T   209K  /mnt/volume1/jails
volume1/misc                                               273K  7.50T   273K  /mnt/volume1/misc
volume1/misc@auto-20181007.1200-1w                            0      -   273K  -
volume1/osbackup                                          3.02T  7.50T  3.00T  /mnt/volume1/osbackup
volume1/osbackup@auto-20181007.0900-1w                    24.1G      -  3.00T  -
volume1/osbackup@auto-20181008.0900-1w                     198K      -  3.00T  -
```
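To relate the two listings: `zpool list` reports raw space, which for RAID-Z vdevs includes parity, while `zfs list` reports space usable by datasets. A minimal sketch of that comparison, using figures copied from the output above; the `to_bytes` helper is a hypothetical name, and the half-of-raw estimate assumes two parity disks out of four per vdev. The snapshot total simply adds the per-snapshot USED values, which is only a rough indicator, because space shared by several snapshots is not counted in any single snapshot's USED; the per-dataset `usedbysnapshots` property gives the exact figure.

```python
# Size suffixes in zfs/zpool output are binary (K = 2**10, ..., T = 2**40).
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}
TIB, GIB = 2**40, 2**30


def to_bytes(size):
    """Convert a zfs/zpool size string such as '7.50T', '174K' or '0' to bytes."""
    if size[-1] in UNITS:
        return float(size[:-1]) * UNITS[size[-1]]
    return float(size)


# Figures copied from the output above.
vdev_raw = [to_bytes("14.4T"), to_bytes("14.4T")]             # zpool list -v, each raidz2
root_used, root_avail = to_bytes("5.99T"), to_bytes("7.50T")  # zfs list, volume1
snapshot_used = ["0", "174K", "0", "1.18M", "0", "24.1G", "198K"]  # zfs list, @auto-* rows

DISKS_PER_VDEV, PARITY = 4, 2

# Raw RAID-Z size includes parity; keep only the data-disk share (overhead ignored).
expected = sum(raw * (DISKS_PER_VDEV - PARITY) / DISKS_PER_VDEV for raw in vdev_raw)
reported = root_used + root_avail
# Sum of per-snapshot USED: a rough indicator, not the exact usedbysnapshots total.
snapshots = sum(to_bytes(s) for s in snapshot_used)

print(f"usable estimate from zpool list: {expected / TIB:.2f} TiB")
print(f"zfs USED + AVAIL for volume1:    {reported / TIB:.2f} TiB")
print(f"sum of snapshot USED:            {snapshots / GIB:.1f} GiB")
```

On these figures the estimate (14.40 TiB) and the reported USED + AVAIL (13.49 TiB) are close, while snapshots account for roughly 24 GiB, which suggests the vdev layout rather than the snapshots explains the shortfall. The remaining gap of about 0.9 TiB is plausibly ZFS metadata and reserved space, which this sketch does not model.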