Ray DeMoss (Dabbler; joined Jul 11, 2017; 11 messages)

I am recovering from a TrueNAS system crash in which I lost my jails. It's a long story and not really important. This is my primary home server: it hosts many local LAN shares, and when everything was working, it ran five jails. Currently, I have one jail set up that runs a web server that downloads files. Before the crash, I observed nearly full ISP bandwidth to the Internet from within the jail running the same software. Here is the relevant info; please ask for anything that may be missing.


- I have one NIC connected to the LAN. This is a 10Gbit interface, `ix0`, connected to a 10Gbit switch that connects to the pfSense router.

- I have a Google Fiber connection to the Internet with 2Gbit down and 1Gbit up.

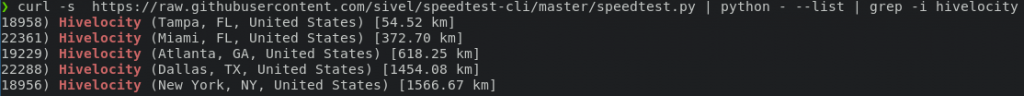

- From root's home directory on the TrueNAS host, I run a command-line speed test to confirm the core functionality is working, as shown below.

- I am only running IPv4, and everything is on one network. There are no VLANs or LAGGs on any interfaces. This is a home server, so I keep it pretty simple.

- The MTU is set to 1500 everywhere, although the host interface appears to support jumbo frames (`JUMBO_MTU`).

- The NIC is an Intel X540, and offloading is enabled. It was also enabled in my previous build.

```
root@truenas:~ # ./speedtest-cli
Retrieving speedtest.net configuration...
Testing from Google Fiber (136.xxx.xxx.xxx)...
Retrieving speedtest.net server list...
Selecting best server based on ping...
Hosted by UTOPIA Fiber (SLC, UT) [7.62 km]: 5.159 ms
Testing download speed................................................................................
Download: 1923.62 Mbit/s
Testing upload speed......................................................................................................
Upload: 834.26 Mbit/s
root@truenas:~ #
```

- The jail is set up using `vnet0` and `bridge0`.

- Here is the `ifconfig` output from the host system:

```
ix0: flags=8963<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
	options=4a538b9<RXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,NOMAP>
	ether xx:xx:xx:xx:xx:60
	inet 192.168.10.96 netmask 0xffffff00 broadcast 192.168.10.255
	media: Ethernet autoselect (10Gbase-T <full-duplex>)
	status: active
	nd6 options=9<PERFORMNUD,IFDISABLED>
bridge0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
	ether xx:xx:xx:xx:xx:91
	id 00:00:00:00:00:00 priority 32768 hellotime 2 fwddelay 15
	maxage 20 holdcnt 6 proto rstp maxaddr 2000 timeout 1200
	root id 00:00:00:00:00:00 priority 32768 ifcost 0 port 0
	member: vnet0.7 flags=143<LEARNING,DISCOVER,AUTOEDGE,AUTOPTP>
	        ifmaxaddr 0 port 10 priority 128 path cost 2000
	member: ix0 flags=143<LEARNING,DISCOVER,AUTOEDGE,AUTOPTP>
	        ifmaxaddr 0 port 5 priority 128 path cost 2000
	groups: bridge
	nd6 options=9<PERFORMNUD,IFDISABLED>
vnet0.7: flags=8963<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
	description: associated with jail: sabnzbd as nic: epair0b
	options=8<VLAN_MTU>
	ether xx:xx:xx:xx:xx:38
	hwaddr xx:xx:xx:xx:xx:0a
	groups: epair
	media: Ethernet 10Gbase-T (10Gbase-T <full-duplex>)
	status: active
	nd6 options=9<PERFORMNUD,IFDISABLED>
```
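To check the statement that offloading is enabled against the output above, a small POSIX `sh` sketch can read the `options=<...>` line of FreeBSD `ifconfig` output and report which of a handful of common offload capabilities it lists. The helper name `offloads` and the set of flags it checks (`RXCSUM`, `TXCSUM`, `TSO4`, `TSO6`, `LRO`) are assumptions made for illustration, not anything TrueNAS provides.

```sh
#!/bin/sh
# Hypothetical helper: list which common offload capabilities appear in the
# options=<...> line of FreeBSD ifconfig output. The flag list is an
# assumption; extend it as needed.
offloads() {
    # Take the first line that begins (after indentation) with "options=".
    # This skips the "nd6 options=" line, which holds ND6 flags instead.
    opts=$(printf '%s\n' "$1" | grep -E '^[[:space:]]*options=' | head -n 1 |
        sed 's/.*<\(.*\)>.*/\1/')
    for flag in RXCSUM TXCSUM TSO4 TSO6 LRO; do
        # Wrap in commas so RXCSUM does not falsely match RXCSUM_IPV6.
        case ",$opts," in
            *",$flag,"*) echo "$flag on" ;;
            *)           echo "$flag off" ;;
        esac
    done
}
```

On the host it would be run as `offloads "$(ifconfig ix0)"`. Fed the `ix0` output pasted above, it reports `RXCSUM` as the only one of those five flags present.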

- I then run the same speed test from inside the jail:

```
root@testjail:~ # ./speedtest-cli
Retrieving speedtest.net configuration...
Testing from QuickWeb Hosting Solutions (184.170.241.12)...
Retrieving speedtest.net server list...
Selecting best server based on ping...
Hosted by CopperNet Systems, Inc. (Kearny, AZ) [113.56 km]: 78.688 ms
Testing download speed................................................................................
Download: 77.04 Mbit/s
Testing upload speed......................................................................................................
Upload: 68.72 Mbit/s
```

- In the previous build of this jail, I saw 1.5 to 1.8 Gbit/s down.

- The previous build of the jail also used `vnet0` and `bridge0`.

- The VNET and bridge were created by TrueNAS when I created the jail; neither existed before I created this test jail.

- Here are the network interfaces inside the jail:

```
root@testjail:~ # ifconfig
lo0: flags=8049<UP,LOOPBACK,RUNNING,MULTICAST> metric 0 mtu 16384
	options=680003<RXCSUM,TXCSUM,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6>
	inet6 ::1 prefixlen 128
	inet6 fe80::1%lo0 prefixlen 64 scopeid 0x1
	inet 127.0.0.1 netmask 0xff000000
	groups: lo
	nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
pflog0: flags=0<> metric 0 mtu 33160
	groups: pflog
epair0b: flags=8863<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
	options=8<VLAN_MTU>
	ether xx:xx:xx:xx:xx:39
	hwaddr xx:xx:xx:xx:xx:0b
	inet 192.168.10.40 netmask 0xffffff00 broadcast 192.168.10.255
	groups: epair
	media: Ethernet 10Gbase-T (10Gbase-T <full-duplex>)
	status: active
	nd6 options=1<PERFORMNUD>
```
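Because the host and jail runs above picked different servers (UTOPIA Fiber in SLC at 5.159 ms versus CopperNet Systems in Kearny, AZ at 78.688 ms), it helps to save each run's output and compare the numbers directly; speedtest-cli's `--list` and `--server` options can also pin both runs to the same server. The sketch below is a hypothetical POSIX `sh` helper (`mbps`, `pct`, and `compare` are illustrative names, not part of speedtest-cli) that pulls the `Download:` and `Upload:` figures out of saved speedtest-cli text and prints the jail's result as a percentage of the host's.

```sh
#!/bin/sh
# Hypothetical helpers for comparing two saved speedtest-cli outputs.

# mbps LABEL TEXT -> the Mbit/s number on the "LABEL:" line of TEXT
mbps() {
    printf '%s\n' "$2" | awk -v label="$1:" '$1 == label { print $2; exit }'
}

# pct PART WHOLE -> PART as a percentage of WHOLE, one decimal place
pct() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f%%\n", 100 * a / b }'
}

# compare HOST_TEXT JAIL_TEXT -> one summary line per direction
compare() {
    host_out=$1
    jail_out=$2
    for label in Download Upload; do
        h=$(mbps "$label" "$host_out")
        j=$(mbps "$label" "$jail_out")
        echo "$label: jail $j vs host $h Mbit/s ($(pct "$j" "$h") of host)"
    done
}
```

For example, after saving each run with `./speedtest-cli > host.txt` on the host and `./speedtest-cli > jail.txt` in the jail (hypothetical file names, copied to one place), `compare "$(cat host.txt)" "$(cat jail.txt)"` would report the jail at about 4.0% of the host's download and 8.2% of its upload for the runs shown here.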

I've been reading the forums for hours, and while others have posted similar questions, their situations have been fairly different: some use VLANs, some use LAGG or other configurations. My setup is straightforward, with the VNET connected to the bridge, which connects to the `ix0` interface, and I am still hitting a network performance issue.

I would really appreciate any suggestions to improve or compare performance. Does this look like it's configured properly? I'm fairly advanced with network hardware, but I'm not an expert with virtualized components like VNETs or bridges.