DMDComposer

Hi,

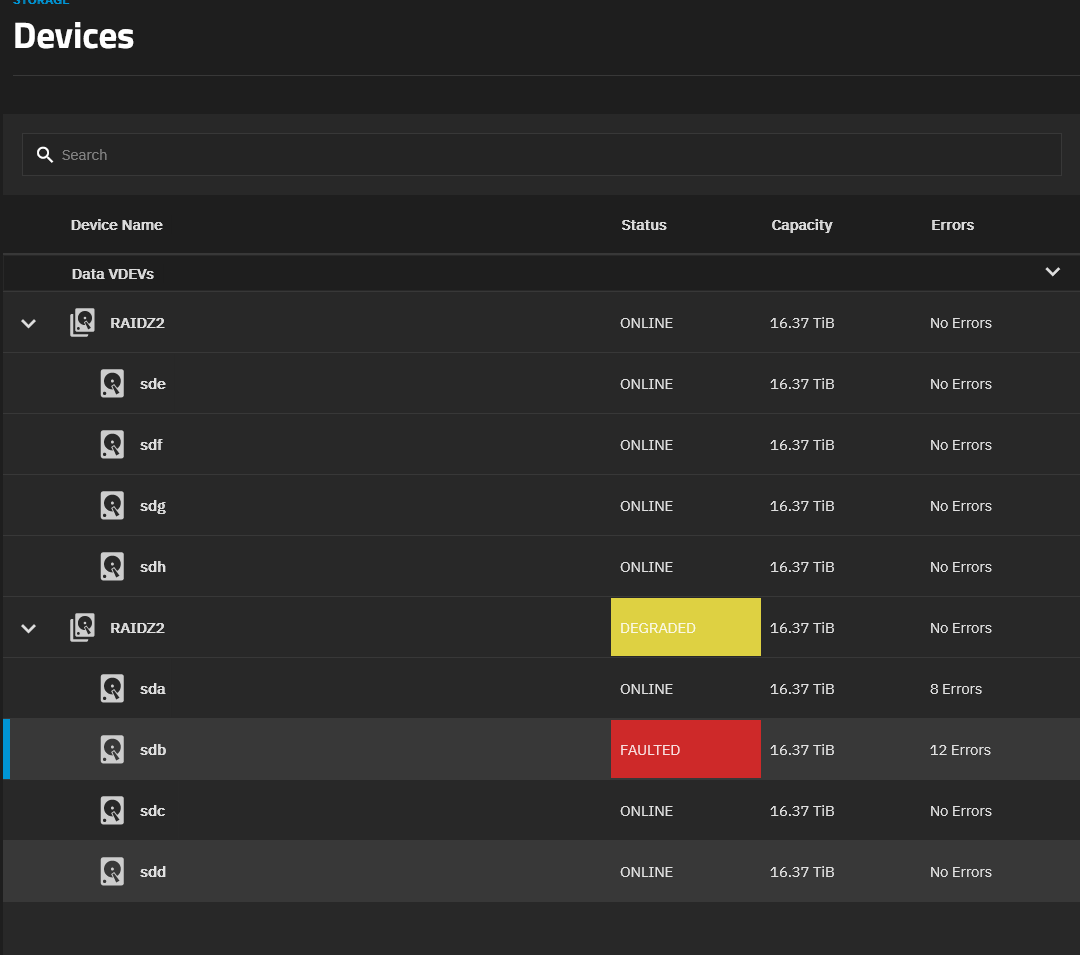

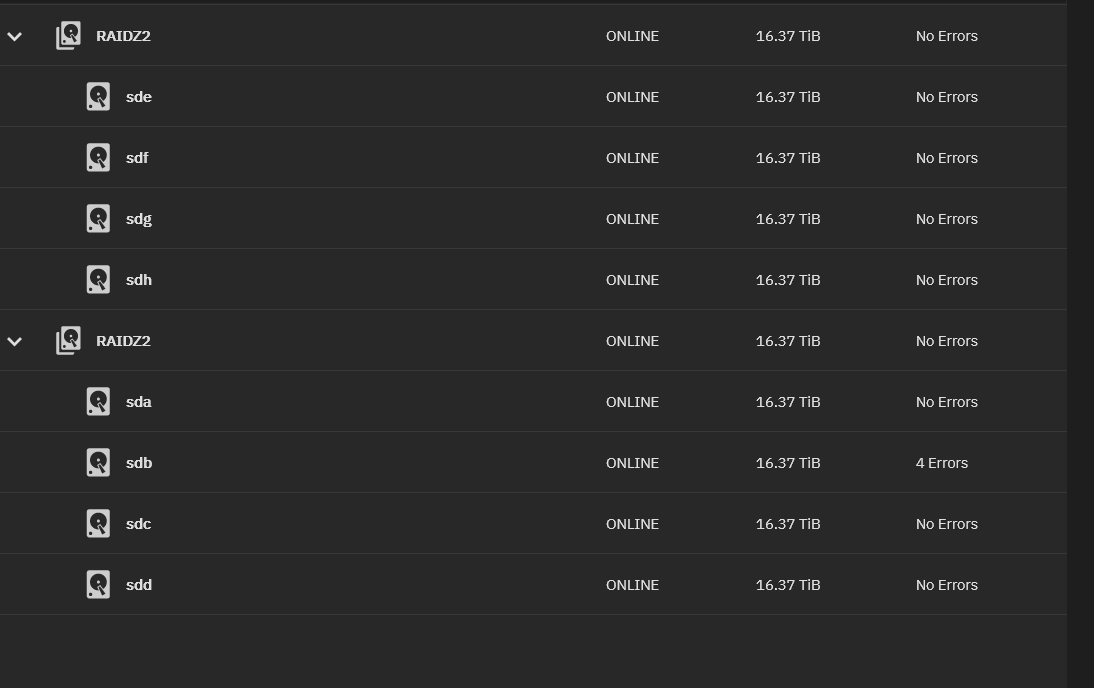

I'm new to NAS servers in general. I noticed that one of my VDEVs was showing as degraded. Both "sda" and "sdb" were showing errors, with "sdb" being the one marked as faulted. After a restart the VDEV now looks fine, but "sdb" still shows some errors, just far fewer than before. I've attached two pictures.

My question is: is one of my HDDs, presumably "sdb", at risk of failing? Do I need to replace the drive ASAP, or should I just keep an eye on it? I would appreciate any insight!

Cheers,

DMDComposer
