darksoul

Dabbler

- Joined

- Dec 1, 2020

- Messages

- 11

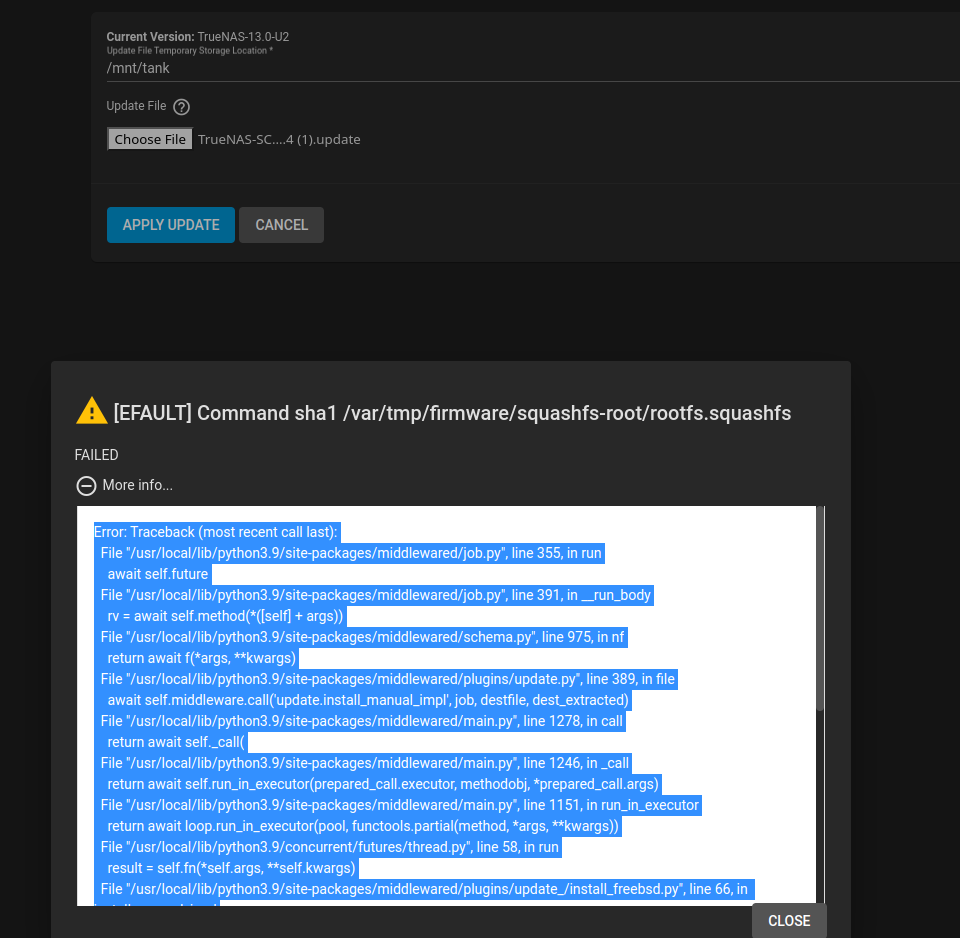

When trying to update to SCALE from CORE 13.02-U2, I get the errors below.

Note: I have downloaded the update twice.

The server is fully patched to 13.02-U2.

The server was rebooted after patching, and again before trying to patch with the second download.

I have tried using both the temp storage and my pool called TANK (/mnt/tank). I always get the same errors.

On a second attempt using the temp storage, I get an error that the /var/tmp/firmware drive is full. I am at a loss; generally my upgrades are flawless. Is there something I'm missing that I need to do first?

Error: Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 355, in run

await self.future

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 391, in __run_body

rv = await self.method(*([self] + args))

File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 975, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/update.py", line 389, in file

await self.middleware.call('update.install_manual_impl', job, destfile, dest_extracted)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1278, in call

return await self._call(

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1246, in _call

return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args)

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1151, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

File "/usr/local/lib/python3.9/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/update_/install_freebsd.py", line 66, in install_manual_impl

return self._install_scale(job, path)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/update_/install_freebsd.py", line 86, in _install_scale

return self.middleware.call_sync(

File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1305, in call_sync

return methodobj(*prepared_call.args)

File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/update_/install.py", line 29, in install_scale

our_checksum = subprocess.run(["sha1", os.path.join(mounted, file)], **run_kw).stdout.split()[-1]

File "/usr/local/lib/python3.9/subprocess.py", line 528, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['sha1', '/var/tmp/firmware/squashfs-root/rootfs.squashfs']' returned non-zero exit status 1.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 359, in run

raise handled

middlewared.service_exception.CallError: [EFAULT] Command sha1 /var/tmp/firmware/squashfs-root/rootfs.squashfs failed (code 1):

sha1: /var/tmp/firmware/squashfs-root/rootfs.squashfs: No such file or directory
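The final error says that `sha1` could not find `/var/tmp/firmware/squashfs-root/rootfs.squashfs`. One plausible reading, which nothing in the traceback confirms, is that the update image was never fully extracted, perhaps because the temporary location ran out of space. Below is a minimal diagnostic sketch in Python for checking both conditions from a shell on the server. The function name `diagnose_firmware_dir` and its parameters are hypothetical and not part of middlewared; only the two paths are taken from the traceback.

```python
import os
import shutil


def diagnose_firmware_dir(firmware_dir="/var/tmp/firmware",
                          image_rel="squashfs-root/rootfs.squashfs"):
    """Report free space and whether the extracted image is present.

    Hypothetical helper for illustration; the default paths come from
    the traceback, everything else is an assumption.
    """
    firmware_dir = os.path.abspath(firmware_dir)
    report = {"dir_exists": os.path.isdir(firmware_dir)}

    # shutil.disk_usage needs an existing path, so walk up to the
    # nearest ancestor that exists if the directory itself is missing.
    probe = firmware_dir
    while not os.path.exists(probe):
        parent = os.path.dirname(probe)
        if parent == probe:
            break
        probe = parent

    usage = shutil.disk_usage(probe)
    report["probed_path"] = probe
    report["total_bytes"] = usage.total
    report["free_bytes"] = usage.free

    # This is the file the failing sha1 command was pointed at.
    image = os.path.join(firmware_dir, image_rel)
    report["image_path"] = image
    report["image_exists"] = os.path.isfile(image)
    return report


if __name__ == "__main__":
    for key, value in diagnose_firmware_dir().items():
        print(f"{key}: {value}")
```

If `image_exists` is false while `free_bytes` is close to zero, that would be consistent with the "drive is full" message seen on the second attempt. It does not by itself prove the cause.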
