JohanKarlsson (Cadet, joined Oct 13, 2022)

Dear all,

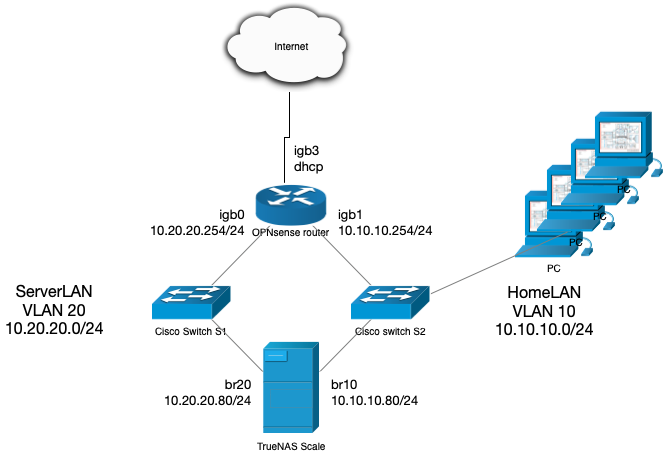

I can't find a way to maintain stable communication with TrueNAS Scale (TNS) when two bridge interfaces (br10 and br20) are active at the same time, each in a different subnet (HomeLAN on br10, ServerLAN on br20). Both subnets are directly connected to the same OPNsense router, as shown in the network diagram.

In this setup, each subnet is a separate VLAN on the same managed layer 2 switch. I have not defined a trunk port on the switch or the router, because each VLAN is connected to a separate port on the router (hence the two switches drawn in the network diagram). I currently use IPv4 only, no IPv6.

Yesterday I did a fresh install of TrueNAS Scale (TNS) 22.02.04, hoping the problem was caused by a configuration error that had slipped into my old install; I have been trying to solve this issue since TNS 22.02.04. Unfortunately, the issue remains.

What I am trying to accomplish:

- The apps and the management WebGUI of TrueNAS Scale are accessible only via bridge interface br20 in the ServerLAN subnet. In other words, HomeLAN traffic to them has to go through the router.

- SMB shares are accessible from both the HomeLAN and ServerLAN subnets (via bridge interfaces br10 and br20, respectively), to keep SMB traffic within the local broadcast domain and reduce the traffic that has to go through the router.

When the trouble begins

The trouble begins when both br10 and br20 are active and have an IPv4 address assigned.

When I log in from the HomeLAN (10.10.10.0/24) to the TrueNAS Scale WebGUI on br20 (10.20.20.80) using http://ip_address_TNS_ServerLAN, the connection to the WebGUI is unstable: it keeps trying to re-establish the connection and shows this message in the WebGUI:

"Waiting for Active TrueNAS controller to come up…"

I can reproduce the issue simply by opening the TNS Dashboard page and doing nothing. At first the network interface counters update. After about 40 seconds they stall; 30 seconds later the message "Waiting for Active TrueNAS controller to come up…" appears, and about 5 seconds after that the connection is live again (for about 40 seconds). This cycle repeats over and over.
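To put numbers on this up/down cycle, a small probe run from a HomeLAN client could log when TCP connections to the WebGUI succeed or fail. This is a minimal sketch, assuming the WebGUI answers plain HTTP on port 80 at 10.20.20.80; the script and its function names are hypothetical, not a TrueNAS tool. It opens a fresh connection per probe, which may not fail in exactly the same way as an already-open browser session.

```python
import socket
import sys
import time
from datetime import datetime


def probe(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out (socket.timeout is an OSError)
        return False


def summarize(samples):
    """Collapse time-ordered (timestamp, ok) samples into (ok, start, end) runs.

    A run ends where the next run starts; the last run ends at the final sample.
    """
    runs = []
    for ts, ok in samples:
        if runs and runs[-1][0] == ok:
            continue  # still in the same UP or DOWN run
        if runs:
            prev_ok, prev_start, _ = runs[-1]
            runs[-1] = (prev_ok, prev_start, ts)  # close the previous run here
        runs.append((ok, ts, ts))
    if runs:
        ok, start, _ = runs[-1]
        runs[-1] = (ok, start, samples[-1][0])
    return runs


def main(host, port, interval=1.0):
    """Probe host:port every `interval` seconds; print state changes, then a summary on Ctrl+C."""
    samples = []
    last = None
    try:
        while True:
            ok = probe(host, port)
            samples.append((time.monotonic(), ok))
            if ok != last:
                print(f"{datetime.now():%H:%M:%S} {'UP' if ok else 'DOWN'}")
                last = ok
            time.sleep(interval)
    except KeyboardInterrupt:
        for ok, start, end in summarize(samples):
            print(f"{'UP' if ok else 'DOWN'} for {end - start:.0f} s")


if __name__ == "__main__":
    if len(sys.argv) == 3:
        main(sys.argv[1], int(sys.argv[2]))
    else:
        print(f"usage: {sys.argv[0]} HOST PORT")
```

Saved under a name of your choice (e.g. `probe.py`), it would be started as `python3 probe.py 10.20.20.80 80` and stopped with Ctrl+C. Running the same probe from a ServerLAN client gives a baseline to compare against.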

The same happens with apps running on TNS when accessed from a web browser in the HomeLAN: for instance, the Ubiquiti UniFi Controller app suffers from the same reconnect cycle and restores the connection after about 30 seconds. SMB file copy sessions are affected as well.

When I do the same from the ServerLAN subnet (10.20.20.0/24), the connection to the WebGUI and the apps is stable.

It makes no difference if I bind the TNS WebGUI to the ServerLAN br20 IPv4 address only. If I do, the GUI reports that it is bound only to br20, but the TNS console still lists the GUI as reachable via both the br10 and br20 IPv4 addresses.

Neither bridge interface uses DHCP; both have static IP addresses assigned.

Apart from the Cisco switch, all network interface cards, including those in my OPNsense router, are Intel cards running at 1 Gbit/s full duplex.

What I tried so far

At first I thought TNS was simply not ready for such a configuration, but now that I have upgraded TNS to 22.12.2, the issue seems to remain.

Kubernetes on TNS (TNS WebGUI/Apps/Settings/Advanced Settings/) is configured to:

- use br20 as Node IP,

- use the br20 interface for routing, and

- use the OPNsense router as default gateway for the 10.20.20.0/24 subnet.

For now, the TNS WebGUI is bound to 0.0.0.0 (TNS WebGUI/System settings/General/GUI/Web Interface IPv4 Address). Even when I restrict the WebGUI to br20 only, the issue remains, for the apps as well.

The switch log shows no STP-related events. As an experiment, I enabled Per-VLAN Rapid STP instead of the default Rapid STP on the Cisco switch; the issue remains the same.

Disabling mDNS on either the OPNsense router or TNS makes no difference either.

My local DNS server runs on the OPNsense router. Pointing the local computers, including TNS, to another DNS server (8.8.8.8) made no difference (I rebooted TNS, the clients and the router).

Currently, no static routes are defined in TNS. As an experiment, I created a static route sending traffic for 10.10.10.0/24 via br10, but that did not help either. I believe it is not needed anyway, since br10 is directly connected to that broadcast domain.
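Why the extra static route changes nothing can be illustrated with a simplified longest-prefix-match lookup over the routing table described in this post. This is a sketch under stated assumptions: the router address 10.20.20.1 and the client address 10.10.10.50 are hypothetical, and a real Linux lookup also weighs route metrics and policy rules, which are ignored here.

```python
import ipaddress

# Simplified model of the TNS IPv4 routing table described in the post.
# Hypothetical: the router's ServerLAN address 10.20.20.1 is not given in the post.
ROUTES = [
    ("10.10.10.0/24", "br10", None),      # connected route: br10 has a HomeLAN address
    ("10.20.20.0/24", "br20", None),      # connected route: br20 = 10.20.20.80
    ("0.0.0.0/0", "br20", "10.20.20.1"),  # the single global default route
]


def lookup(dst, routes=ROUTES):
    """Longest-prefix match: return (interface, gateway) used to reach dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, dev, gw in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, dev, gw)
    if best is None:
        raise LookupError(f"no route to {dst}")
    return best[1], best[2]


client = "10.10.10.50"  # hypothetical HomeLAN client
print(lookup(client))  # -> ('br10', None): replies leave directly via br10
# The manual static route from the experiment duplicates the connected route:
print(lookup(client, ROUTES + [("10.10.10.0/24", "br10", None)]))  # -> ('br10', None)
```

In this model, the destination alone decides the egress interface, so a reply to a HomeLAN client leaves via br10 whether the request arrived on br10 or, through the router, on br20.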

Second thoughts

What puzzles me is why TNS lets you define only one default gateway (TNS WebGUI/Network/Global Configuration/Default Route) instead of one per network or bridge interface. With per-interface gateways, I could assign a default gateway to the br20 bridge interface only and none to br10, so br10 would serve only the local broadcast domain it is connected to.

I also suspected a router issue, but I have run a similar dual-NIC configuration on a macOS-based system with the same router hardware and configuration without any problems. The difference, in my case, is that macOS assigns default gateways per network interface card rather than per server as TNS does. On macOS, I gave only one NIC a default gateway.

So again, it might be a router issue related to asymmetric routing. The thing is: if I tell TNS to advertise the apps and the WebGUI on one network interface/bridge only, and keep SMB file sharing within the local broadcast domain, I should eliminate asymmetric routing. Or am I thinking about this wrong?

I have run out of options and ideas, guys. I hope someone can give me the “golden” tip that solves this issue. I may respond with a delay due to the 6 to 9 hour time difference between the US and Europe. Sorry if variants of this issue have been asked before; after searching for many hours, I could not find a solution that helps me out.
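The per-interface default gateway idea and the asymmetric-routing question can be modelled as source-based policy routing: replies sourced from the br20 address consult their own table whose only exit is the router. Linux provides this mechanism through `ip rule` together with per-table routes (`ip route ... table N`); the sketch below only models the lookup logic and is not TrueNAS configuration. Only 10.20.20.80 comes from the post; the br10 address 10.10.10.80, the router address 10.20.20.1 and the client 10.10.10.50 are hypothetical.

```python
import ipaddress

# Main table: what a single global default route gives you.
MAIN_TABLE = [
    ("10.10.10.0/24", "br10", None),
    ("10.20.20.0/24", "br20", None),
    ("0.0.0.0/0", "br20", "10.20.20.1"),  # hypothetical router address
]
# Extra table consulted only for traffic sourced from the br20 address.
BR20_TABLE = [
    ("10.20.20.0/24", "br20", None),
    ("0.0.0.0/0", "br20", "10.20.20.1"),
]
# Policy rules, evaluated in order: (source prefix, table).
RULES = [
    ("10.20.20.80/32", BR20_TABLE),
    ("0.0.0.0/0", MAIN_TABLE),
]


def lpm(dst, table):
    """Longest-prefix match of dst in table; return (interface, gateway)."""
    addr = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(p), dev, gw) for p, dev, gw in table
               if addr in ipaddress.ip_network(p)]
    if not matches:
        raise LookupError(f"no route to {dst}")
    _, dev, gw = max(matches, key=lambda m: m[0].prefixlen)
    return dev, gw


def route_reply(src, dst, rules):
    """Use the first rule whose source prefix matches src, then look up dst there.

    Simplification: real Linux policy routing falls through to the next rule when
    a table has no matching route; every table here has a default route.
    """
    s = ipaddress.ip_address(src)
    for prefix, table in rules:
        if s in ipaddress.ip_network(prefix):
            return lpm(dst, table)
    raise LookupError(f"no rule for source {src}")


client = "10.10.10.50"  # hypothetical HomeLAN client
# WebGUI reply with one destination-based table: bypasses the router.
print(route_reply("10.20.20.80", client, [("0.0.0.0/0", MAIN_TABLE)]))  # -> ('br10', None)
# Same reply with a source rule for br20: goes back through the router.
print(route_reply("10.20.20.80", client, RULES))  # -> ('br20', '10.20.20.1')
# SMB reply from the (hypothetical) br10 address stays in the HomeLAN.
print(route_reply("10.10.10.80", client, RULES))  # -> ('br10', None)
```

Under this simplified model, binding the WebGUI to the br20 address alone does not change the reply path; the first case shows a request that entered through the router on br20 being answered directly on br10, which the router never sees.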

Thank you for your time and thoughts.

Johan