oRAirwolf


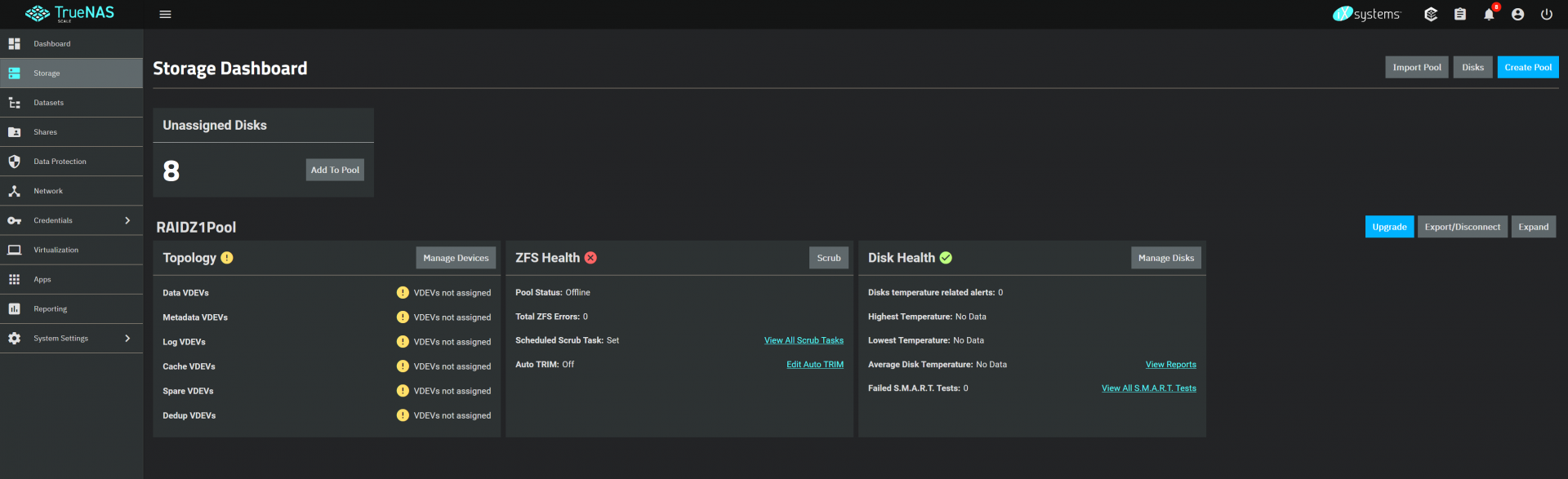

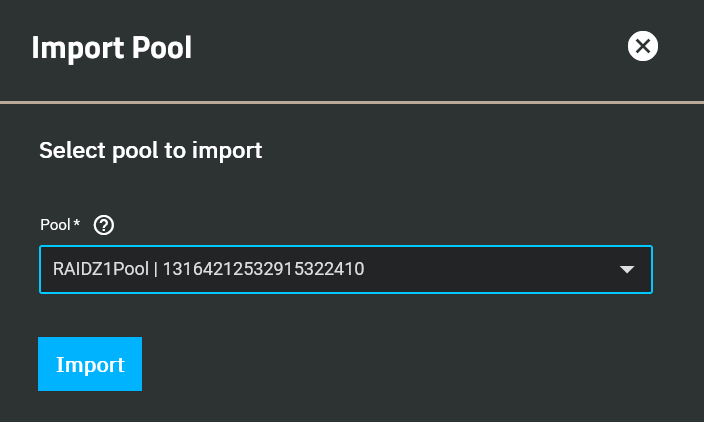

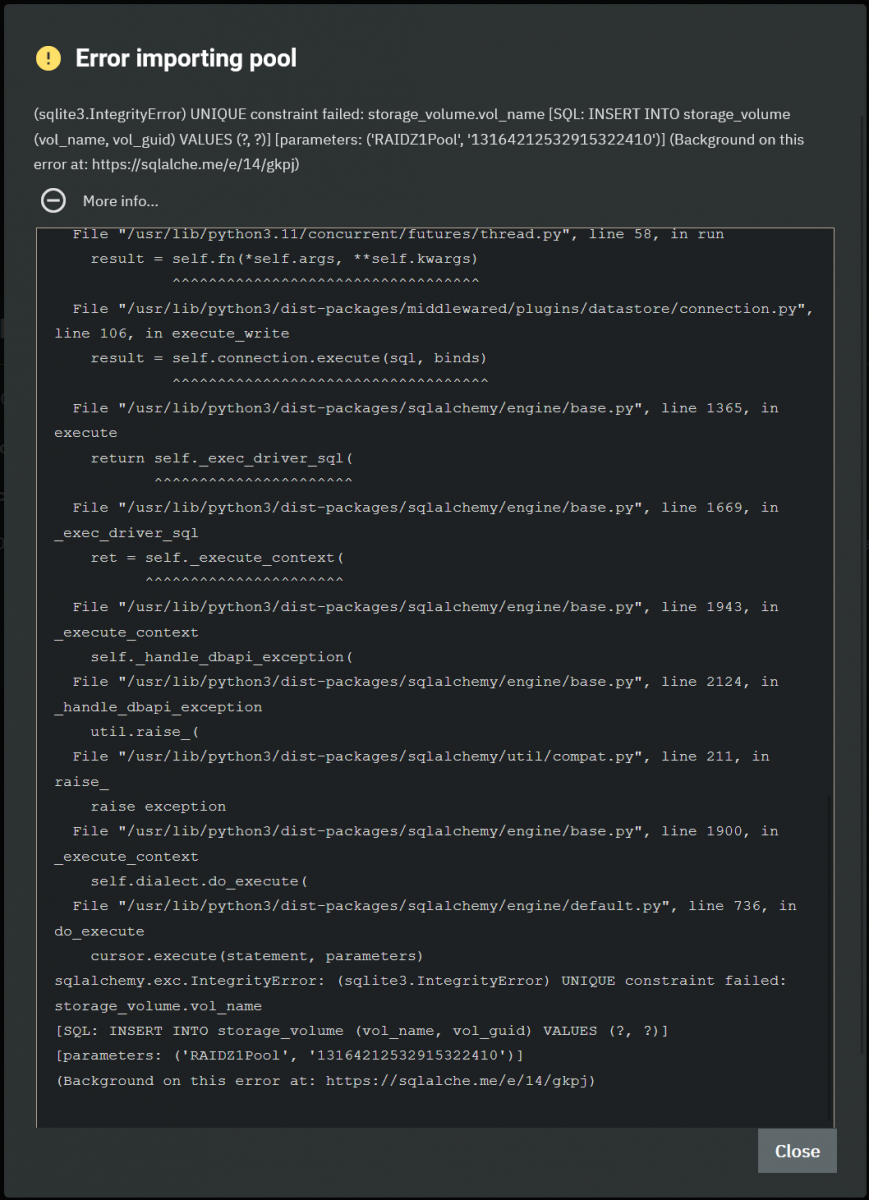

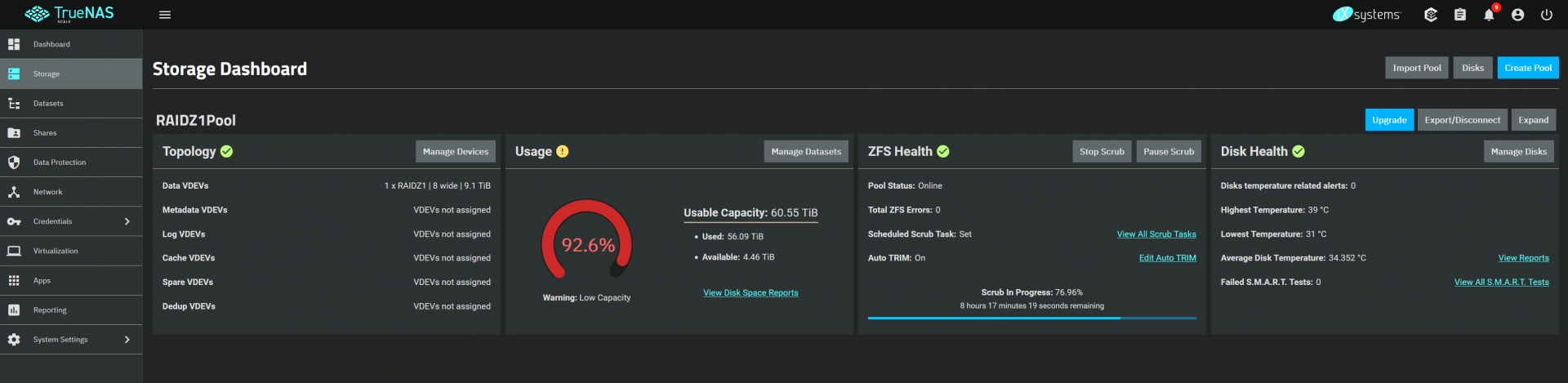

My TrueNAS SCALE server fails to import the storage pool after an upgrade to version 23. When it first starts up, there is no pool attached, and if I go to Storage > Import Pool, I get the following error:

Afterwards, I see that the pool has in fact imported, but I am unable to access it via SMB. I can see the server, but when I navigate to it in Windows, it just shows an empty folder. This may be a separate issue, though. I was able to access the share before upgrading.

I am able to browse the share via SSH or the built-in shell.

If I restart the server, the pool is again disconnected and I have to import it again to access it.

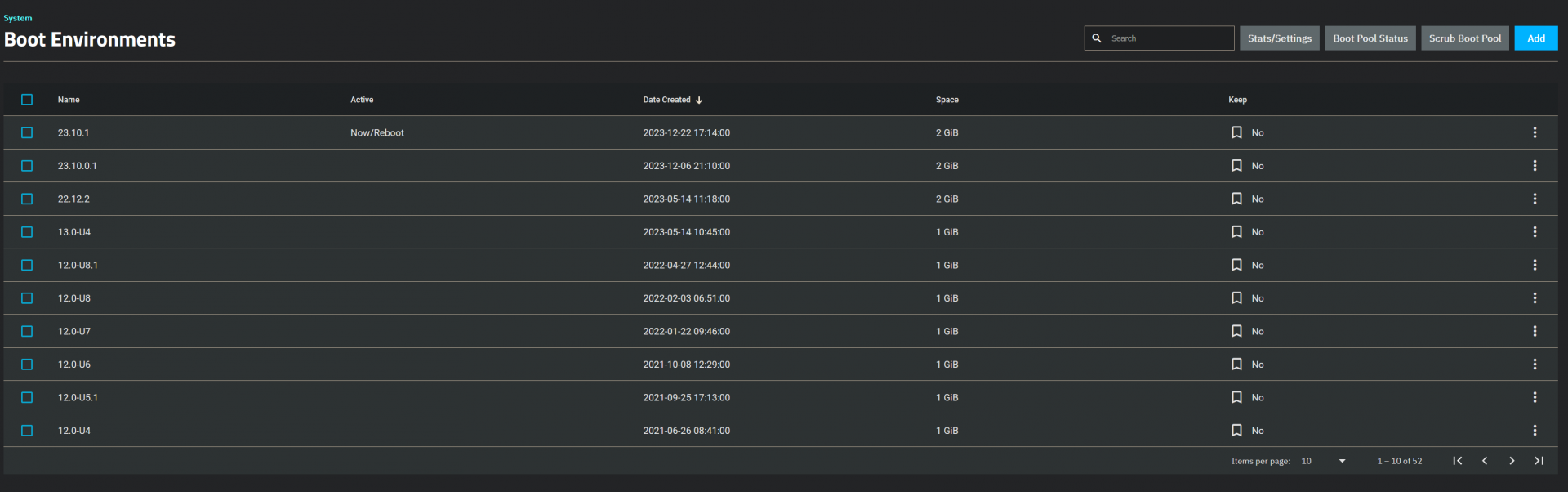

Here are my boot environments to show my past versions:

Any help would be greatly appreciated.

Code:

(sqlite3.IntegrityError) UNIQUE constraint failed: storage_volume.vol_name

[SQL: INSERT INTO storage_volume (vol_name, vol_guid) VALUES (?, ?)]

[parameters: ('RAIDZ1Pool', '13164212532915322410')]

(Background on this error at: https://sqlalche.me/e/14/gkpj)

Code:

Error: Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 1900, in _execute_context

self.dialect.do_execute(

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/default.py", line 736, in do_execute

cursor.execute(statement, parameters)

sqlite3.IntegrityError: UNIQUE constraint failed: storage_volume.vol_name

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 427, in run

await self.future

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 465, in __run_body

rv = await self.method(*([self] + args))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 177, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 44, in nf

res = await f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/import_pool.py", line 186, in import_pool

pool_id = await self.middleware.call('datastore.insert', 'storage.volume', {

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1399, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1342, in _call

return await methodobj(*prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 177, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/datastore/write.py", line 62, in insert

result = await self.middleware.call(

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1399, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1353, in _call

return await self.run_in_executor(prepared_call.executor, methodobj, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1251, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/datastore/connection.py", line 106, in execute_write

result = self.connection.execute(sql, binds)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 1365, in execute

return self._exec_driver_sql(

^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 1669, in _exec_driver_sql

ret = self._execute_context(

^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 1943, in _execute_context

self._handle_dbapi_exception(

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 2124, in _handle_dbapi_exception

util.raise_(

File "/usr/lib/python3/dist-packages/sqlalchemy/util/compat.py", line 211, in raise_

raise exception

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/base.py", line 1900, in _execute_context

self.dialect.do_execute(

File "/usr/lib/python3/dist-packages/sqlalchemy/engine/default.py", line 736, in do_execute

cursor.execute(statement, parameters)

sqlalchemy.exc.IntegrityError: (sqlite3.IntegrityError) UNIQUE constraint failed: storage_volume.vol_name

[SQL: INSERT INTO storage_volume (vol_name, vol_guid) VALUES (?, ?)]

[parameters: ('RAIDZ1Pool', '13164212532915322410')]

(Background on this error at: https://sqlalche.me/e/14/gkpj)

Code:

Dec 23 03:24:41 epsilonshrike kernel: block device autoloading is deprecated and will be removed.

Dec 23 03:24:41 epsilonshrike kernel: md/raid1:md127: not clean -- starting background reconstruction

Dec 23 03:24:41 epsilonshrike kernel: md/raid1:md127: active with 2 out of 2 mirrors

Dec 23 03:24:41 epsilonshrike kernel: md127: detected capacity change from 0 to 4188160

Dec 23 03:24:41 epsilonshrike kernel: md: resync of RAID array md127

Dec 23 03:24:41 epsilonshrike kernel: block device autoloading is deprecated and will be removed.

Dec 23 03:24:41 epsilonshrike kernel: md/raid1:md126: not clean -- starting background reconstruction

Dec 23 03:24:41 epsilonshrike kernel: md/raid1:md126: active with 2 out of 2 mirrors

Dec 23 03:24:41 epsilonshrike kernel: md126: detected capacity change from 0 to 4188160

Dec 23 03:24:41 epsilonshrike kernel: md: resync of RAID array md126

Dec 23 03:24:42 epsilonshrike kernel: block device autoloading is deprecated and will be removed.

Dec 23 03:24:42 epsilonshrike kernel: md/raid1:md125: not clean -- starting background reconstruction

Dec 23 03:24:42 epsilonshrike kernel: md/raid1:md125: active with 2 out of 2 mirrors

Dec 23 03:24:42 epsilonshrike kernel: md125: detected capacity change from 0 to 4188160

Dec 23 03:24:42 epsilonshrike kernel: md: resync of RAID array md125

Dec 23 03:24:42 epsilonshrike kernel: block device autoloading is deprecated and will be removed.

Dec 23 03:24:42 epsilonshrike kernel: md/raid1:md124: not clean -- starting background reconstruction

Dec 23 03:24:42 epsilonshrike kernel: md/raid1:md124: active with 2 out of 2 mirrors

Dec 23 03:24:42 epsilonshrike kernel: md124: detected capacity change from 0 to 4188160

Dec 23 03:24:42 epsilonshrike kernel: md: resync of RAID array md124

Dec 23 03:24:43 epsilonshrike kernel: Adding 2094076k swap on /dev/mapper/md127. Priority:-2 extents:1 across:2094076k FS

Dec 23 03:24:44 epsilonshrike kernel: Adding 2094076k swap on /dev/mapper/md126. Priority:-3 extents:1 across:2094076k FS

Dec 23 03:24:44 epsilonshrike kernel: Adding 2094076k swap on /dev/mapper/md125. Priority:-4 extents:1 across:2094076k FS

Dec 23 03:24:44 epsilonshrike kernel: Adding 2094076k swap on /dev/mapper/md124. Priority:-5 extents:1 across:2094076k FS

Dec 23 03:24:56 epsilonshrike kernel: md: md127: resync done.

Dec 23 03:24:56 epsilonshrike kernel: md: md126: resync done.

Dec 23 03:24:56 epsilonshrike kernel: md: md125: resync done.

Dec 23 03:24:57 epsilonshrike kernel: md: md124: resync done.
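
For anyone reading the traceback, here is a minimal sketch of what the UNIQUE constraint message means in isolation. This is not the TrueNAS middleware code. The table and column names are copied from the SQL in the error, but the schema, the in-memory database, and the `try_insert` helper are assumptions made up for illustration. It only shows that inserting a second row with the same `vol_name` fails in this way, which would be consistent with a `RAIDZ1Pool` entry already existing in the configuration database when the import runs.

```python
import sqlite3


def try_insert(conn, name, guid):
    """Hypothetical helper: insert a row, return None on success or the error text."""
    try:
        with conn:  # commits on success, rolls back on exception
            conn.execute(
                "INSERT INTO storage_volume (vol_name, vol_guid) VALUES (?, ?)",
                (name, guid),
            )
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


# In-memory stand-in for the real config database (assumption).
conn = sqlite3.connect(":memory:")

# Assumed schema: only the UNIQUE constraint on vol_name is implied by the error.
conn.execute(
    "CREATE TABLE storage_volume ("
    "id INTEGER PRIMARY KEY, vol_name TEXT UNIQUE, vol_guid TEXT)"
)

# The first insert succeeds; the second reuses the same name and is rejected.
first = try_insert(conn, "RAIDZ1Pool", "13164212532915322410")
second = try_insert(conn, "RAIDZ1Pool", "13164212532915322410")

print(first)
print(second)
```

The second call returns the same `UNIQUE constraint failed: storage_volume.vol_name` text that appears in the traceback, while the first row stays in the table.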
