Chewie198

Cadet

- Joined

- Jan 15, 2014

- Messages

- 8

I have a TrueNAS server that I've been maintaining for roughly a decade, going back to the FreeNAS 9 days. The first several years were on dedicated hardware and then eventually I migrated it to a Proxmox VM with dedicated SAS and networking passthrough on a Supermicro EYPC server. Last month I updated from TrueNAS Core 12.0-u8 to TrueNAS-SCALE-22.02.0.1 in order to take advantage of some of the improved synergies running Linux guests under KVM. The migration process itself completed successfully, and I only had to make a few manual changes afterwards to get my shares back online and networking configured correctly.

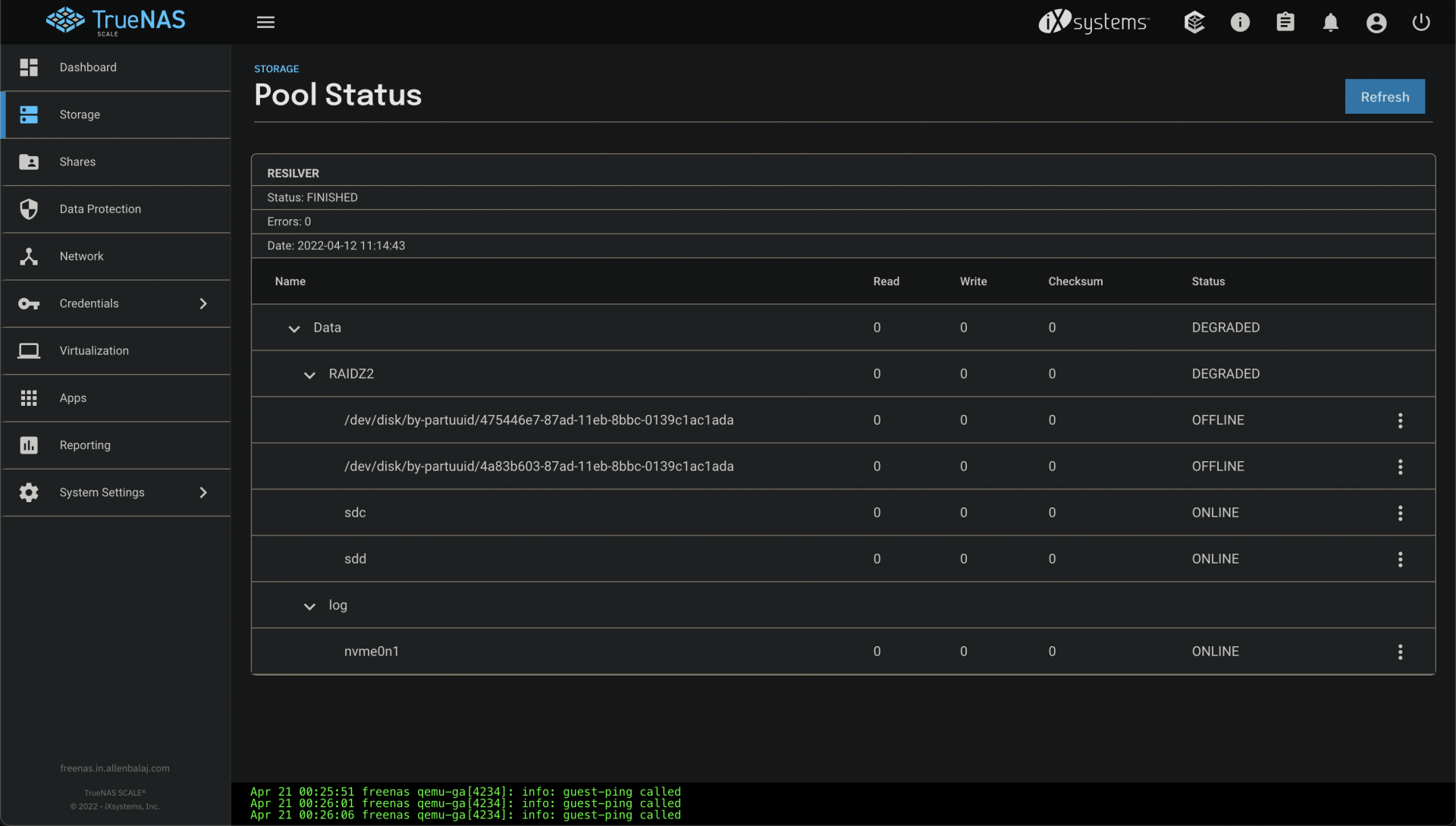

Functionally, I can access the data shares just fine, scrubs complete with no errors, and most of the dashboard functions normally, but certain operations like replications after the intial one are hanging indefinitely in waiting status or failing with errors. I'm also unable to update the read-only attribute on a mirrored pool with no errors. It just hangs on the spinning circle. The dashboard also reports that 4 out of the 6 CPU cores are at 100% perpetually, although the Proxmox metrics indicate that CPU usage for the VM is at less than 1%. I'm seeing repeated recoverable kernel panics being logged to the console, with no indication of why. One of my 4-drive RaidZ-2 arrays lost two drives roughly a month ago, and so I have a single alert in the console related to those two drives being taken offline (and physically removed from the server), but no other alerts are present. What might be causing these errors and how can I prevent them? I'm about to migrate the entire RaidZ-2 array to SSDs due to the failed drives and wanted to get some insight into this issue before I do so.

If it makes any difference to the troubleshooting process, I have a single Intel Optane NVMe drive attached to the pool as a log device as well. I have no errors ocurring in other VMs on the same server or the hypervisor itself, and no hardware errors logged in the BMC. This was also not occurring under TrueNAS Core 12.0-u8. Shutdown and startup of the VM takes over 15 fifteen minutes sometimes now during the Import or detach Pools phase, but eventually completes successfully.

Functionally, I can access the data shares just fine, scrubs complete with no errors, and most of the dashboard functions normally, but certain operations like replications after the intial one are hanging indefinitely in waiting status or failing with errors. I'm also unable to update the read-only attribute on a mirrored pool with no errors. It just hangs on the spinning circle. The dashboard also reports that 4 out of the 6 CPU cores are at 100% perpetually, although the Proxmox metrics indicate that CPU usage for the VM is at less than 1%. I'm seeing repeated recoverable kernel panics being logged to the console, with no indication of why. One of my 4-drive RaidZ-2 arrays lost two drives roughly a month ago, and so I have a single alert in the console related to those two drives being taken offline (and physically removed from the server), but no other alerts are present. What might be causing these errors and how can I prevent them? I'm about to migrate the entire RaidZ-2 array to SSDs due to the failed drives and wanted to get some insight into this issue before I do so.

If it makes any difference to the troubleshooting process, I have a single Intel Optane NVMe drive attached to the pool as a log device as well. I have no errors ocurring in other VMs on the same server or the hypervisor itself, and no hardware errors logged in the BMC. This was also not occurring under TrueNAS Core 12.0-u8. Shutdown and startup of the VM takes over 15 fifteen minutes sometimes now during the Import or detach Pools phase, but eventually completes successfully.

Code:

Apr 20 17:19:20 freenas kernel: task:txg_sync state:D stack: 0 pid: 3742 ppid: 2 flags:0x00004000 Apr 20 17:19:20 freenas kernel: Call Trace: Apr 20 17:19:20 freenas kernel: __schedule+0x282/0x870 Apr 20 17:19:20 freenas kernel: schedule+0x46/0xb0 Apr 20 17:19:20 freenas kernel: vcmn_err.cold+0x7e/0x80 [spl] Apr 20 17:19:20 freenas kernel: ? spl_kmem_cache_alloc+0x97/0x7c0 [spl] Apr 20 17:19:20 freenas kernel: ? zfs_btree_remove_idx+0xb4/0x9d0 [zfs] Apr 20 17:19:20 freenas kernel: ? zfs_btree_insert_into_leaf+0x233/0x2a0 [zfs] Apr 20 17:19:20 freenas kernel: zfs_panic_recover+0x6d/0x90 [zfs] Apr 20 17:19:20 freenas kernel: range_tree_add_impl+0x305/0xe40 [zfs] Apr 20 17:19:20 freenas kernel: ? lock_timer_base+0x61/0x80 Apr 20 17:19:20 freenas kernel: ? _cond_resched+0x16/0x40 Apr 20 17:19:20 freenas kernel: ? mutex_lock+0xe/0x30 Apr 20 17:19:20 freenas kernel: ? txg_list_add+0x7a/0x90 [zfs] Apr 20 17:19:20 freenas kernel: ? _cond_resched+0x16/0x40 Apr 20 17:19:20 freenas kernel: metaslab_free_concrete+0x11d/0x250 [zfs] Apr 20 17:19:20 freenas kernel: metaslab_free_impl+0xa9/0xe0 [zfs] Apr 20 17:19:20 freenas kernel: metaslab_free+0x168/0x190 [zfs] Apr 20 17:19:20 freenas kernel: zio_free_sync+0xda/0xf0 [zfs] Apr 20 17:19:20 freenas kernel: dsl_scan_free_block_cb+0x65/0x1a0 [zfs] Apr 20 17:19:20 freenas kernel: bpobj_iterate_blkptrs+0xfe/0x360 [zfs] Apr 20 17:19:20 freenas kernel: ? dsl_scan_free_block_cb+0x1a0/0x1a0 [zfs] Apr 20 17:19:20 freenas kernel: bpobj_iterate_impl+0x29a/0x550 [zfs] Apr 20 17:19:20 freenas kernel: ? dsl_scan_free_block_cb+0x1a0/0x1a0 [zfs] Apr 20 17:19:20 freenas kernel: dsl_scan_sync+0x552/0x1350 [zfs] Apr 20 17:19:20 freenas kernel: ? kfree+0xba/0x480 Apr 20 17:19:20 freenas kernel: ? bplist_iterate+0x115/0x130 [zfs] Apr 20 17:19:20 freenas kernel: spa_sync+0x5b3/0xfa0 [zfs] Apr 20 17:19:20 freenas kernel: ? mutex_lock+0xe/0x30 Apr 20 17:19:20 freenas kernel: ? spa_txg_history_init_io+0x101/0x110 [zfs] Apr 20 17:19:20 freenas kernel: txg_sync_thread+0x2e0/0x4a0 [zfs] Apr 20 17:19:20 freenas kernel: ? txg_fini+0x250/0x250 [zfs] Apr 20 17:19:20 freenas kernel: thread_generic_wrapper+0x6f/0x80 [spl] Apr 20 17:19:20 freenas kernel: ? __thread_exit+0x20/0x20 [spl] Apr 20 17:19:20 freenas kernel: kthread+0x11b/0x140 Apr 20 17:19:20 freenas kernel: ? __kthread_bind_mask+0x60/0x60 Apr 20 17:19:20 freenas kernel: ret_from_fork+0x22/0x30 Apr 20 17:21:20 freenas kernel: task:txg_sync state:D stack: 0 pid: 3742 ppid: 2 flags:0x00004000 Apr 20 17:21:20 freenas kernel: Call Trace: Apr 20 17:21:20 freenas kernel: __schedule+0x282/0x870 Apr 20 17:21:20 freenas kernel: schedule+0x46/0xb0 Apr 20 17:21:20 freenas kernel: vcmn_err.cold+0x7e/0x80 [spl] Apr 20 17:21:20 freenas kernel: ? spl_kmem_cache_alloc+0x97/0x7c0 [spl] Apr 20 17:21:20 freenas kernel: ? zfs_btree_remove_idx+0xb4/0x9d0 [zfs] Apr 20 17:21:20 freenas kernel: ? zfs_btree_insert_into_leaf+0x233/0x2a0 [zfs] Apr 20 17:21:20 freenas kernel: zfs_panic_recover+0x6d/0x90 [zfs] Apr 20 17:21:20 freenas kernel: range_tree_add_impl+0x305/0xe40 [zfs] Apr 20 17:21:20 freenas kernel: ? lock_timer_base+0x61/0x80 Apr 20 17:21:20 freenas kernel: ? _cond_resched+0x16/0x40 Apr 20 17:21:20 freenas kernel: ? mutex_lock+0xe/0x30 Apr 20 17:21:20 freenas kernel: ? txg_list_add+0x7a/0x90 [zfs] Apr 20 17:21:20 freenas kernel: ? _cond_resched+0x16/0x40 Apr 20 17:21:20 freenas kernel: metaslab_free_concrete+0x11d/0x250 [zfs] Apr 20 17:21:20 freenas kernel: metaslab_free_impl+0xa9/0xe0 [zfs] Apr 20 17:21:20 freenas kernel: metaslab_free+0x168/0x190 [zfs] Apr 20 17:21:20 freenas kernel: zio_free_sync+0xda/0xf0 [zfs] Apr 20 17:21:20 freenas kernel: dsl_scan_free_block_cb+0x65/0x1a0 [zfs] Apr 20 17:21:20 freenas kernel: bpobj_iterate_blkptrs+0xfe/0x360 [zfs] Apr 20 17:21:20 freenas kernel: ? dsl_scan_free_block_cb+0x1a0/0x1a0 [zfs] Apr 20 17:21:20 freenas kernel: bpobj_iterate_impl+0x29a/0x550 [zfs] Apr 20 17:21:20 freenas kernel: ? dsl_scan_free_block_cb+0x1a0/0x1a0 [zfs] Apr 20 17:21:20 freenas kernel: dsl_scan_sync+0x552/0x1350 [zfs] Apr 20 17:21:20 freenas kernel: ? kfree+0xba/0x480 Apr 20 17:21:20 freenas kernel: ? bplist_iterate+0x115/0x130 [zfs] Apr 20 17:21:20 freenas kernel: spa_sync+0x5b3/0xfa0 [zfs] Apr 20 17:21:20 freenas kernel: ? mutex_lock+0xe/0x30 Apr 20 17:21:20 freenas kernel: ? spa_txg_history_init_io+0x101/0x110 [zfs] Apr 20 17:21:20 freenas kernel: txg_sync_thread+0x2e0/0x4a0 [zfs] Apr 20 17:21:20 freenas kernel: ? txg_fini+0x250/0x250 [zfs] Apr 20 17:21:20 freenas kernel: thread_generic_wrapper+0x6f/0x80 [spl] Apr 20 17:21:20 freenas kernel: ? __thread_exit+0x20/0x20 [spl] Apr 20 17:21:20 freenas kernel: kthread+0x11b/0x140 Apr 20 17:21:20 freenas kernel: ? __kthread_bind_mask+0x60/0x60 Apr 20 17:21:20 freenas kernel: ret_from_fork+0x22/0x30