Hardware:

Supermicro X9SRH-7TF

Intel Xeon 2.1GHz E5-2620 v2 Processor

256GB RAM

45 x 8TB Ultrastar He8 3.5" 7200RPM SAS 6Gb/s

3 x 11 disk RAIDZ-3

2 x 10GbE Intel X540 onboard NICs

FreeNAS 9.3, most current stable release. (Mirrored boot drives with a newly installed OS)

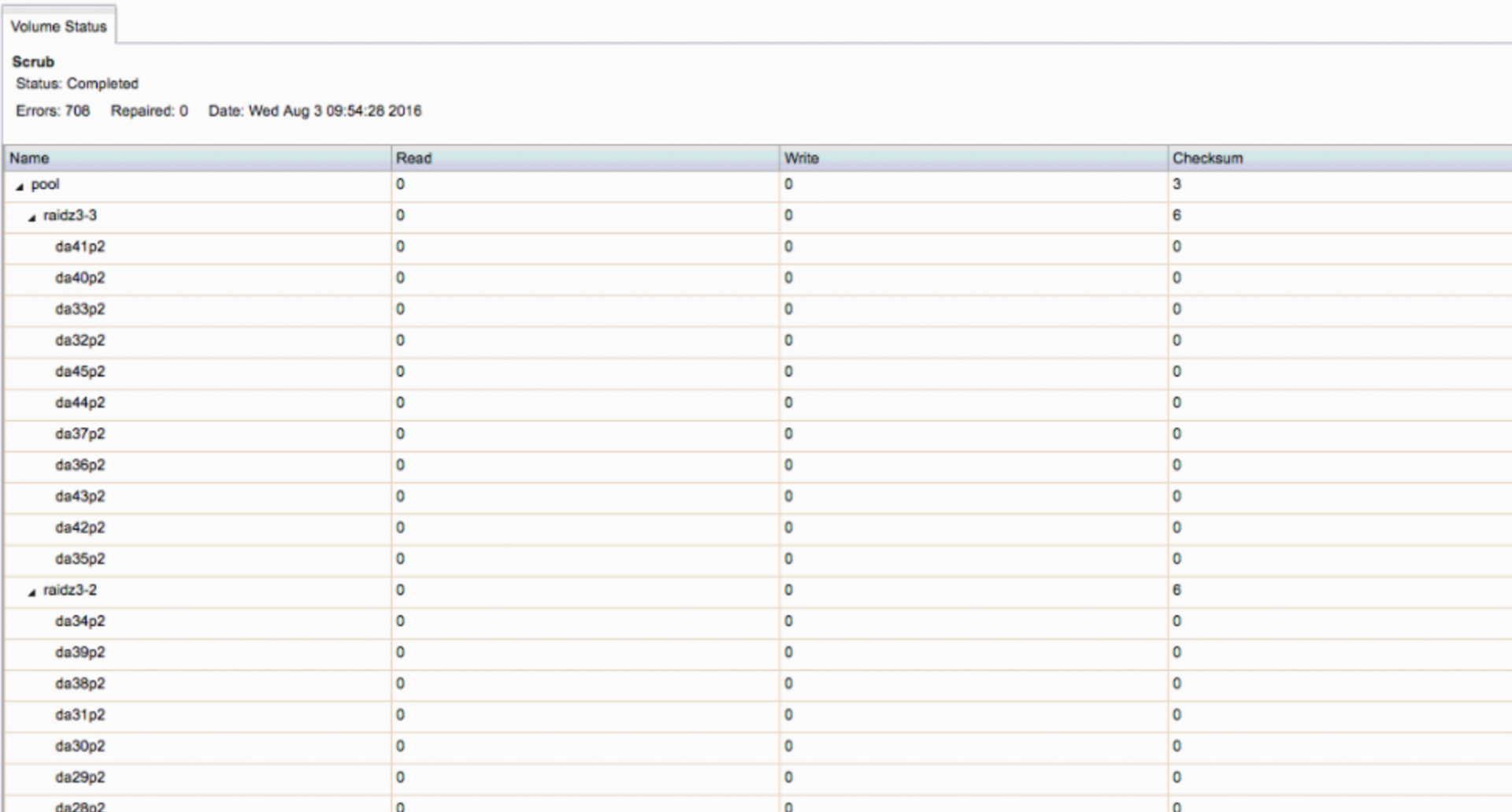

Everything was running stable. We then went to add a second external drive enclosure via a mini-SAS connection. In doing so, we noticed a power issue: all the drives in that external enclosure came back as degraded due to excessive checksum errors. We replaced the board in the enclosure and most of the issue went away. We then ran a scrub on the zpool.

When we came back, there were still uncorrectable errors.

The image above illustrates what the pool looked like before a scrub. After the scrub, all errors were gone EXCEPT the last one: pool/.system@auto-20160720.2300-2d:<0x0>
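For anyone who wants to pick that entry apart, here is a minimal Python sketch that splits it into dataset, snapshot, and object ID. The function name `parse_error_entry` and the regular expression are my own illustration, not part of any ZFS tooling, and the pattern only assumes the `dataset@snapshot:<0xN>` shape seen in the entry above.

```python
import re

# Hypothetical helper: assumes entries shaped like "dataset@snapshot:<0xN>".
ENTRY_RE = re.compile(
    r"^(?P<dataset>[^@:]+)@(?P<snapshot>[^:]+):<(?P<object>0x[0-9a-fA-F]+)>$"
)

def parse_error_entry(entry):
    """Split a permanent-error entry into its parts, or return None if it
    does not match the assumed shape (for example, a plain file path)."""
    match = ENTRY_RE.match(entry.strip())
    if match is None:
        return None
    return {
        "dataset": match.group("dataset"),
        "snapshot": match.group("snapshot"),
        "object_id": int(match.group("object"), 16),
    }

entry = parse_error_entry("pool/.system@auto-20160720.2300-2d:<0x0>")
print(entry)
```

The point is only that the entry names an object number inside a snapshot of the `.system` dataset rather than a file path, which is why there is no obvious file to move or delete.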

No matter what we do, that error does not go away. Even after we run zpool clear, the checksum errors clear from the pool and then accumulate again on an individual vdev, NOT on specific drives.

Here is a shot to illustrate that:

That checksum error will continue to increase until it eventually degrades the pool.
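To make the "vdev, not drives" observation easier to check over time, here is a rough Python sketch that reads the config table from `zpool status` text and separates checksum counts on top-level vdevs from counts on individual disks. The sample table, device names, and counts are made up for illustration and are not our actual output. The parser assumes two-space indentation per level and plain integer counts (it would need extending for abbreviated counts such as `1.2K`).

```python
# Hypothetical sample in the general layout of the `zpool status` config table.
SAMPLE = """\
NAME          STATE   READ WRITE CKSUM
pool          ONLINE     0     0     0
  raidz3-0    ONLINE     0     0     0
    da0       ONLINE     0     0     0
    da1       ONLINE     0     0     0
  raidz3-1    ONLINE     0     0    12
    da11      ONLINE     0     0     0
    da12      ONLINE     0     0     0
"""

def checksum_by_level(config_text):
    """Return checksum counts grouped into top-level vdevs and leaf disks,
    using indentation depth to tell the levels apart."""
    rows = []
    for raw in config_text.splitlines():
        line = raw.lstrip("\t")  # real output is usually prefixed with a tab
        fields = line.split()
        if len(fields) != 5 or fields[0] == "NAME":
            continue  # skip the header and anything that is not a device row
        indent = len(line) - len(line.lstrip(" "))
        rows.append((indent, fields[0], int(fields[4])))

    result = {"vdevs": {}, "disks": {}}
    if not rows:
        return result
    base = min(indent for indent, _, _ in rows)  # indentation of the pool row
    for indent, name, cksum in rows:
        depth = (indent - base) // 2
        if depth == 1:
            result["vdevs"][name] = cksum
        elif depth >= 2:
            result["disks"][name] = cksum
    return result

counts = checksum_by_level(SAMPLE)
# vdevs with errors while every disk reports zero: the pattern described above
vdevs_with_errors = [name for name, n in counts["vdevs"].items() if n > 0]
all_disks_clean = all(n == 0 for n in counts["disks"].values())
print(vdevs_with_errors, all_disks_clean)
```

Run against saved status output before and after each `zpool clear`, something like this would show whether the count always reappears on the same vdev.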

The "Errors: 706" count goes down significantly once a scrub completes, but then goes back up. I am not exactly sure why, but I speculate that it is due to errors in the metadata somewhere.

After doing some research, I found this in Oracle's documentation: "If non-zero errors are reported for a top-level virtual device, portions of your data might have become inaccessible."

So we know that there is corrupt metadata somewhere on the pool. I assume it has something to do with the pool/.system snapshot task. The thing is, we know about it, and we want to tell the system that we just don't care about it. We have even cleared out all of the snapshots on the system, deleted the .system folder and rebooted, reinstalled the OS, and run multiple scrubs. We know the disks are solid, as we have run countless SMART tests, and the likelihood of 6-8 SAS drives being bad right out of the box is ridiculous.

The question I have is how to get this error out of the system. A scrub won't fix it, and I can't pin down where the file is to move it or delete it. Does anyone know any tricks? We have been troubleshooting this for the past 2 weeks to no avail. Any help is greatly appreciated. We do have onsite backups on LTO, but there is A LOT of data to restore and the backups do not contain recent changes or new renderings, so we are trying to avoid that unless absolutely necessary.

As is, the system is actually performing great, the same as before all of this happened. It is just a nuisance to have the same error reported and to have to constantly clear the zpool status so the pool doesn't go degraded. So again, if there is a way to completely clear this error out, since it is not critical to our needs, and get back to the status quo, that would be some magic I would greatly appreciate.

Thank you all.
