Alan Latteri (Dabbler, joined Sep 25, 2015, 16 messages)

Hello,

I am getting at most 240 MB/s reads over NFS on 10GbE. All the iperf tests come in at almost line rate. To rule out a disk bottleneck, I created a single-disk stripe on a 400 GB Intel 750 NVMe, which does around 2000 MB/s.

Server: Dell T630, 128 GB RAM, 2 x quad-core 3.0 GHz Xeon, PERC 730 in HBA mode, Intel X520 network card on a direct-attach cable to a Dell X4012 switch. The client uses the same network card, optically connected to the switch. iperf between the two runs at line rate.

I've tried with and without autotune, the latest 9.3 stable and nightly builds, with and without compression, NFS v3 and v4, standard and 1M record sizes, more NFS server threads, and MTU 9000. I've tried adding -o rsize=1048576 to the NFS mount, but it always sticks at rsize=131072. The speed never goes above 240 MB/s.
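The rsize that "always sticks at 131072" looks like a negotiation result rather than an ignored option: as I read the Linux nfs(5) man page, if the requested rsize is larger than what either the client or the server supports, they settle on the largest value both support, and values above 1048576 are replaced with 1048576. Below is a simplified model of that rule, assuming (hypothetically, inferred only from the 131072 in the mount output, not confirmed) that the FreeNAS server caps reads at 128 KiB. It is a sketch of the negotiation logic, not the kernel's actual code.

```python
# Simplified model of Linux NFS rsize negotiation (an assumption, not kernel code).
CLIENT_MAX_RSIZE = 1048576   # nfs(5): larger requested values are replaced with 1 MiB
ASSUMED_SERVER_MAX = 131072  # hypothetical server limit, inferred from the mount output


def effective_rsize(requested: int, server_max: int,
                    client_max: int = CLIENT_MAX_RSIZE) -> int:
    """Return the rsize a mount would end up with under this simplified model."""
    if requested < 1024:
        requested = 4096                # nfs(5): values below 1024 become 4096
    requested = min(requested, client_max)
    return min(requested, server_max)   # the lower server limit wins


print(effective_rsize(1048576, ASSUMED_SERVER_MAX))  # 131072
print(effective_rsize(1048576, 1048576))             # 1048576
```

If this model holds, changing the client's mount options alone would not raise rsize; the limit would have to be lifted on the server side.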

With the same setup and the same hardware running CentOS 7, I get line speed, around 950 MB/s.
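Putting the numbers from this post side by side makes the gap concrete. This sketch uses decimal units (1 MB = 10^6 bytes) and ignores Ethernet, TCP and NFS overhead, so the real usable ceiling is somewhat below the 1250 MB/s raw figure it computes.

```python
# Rough arithmetic on the figures quoted in this post (decimal units).
LINK_GBIT_PER_S = 10     # 10GbE nominal line rate
NVME_MB_PER_S = 2000     # Intel 750 throughput quoted above
FREENAS_MB_PER_S = 240   # observed NFS read ceiling on FreeNAS
CENTOS_MB_PER_S = 950    # observed on CentOS 7, same hardware


def link_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link rate in Gbit/s to MB/s, ignoring all protocol overhead."""
    return gbit_per_s * 1000 / 8


def utilisation(observed: float, ceiling: float) -> float:
    """Fraction of the ceiling actually achieved."""
    return observed / ceiling


link = link_mb_per_s(LINK_GBIT_PER_S)
print(f"raw 10GbE ceiling: {link:.0f} MB/s")                          # 1250 MB/s
print(f"disk faster than link: {NVME_MB_PER_S > link}")               # True
print(f"FreeNAS: {utilisation(FREENAS_MB_PER_S, link):.0%} of link")  # 19%
print(f"CentOS 7: {utilisation(CENTOS_MB_PER_S, link):.0%} of link")  # 76%
```

Since the NVMe can outrun the link and CentOS reaches roughly three quarters of the raw rate on the same hardware, the missing throughput on FreeNAS is unlikely to be the disk or the network path.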

Something is up with FreeNAS. Please help; I've spent the past week trying to get this working smoothly in FreeNAS.

Client side mount:

192.168.1.12:/mnt/beast/test/ /mnt/test nfs4 rw,relatime,vers=4,rsize=131072,wsize=131072,namlen=255,hard,proto=tcp,port=0,timeo=600,retrans=2,sec=sys,clientaddr=192.168.1.100,minorversion=0,local_lock=none,addr=192.168.1.12 0 0
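To check which rsize/wsize a mount actually negotiated, the line above (in the /proc/mounts format: source, target, type, options, dump, pass) can be split into fields and its comma-separated options turned into a dictionary. This is a minimal sketch; the helper names `parse_mount_line` and `nfs_mounts` are hypothetical, and it assumes paths contain no raw spaces (/proc/mounts escapes them as \040).

```python
# Parse a /proc/mounts-style line and pull out the negotiated NFS options.
MOUNT_LINE = (
    "192.168.1.12:/mnt/beast/test/ /mnt/test nfs4 "
    "rw,relatime,vers=4,rsize=131072,wsize=131072,namlen=255,hard,"
    "proto=tcp,port=0,timeo=600,retrans=2,sec=sys,"
    "clientaddr=192.168.1.100,minorversion=0,local_lock=none,"
    "addr=192.168.1.12 0 0"
)


def parse_mount_line(line: str) -> dict:
    """Split one mount entry into its fields; flag options map to True."""
    source, target, fstype, opts, _dump, _pass = line.split()
    options = {}
    for item in opts.split(","):
        key, sep, value = item.partition("=")
        options[key] = value if sep else True
    return {"source": source, "target": target,
            "fstype": fstype, "options": options}


def nfs_mounts(path: str = "/proc/mounts") -> list:
    """Return parsed entries for every NFS mount listed in the given file."""
    with open(path) as fh:
        return [parse_mount_line(l) for l in fh if l.split()[2].startswith("nfs")]


info = parse_mount_line(MOUNT_LINE)
print(info["fstype"], info["options"]["rsize"], info["options"]["wsize"])
# nfs4 131072 131072
```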

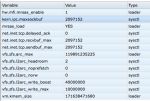

Attached are the tunables.
