Sorry to hear you are having trouble! Interesting that adding an SLOG seems to fix the issue. My interpretation is that you are getting more sync writes than the RAIDZ2 pool can handle without an SLOG.

It would be interesting to monitor the sync write volume and see what you are actually getting. Depending on the client and workload, you could have a high sustained rate of sync writes or a more bursty profile (which is what I would expect for an iSCSI VMware datastore, though even that might be "constant" at a lighter load).
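If you do collect per-second sync write samples from whatever monitoring you already have, one quick way to tell a sustained load from a bursty one is to compare the peak to the average. This is a minimal sketch. The `classify_sync_profile` function, the 3x threshold, and the sample numbers are all hypothetical, not measurements from your system.

```python
from statistics import mean


def classify_sync_profile(samples_mb_s, burst_ratio=3.0):
    """Label per-second sync-write samples (MB/s) as sustained or bursty.

    burst_ratio is a hypothetical threshold: if the peak exceeds the
    average by more than this factor, treat the profile as bursty.
    """
    if not samples_mb_s:
        raise ValueError("need at least one sample")
    avg = mean(samples_mb_s)
    if avg == 0:
        return "idle"
    peak = max(samples_mb_s)
    return "bursty" if peak / avg > burst_ratio else "sustained"


# Hypothetical samples, one per second.
steady = [40, 42, 38, 41, 39, 40]
spiky = [2, 1, 3, 150, 2, 1]
print(classify_sync_profile(steady))  # sustained
print(classify_sync_profile(spiky))   # bursty
```

A peak/average ratio is crude, but it is enough to tell whether sizing for the average or for the spikes matters more.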

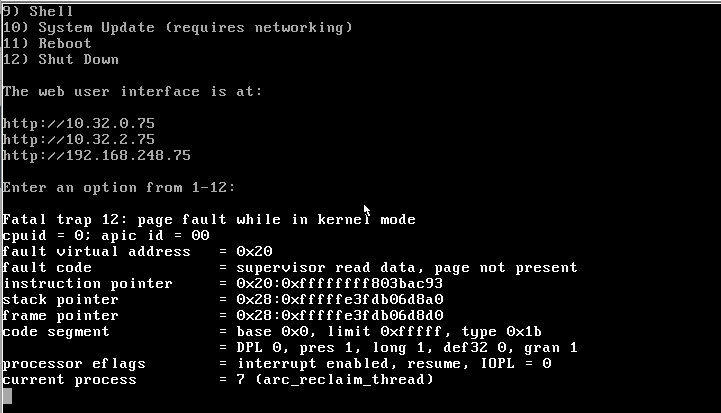

It sounds like you are simply running out of memory. I suspect the SLOG helps because ZFS can flush to the ZIL fast enough that it doesn't need extra memory for buffering, whereas on the HDD pool, which is slower, more data has to be buffered in RAM. At some point it tries to grab more memory, can't get it, and the system crashes.
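To make the buffering argument concrete, here is a toy model. It is not how ZFS actually accounts for dirty data or the ZIL; it only assumes that whatever arrives faster than the log device can absorb it has to wait in RAM. The function name and the MB/s figures are hypothetical, chosen to contrast a slow HDD-backed log with a fast SLOG.

```python
def peak_backlog_mb(ingest_mb_s, drain_mb_s):
    """Toy model: each second, incoming sync writes are added to a RAM
    backlog and the log device drains up to drain_mb_s of it.
    Returns the largest backlog (MB) seen over the samples."""
    backlog = 0.0
    peak = 0.0
    for incoming in ingest_mb_s:
        backlog = max(0.0, backlog + incoming - drain_mb_s)
        peak = max(peak, backlog)
    return peak


# Hypothetical burst of sync writes, MB/s per second.
burst = [50, 400, 400, 400, 50, 50]
print(peak_backlog_mb(burst, drain_mb_s=150))   # 750.0 (slow, HDD-like log)
print(peak_backlog_mb(burst, drain_mb_s=1000))  # 0.0 (fast SLOG keeps up)
```

The same burst that a fast log absorbs without any backlog leaves hundreds of MB queued in memory when the drain rate is low.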

As far as I know, the arc_reclaim thread (which I believe is responsible for shrinking the ARC back down to its target size when it goes over) nominally runs about once per second. So if, within one particular second, a large volume of sync writes comes in while the ARC is already at or above its maximum, that triggers requests for more memory, leading to the crash.
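The timing argument can be sketched as a toy simulation, assuming reclaim simply trims the ARC back to its target once per second and nothing else limits growth in between. The 100 ms tick, the `peak_overshoot_gb` function, and the burst numbers are hypothetical; real ARC behaviour is more involved.

```python
def peak_overshoot_gb(arc_target_gb, growth_gb_per_tick, ticks_per_reclaim=10):
    """Toy model: the ARC grows by growth_gb_per_tick[i] on each tick, and a
    reclaim pass every ticks_per_reclaim ticks (about 1 s at 100 ms per tick)
    trims it back to arc_target_gb. Returns the largest overshoot seen (GB)."""
    arc = arc_target_gb
    worst = 0.0
    for i, growth in enumerate(growth_gb_per_tick, start=1):
        arc += growth
        worst = max(worst, arc - arc_target_gb)
        if i % ticks_per_reclaim == 0:
            # Reclaim pass: shrink back to target if over it.
            arc = min(arc, arc_target_gb)
    return worst


# Hypothetical: 20 ticks of 100 ms; a 2.5 GB burst lands inside one second.
burst = [0.0] * 10 + [0.5] * 5 + [0.0] * 5
print(peak_overshoot_gb(200.0, burst))  # 2.5
```

Because reclaim only acts at the one-second boundary, everything that arrives inside that window has to come from memory beyond the ARC target.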

I wonder whether simply lowering the ARC max size by several GB would help. It seems like you have plenty of memory at 256 GB; how much of that is ARC when it's maxed out? With a lower cap, you should have memory left over for the ARC to expand into during that one second as needed.
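For the arithmetic, a minimal sketch: compute a cap in bytes that leaves a chosen reserve outside the ARC, then check whether what remains can absorb a one-second burst. The 32 GiB reserve, 16 GiB of other usage, and 8 GiB burst are placeholder assumptions, not numbers from this thread. As far as I know, the cap itself is the `zfs_arc_max` module parameter on Linux (`/sys/module/zfs/parameters/zfs_arc_max`) and the `vfs.zfs.arc_max` tunable on FreeBSD-based systems, both in bytes; check your platform's docs for how to set it persistently.

```python
GIB = 1024 ** 3


def arc_max_bytes(total_ram_gib, reserve_gib):
    """Return an ARC cap in bytes that leaves reserve_gib of RAM outside the ARC."""
    if reserve_gib >= total_ram_gib:
        raise ValueError("reserve must be smaller than total RAM")
    return (total_ram_gib - reserve_gib) * GIB


def has_headroom(total_ram_gib, arc_max_gib, other_use_gib, peak_burst_gib):
    """True if RAM not claimed by the ARC cap or other processes
    can absorb a one-second burst of the given size."""
    free_gib = total_ram_gib - arc_max_gib - other_use_gib
    return free_gib >= peak_burst_gib


# Placeholder numbers for a 256 GiB box.
print(arc_max_bytes(256, 32))          # 240518168576
print(has_headroom(256, 224, 16, 8))   # True
print(has_headroom(256, 248, 16, 8))   # False
```

The point is only that the reserve has to cover other memory users plus the worst burst you expect inside one reclaim interval.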

These are just initial thoughts after reading the thread. I'm by no means an expert here, but it might be worth a shot if you are experimenting.