Amsoil_Jim
Contributor · Joined Feb 22, 2016 · 175 messages

Running 11.2-BETA3

This morning a cron job ran a scrub of my main pool. The scrub usually takes about 2 hours, and at 6 AM I normally get an email with the scrub and SMART test results and a config file.

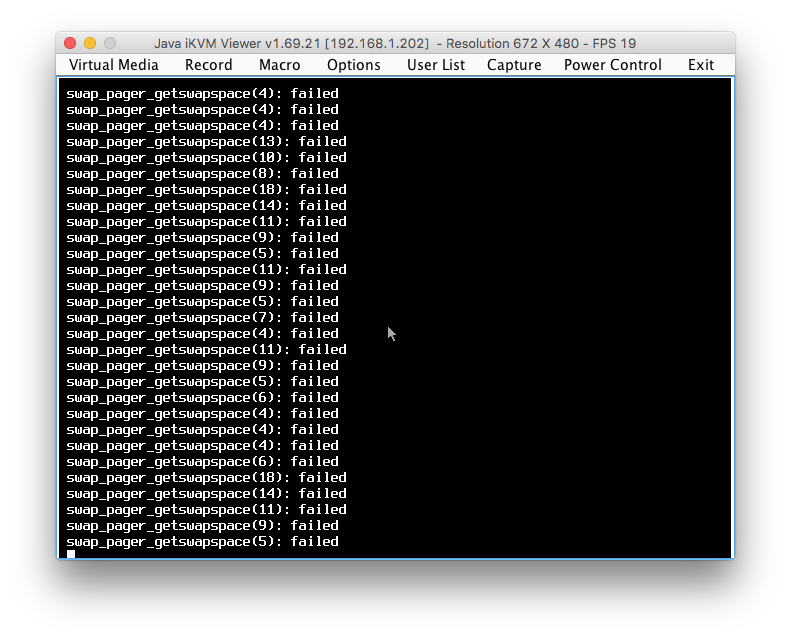

Well, I didn't get that email. I tried to navigate to the GUI, but it was unresponsive, and the jails were unresponsive too. So I went to the IPMI and pulled up the iKVM viewer, where I saw this error posting over and over.

I don't understand why the system is using swap at all when it has 192GB of RAM. I restarted the system, and it is operating again.

Is this just an issue with the Beta?
