
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums.


Swap with 9.10

- Thread starter hugovsky

- Status

- Not open for further replies.

Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419

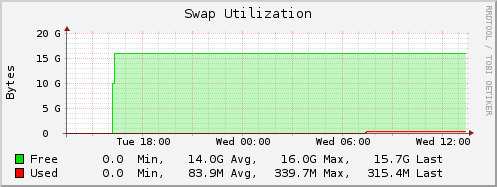

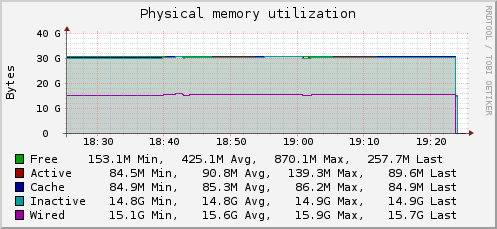

This still seems to be an issue. I've just begun replicating/rsyncing data to a new 24TB pool (of 32TB physical) on FreeNAS 9.10.1 with 32GB of RAM. Actual usage will be about 5-10TB.

A day later, it's sitting on 315MB of idle swap (I could tell something was wrong because it took a long time to log in to the GUI).

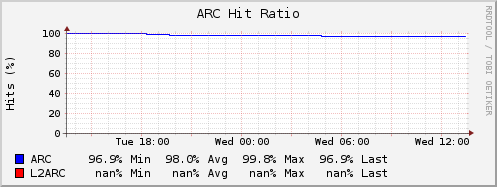

Maybe I need more RAM, but with a 97% ARC hit ratio, I don't think so.

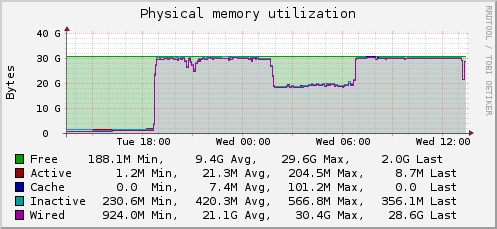

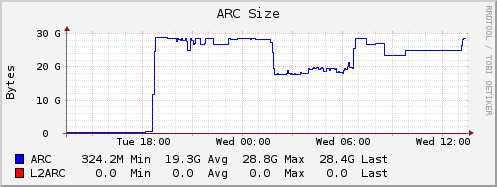

You can see that 330MB or so was swapped out at about 7AM, when the ARC decided to grow, causing 30.4GB to become Wired. As far as I can tell, nothing is scheduled for 7AM. The replication finished at 1AM, and the rsync to an iSCSI volume is ongoing.

Free memory did get down to 188MB.

Any idea of any tunables to fiddle with to avoid this behaviour?


Stux


I've written a significantly improved version of my original proof-of-concept script.

https://forums.freenas.org/index.ph...ny-used-swap-to-prevent-kernel-crashes.46206/

This version does nothing if nothing needs to be done; it uses swapinfo, so it's accurate; and it's suitable for running from cron.
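The script itself isn't reproduced in this thread. As a rough illustration of a "do nothing unless needed" check, here is a minimal C sketch that reads the Used column from one `swapinfo -k` device line and only recommends flushing when something is actually in swap and free RAM can absorb it with a margin. The function names, the sample line, and the free-RAM criterion are all assumptions, not taken from the script.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/*
 * Hypothetical helper: return the "Used" column (KiB) from one device
 * line of `swapinfo -k` output, e.g.
 *   /dev/ada0p1.eli   2097152   315392   1781760   15%
 * Returns -1 for lines that don't parse (such as the header).
 */
static long long swapinfo_used_kb(const char *line)
{
    char dev[256];
    long long blocks, used, avail;
    assert(line != NULL);
    if (sscanf(line, "%255s %lld %lld %lld", dev, &blocks, &used, &avail) != 4)
        return -1;
    return used;
}

/*
 * Assumed policy, not necessarily the real script's: only cycle swap
 * off/on when something is actually in swap AND free RAM can absorb
 * it with room to spare.
 */
static bool should_flush_swap(long long used_kb, long long free_ram_kb,
                              long long margin_kb)
{
    if (used_kb <= 0)
        return false;  /* nothing in swap: do nothing (the usual cron case) */
    return free_ram_kb > used_kb + margin_kb;
}
```

Built this way, a cron job is a no-op on most runs. The "Total" line that swapinfo prints when there are several swap devices has the same column layout, so the same parser would read it.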


Stux


I've been doing some digging.


With the script I mentioned above, I see silly amounts of swap every now and again... a few hundred bytes.

Anyway, I was looking through sysctl parameters... and ended up googling one... and found...

This thread:

https://lists.freebsd.org/pipermail/freebsd-stable/2015-July/082741.html

The crux of the thread is that a very heavily loaded system is running out of the ability to page because the ARC is fighting with the VM system.

This leads to this bug report:

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=187594

The bug is present in 10.0, and the fix hasn't been merged into 10.1 either. The report also includes the patch to fix it.

ZFS can be convinced to engage in pathological behavior due to a bad low-memory test in arc.c

The offending file is at /usr/src/sys/cddl/contrib/opensolaris/uts/common/fs/zfs/arc.c; it allegedly checks for 25% free memory, and if it is less asks for the cache to shrink.

(snippet from arc.c around line 2494 of arc.c in 10-STABLE; path /usr/src/sys/cddl/contrib/opensolaris/uts/common/fs/zfs)

```c
#else /* !sun */
    if (kmem_used() > (kmem_size() * 3) / 4)
        return (1);
#endif /* sun */
```

Unfortunately these two functions do not return what the authors thought they did. It's clear what they're trying to do from the Solaris-specific code up above this test.

The result is that the cache only shrinks when vm_paging_needed() tests true, but by that time the system is in serious memory trouble and by triggering only there it actually drives the system further into paging, because the pager will not recall pages from the swap until they are next executed. This leads the ARC to try to fill in all the available RAM even though pages have been pushed off onto swap. Not good.

The bolded part seems to be the issue we are seeing.

How-To-Repeat: Set up a cache-heavy workload on large (~terabyte sized or bigger) ZFS filesystems and note that free RAM drops to the point that starvation occurs, while "wired" memory pins at the maximum ARC cache size, even though you have other demands for RAM that should cause the ARC memory congestion control algorithm to evict some of the cache as demand rises. It does not.

The How-To-Repeat seems to describe exactly the issue: a cache-heavy workload on a TB+ sized pool. Free RAM drops while the ARC stays pinned at max (i.e. 30GB in my case), and other stuff gets paged out... surely the ARC should be shrinking if there is paging... and it's not.

The patch blames this line in the ZFS arc.c source:

```c
if (kmem_used() > (kmem_size() * 3) / 4)
    return (1);
```

It says this is trying to keep 25% of memory free, but because the functions don't do what the author of the code thought they did, it doesn't actually work.

The patch implements a tunable, vfs.zfs.arc_freepage_percent_target, which defaults to 25% as the original ZFS authors intended, but actually implements the tunable in what seems to be a very complete way.
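To make the intended test concrete: `used > (size * 3) / 4` is the same as "reclaim when less than 25% is free". The C sketch below, which assumes plain page counts as inputs instead of kmem_used()/kmem_size() and uses a hypothetical function name, shows that arithmetic with the percentage as a parameter in the spirit of vfs.zfs.arc_freepage_percent_target. It is not the patch's code, and it deliberately sidesteps the real bug, which is what those two functions actually return on FreeBSD.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical sketch of the *intended* arc.c test, using explicit page
 * counts instead of kmem_used()/kmem_size(). Returns 1 ("ask the ARC to
 * shrink") when less than pct_free_target percent of memory is free.
 * With pct_free_target == 25 this matches "used > (size * 3) / 4".
 */
static int arc_reclaim_needed_sketch(uint64_t used_pages, uint64_t total_pages,
                                     unsigned pct_free_target)
{
    assert(pct_free_target <= 100);
    /* Multiply before dividing so small totals don't truncate to zero. */
    uint64_t used_limit = total_pages * (100 - pct_free_target) / 100;
    return used_pages > used_limit ? 1 : 0;
}
```

For 1000 total pages and a 25% target, reclaim is requested once more than 750 pages are in use.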

So. Does anyone want to try to work out how to test the patch ;)

Stux


The FreeBSD guys aren't necessarily convinced that that is the right solution, but the discussion is heading towards it.


Interestingly

In fact, I think that it was this commit http://svnweb.freebsd.org/changeset/base/254304 that broke a balance between the page cache and ZFS ARC.

This is a revision from 3 years ago that changed how the VM pager works out its targets.

Follow-up:

21 days at this point of uninterrupted uptime, inact pages are stable as is the free list, wired and free are appropriate and varies with load as expected, ZERO swapping and performance is and has remained excellent, all on a very-heavily used fairly-beefy (~24GB RAM, dual Xeon CPUs) production system under 10-STABLE.

Been running this on stable/8 for a week now on 3 separate machines. All appears stable and under heavy load, we can certainly see the new reclaim firing early and appropriately when-needed (no longer do we have programs getting swapped out).

Interestingly, in our testing we've found that we can force the old reclaim (code state prior to applying Karl's patch) to fire by sapping the few remaining pages from unallocated memory. I do this by exploiting a little-known bug in the Bourne shell to leak memory (command below).

```sh
sh -c 'f(){ while :;do local b;done;};f'
```

Watching "top" in the un-patched state, we can see Wired memory grow from ARC usage but not drop. I then run the above command and "top" shows an "sh" process with a fast-growing "SIZE", quickly eating up about 100MB per second. When "top" shows the Free memory drop to mere KB (single pages), we see the original (again, unpatched) reclaim algorithm fire and the Wired memory finally starts to drop.

After applying this patch, we no longer have to play the game of "eat all my remaining memory to force the original reclaim event to free up pages", but rather the ARC waxes and wanes with normal application usage.

However, I must say that on stable/8 the problem of applications going to sleep is not nearly as bad as I have experienced it in 9 or 10.

We are happy to report that the patch seems to be a win for stable/8 as well because in our case, we do like to have a bit of free memory and the old reclaim was not providing that. It's nice to not have to resort to tricks to get the ARC to pare down.

I have now been running the latest delta as posted 26 March; it is coming up on two months now, has been stable here and I've seen several positive reports and no negative ones on impact for others. Performance continues to be "as expected."

Is there an expectation on this being merged forward and/or MFC'd?

Having run it for a few months on a number of boxes now, my general impression is that it seems like it goes a little _too_ far (with default options anyway; I haven't tried any tuning) toward making the ARC give up its lunch money to anybody who looks threateningly at it. It feels like it should be a bit more aggressive, and historically was and did fine.

However, it's still _much_ nicer than the unpatched case, where the rest of the system starves and hides out in the swap space. So from here, while perhaps imperfect and in need of some tuning work, it's still a significant improvement on the prior state, so landing it sounds just fine to me.

Which leads to:

After further discussion we'd like to ask users to test setting vfs.zfs.arc_free_target = (vm.stats.vm.v_free_min / 10) * 11

```
sysctl vfs.zfs.arc_free_target vm.stats.vm.v_free_min
vfs.zfs.arc_free_target: 56375
vm.stats.vm.v_free_min: 51254
```

Which is that.... I notice that there's a rounding error here...
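The two numbers in that sysctl output can be checked by hand. With C integer division, (51254 / 10) * 11 is 5125 * 11 = 56375, exactly the reported vfs.zfs.arc_free_target, while multiplying before dividing gives 56379. Whether that truncation is the rounding error meant here is an assumption. A minimal sketch of both orderings (function names hypothetical):

```c
#include <assert.h>
#include <stdint.h>

/* The formula as written: divide first, then multiply (truncates). */
static uint64_t free_target_div_first(uint64_t v_free_min)
{
    return (v_free_min / 10) * 11;
}

/* Same ~110% target, multiplying first so the remainder isn't lost. */
static uint64_t free_target_mul_first(uint64_t v_free_min)
{
    assert(v_free_min <= UINT64_MAX / 11);  /* avoid overflow */
    return (v_free_min * 11) / 10;
}
```

The two orderings differ by at most 9 pages (the remainder of v_free_min / 10), so the truncation on its own is small next to the question of where the target sits relative to the VM system's thresholds.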

That will result in the VM system going DEEPLY into contention before ARC is freed up; on my production system here it will cut the free page count before ARC is freed by 2/3rds!

IMHO this will result in forced paging behavior and stalls.

Which is what I'm seeing... I think.

The issue that led to me looking at this in the first place is that holding ARC until the pagedaemon has started to consider swapping is pretty easy to argue as "always wrong", and getting close enough to the line that an image activation or RAM request from an application shoves you over the line results in paging activity to the swap that should instead be an eviction of space held by the ARC. As for an argument that this will result in some oscillation of the ARC space that's correct but it's exactly what *should* happen; when memory pressure is low it's perfectly fine to use all the free space for disk caching, but when it's not, no matter how transiently that may be the case, you *never, ever* want to page in an attempt to hold ARC in RAM as you're now trading one prospective physical I/O for two guaranteed ones!

It appears that the latest change puts that back in play, and that's exactly what the original patch was intended to, and did, stop.


Stux


As such perhaps those looking at this issue might contemplate using Samba with a Windows machine mounting the ZFS volume over a high-bandwidth (e.g. Gigabit or better) network connection as a test case. It sure works out well for me in that regard. A directory copy via drag-n-drop of some very large files and directories during a "cd /usr/src/release;make clean;make memstick" on a moderately-sized RAM machine may be sufficient to cause trouble. I can do so pretty much at-will on my production machine as noted above since that system is pretty busy, provided I allow vm.v_free_target to be invaded by the ARC.

As soon as I don't allow that to happen the stalls disappear.

The base behavior during these "pauses" during writes (if you have multiple pools and the one root is on is not affected -- otherwise you're screwed as you can't activate any image until it clears) is that one or more of the I/O channels associated with a pool has fairly heavy I/O activity on it but the others (that should, given the pool's organization) do not. Since a write cannot return until all the pool devices that must be written to have their I/O completed the observed behavior (I/O "hangs" without any errors being reported) matches what you see with iostat. The triggering event appears to be that paging (even in very small volume!) is initiated due to an attempt to hold ARC cache when the system is under material VM pressure, defined as allowing the ARC to remain sacrosanct beyond the invasion of vm.v_free_target. It does not take much paging during these heavy write I/O conditions to cause it either; I have seen it with only a few hundred blocks on the swap, and the swap is NOT going to ZFS but rather is to a dedicated partition on the boot drive -- so the issue is quite-clearly not contention within ZFS between block I/O and swap activity.

Yep, multi-TB shares... gigabit SMB... check, check.

Silly amounts of swap... check.

People have been going swimming in this regard since at least FreeBSD-8 with ZFS filesystems and the usual refrain when it happens to someone is that their hardware is broken or they need more RAM. Neither of those assertions has proved up and a lot of unnecessary RAM has likely been bought and disk drives condemned without cause chasing one's tail in this regard, never mind the indictments leveled against various and sundry high-performance disk adapters that appear to have been unfounded as well. The difference in system performance between 99.2% cache hit rates and 99.7% with a doubling of system memory is close to nil but the dollar impact is not especially when a one-line code change could have avoided the expense.

Stux


Comment 128

After much additional back and forth with Steve I believe I have identified the reason for #2 in my reported results and have developed a patch to address it.

What we are doing right now is essentially violence served upon the ZFS code in an attempt to force it to pressure the VM system to reclaim memory that can then be used for ARC.

While setting arc_free_target = vm_free_target helps this situation, and in fact in my testing doubling both arc_free_target and vm_free_target helped *even more*, it did not result in a "complete" fix.

I now understand why.

In short the dirty memory that ZFS internally allocates for writes can be enough to drive the system into aggressive paging, even when it is trying to aggressively reclaim ARC. The problem occurs when large I/O requirements "stack up" before the ARC can evict which forces free memory below the paging threshold. This in turn causes the ZFS I/O system to block on paging (that is, it is blocked waiting for the VM system to give it memory and the VM system must page to do so), which is a pathological condition that should never exist. I can re-create this behavior "at will".

At present "as delivered" (with or without Steve's patch) the code will allow up to 10% of system RAM to be used for dirty I/Os or 4GB, whichever is less. The problem is that on a ~12GB system that is 1.2GB of RAM, and that exceeds the difference between free_target and the paging threshold (by a *lot*!) A similar problem exists for other configurations; at 16GB the difference between free_min and free_target (by default) is ~250MB but 10% of the installed memory is 1.6GB, or roughly SIX TIMES the margin available. If you allow the ARC to invade beyond free_target then the problem gets worse for obvious reasons. This is why setting it to free_target helps, and in fact why when I *doubled* free_target it helped even more, but it did not completely eliminate the pathology, and I was still able to force paging to occur by simply loading up on big write I/Os.
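The sizing argument in that paragraph is plain arithmetic. A minimal C sketch, assuming the default ceiling is exactly min(10% of RAM, 4GB) as stated in the comment (FreeBSD exposes related knobs as vfs.zfs.dirty_data_max_percent and vfs.zfs.dirty_data_max_max, but the kernel's exact computation may differ), and taking the ~250MB margin for a 16GB machine from the text:

```c
#include <assert.h>
#include <stdint.h>

#define MIB (UINT64_C(1) << 20)
#define GIB (UINT64_C(1) << 30)

/*
 * Assumed default dirty-data ceiling, as described in the comment:
 * 10% of physical memory or 4GB, whichever is less.
 */
static uint64_t default_dirty_max(uint64_t physmem_bytes)
{
    uint64_t pct = physmem_bytes / 10;
    uint64_t cap = 4 * GIB;
    return pct < cap ? pct : cap;
}

/* How many times over the dirty ceiling exceeds a free-memory margin. */
static double dirty_to_margin_ratio(uint64_t physmem_bytes, uint64_t margin_bytes)
{
    assert(margin_bytes > 0);
    return (double)default_dirty_max(physmem_bytes) / (double)margin_bytes;
}
```

For 16GB against a 250MB margin this gives a ratio of about 6.55, in line with the "roughly SIX TIMES" figure; from 40GB upward the 4GB cap is what applies.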

I propose that I now have a fix instead of a workaround.

Note that for VERY large RAM configurations (~250GB+) the maximum dirty buffer allocation (4GB) is small enough that you will not invade the paging threshold. In addition if you are not write I/O bound in the first place you won't run into the problem since the system never builds the write queue depth and thus you don't get bit by this at all.

But if you throw large write I/Os at the system at a rate that exceeds what your disks can actually perform at there is a decent probability of forcing the VM system into a desperation swap condition which destroys performance and produces the "hangs." *Any* workload that produces large write I/O requests that exceed the disk performance available is potentially subject to this behavior to some degree, and the more large write I/O stress you put on the system the more-likely you are to run into it. Workloads that mix sync I/Os on the same spindles as large async write I/Os are especially bad because the sync I/Os slow total throughput available dramatically. In addition during a resilver, especially on a raidz(x) volume where I/O performance is materially impaired (it is often cut by anywhere between 50-60% during that operation) you're begging for trouble for the same reason.

Steve pointed me at a blog describing some of the changes in the I/O subsystem in openzfs along with how the code handles dirty pages internally and, with some code-perusing the missing piece fell into place.

The solution I have come up with is to add dynamic sizing of the dirty buffer pool to the code that checks for the need to evict ARC. By dynamically limiting the outstanding dirty I/O RAM demand to the margin between the current free memory and the system's paging threshold even ruinously nasty I/O backlogs no longer cause the system to page and you no longer get ZFS I/O threads in a blocking state.
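The policy described there, capping outstanding dirty data at the headroom above the paging threshold, fits in a few lines. This C sketch is a hypothetical illustration of the described idea, not Karl's patch; the function name and the byte-based inputs are assumptions.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical sketch of the described policy (not the actual patch):
 * allow at most the smaller of the static dirty-data ceiling and the
 * headroom between current free memory and the paging threshold.
 * All values are in bytes.
 */
static uint64_t dynamic_dirty_limit(uint64_t static_max, uint64_t free_bytes,
                                    uint64_t paging_threshold)
{
    assert(static_max > 0);
    if (free_bytes <= paging_threshold)
        return 0;  /* at or below the threshold: no headroom left */
    uint64_t headroom = free_bytes - paging_threshold;
    return headroom < static_max ? headroom : static_max;
}
```

Returning 0 at or below the threshold would stall writes outright; a real implementation would presumably need some small floor so I/O can keep making progress while the ARC evicts.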

I can now run on my test system with an intentionally faulted and resilvering raidz2 pool, dramatically cutting the I/O capacity of the system, while hammering the system with I/O demand at well beyond 300% of what it can deliver. It remains stable over more than an hour of that sustained treatment; without this patch the SMB-based copies fail within a couple of minutes and the system becomes unresponsive for upwards of a minute at a time.

The patch is attached above; it applies against Steve's refactor for Stable-10 pulled as of Friday morning.

Please consider testing it if you have an interest in this issue.

Thanks.

Stux


One wonders if this issue is actually the reason 8GB has been declared as the minimum these days.


Comment 142

I can easily produce workloads here that cause either system stalls or (in a couple of cases I can produce on occasion) kernel panics due to outright RAM exhaustion with the parameters set where Steve and, apparently the rest of the core team wants them.

ZFS' code was originally designed to remain well beyond the paging threshold in RAM consumption. If you look at the original ARC code and how it determines sizing strategy this is apparent both in the comments and the code itself. Unfortunately this is a problem on FreeBSD because the VM system is designed to essentially sleep and do nothing until available system memory drops below vm.v_free_target.

So if you use ZFS on FreeBSD, and there was no change made to that strategy, over time inactive and cache pages would crowd out the ARC cache until it dropped to the minimum. This is unacceptable for obvious reasons, so on FreeBSD the code is (ab)used to pressure the VM system by intentionally invading the vm.v_free_target threshold, causing pagedaemon to wake up and clean up the kitchen floor of all the old McDonalds' hamburger wrappers. There is in fact apparently a belief in the core team that the ARC's eviction code should remain asleep until RAM reaches the vm.pageout_wakeup_thresh barrier, effectively using ARC to force the system to dance on the edge of the system's hard paging threshold. This is, in my opinion, gross violence upon the original intent of the ZFS code because causing the system to swap in preference to keeping a (slightly) larger disk cache is IMHO crazy, but this is where my recent work has focused at their request. I've acceded to it for testing purposes (although I'll never run *my* systems configured there, and the patches I've got here don't have it set there) because if I can get the system to be stable with it set at that value it will run (much) better with what I believe is a more-sane choice for that parameter (vfs.zfs.arc_free_target), and that I (and everyone else) can tune easily.

UMA is much faster but it gets its speed in part by "cheating." That is, it doesn't actually return memory to the system when it's told to free memory right away; the overhead is deferred and taken "at convenient (or necessary) times" instead of for each allocation and release. It also has the characteristic of grabbing quite-large chunks of RAM at impressive rates of speed for its internal use within its internal bucketing system. The lazy release, while good for performance, can get you in a lot of trouble if you're dancing near the limits of RAM exhaustion and before that release happens an allocation is requested that cannot be filled with the existing free buckets in the UMA and thus a further actual RAM allocation is required.

As written, ZFS currently calls the UMA reap routines in the ARC maintenance thread. This, in a heavy I/O load environment with memory near exhaustion (on purpose, due to the above), leads to pathology. If the system is forced to swap to fill a memory request for ZFS, it is possible for the zio_ thread in question to block on that request until the pagedaemon can clear enough room for the allocation to be granted.

I have instrumented the ZFS allocation code sufficiently with dtrace to show the following. With heavy read/write I/O loads, especially on a degraded pool that is resilvering (thus cutting the maximum I/O performance materially), the ARC code *should* force eviction of sufficient cache to prevent materially invading the paging threshold; but with UMA on, the fact that it does not reap until the ARC maintenance thread gets to it means I can produce *mid-triple-digit* free RAM page counts (that is, about two *megabytes*!) immediately following allocation requests, and wicked, nasty stall conditions, some lasting for several minutes, where anything requiring image activation (or recall from the swap) is non-responsive as all (or virtually all) of the zio_ threads are blocked waiting for the pagedaemon. This is on a 12GB machine with a normal paging threshold of 21,000 pages. The interesting thing about the pathology is that it presents as what appears to be a "resonance" sort of behavior; that is, it creates an oscillating condition in free memory that gets worse against a constant load profile until the system starts refusing service (the "stalls"), and if I continue to press my I/O demand into that condition I have managed to produce a couple of panics from outright RAM exhaustion.
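For scale, page counts convert to bytes by multiplying by the page size. Assuming the 4KiB base page of amd64 (other platforms may differ), 500 pages is just under 2MiB and the 21,000-page paging threshold is about 82MiB:

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 4KiB base pages, as on amd64; other platforms may differ. */
#define PAGE_BYTES UINT64_C(4096)

/* Convert a page count to mebibytes. */
static double pages_to_mib(uint64_t pages)
{
    assert(pages <= UINT64_MAX / PAGE_BYTES);  /* avoid overflow */
    return (double)(pages * PAGE_BYTES) / (1024.0 * 1024.0);
}
```

So the stalls described occur with only a few percent of the normal threshold's worth of memory still free.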

The original patch I wrote dramatically reduced the risk because it pulled the ZFS ARC cache out of the paging threshold and to the vm_free_target point. It did not completely eliminate it due to the UMA interaction problem, which took a *lot* of effort to instrument sufficiently to prove. Pulling ARC out of the paging threshold *and* limiting dirty buffer memory to the difference between current free RAM and the paging threshold on a dynamic basis completely eliminates the problem (because it prevents that invasion of the threshold from happening in real time, and thus the reap calls from the ARC maintenance thread are sufficient), but that apparently is not considered an "acceptable" fix. Turning off UMA works as well by removing the oscillation dynamic entirely but has a performance hit associated with it.

My current effort is to build a heuristic inside the ARC RAM allocator that will detect the incipient onset of this condition. I am currently able to get to the point where the code "dampens" the oscillation sufficiently to prevent outright RAM exhaustion, but not quickly enough to remain out of danger of a stall. That's not good enough because once the degenerative behavior starts it gets progressively worse for a constant workload. There's no guarantee I'll get where I want to go with this effort but I'm giving it my best shot as I'm aware that UMA is considered a "good thing" in terms of performance.

I'll post further code if/when I get something that survives my torture test with UMA on and doesn't throw dtrace probe data that makes me uncomfortable.

At present I believe I can get there.

I was originally seeing these issues, and now that I'm looking into it, I might be seeing the stalls etc as well, on a small 8GB system that I've been abusing. Smashing it with large linear SMB/AFP (multi hundred GB transfers at a time) and resilvering/scrubbing, offlining drives...

You know, verifying that FreeNAS 9.10 will be up to going into production ;)


- Joined

- Feb 15, 2014

- Messages

- 20,194

It might exacerbate the issue, but I don't see this outright causing gratuitous corruption, as @jgreco and @cyberjock used to see back in the day.

I could imagine this interacting with a race condition of some sort, though...

In any case, 8GB is a sound minimum to allow for a decently-sized ARC, so it shouldn't change.


Stux


It might exacerbate the issue, but I don't see this outright causing gratuitous corruption, as @jgreco and @cyberjock used to see back in the day.

I could imagine this interacting with a race condition of some sort, though...

In any case, 8GB is a sound minimum to allow for a decently-sized ARC, so it shouldn't change.

Yes, it's a good minimum. It would be even better if it were a recommended minimum rather than a "you'll suffer corruption if you violate it" minimum.

If true memory exhaustion occurs it causes a kernel panic while ZFS is blocked trying to do critical metadata sort of stuff... and couldn't that cause corruption?

Stux


Comment 143

I believe I have a smoking gun on the UMA memory allocator misbehavior.

```
zio_buf_65536: 65536, 0, 656, 40554, 25254813, 0, 0, 41MB, 2.475GB, 2.515GB
```

That's a particularly pathological case that is easily reproduced. All that is required is, on a degraded pool (to slow its maximum I/O rate down), to do a read of all files on it to /dev/null (e.g. "find . -type f -exec cat {} >/dev/null \;") and at the same time perform a copy of files within the pool (e.g. "pax -r -w -v somedirectory/* anotherdirectory/").

Here's the problem -- at the moment I took that snapshot there were 656 of those buffers currently in use, but over 40,000, or 2.475 *gigabytes*, of RAM "free" in that segment of the pool. In other words *rather than reassign one of the existing 40,000+ "free" UMA buffers the system went and grabbed another one, and it did it over 40,000 times!*
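The arithmetic behind that snapshot can be reproduced from the line itself. Assuming FreeBSD's usual `vmstat -z` column order (ITEM, SIZE, LIMIT, USED, FREE, REQ, FAIL, SLEEP), with the three trailing size columns apparently added by whatever produced the snapshot, a small C parser gives 656 * 65536 bytes = 41MiB in use and 40554 * 65536 bytes, about 2.475GiB, sitting "free". The struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical container for one zone line of `vmstat -z`. */
struct uma_zone_stats {
    char name[64];
    unsigned long long size, limit, used_items, free_items, requests, fail, sleep;
};

/*
 * Parse "name: size, limit, used, free, requests, fail, sleep, ...".
 * Any trailing columns are ignored. Returns 0 on success, -1 otherwise.
 */
static int parse_vmstat_z(const char *line, struct uma_zone_stats *z)
{
    assert(line != NULL && z != NULL);
    int n = sscanf(line, "%63[^:]: %llu, %llu, %llu, %llu, %llu, %llu, %llu",
                   z->name, &z->size, &z->limit, &z->used_items,
                   &z->free_items, &z->requests, &z->fail, &z->sleep);
    return n == 8 ? 0 : -1;
}

/* Memory tied up in items currently handed out, in MiB. */
static double zone_used_mib(const struct uma_zone_stats *z)
{
    return (double)(z->size * z->used_items) / (1024.0 * 1024.0);
}

/* Memory parked in "free" items the zone is holding on to, in GiB. */
static double zone_free_gib(const struct uma_zone_stats *z)
{
    return (double)(z->size * z->free_items) / (1024.0 * 1024.0 * 1024.0);
}
```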

This pathology of handing back new memory instead of re-using the "free" buffers in that pool that the system already has continues until the ZFS low memory warnings trip, then the reap routine is called and magically that 3GB+ of RAM reappears all at once. In the meantime the ARC cache has been evicted on bogus memory pressure.

This is broken, badly -- those free buffers should be reassigned without taking a new allocation.
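The behavior expected of the allocator, reusing parked free buffers before taking new memory, can be shown with a toy free-list cache in C. This is a simplified, hypothetical model for illustration only; UMA's real design (per-CPU buckets, zone limits, reaping from the ARC maintenance thread) is considerably more involved.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/*
 * Toy fixed-size buffer cache (hypothetical, NOT UMA's real per-CPU
 * bucket design): freed buffers are parked on a free list and handed
 * out again before any fresh allocation is made.
 */
struct buf_cache {
    size_t item_size;
    void **free_list;     /* stack of parked, reusable buffers */
    size_t nfree;         /* buffers currently parked */
    size_t cap;           /* max buffers we are willing to park */
    size_t fresh_allocs;  /* times we had to go to malloc() */
};

static int cache_init(struct buf_cache *c, size_t item_size, size_t cap)
{
    assert(item_size > 0 && cap > 0);
    c->item_size = item_size;
    c->free_list = malloc(cap * sizeof(void *));
    c->nfree = 0;
    c->cap = c->free_list ? cap : 0;
    c->fresh_allocs = 0;
    return c->free_list ? 0 : -1;
}

static void *cache_alloc(struct buf_cache *c)
{
    if (c->nfree > 0)
        return c->free_list[--c->nfree];  /* reuse a parked buffer first */
    c->fresh_allocs++;                    /* only now take new memory */
    return malloc(c->item_size);
}

static void cache_free(struct buf_cache *c, void *p)
{
    if (c->nfree < c->cap)
        c->free_list[c->nfree++] = p;     /* lazy release: keep for reuse */
    else
        free(p);                          /* cache full: really release it */
}

/* "Reap": return every parked buffer to the system at once. */
static void cache_reap(struct buf_cache *c)
{
    while (c->nfree > 0)
        free(c->free_list[--c->nfree]);
}

static void cache_destroy(struct buf_cache *c)
{
    cache_reap(c);
    free(c->free_list);
    c->free_list = NULL;
    c->cap = 0;
}
```

In the snapshot above, the zone behaved as if the first branch of cache_alloc never fired: tens of thousands of buffers were parked, yet fresh allocations kept being made until the reap released the memory all at once.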

The impact of the pathology is extraordinary under heavy load as it produces extremely large oscillations in the system RAM state which both ZFS and the VM system have no effective means to deal with.

The correct fix will require finding out why UMA is not returning a free buffer that it has in the cache instead of allocating a new one, but until the UMA code is repaired the approach in comment 140, which includes disabling UMA, appears to be the only sane option.


- Joined

- Feb 15, 2014

- Messages

- 20,194

I think a rollback should be able to revert such damage, since it's no different from your typical kernel panic/power outage scenario, and those don't generally cause corrupted pools unless something else was wrong.

Stux


The correct fix will require finding out why UMA is not returning a free buffer that it has in the cache instead of allocating a new one, but until the UMA code is repaired the approach in comment 140, which includes disabling UMA, appears to be the only sane option.

```
# sysctl vfs.zfs | grep uma
vfs.zfs.zio.use_uma: 1
```

A smoking gun?


Stux


Leaving Karl's research into the ZFS/VM/UMA fight to the death aside, going after vfs.zfs.zio.use_uma leads to some very promising results!


A link to this forum...

https://forums.freenas.org/index.php?threads/freebsd-10-1-released.24811/

FreeBSD 10.1 Released

Here is the stuff this community is probably most interested in:

The vfs.zfs.zio.use_uma sysctl(8) has been re-enabled. On multi-CPU machines with enough RAM, this can easily double zfs(8) performance or reduce CPU usage in half. It was originally disabled due to memory and KVA exhaustion problem reports, which should be resolved due to several changes in the VM subsystem. [r260338]

Karl's research indicates that UMA uses up all the free RAM, but ZFS doesn't shrink the ARC because it knows the RAM is free... just not reclaimed... and so the pager has to scrounge and pages out.


Stux


So, taking into account everything I've learned... I went back and had a look at my 32GB system...

Wired = 15.7GB.

Inactive = 14.9GB

Free = 256MB + a bit.

That's a helluva lot of Inactive.

ARC size is 13GB. Wired is almost a proxy for ARC size.

So, I suspect, Inactive is mostly UMA free pages, which are being held onto... just in case...

I wonder if this would look different with UMA disabled.

