Edit: Jump down to Post #3 and you'll see that `zpool remove poolname log-vdev` cleared up the confusion over the broken LOG vdev.
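Applied to this pool, the command from the edit note would look roughly like the sketch below. It assumes the stale log is addressed by the numeric GUID `15381800400422709162` that the second `zpool status` output further down reports for it (zpool generally accepts a vdev GUID where a device name is expected). The `DRY_RUN` switch and the `remove_stale_log` function name are illustrative additions, not part of the original fix, and re-adding the Optane as a LOG afterwards (normally done from the TrueNAS UI) is not shown.

```shell
#!/usr/bin/env bash
# Sketch: remove the UNAVAIL log vdev by its GUID.
# POOL and LOG_GUID come from the zpool status output in this post.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
POOL="${POOL:-deadpool}"
LOG_GUID="${LOG_GUID:-15381800400422709162}"

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

remove_stale_log() {
  run zpool remove "$POOL" "$LOG_GUID"   # log vdevs can be removed from a live pool
  run zpool status "$POOL"               # check that the logs section is gone
}
```

Calling `remove_stale_log` only previews the commands; `DRY_RUN=0 remove_stale_log` actually runs them. Defaulting to a preview is a deliberate safety choice for commands that change pool layout.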

----------------------

Good reader, long story short, in trying to fix a simple issue I seem to have created a bigger one.

Help me answer this question:

A) Can I fix the problem described below, or,

B) Do I need to back up my pool, destroy it, rebuild it, and restore my data?

Extra credit: Do you think that the UI needs to be fixed to prevent this sort of disaster?

Thanks in advance!

----------------------------

I had a perfectly good system:

TrueNAS SCALE 23.10.1

deadpool (loved the movie)

DATA stripe of 5 mirror VDEVs (10 x 6TB SATA HDD drives)

LOG VDEV Optane SSD (110GB)

That was all working fine and dandy.

Then I got a message that one of the mirror members had an unrecoverable checksum error.

(offending drive is boldfaced)

So, I did the following:

- checked the SMART log for that drive - no error

- ran a scrub on the pool (~10 hours) - no errors

- ran both a SMART SHORT and SMART LONG self-test - no errors

- documentation suggests I run "zpool clear"

- THEN I poked the EXPAND button on the Storage dashboard..... BIG MISTAKE

Now it appears that the LOG device has been appended as another DATA vdev!

BUT the SSD had previously been successfully assigned as a LOG on the pool!

Now the system appears to be confused as to whether the SSD is still the LOG device or not:

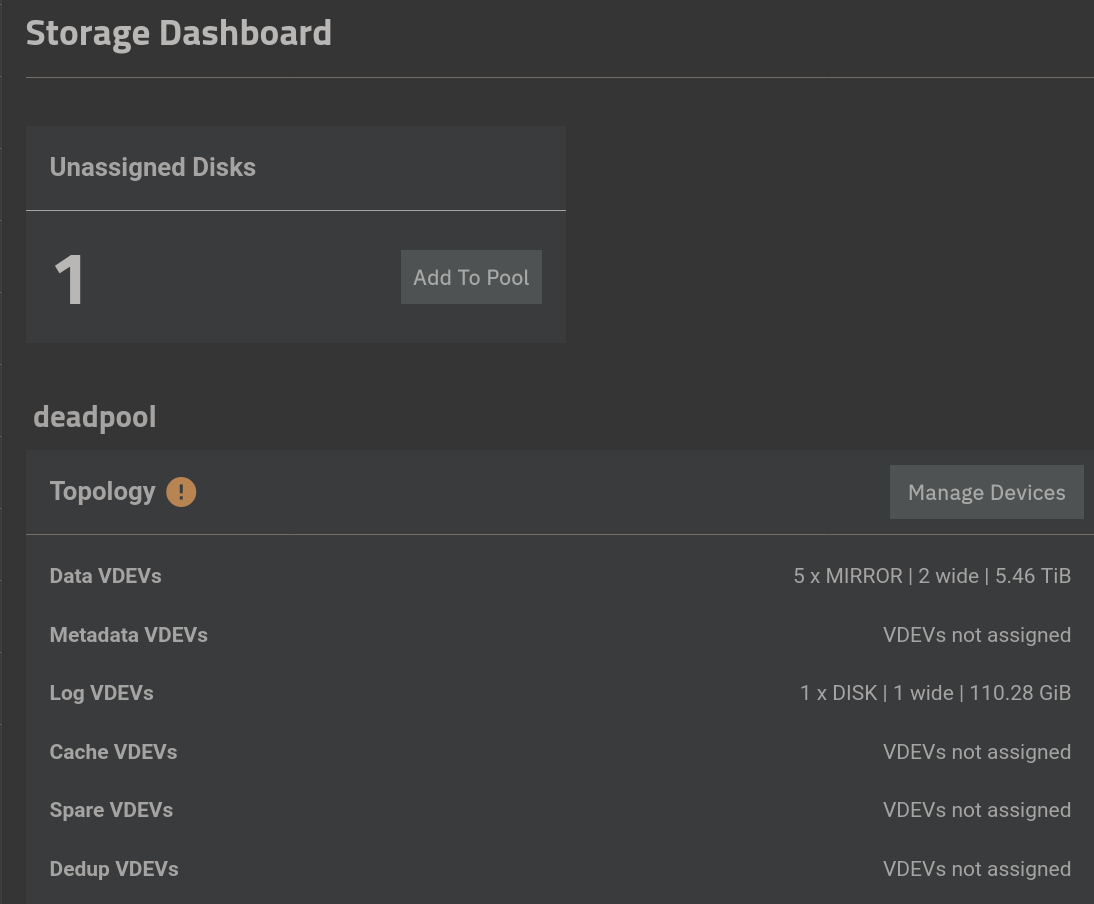

Instead of showing no Unassigned Disks, I get this:

So I rebooted the server. Now my real problems begin:

Running zpool status gives me this:

The way I interpret this is that the LOG device is not available, because it is now a DATA vdev, correct? So am I up the creek?

----------------------

Good reader: long story short, in trying to fix a simple issue, I seem to have created a bigger one.

Help me answer this question:

A) Can I fix the problem described below, or,

B) Do I need to back up my pool, destroy it, rebuild it, and restore my data?

Extra credit: Do you think that the UI needs to be fixed to prevent this sort of disaster?

Thanks in advance!

----------------------------

I had a perfectly good system:

- TrueNAS SCALE 23.10.1

- Pool: deadpool (loved the movie)

- DATA: stripe of 5 mirror vdevs (10 x 6 TB SATA HDDs)

- LOG: one Optane SSD (110 GB)

That was all working fine and dandy.

Then I got a message that one of the mirror members had an unrecoverable checksum error.

(the offending drive is c6702ffc-c408-11eb-a335-a8a15937dbc6, the one with a 1 in the CKSUM column)

```
# zpool status deadpool
  pool: deadpool
 state: ONLINE
status: One or more devices has experienced an unrecoverable error. An
        attempt was made to correct the error. Applications are unaffected.
action: Determine if the device needs to be replaced, and clear the errors
        using 'zpool clear' or replace the device with 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
  scan: scrub repaired 0B in 09:51:23 with 0 errors on Sat Feb 10 03:51:27 2024
config:

        NAME                                       STATE     READ WRITE CKSUM
        deadpool                                   ONLINE       0     0     0
          mirror-0                                 ONLINE       0     0     0
            5ca8ef8d-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            c6702ffc-c408-11eb-a335-a8a15937dbc6   ONLINE       0     0     1
          mirror-1                                 ONLINE       0     0     0
            63dc06fb-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            f0fd5e07-c69c-11eb-aea5-a8a15937dbc6   ONLINE       0     0     0
          mirror-2                                 ONLINE       0     0     0
            657d1fa3-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            6c234139-c40b-11eb-a335-a8a15937dbc6   ONLINE       0     0     0
          mirror-3                                 ONLINE       0     0     0
            391b0432-dd76-11eb-950d-a8a15937dbc6   ONLINE       0     0     0
            393b4155-dd76-11eb-950d-a8a15937dbc6   ONLINE       0     0     0
          mirror-4                                 ONLINE       0     0     0
            761be833-daec-4c39-8321-65ee9e8568b6   ONLINE       0     0     0
            448ca77e-8020-41a3-a69e-11b8f09562cb   ONLINE       0     0     0
        logs
          ecb518e7-03e5-44fb-8bcc-8e1e1b1dbc29     ONLINE       0     0     0

errors: No known data errors
```

So, I did the following:

- checked the SMART log for that drive: no errors

- ran a scrub on the pool (~10 hours): no errors

- ran both a SMART short and a SMART long self-test: no errors

- the documentation suggests I run `zpool clear`

- THEN I poked the EXPAND button on the Storage dashboard... BIG MISTAKE
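For reference, the checks in the list above (everything before the EXPAND click) can be scripted roughly like this. It is a sketch under assumptions, not the exact commands used here: `/dev/sdX` is a placeholder for whichever device node carries partuuid c6702ffc-c408-11eb-a335-a8a15937dbc6, `zpool wait` needs OpenZFS 2.0 or later, the self-test polling relies on the wording `smartctl -c` prints for ATA drives, and `run`, `wait_selftest` and `check_suspect_drive` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch of the pre-EXPAND checks for pool "deadpool".
# DISK is a placeholder: find the /dev node behind partuuid
# c6702ffc-c408-11eb-a335-a8a15937dbc6 first (lsblk -o NAME,PARTUUID shows it).
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
POOL="${POOL:-deadpool}"
DISK="${DISK:-/dev/sdX}"   # hypothetical device node

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Wait until the drive no longer reports a running self-test.
# Assumes the ATA wording "Self-test routine in progress" in smartctl -c output.
wait_selftest() {
  [ "${DRY_RUN:-1}" = 1 ] && return 0
  sleep 5   # give the drive a moment to register the new test
  while smartctl -c "$DISK" | grep -q "Self-test routine in progress"; do
    sleep 60
  done
}

check_suspect_drive() {
  run smartctl -a "$DISK"           # SMART attributes and error log
  run zpool scrub "$POOL"           # start a scrub of the whole pool
  run zpool wait -t scrub "$POOL"   # block until the scrub finishes
  run smartctl -t short "$DISK"     # short self-test runs inside the drive
  wait_selftest
  run smartctl -t long "$DISK"      # long self-test can take many hours
  wait_selftest
  run zpool clear "$POOL"           # reset the pool's error counters
}
```

Run `check_suspect_drive` to see the command sequence, then `DRY_RUN=0 DISK=/dev/<real-node> check_suspect_drive` to execute it. The two self-tests are started one after the other because a drive normally runs only one self-test at a time.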

Now it appears that the LOG device has been appended as another DATA vdev!

BUT the SSD had previously been successfully assigned as a LOG on the pool!

Now the system appears to be confused as to whether the SSD is still the LOG device or not:

Instead of showing no Unassigned Disks, I get this:

So I rebooted the server. Now my real problems begin:

Running zpool status gives me this:

```
# zpool status
  pool: deadpool
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid. Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
  scan: scrub repaired 0B in 09:51:23 with 0 errors on Sat Feb 10 03:51:27 2024
config:

        NAME                                       STATE     READ WRITE CKSUM
        deadpool                                   DEGRADED     0     0     0
          mirror-0                                 ONLINE       0     0     0
            5ca8ef8d-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            c6702ffc-c408-11eb-a335-a8a15937dbc6   ONLINE       0     0     0
          mirror-1                                 ONLINE       0     0     0
            63dc06fb-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            f0fd5e07-c69c-11eb-aea5-a8a15937dbc6   ONLINE       0     0     0
          mirror-2                                 ONLINE       0     0     0
            657d1fa3-a70a-11eb-aac5-a8a15937dbc6   ONLINE       0     0     0
            6c234139-c40b-11eb-a335-a8a15937dbc6   ONLINE       0     0     0
          mirror-3                                 ONLINE       0     0     0
            391b0432-dd76-11eb-950d-a8a15937dbc6   ONLINE       0     0     0
            393b4155-dd76-11eb-950d-a8a15937dbc6   ONLINE       0     0     0
          mirror-4                                 ONLINE       0     0     0
            761be833-daec-4c39-8321-65ee9e8568b6   ONLINE       0     0     0
            448ca77e-8020-41a3-a69e-11b8f09562cb   ONLINE       0     0     0
        logs
          15381800400422709162                     UNAVAIL      0     0     0  was /dev/disk/by-partuuid/ecb518e7-03e5-44fb-8bcc-8e1e1b1dbc29

errors: No known data errors
```

The way I interpret this is that the LOG device is not available, because it is now a DATA vdev, correct? So am I up the creek?
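One quick way to see exactly which rows either `zpool status` output is flagging is to filter the config table for entries that are not ONLINE or have a non-zero READ/WRITE/CKSUM counter. This is a minimal sketch assuming bash, awk, and the usual five-column table; `zpool_problems` is a made-up name for illustration.

```shell
#!/usr/bin/env bash
# zpool_problems: read `zpool status` text on stdin and print every row of the
# config table whose STATE is not ONLINE or whose READ/WRITE/CKSUM is non-zero.
# Assumes the standard NAME STATE READ WRITE CKSUM layout.
zpool_problems() {
  awk '
    /^[[:space:]]*config:/ { in_cfg = 1; next }   # table starts after "config:"
    /^[[:space:]]*errors:/ { in_cfg = 0 }          # and ends at "errors:"
    in_cfg && NF >= 5 && $1 != "NAME" {
      # A counter such as "1.2K" is not numeric, so it compares unequal to 0
      # and is reported too.
      if ($2 != "ONLINE" || $3 != 0 || $4 != 0 || $5 != 0)
        print $1, $2, $3, $4, $5
    }
  '
}
```

Usage: `zpool status deadpool | zpool_problems`. On the first output above it prints only the c6702ffc-c408-11eb-a335-a8a15937dbc6 row (CKSUM 1); on the second it prints the DEGRADED pool line and the UNAVAIL `15381800400422709162` log entry, with no new rows among the data mirrors.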