Hi,

I have 2 TrueNAS SCALE boxes, both running 23.10.2.

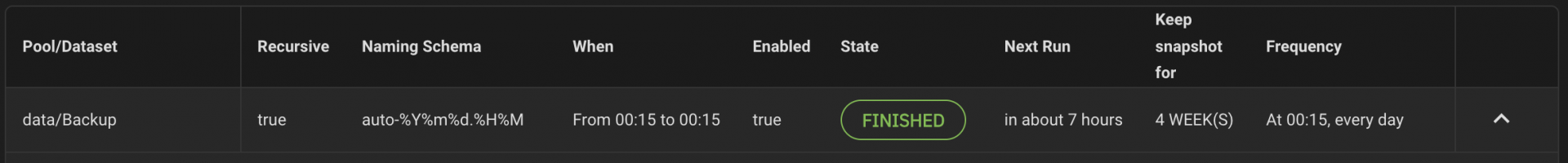

On the main one (call it the source), I have a periodic snapshot task like this:

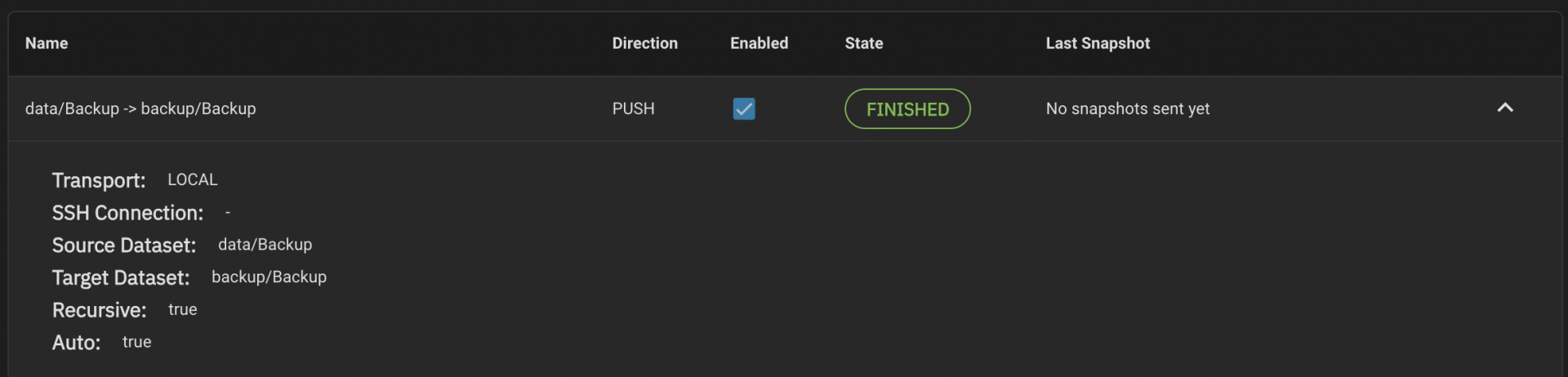

I want to replicate twice: once locally, to another pool on the same machine, and once to the remote NAS box.

The local replication task looks like this:

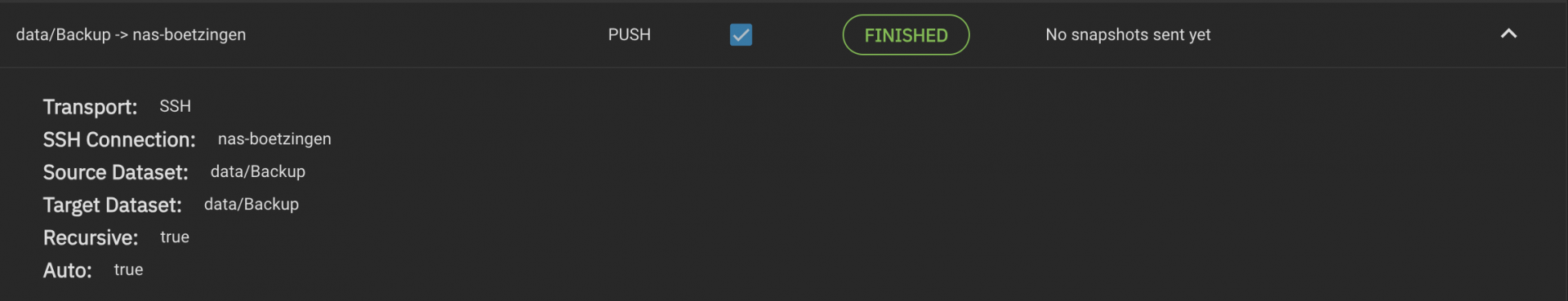

and the replication task to send it to the remote machine is like

The problem is that I see strange zettarepl error messages when the replication task runs:

```
[2024/03/21 17:38:18] INFO [MainThread] [zettarepl.scheduler.clock] Interrupted
[2024/03/21 17:38:18] INFO [MainThread] [zettarepl.zettarepl] Scheduled tasks: [<Replication Task 'task_1'>]
[2024/03/21 17:38:18] INFO [replication_task__task_1] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots: []
[2024/03/21 17:38:18] INFO [replication_task__task_1] [zettarepl.replication.run] No snapshots to send for replication task 'task_1' on dataset 'data/Backup'
[2024/03/21 17:38:19] INFO [Thread-1573] [zettarepl.paramiko.retention] Connected (version 2.0, client OpenSSH_9.2p1)
[2024/03/21 17:38:20] INFO [Thread-1573] [zettarepl.paramiko.retention] Authentication (publickey) successful!
[2024/03/21 17:38:20] ERROR [retention] [zettarepl.replication.task.snapshot_owner] Failed to list snapshots with <Shell(<SSH Transport(root@10.200.3.1)>)>: ExecException(1, "cannot open 'backup/Backup': dataset does not exist\n"). Assuming remote has no snapshots
[2024/03/21 17:38:21] INFO [retention] [zettarepl.zettarepl] Retention destroying local snapshots: []
[2024/03/21 17:38:21] INFO [retention] [zettarepl.zettarepl] Retention on <LocalTransport()> destroying snapshots: []
[2024/03/21 17:38:23] INFO [retention] [zettarepl.zettarepl] Retention on <SSH Transport(root@10.200.3.1)> destroying snapshots: []
```

The `backup` pool exists only on the source TrueNAS server.

It does not exist on the remote TrueNAS machine, and the replication task for that machine is set to send to the `data` pool (which does exist there). So why does it even try to access the `backup` pool on the remote server?

My remote machine eventually got full because snapshots were never deleted there, and that's how I noticed there was a problem with the retention on the remote server.

Note that this had been working for years. I only recently upgraded the source machine from TrueNAS CORE to TrueNAS SCALE.

Any idea how to fix this?

Thanks