scurrier (Patron, joined Jan 2, 2014, 297 messages)

I'm seeing some behavior from my FreeNAS box that I believe is strange. Basically, writes to my shares are always GBE fast, but reads are only GBE fast if the data is already cached.

Problem description:

- FreeNAS has two shares via smb/cifs, hosted on two different RAIDZ2 arrays:

  - A 6-disk RAIDZ2 of modern Seagate 4TB NAS drives.

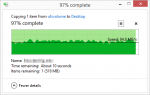

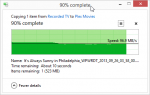

  - A 4-disk RAIDZ2 of oldish Seagate 1.5TB consumer drives.

- Sequential writes to the shares can ALWAYS max out my GBE, hovering around 109-112 MB/s. This is an example 20 gig file transfer, to show you how solid it is. When it finishes, hard drive activity stops immediately. The drives do not have to catch up to the RAM cache or anything. Picture of the write transfer:

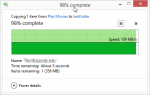

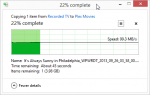

- When I first sequentially read a file from a share, it hovers around 90-100 MB/s. If I cancel the transfer mid-way through and then read the same file again, it will peg around 110 MB/s until it gets to the point where I cancelled before. Then it will slow back down to the 90-100 MB/s speeds I was originally getting. You can see this effect here:

So it seems that without caching, the share's sequential reads are slow and can't max out GBE. Why is it so slow without caching?
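For a sense of where the GBE ceiling actually sits, here is a rough back-of-the-envelope sketch. It assumes a standard 1500-byte MTU (no jumbo frames), IPv4 and TCP headers without options, and it ignores SMB protocol overhead entirely, so the real ceiling for a share is somewhat lower. It also assumes the Windows copy dialog reports binary megabytes (MiB/s), which is my understanding but is not confirmed anywhere in this post. The function names are made up for illustration.

```python
# Rough TCP payload ceiling on gigabit Ethernet.
# Assumptions: 1500-byte MTU, IPv4 + TCP with no options, no SMB overhead counted.

LINE_RATE_BITS_PER_S = 1_000_000_000   # 1 Gbit/s
MTU = 1500                              # bytes of IP packet per frame
ETH_OVERHEAD = 8 + 14 + 4 + 12          # preamble+SFD, Ethernet header, FCS, inter-frame gap
IP_TCP_HEADERS = 20 + 20                # IPv4 header + TCP header


def gbe_payload_ceiling_mb_s(mtu=MTU):
    """Best-case TCP payload rate in decimal MB/s (10**6 bytes per second)."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_OVERHEAD
    return LINE_RATE_BITS_PER_S / 8 * payload / on_wire / 1e6


def to_mib_s(mb_s):
    """Convert decimal MB/s to binary MiB/s."""
    return mb_s * 1e6 / 2**20


ceiling = to_mib_s(gbe_payload_ceiling_mb_s())
print(f"ceiling: {ceiling:.1f} MiB/s")

# Observed figures from this post, treated as MiB/s (an assumption).
for label, observed in [("write, low end", 109), ("write, high end", 112),
                        ("uncached read, low end", 90), ("uncached read, high end", 100)]:
    print(f"{label}: {observed} is {observed / ceiling:.0%} of the ceiling")
```

Under those assumptions the writes and cached reads sit within a few percent of the wire limit, while the uncached reads leave roughly 10-20% unused.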

More information:

I have extensively tested the read speeds of these arrays and I know that they are much faster than GBE is. This is all sequential, no excuses.

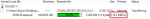
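For anyone who wants to reproduce a sequential read measurement, this is a minimal sketch of one way to time it. It is not necessarily the method used for the results above, and the path in the usage comment is hypothetical. Note that a second run over the same file may be served from cache (ARC on the FreeNAS side, or the client's own file cache), so only the first read of a file that is larger than RAM says much about the disks.

```python
import time

CHUNK_SIZE = 1024 * 1024  # read in 1 MiB blocks to keep the access pattern sequential


def sequential_read_mb_s(path, chunk_size=CHUNK_SIZE):
    """Read the whole file front to back and return throughput in decimal MB/s."""
    total_bytes = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; OS and ZFS caches still apply.
    with open(path, "rb", buffering=0) as f:
        while True:
            block = f.read(chunk_size)
            if not block:
                break
            total_bytes += len(block)
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        return float("inf")
    return total_bytes / elapsed / 1e6


# Example usage (hypothetical path, adjust to a real large file):
# print(sequential_read_mb_s("/mnt/tank/share/bigfile.bin"))
```

Running it once locally on the NAS and once from a client against the mounted share gives two numbers to compare: the array's own read speed and what actually arrives over SMB.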

FTP can max out the connection:

I have no sysctls or tunables. atime is turned off on this dataset.

Here's my FreeNAS hardware:

Intel i3-4130

Corsair 2x8GB RAM (CT2KIT102472BD160B)

Supermicro X10SL7-F (onboard IT-flashed LSI 2308 for 8 SAS / onboard Intel for 6 SATA)

Supermicro 933T-R760B, 3U 15-bay hotswap case

My network is all GBE, cables are at least CAT 5E, and there is only one switch between these machines. I am transferring to/from a Windows 8 machine's SSD using a Marvell Yukon NIC, but the phenomenon is the same on other machines in my house.

I could definitely live with these speeds but I just hate a nagging problem that I don't understand. Appreciate anyone's input as to what else I should look into.