After only 20 minutes of system uptime, my CPUs are pegged at 100%. I believe it has something to do with the RAID card swallowing the SMART data.

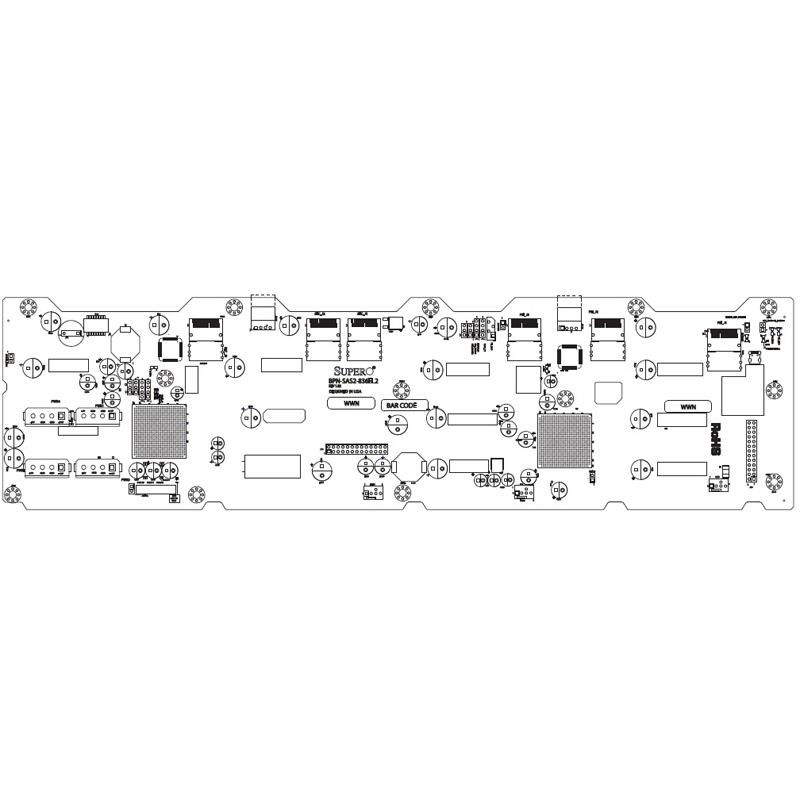

This error repeats in the terminal over and over again, about every five minutes. I'm assuming something is getting hung and the process keeps running without terminating, but beyond that I have no idea.

Code:

    May 28 15:03:33 truenas 1 2021-05-28T15:03:33.399886-04:00 truenas.home.local collectd 1674 - - Traceback (most recent call last):
      File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 63, in read
        temperatures = c.call('disk.temperatures', self.disks, self.powermode)
      File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 63, in read
        temperatures = c.call('disk.temperatures', self.disks, self.powermode)
      File "/usr/local/lib/python3.8/site-packages/middlewared/client/client.py", line 429, in call
        raise ClientException(c.error, c.errno, c.trace, c.extra)
    middlewared.client.client.ClientException: Lock is not acquired.
    May 28 15:08:33 truenas 1 2021-05-28T15:08:33.422123-04:00 truenas.home.local collectd 1674 - - Traceback (most recent call last):
      File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 63, in read
        temperatures = c.call('disk.temperatures', self.disks, self.powermode)
      File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 63, in read
        temperatures = c.call('disk.temperatures', self.disks, self.powermode)
      File "/usr/local/lib/python3.8/site-packages/middlewared/client/client.py", line 429, in call
        raise ClientException(c.error, c.errno, c.trace, c.extra)
    middlewared.client.client.ClientException: Lock is not acquired.
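
To pin down how often the traceback actually fires, the timestamps can be pulled out of the log and subtracted. This is only a minimal sketch: the function name `traceback_intervals` and the regular expression are my own, and it assumes every entry carries an ISO 8601 timestamp like the two entries above.

```python
import re
from datetime import datetime

# Matches the ISO 8601 timestamp that precedes the hostname in each entry.
TS_RE = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{6}[+-]\d{2}:\d{2}")


def traceback_intervals(log_text):
    """Return the seconds between consecutive collectd traceback entries."""
    stamps = []
    for line in log_text.splitlines():
        # Only the first line of each traceback carries a timestamp.
        if "collectd" in line and "Traceback" in line:
            match = TS_RE.search(line)
            if match:
                stamps.append(datetime.fromisoformat(match.group(0)))
    return [(b - a).total_seconds() for a, b in zip(stamps, stamps[1:])]


# The two traceback header lines from the log above.
sample = (
    "May 28 15:03:33 truenas 1 2021-05-28T15:03:33.399886-04:00 "
    "truenas.home.local collectd 1674 - - Traceback (most recent call last):\n"
    "May 28 15:08:33 truenas 1 2021-05-28T15:08:33.422123-04:00 "
    "truenas.home.local collectd 1674 - - Traceback (most recent call last):\n"
)
intervals = traceback_intervals(sample)
print(intervals)
```

For the two entries above the gap comes out at roughly 300 seconds, which suggests the temperature read is retried on a five-minute cycle.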

The disks have never once reported temperatures. I've got a mix of old and new drives in there (the new ones are IronWolf NAS Pro 4TB drives).

Simple top output:

Code:

    last pid: 2942; load averages: 13.14, 11.87, 9.10 up 0+00:46:56 15:22:21
    61 processes: 14 running, 47 sleeping
    CPU: 14.3% user, 0.0% nice, 85.7% system, 0.0% interrupt, 0.0% idle
    Mem: 854M Active, 675M Inact, 33M Laundry, 28G Wired, 1528M Free
    ARC: 26G Total, 69M MFU, 25G MRU, 120M Anon, 67M Header, 207M Other
         25G Compressed, 30G Uncompressed, 1.24:1 Ratio
    Swap: 6144M Total, 6144M Free
      PID USERNAME THR PRI NICE   SIZE    RES STATE   C   TIME    WCPU COMMAND
     2815 root       1  81    0    15M  5224K RUN     1   3:01  33.15% smartctl
     2892 root       1  81    0    15M  5224K RUN     1   1:08  32.20% smartctl
     2891 root       1  81    0    15M  5232K RUN     2   1:08  30.88% smartctl
     2750 root       1  81    0    15M  5232K RUN     1   5:27  30.80% smartctl
     2816 root       1  81    0    15M  5232K CPU3    3   3:00  30.69% smartctl
     2692 root       1  81    0    15M  5232K RUN     2   8:50  29.48% smartctl
     2626 root       1  81    0    15M  5224K RUN     2  12:55  29.27% smartctl
     2880 root       1  81    0    15M  5232K RUN     2   1:13  29.17% smartctl
     2804 root       1  81    0    15M  5224K RUN     2   3:07  29.05% smartctl
     2679 root       1  81    0    15M  5224K CPU2    2   9:01  28.83% smartctl
     2624 root       1  81    0    15M  5232K RUN     1  12:57  28.31% smartctl
     2749 root       1  81    0    15M  5224K CPU1    1   5:27  28.14% smartctl
      273 root      45  52    0   625M   498M kqread  1   1:41  15.11% python3.8
     2048 root       1  79    0   189M   168M RUN     0  17:06  12.37% smbd
      287 root       3  20    0   199M   160M usem    0   0:07   1.16% python3.8
     1956 root       1  20    0   170M   151M kqread  0   0:06   0.14% smbd
     2932 root       1  20    0    13M  3984K CPU0    0   0:00   0.09% top
     2130 root       1  20    0    11M  2820K pause   2   0:00   0.01% iostat
      256 root       1  20    0    11M  1964K select  3   0:00   0.01% devd
     2504 root       1  20    0    28M    14M select  1   0:00   0.01% sshd
     1649 www        1  20    0    35M  9144K kqread  0   0:00   0.01% nginx
     1552 ntpd       1  20    0    19M  6864K select  3   0:00   0.01% ntpd
     1639 root       8  20    0    36M    16M select  3   0:03   0.00% rrdcached
      259 root       1  20    0    17M  6360K select  2   0:00   0.00% zfsd
     1940 root       1  20    0    88M    70M kqread  1   0:00   0.00% winbindd
     1674 root      11  20    0    85M    44M nanslp  3   0:37   0.00% collectd
      288 root       3  20    0   211M   161M usem    0   0:07   0.00% python3.8
      285 root       3  20    0   199M   160M piperd  2   0:07   0.00% python3.8
      286 root       3  20    0   199M   160M usem    0   0:06   0.00% python3.8
      284 root       3  20    0   199M   160M usem    0   0:06   0.00% python3.8
     1680 avahi      1  20    0    13M  4324K select  3   0:01   0.00% avahi-daemon
      432 root       1  20    0    58M    46M zevent  3   0:01   0.00% python3.8
     1831 root       1  52    0    48M    35M ttyin   0   0:00   0.00% python3.8
     1931 root       1  21    0   172M   153M kqread  3   0:00   0.00% smbd
     1947 daemon     3  20    0    71M    48M kqread  2   0:00   0.00% python3.8

There are 12 smartctl tasks. Is that one for each of the 12 disks in the pool?
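
Rather than counting rows by eye, the process lines from top can be tallied with a few lines of Python. This is a rough sketch, not anything TrueNAS ships: the helper name `summarize_command` is made up for illustration, and it assumes COMMAND is the last column and WCPU the second to last, as in the top output above.

```python
def summarize_command(top_rows, command):
    """Count rows for `command` and add up their WCPU percentages."""
    count = 0
    total_wcpu = 0.0
    for row in top_rows.splitlines():
        fields = row.split()
        # Skip headers and blank lines: a process row ends in "<pct>% <command>".
        if len(fields) >= 2 and fields[-1] == command and fields[-2].endswith("%"):
            count += 1
            total_wcpu += float(fields[-2].rstrip("%"))
    return count, total_wcpu


# A few rows copied from the top output above (three smartctl, one smbd).
sample = """\
2815 root 1 81 0 15M 5224K RUN 1 3:01 33.15% smartctl
2892 root 1 81 0 15M 5224K RUN 1 1:08 32.20% smartctl
2891 root 1 81 0 15M 5232K RUN 2 1:08 30.88% smartctl
2048 root 1 79 0 189M 168M RUN 0 17:06 12.37% smbd
"""
count, total = summarize_command(sample, "smartctl")
print(count, round(total, 2))
```

Feeding it the full listing instead of this excerpt would give the task count to compare against the number of disks in the pool.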

TrueNAS has never been able to fully identify the disks. It picks up the serial number, model number, and size, but none of the SMART data comes through.

If I had to guess, that's the fault of the RAID card, even though it's in JBOD mode. I tried to replace it with a newer card, but the backplane doesn't seem to like my new SAS3 card (LSI SAS9340-8i ServeRAID M1215). Isn't SAS backwards compatible?

CPU: Dual Intel(R) Xeon(R) CPU 5160 @ 3 GHz

Mobo: X7DB3

RAM: 8x2GB

Controller: Areca ARC-1880i in JBOD mode

Backplane: BPN-SAS-836EL1